We're excited to announce the culmination of many high-level productivity features in this release that make ServiceStack vastly more productive for rapidly developing data-driven APIs. The headline is AutoQuery gaining full CRUD support, so it can now declaratively implement Create, Update, Partial Update and Delete APIs.

To maximize their utility, AutoQuery/CRUD Services gain additional declarative powers so that most common operations can continue to be implemented entirely from their declarative Request DTO definitions. These can apply mandatory filters to limit Multitenant & Soft Deleted records, populate internal data models with Audit info & Complex Expressions, Auto Map between different DTO & Data Model properties, support Optimistic Concurrency and easily enable high-end App-level features like full Executable Audit History for all CRUD operations. The Audit History enables EventSourcing-like capabilities, where entity state can be re-created by re-running it.

Meanwhile all Services benefit from the enhanced Fluent Validation capabilities, where all built-in validators can now be applied declaratively, including new support for top-level "Type Validators" which can be used to enforce Type Authorization rules directly on DTOs without implementation dependencies. Declarative validation rules can also be sourced from dynamic sources like an RDBMS, where they're both instantly applied at runtime & cached locally for max performance.

If time constrained, skim the ToC below to get a quick overview of what's in this release and jump directly to features you're interested in:

Table of Contents

- Introducing ServiceStack Studio!

- Instantly Servicify existing Systems!

- autodto - Generate Types for RDBMS Tables

- Introducing SharpData!

- AutoQuery CRUD!

- Declarative Validation

- Executable Audit Log

- AutoGen AutoQuery & Crud Services

- AutoRegister AutoGen AutoQuery Services!

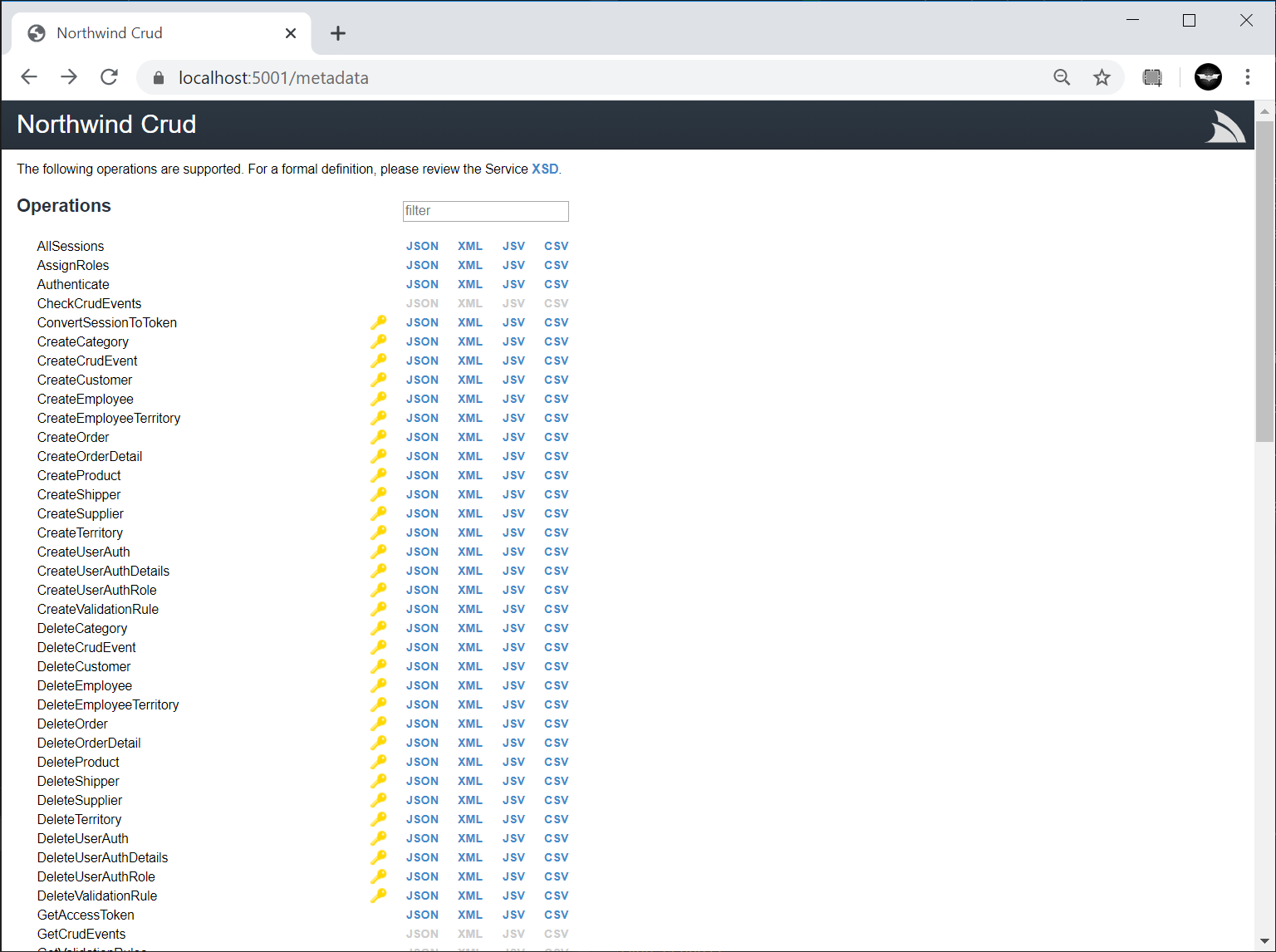

- Instantly Servicify Northwind DB with gRPC

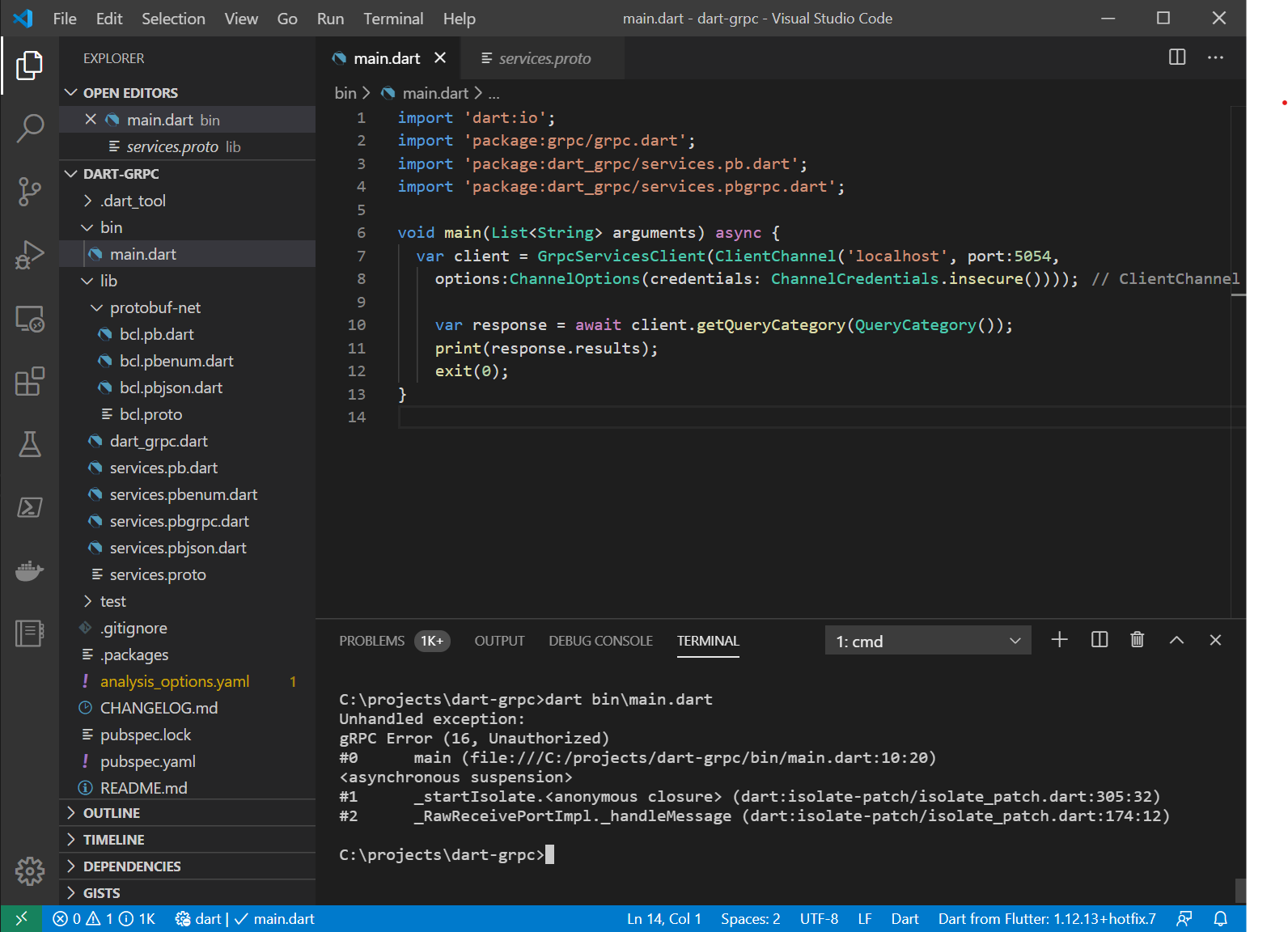

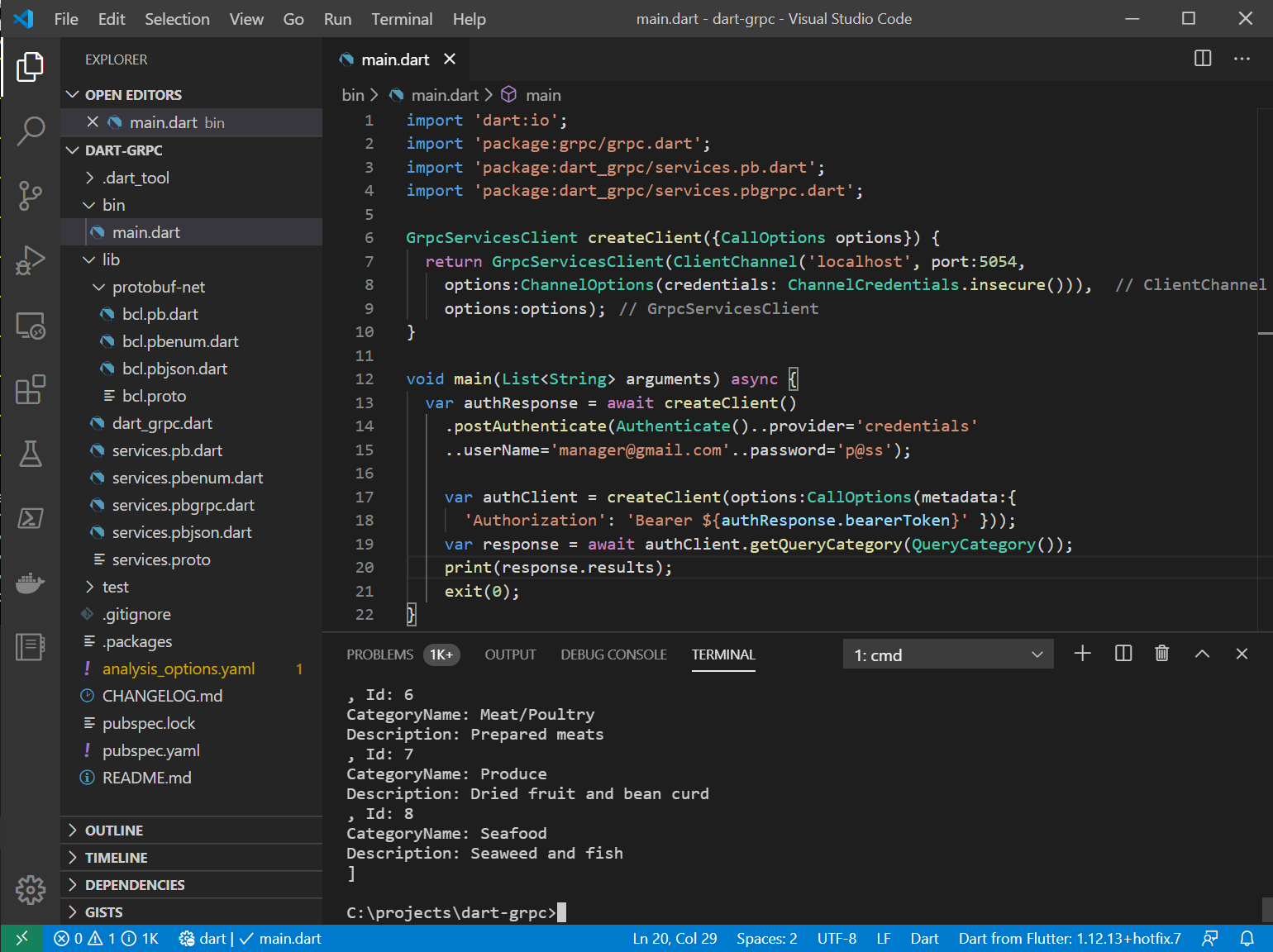

- Create Dart gRPC Console App

- Calling gRPC SSL Services

- AutoGen's AutoRegister Implementation

- CreateCrudServices Instructions

- Customize Code Generation to include App Conventions

- Mixing generated AutoQuery Services & existing code-first Services

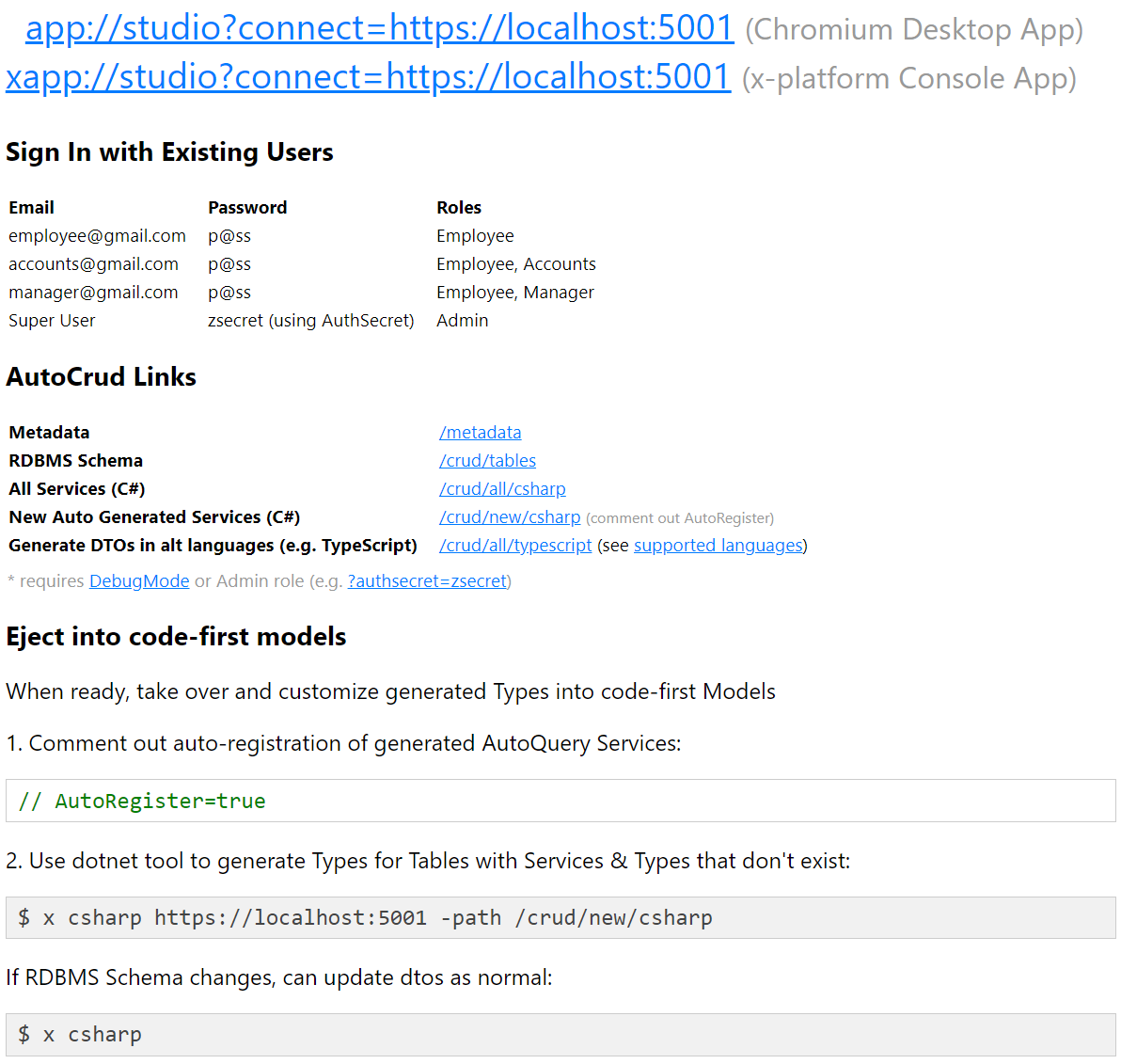

- Trying it out

- Open in ServiceStack Studio

- ServiceStack Studio

- Studio Desktop App vs ServiceStack.Admin

- Frequent out-of-band release cadence

- Light Footprint + Always use latest version

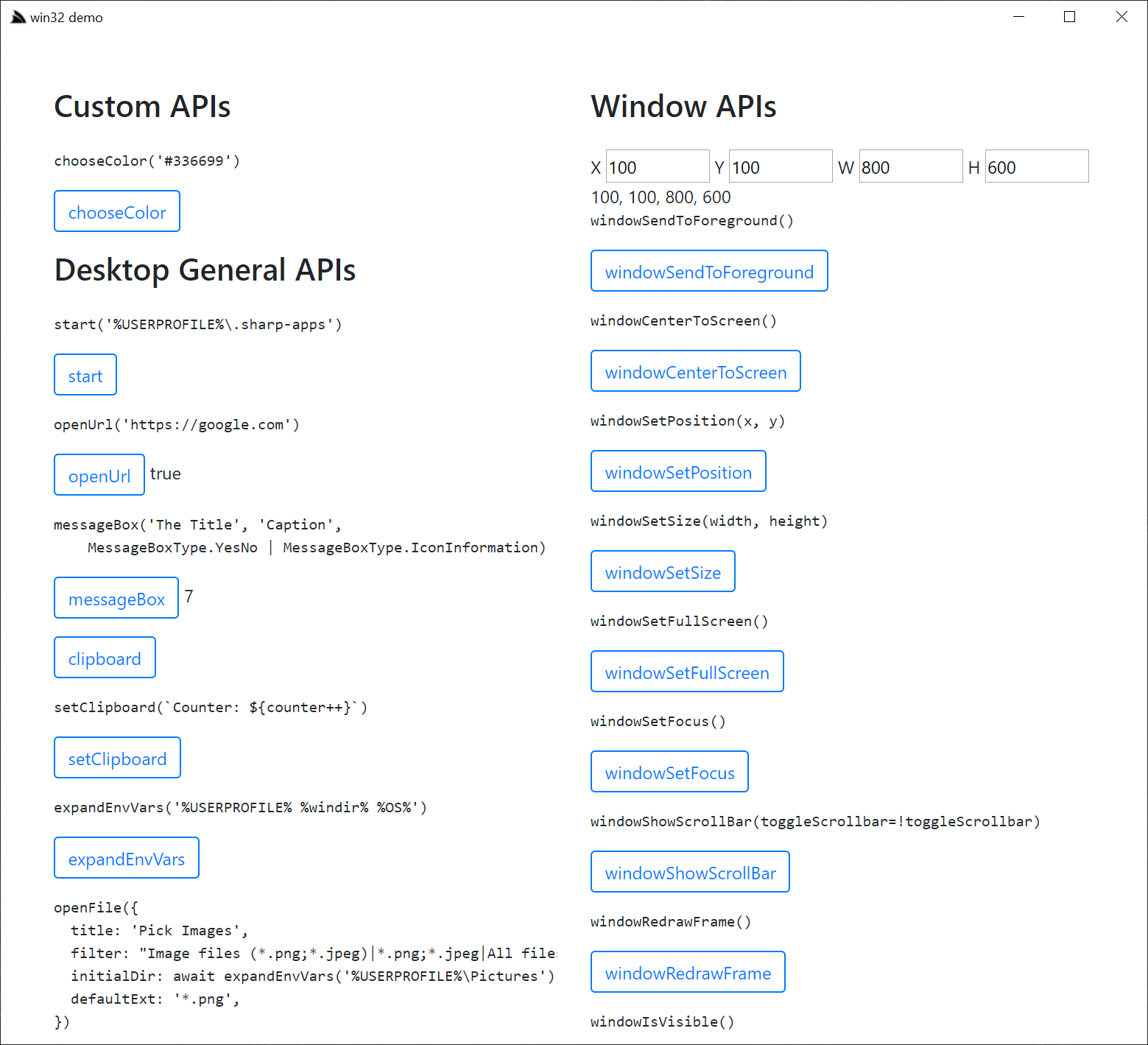

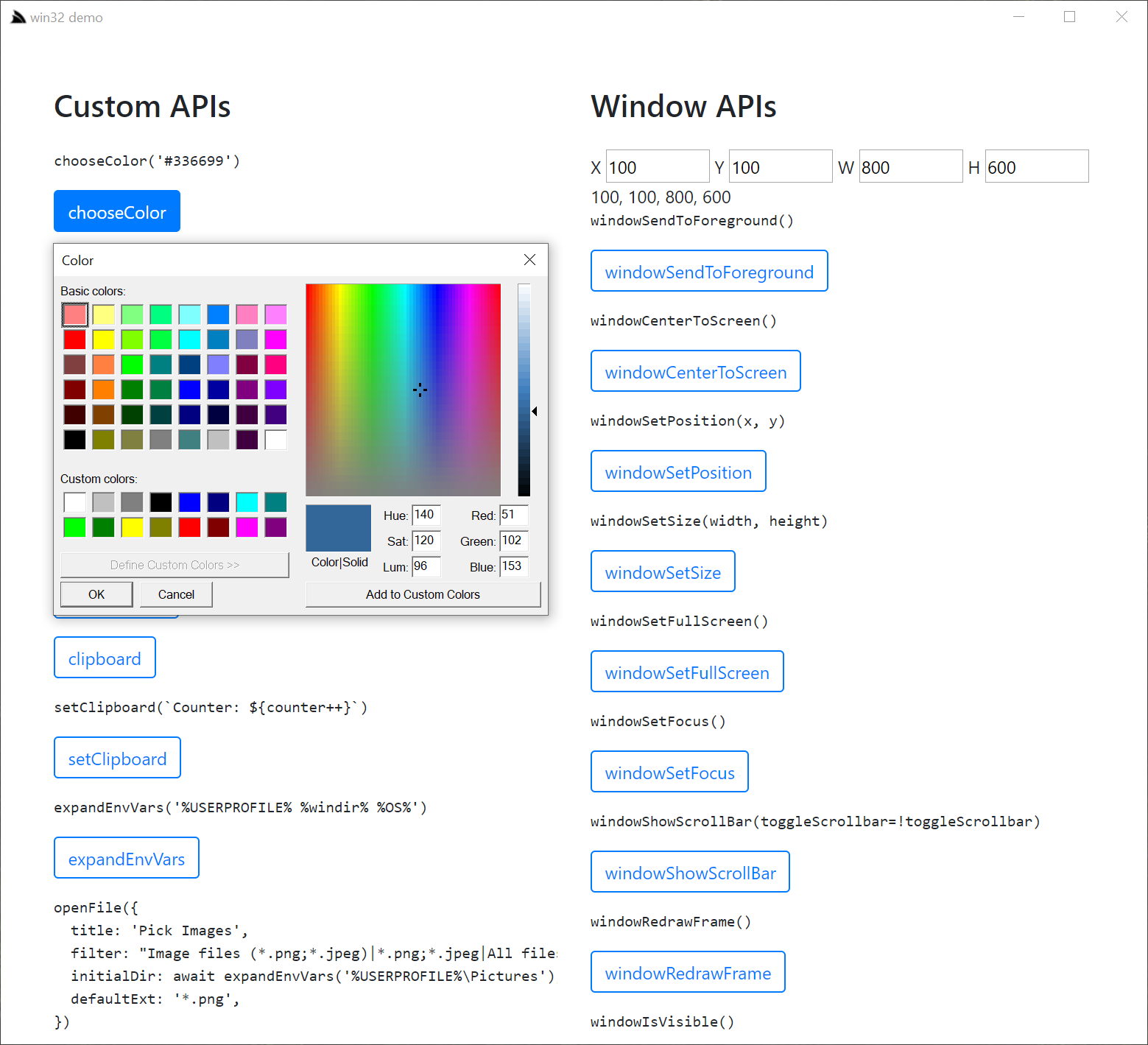

- Desktop Features

- ServiceStack.Desktop

- win32 demo

- Highly productive live-reloading Development experience

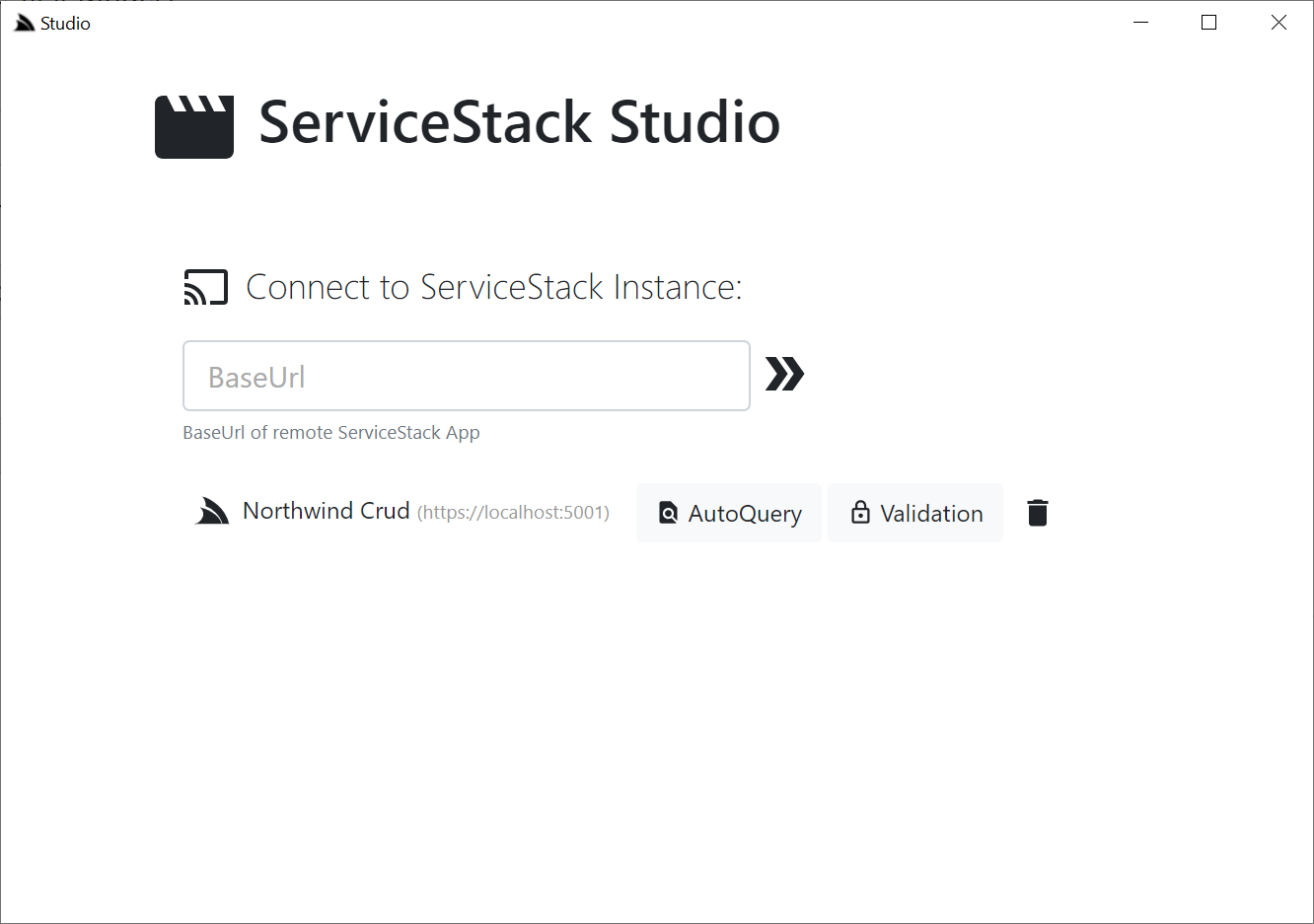

- Starting ServiceStack Studio

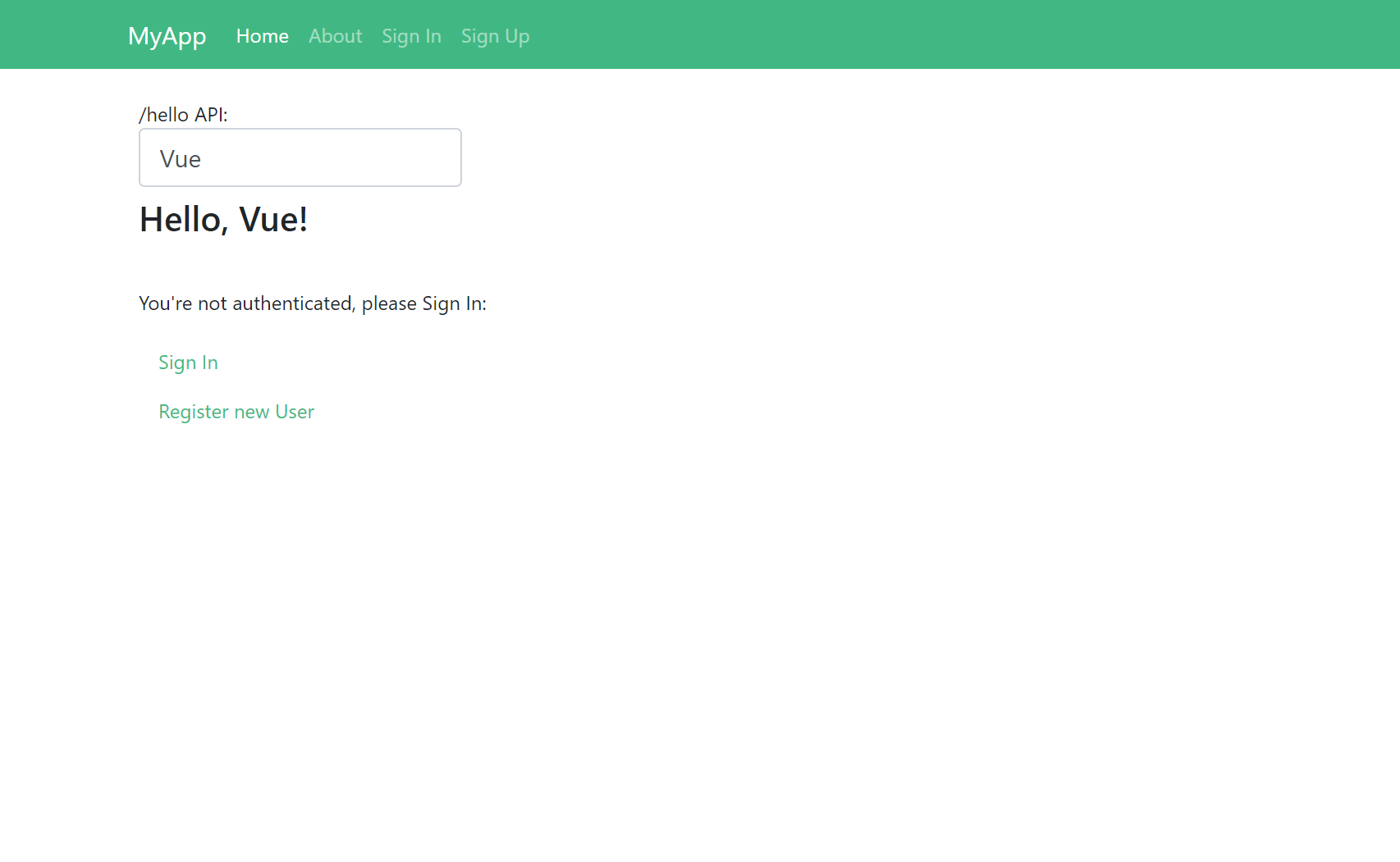

- Home Page

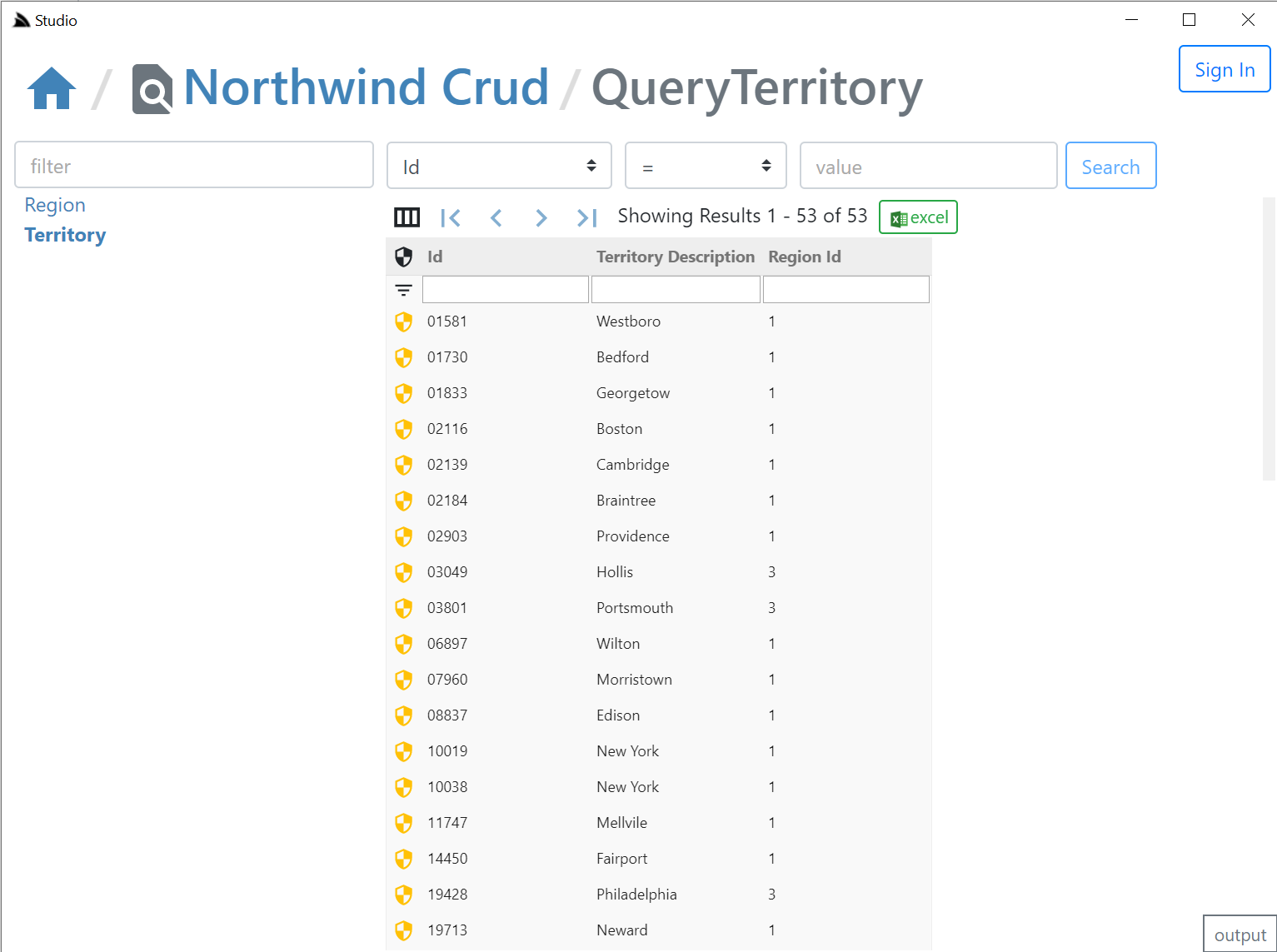

- AutoQuery UI

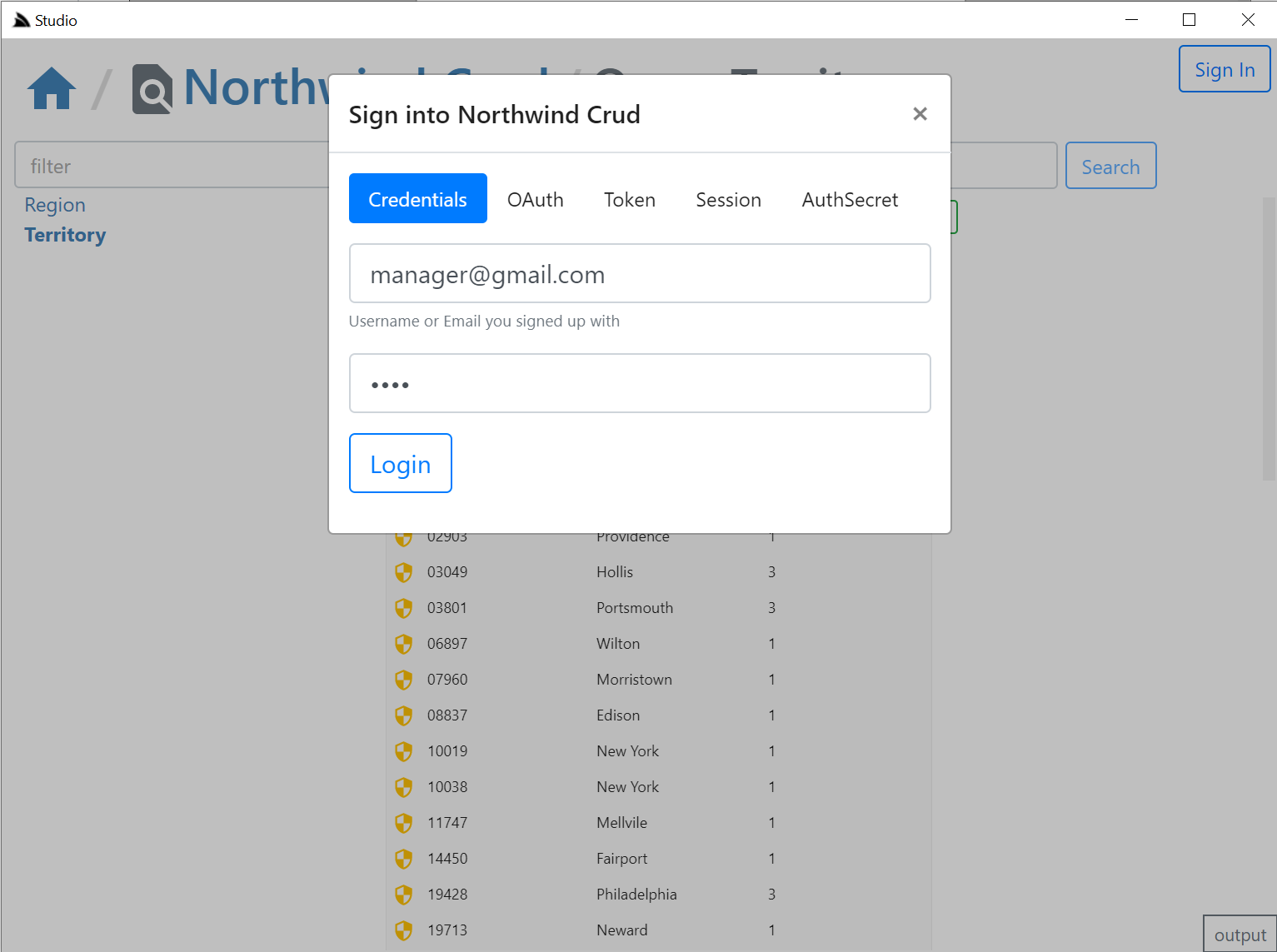

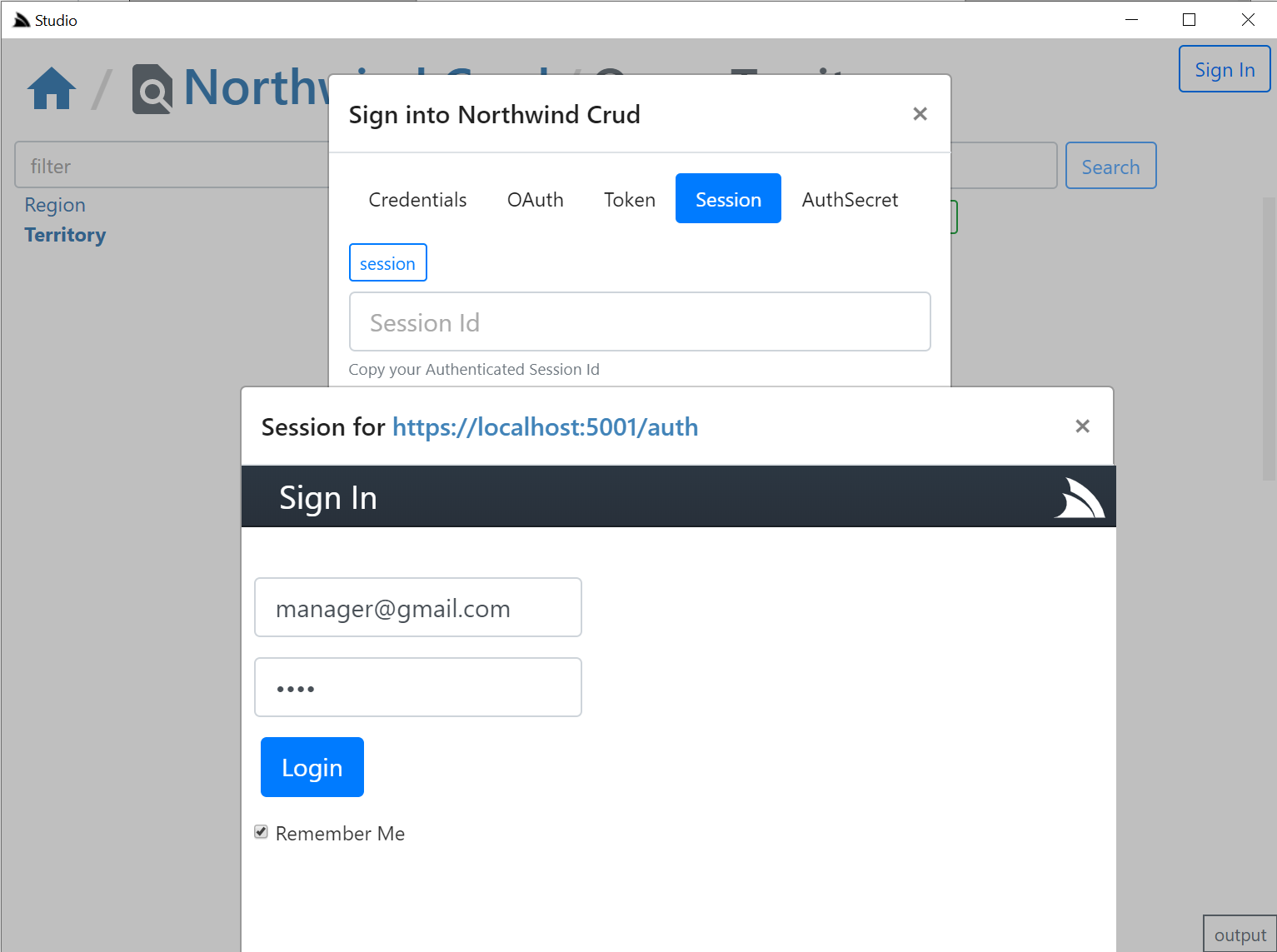

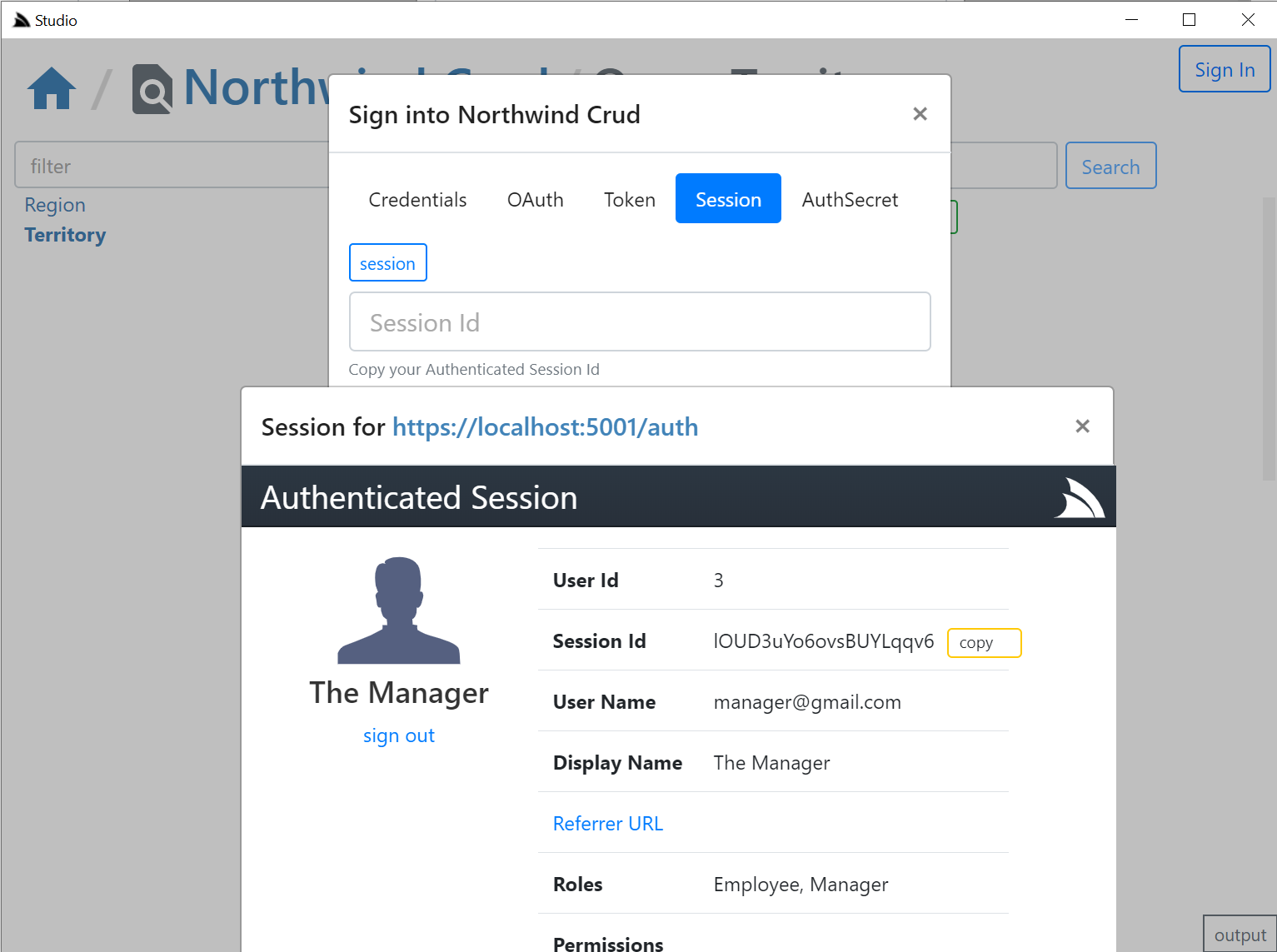

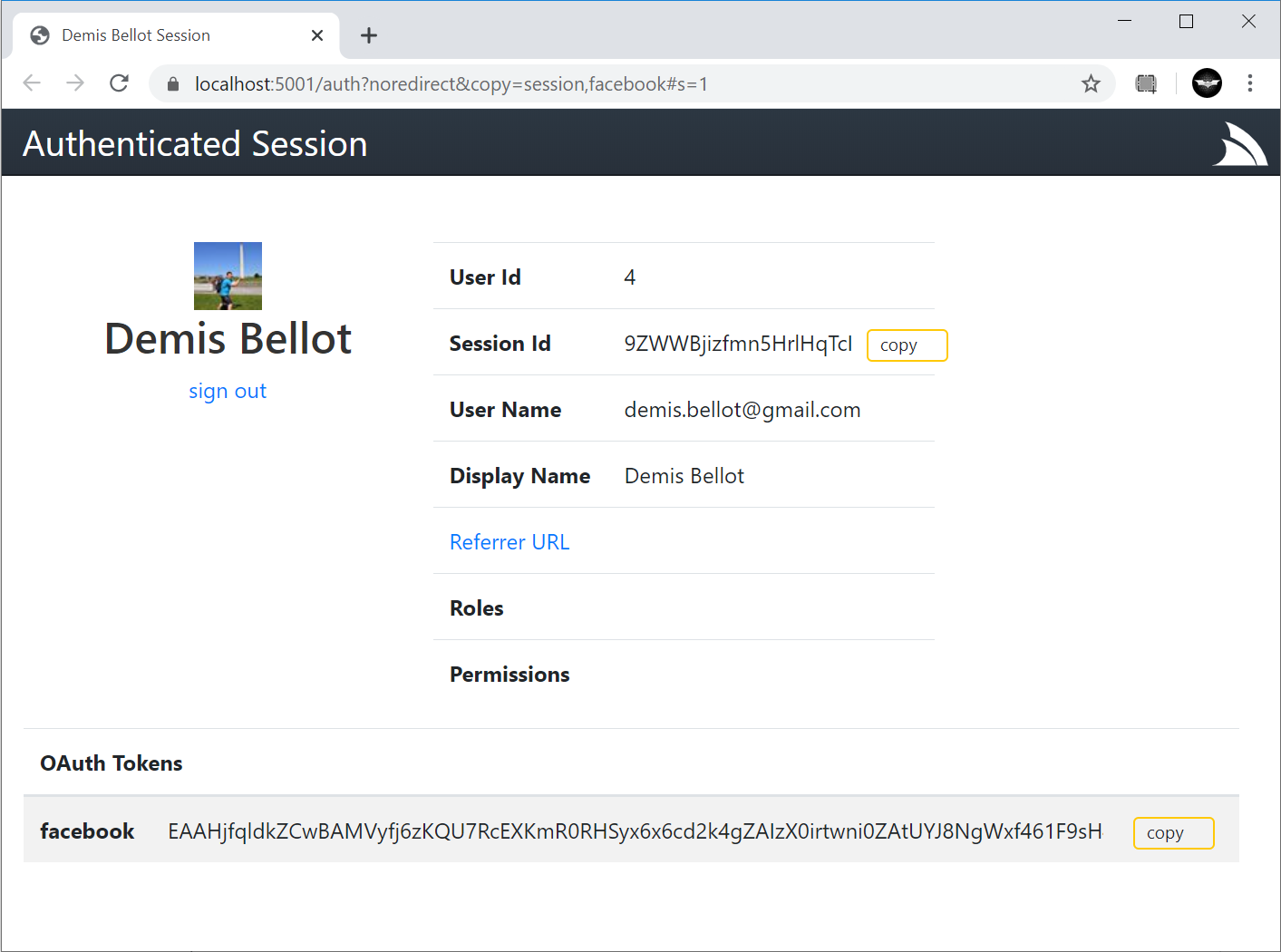

- Integrated Auth Component

- Desktop User State & Preferences

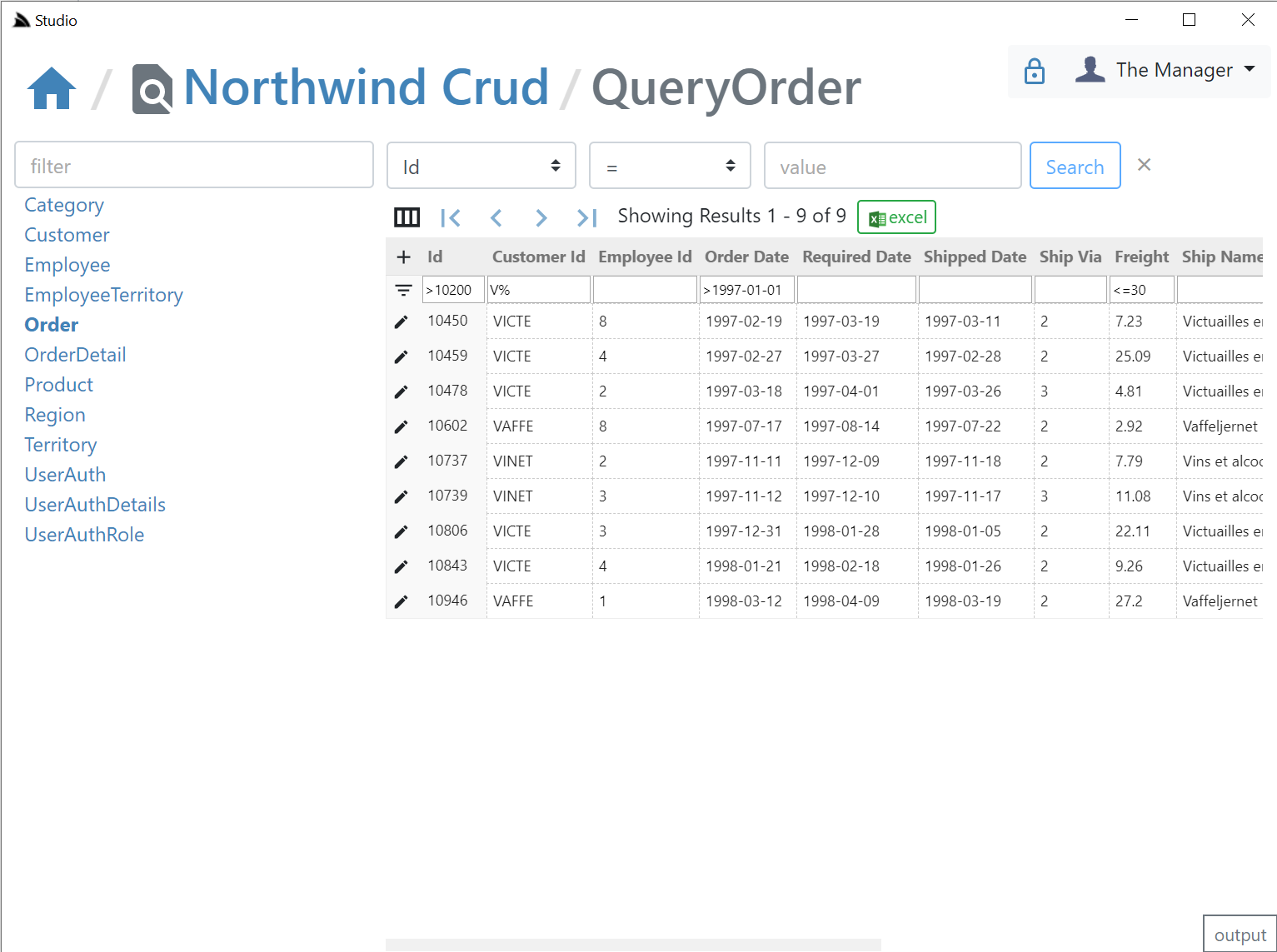

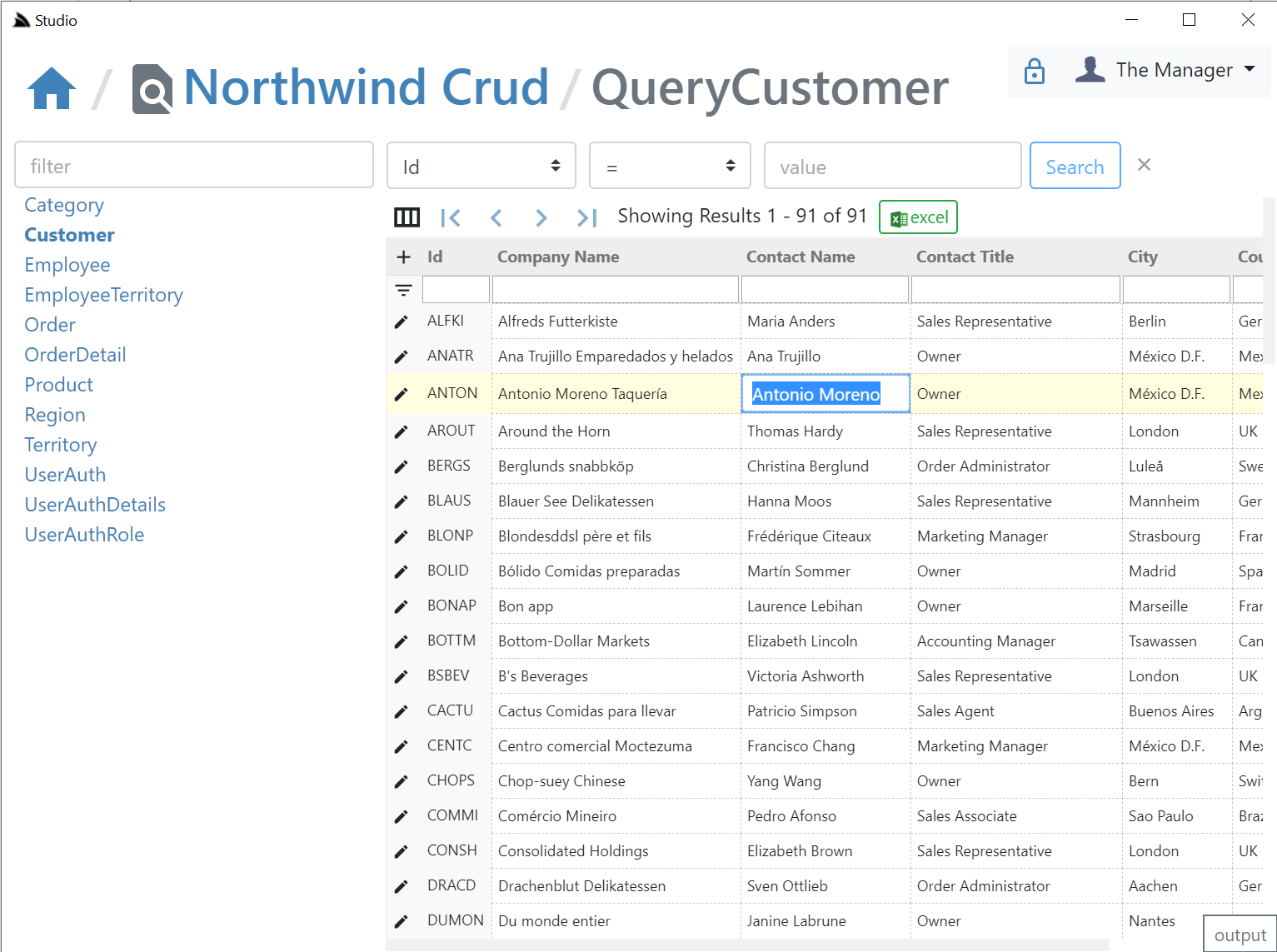

- AutoCrud Querying

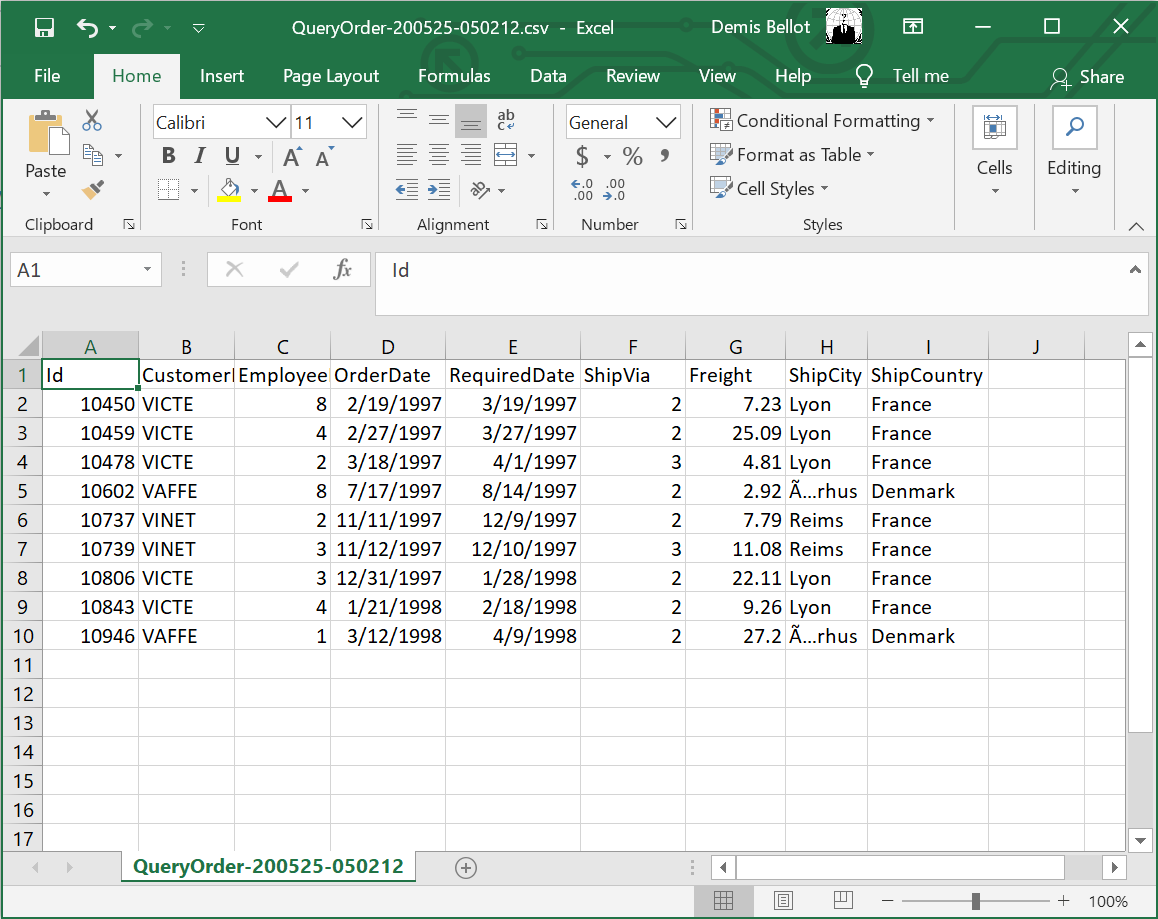

- Export to Excel

- AutoCrud Partial Updates

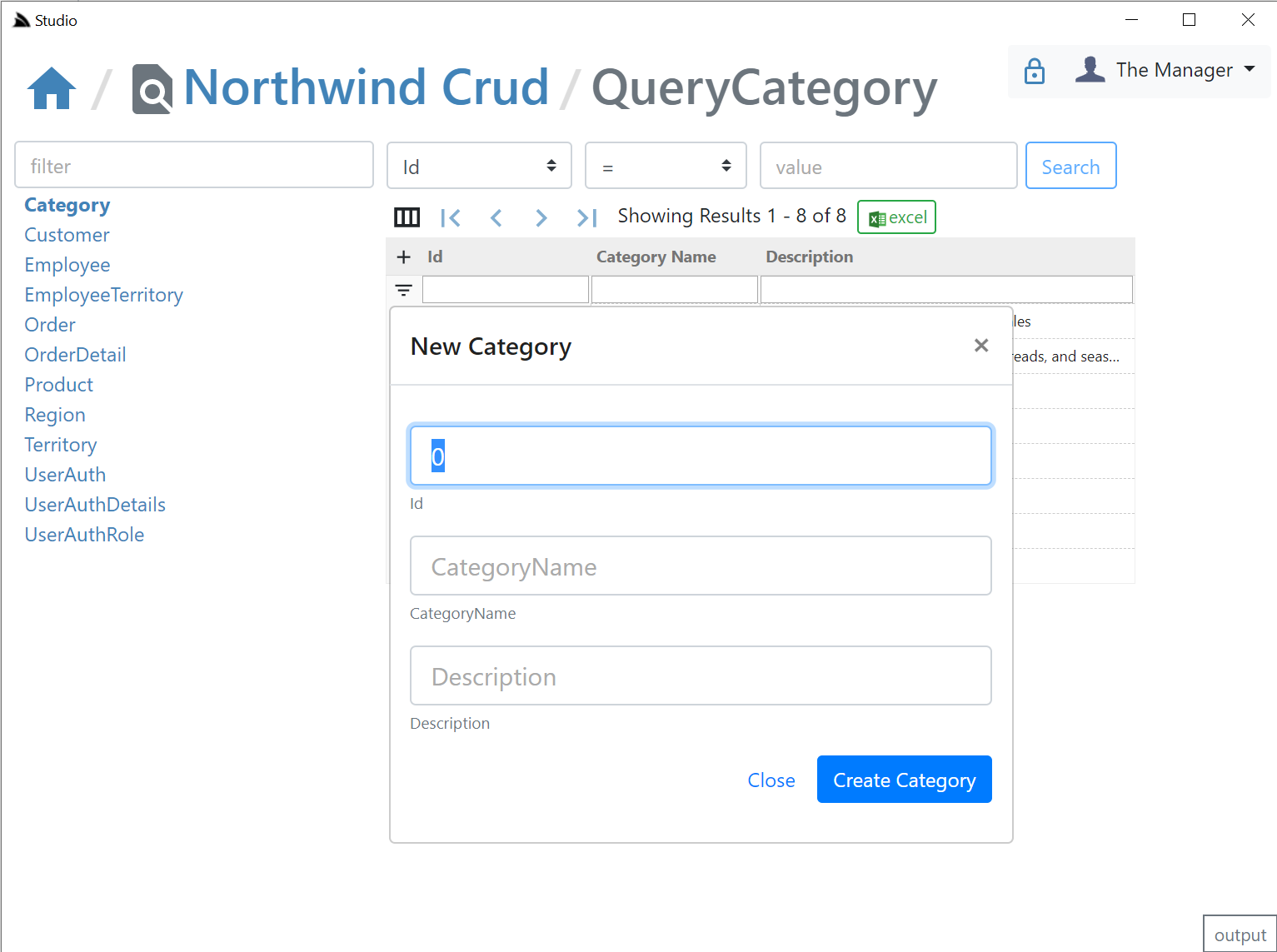

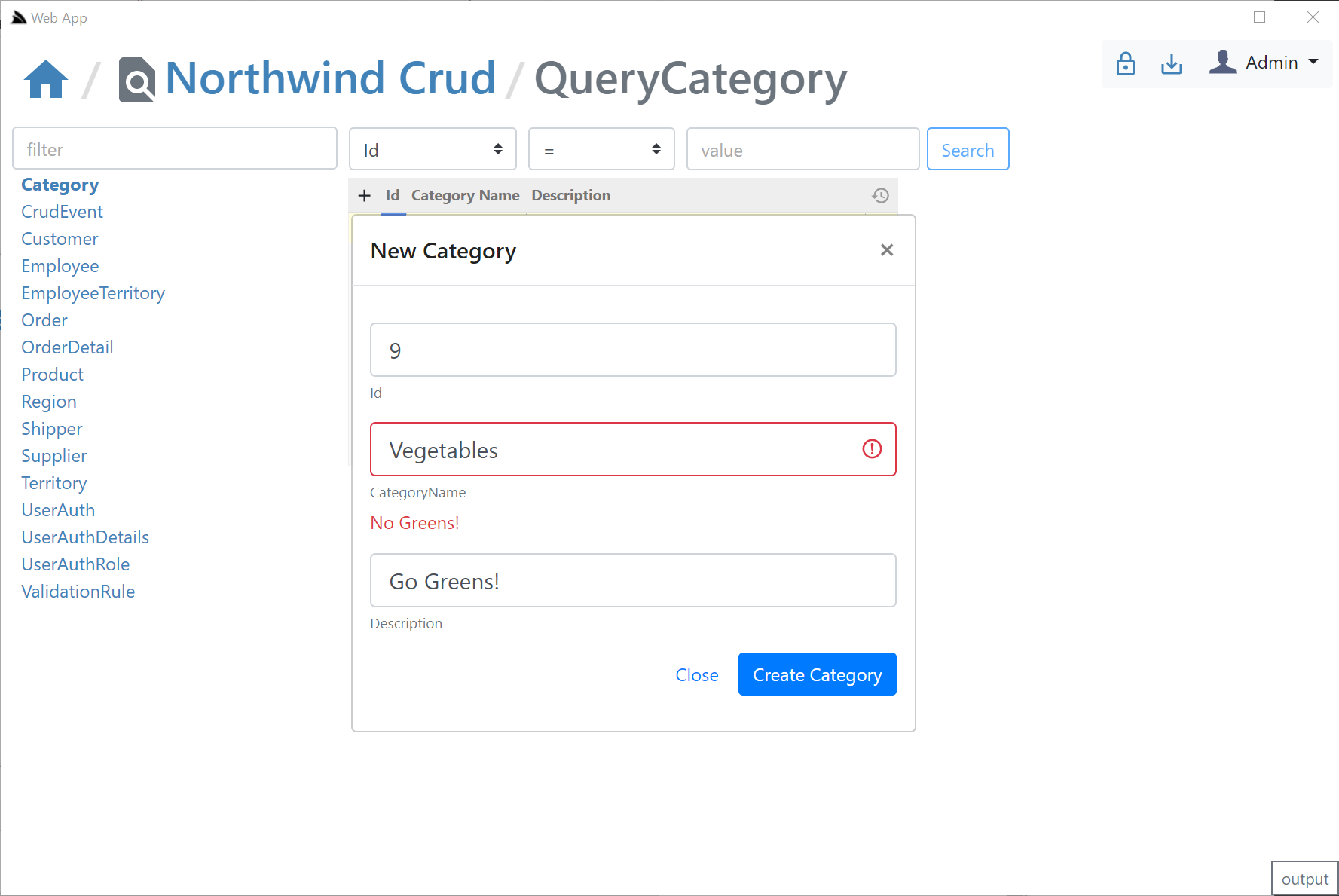

- AutoCrud Create

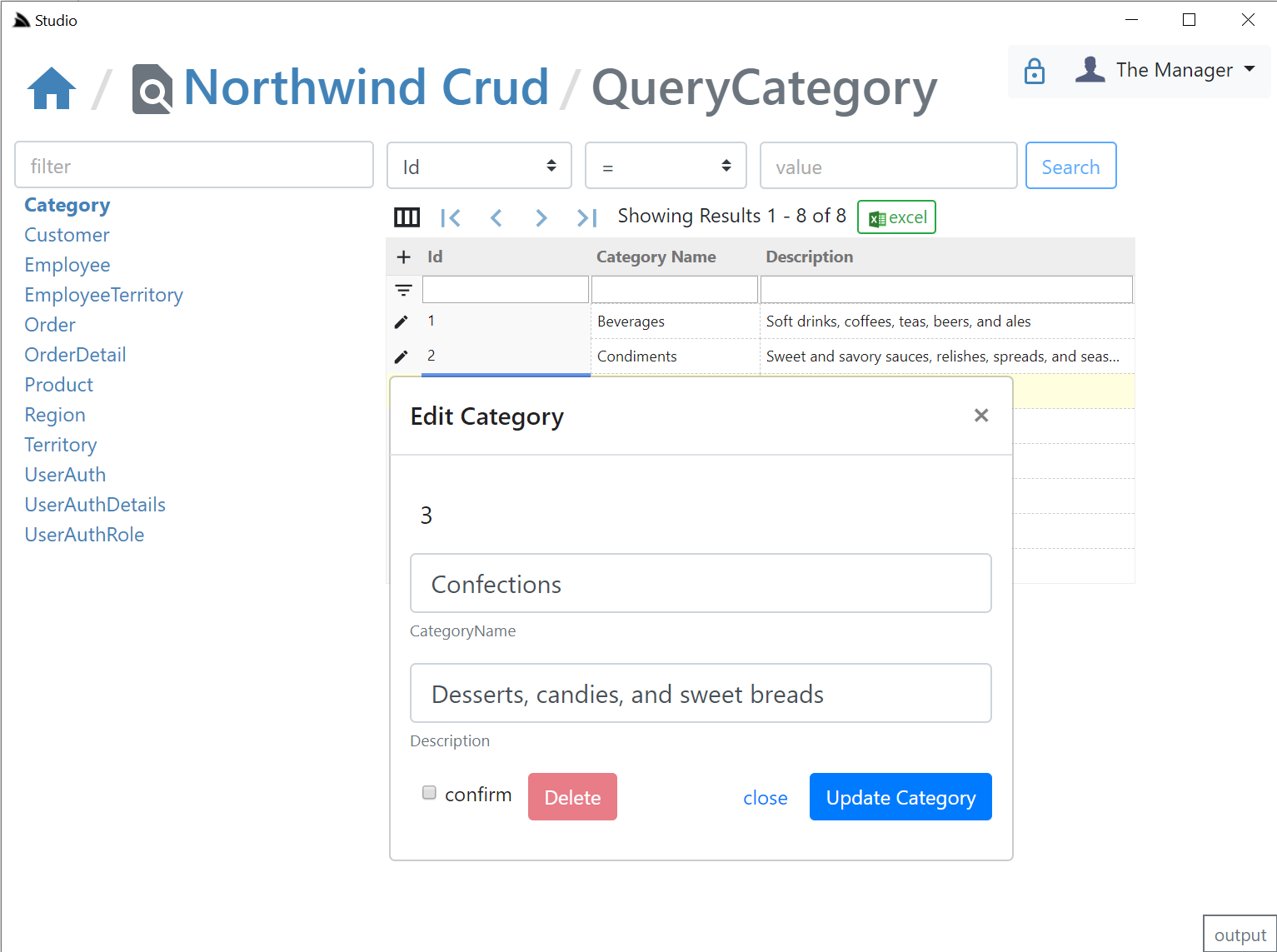

- AutoCrud Update and Delete

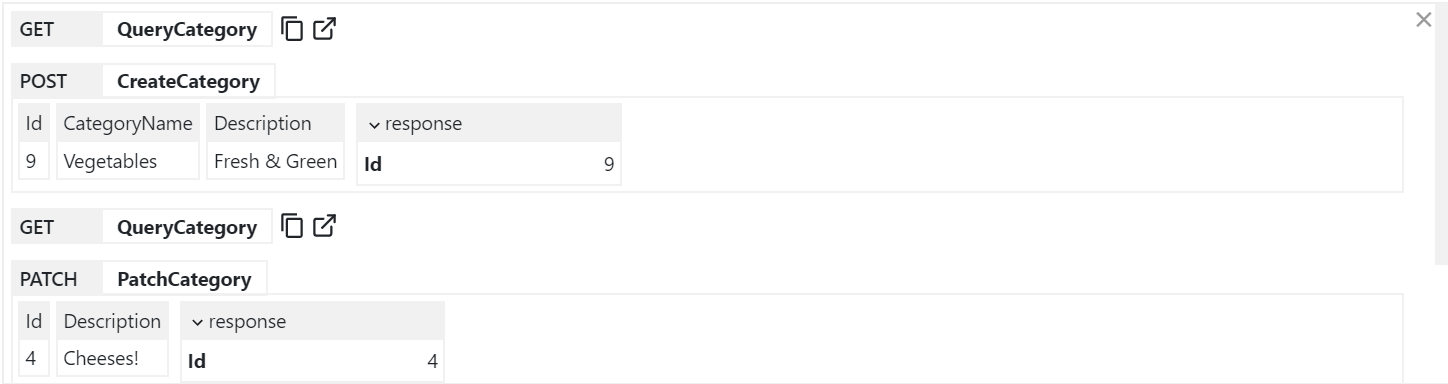

- API Log Viewer

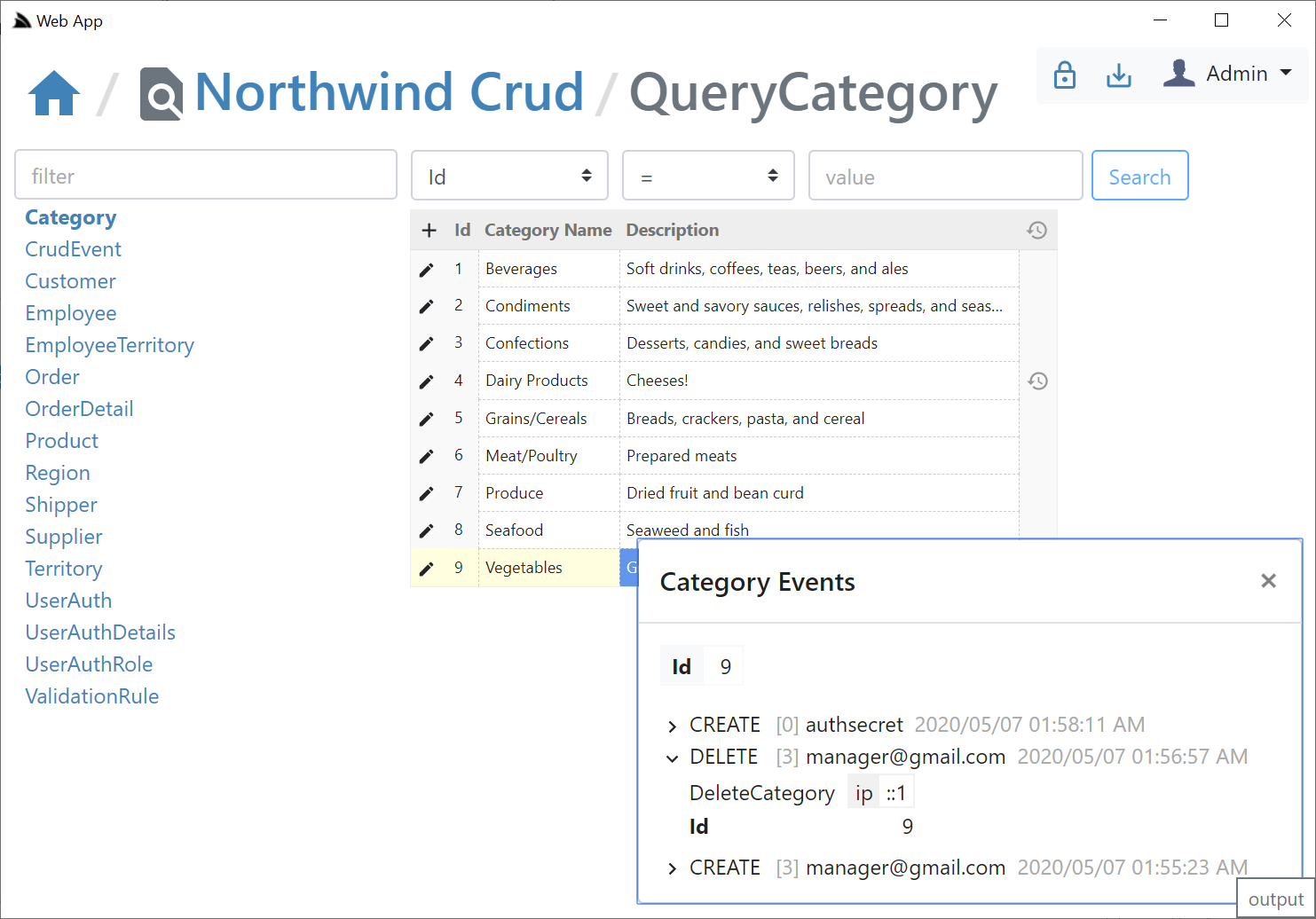

- Executable Audit History

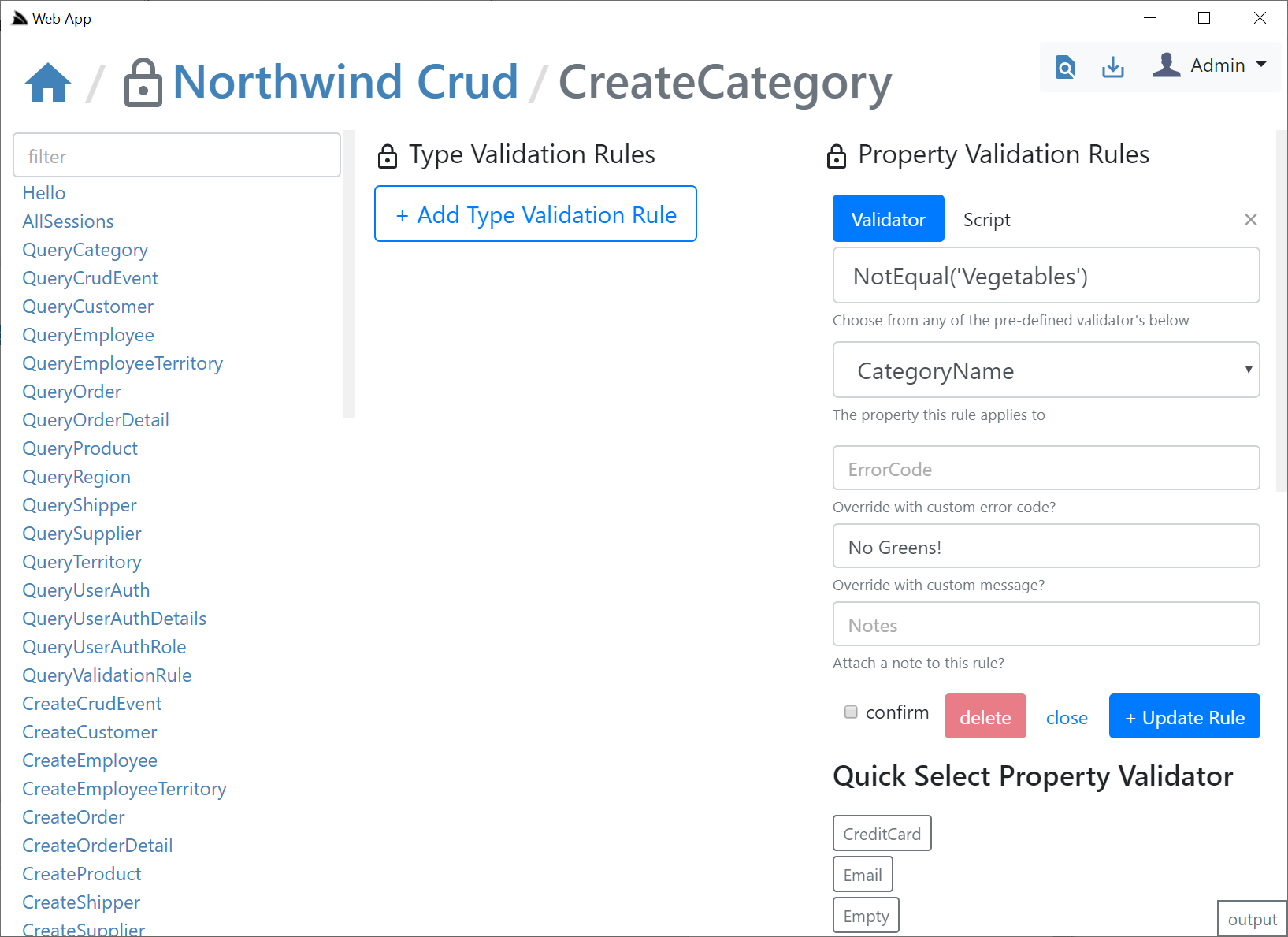

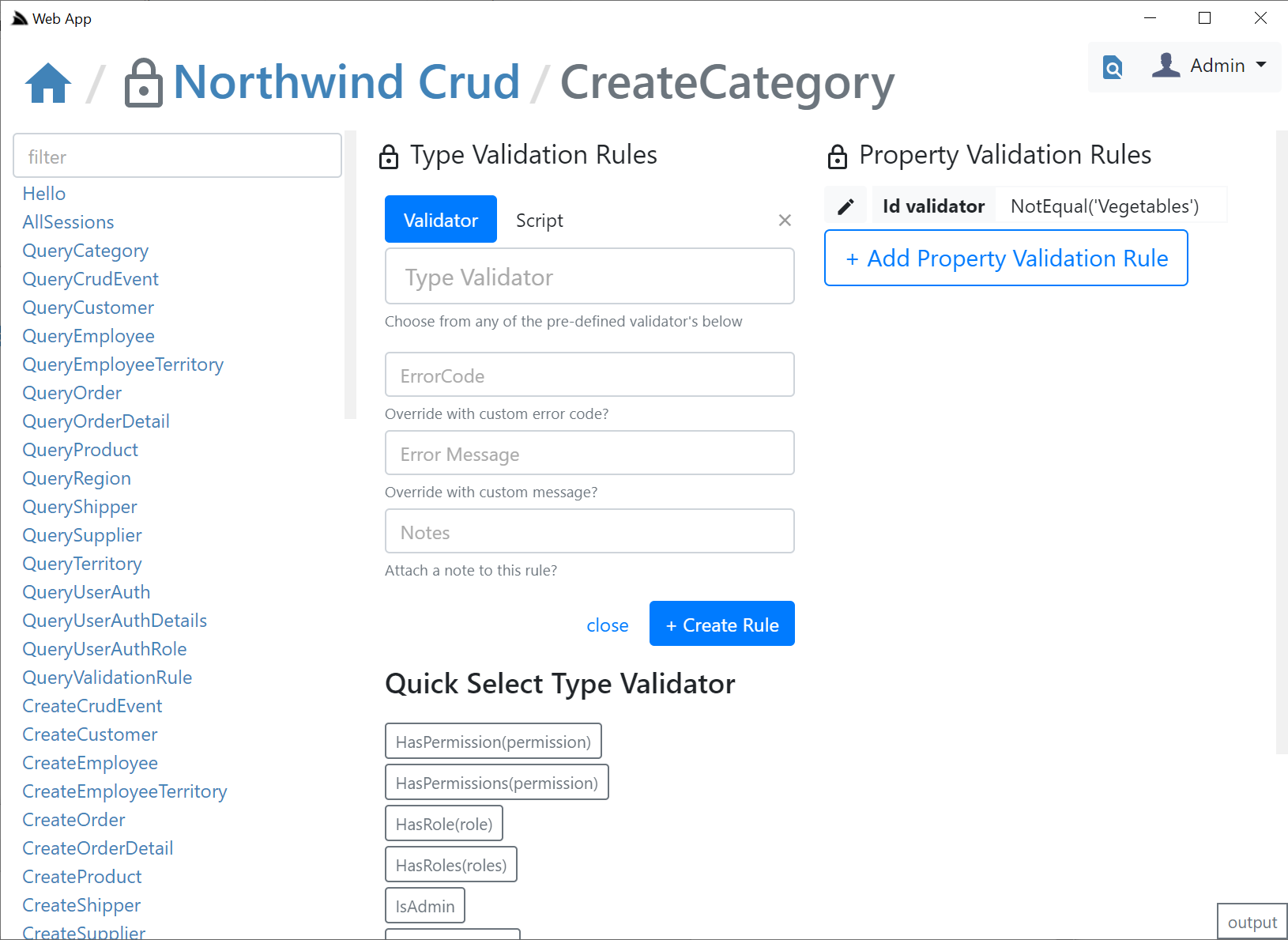

- Validators UI

- Future Updates

- Metadata App Export / Discovery

- AutoQuery

- gRPC code-first Development

- Single Page App Templates

- #Script

- ServiceStack

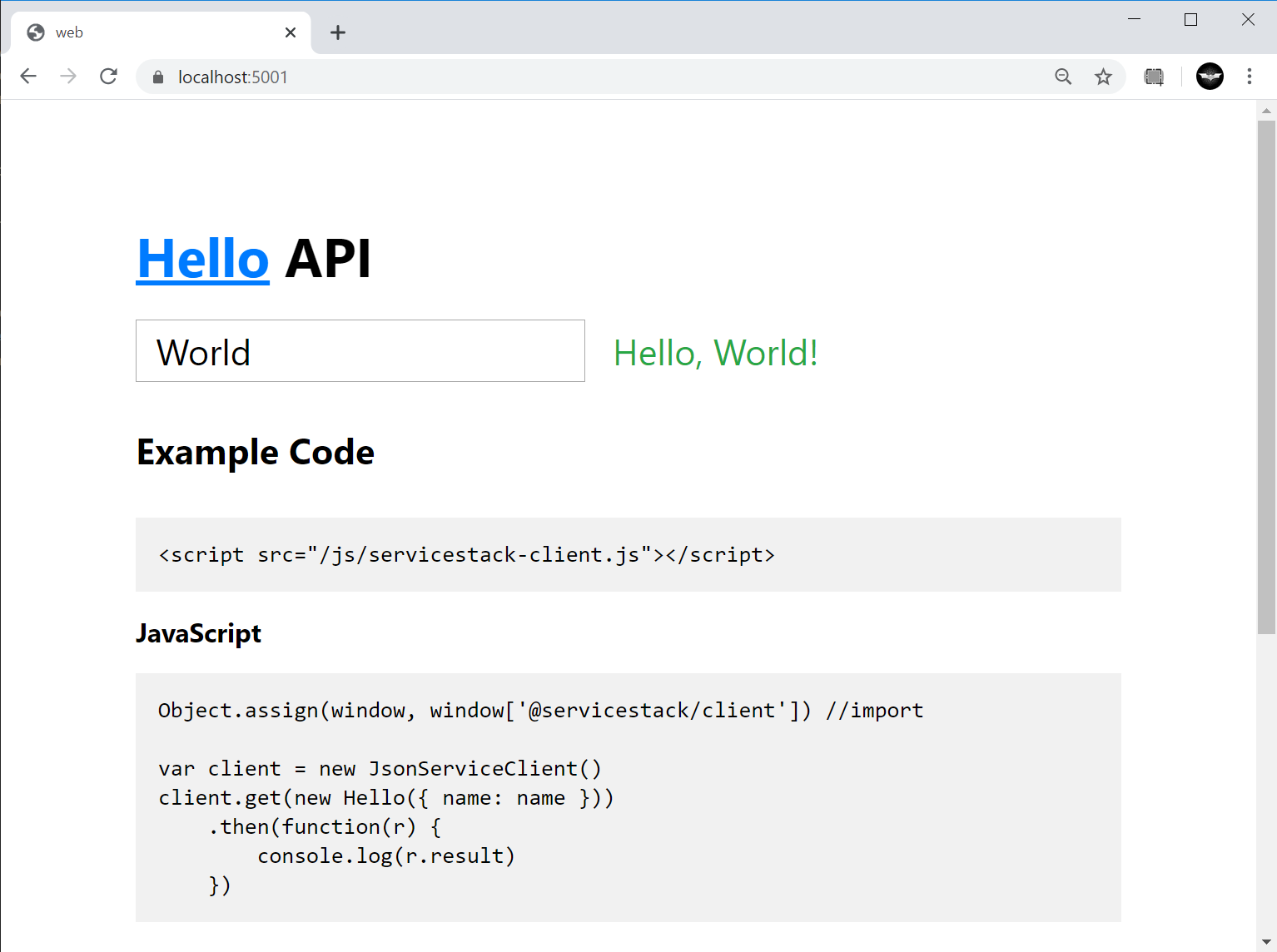

- Embedded UMD build of @servicestack/client

- TypeScript Nullable properties

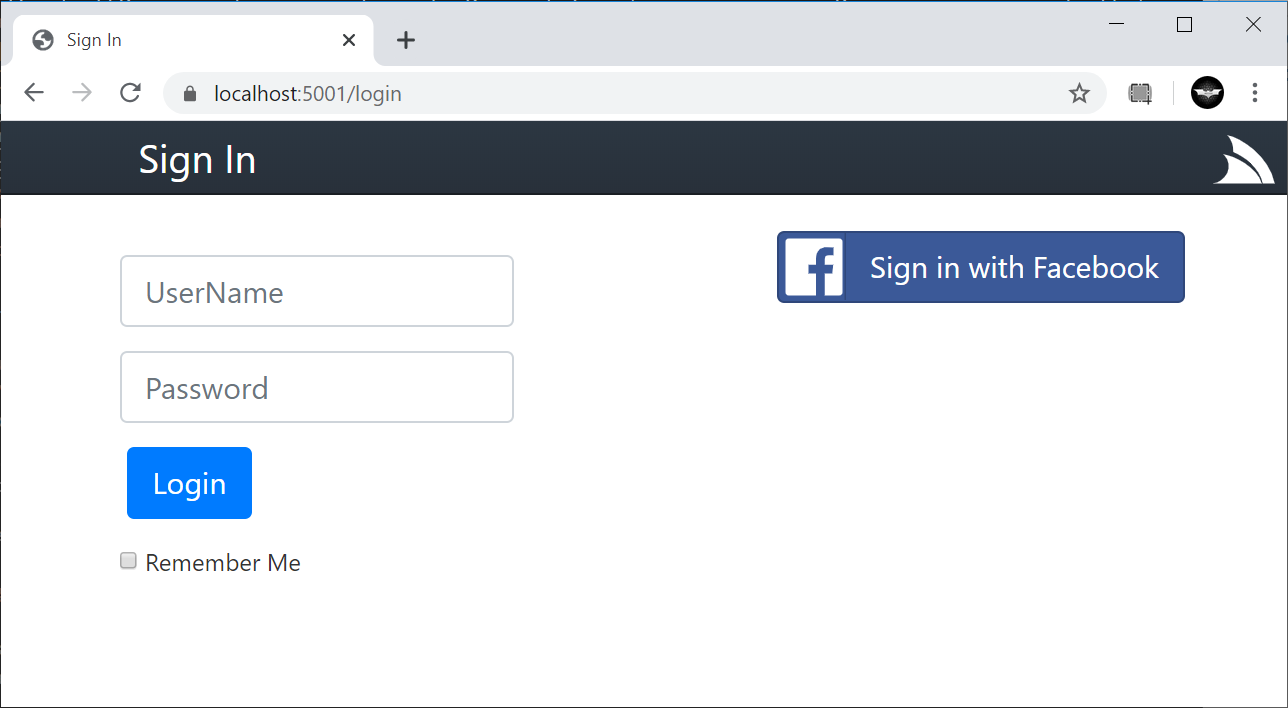

- Embedded Login Page fallback

- Lightweight Customizable HTML Templates

- Hosting ASP.NET Core Apps on Custom Path

- Pluralize and Singularize

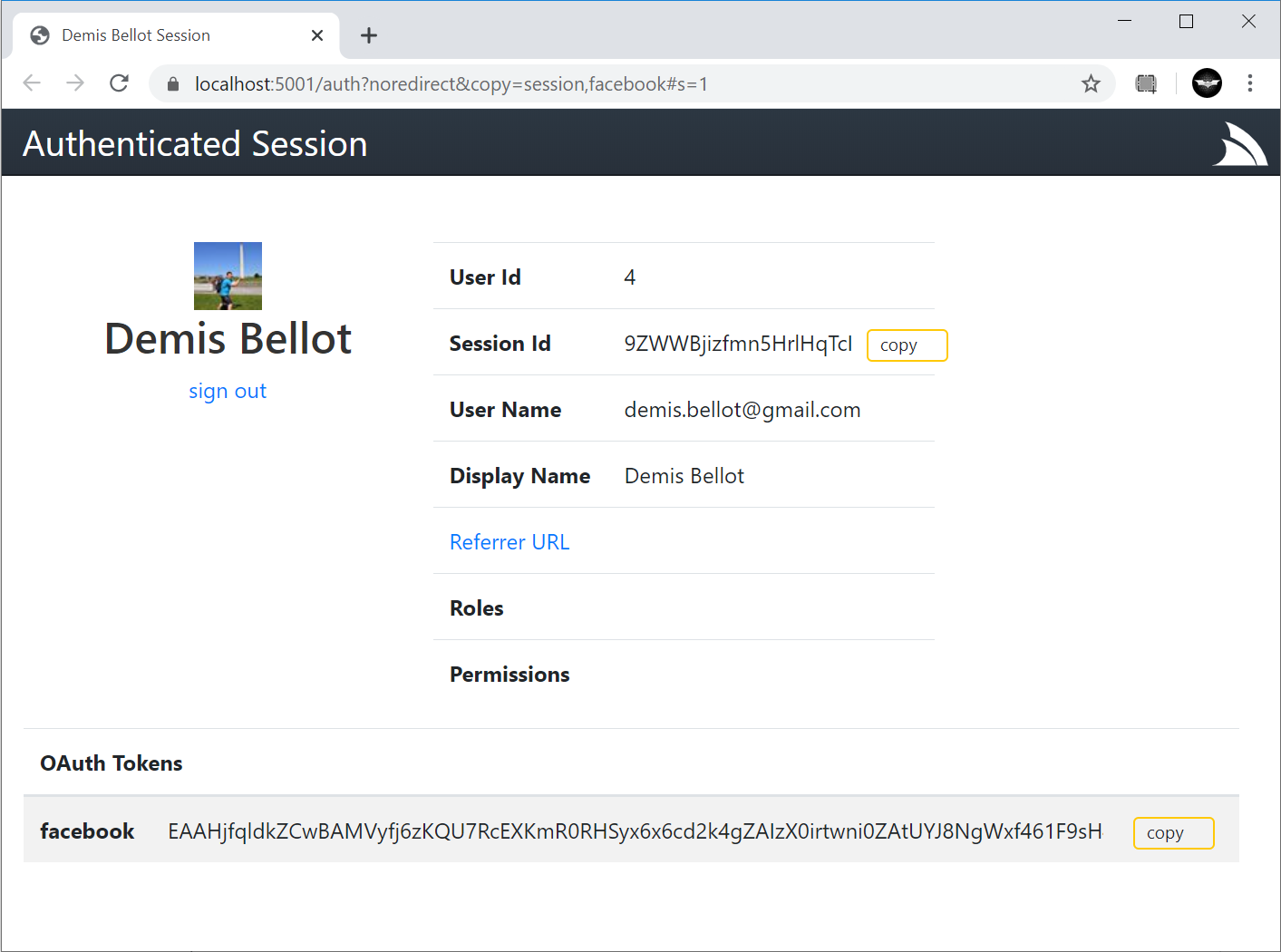

- AuthSecret Admin Session

- Exception Handling

- GatewayExceptionHandlers

- System.Web Shims removed

- XmlSerializerFormat Plugin

- Cache Client

- Server Sent Events

- Stand-alone Razor Views

- OpenApi

- OrmLite

v5.9.2 Patch Release Notes

If you're using JWT Auth please upgrade to v5.9.2 when possible to resolve a JWT signature verification issue.

Introducing ServiceStack Studio!

Another exciting development in this release is the successor to Admin UI: ServiceStack Studio! - a capability-based UI to manage multiple remote ServiceStack instances from either a Chromium Desktop App or cross-platform .NET Core Web App.

The richer metadata in ServiceStack Services allows Studio to logically group Services around Data Models, enabling its high-level semantic features like its native data-grid like UX over all AutoQuery Services to quickly discover, search, create, update and delete entities based on the available AutoQuery APIs and whether Authenticated Users have access to them.

YouTube: youtu.be/2FFRLxs7orU

Instantly Servicify existing Systems!

ServiceStack has also reached maximum autonomy for a Services framework: in addition to AutoQuery automatically providing your Services' implementations and Studio providing an instant UI, ServiceStack has also gained the capability to generate your entire API, including Typed API contracts, data models, implementations & human-friendly pluralized HTTP API routes over an existing System's RDBMS tables!

ServiceStack's AutoGen enables a number of exciting possibilities. Predominantly, it's the fastest way to ServiceStack-ify an existing system's RDBMS, making it an invaluable tool for anyone wanting to quickly migrate to ServiceStack and access the ecosystem of functionality built around ServiceStack Services.

AutoGen's code generation is programmatically customizable where the generated types can be easily augmented with additional declarative attributes to inject your App's conventions into the auto generated Services & Types to apply custom behavior like Authorization & additional validation rules. After codifying your system conventions the generated classes can optionally be "ejected" where code-first development can continue as normal.

This feature enables rewriting or modernizing parts of legacy systems with the least amount of time & effort. Once Servicified, you can take advantage of declarative features like Multitenancy, Optimistic Concurrency & Validation, enable automatic features like Executable Audit History, and allow business users to maintain validation rules in the RDBMS, manage them through Studio & have them applied instantly at runtime and visibly surfaced through ServiceStack's myriad of client UI auto-binding options. Studio can then give stakeholders an instant UI to quickly access and search through their data, import custom queries directly into Excel or access them in other registered Content Types, with fine-grained app-level access control over which tables & operations different users can access.

gRPC's Typed protoc Universe

AutoGen also enables access to ServiceStack's ecosystem of metadata services & connectivity options, making it the fastest way to generate gRPC endpoints over an existing system. This is especially exciting as, in addition to enabling high-performance connectivity to your System's data, it opens it up to all languages in gRPC's protoc universe.

Whilst the Smart, Generic C# / F# / VB.NET Service Clients continue to provide the best UX for consuming gRPC Services, one of the nicest protoc-generated client languages is Dart: a modern high-level language with native class performance & script-like productivity, where individual source files can be run immediately without compilation. Its quality tooling, static analysis & high-level features like async/await make it an ideal exploratory language for consuming gRPC endpoints.

Dart gRPC Script Playground

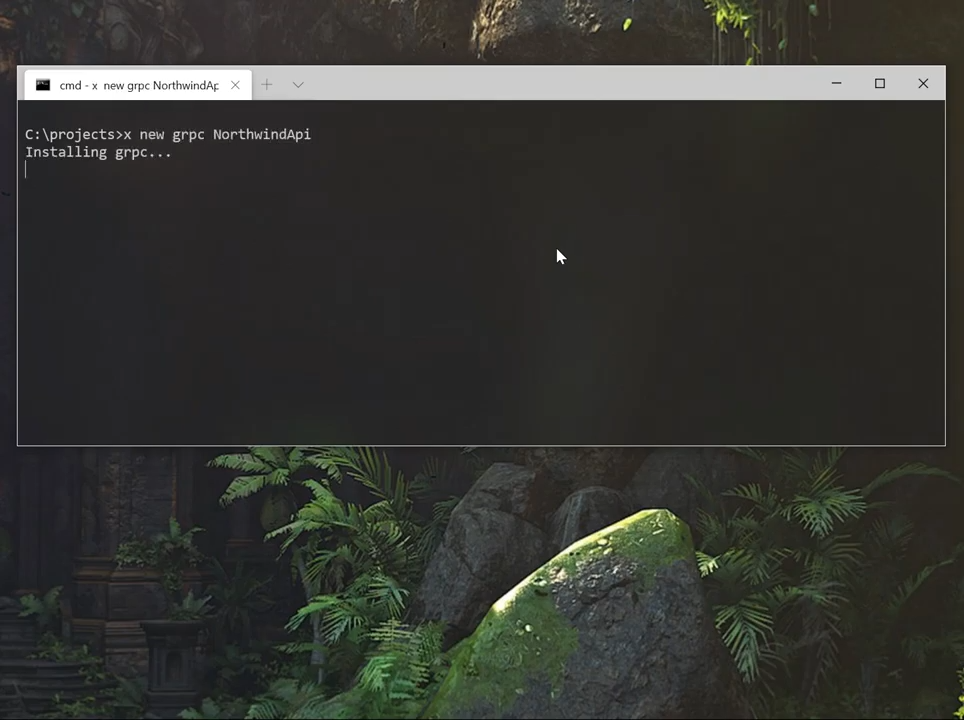

This quick demo shows an example of instantly Servicifying a database & accessing it via gRPC in minutes. Starting with a new grpc project from scratch, it mixes in autocrudgen to configure AutoGen to generate AutoQuery services for the registered sqlite RDBMS that's copied into the project from the northwind.sqlite gist.

Once the servicified App is running it accesses the gRPC Services in a new Dart Console App using the UX-friendly Dart gRPC support in the x dotnet tool to call the protoc generated Services:

YouTube: youtu.be/5NNCaWMviXU

Flutter gRPC Android App

And if you can access it from Dart, you can access it from all platforms Dart runs on, the most exciting being Google's Flutter UI Kit for building beautiful, natively compiled applications for Mobile, Web, and Desktop from a single codebase:

YouTube: youtu.be/3iz9aM1AlGA

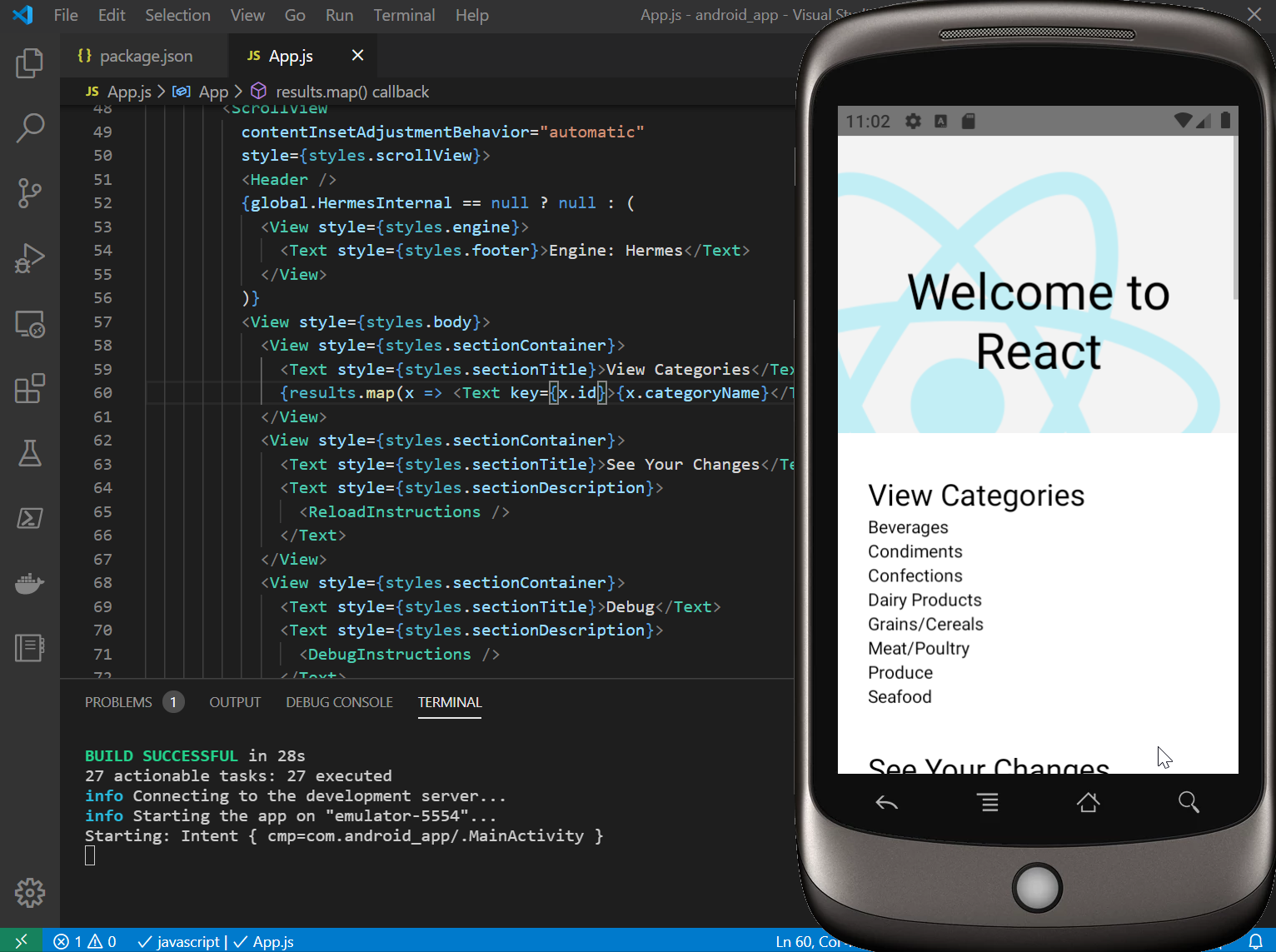

React Native Typed Client

gRPC is just one of the endpoints ServiceStack Services can be accessed from. For an even richer & more integrated development UX, they're also available in all the popular Mobile, Web & Desktop languages that Add ServiceStack Reference supports.

For example TypeScript, which can be used in Browser & Node TypeScript code-bases as well as JavaScript-only code-bases like React Native, a highly productive Reactive UI for developing iOS and Android Apps:

YouTube: youtu.be/6-SiLAbY63w

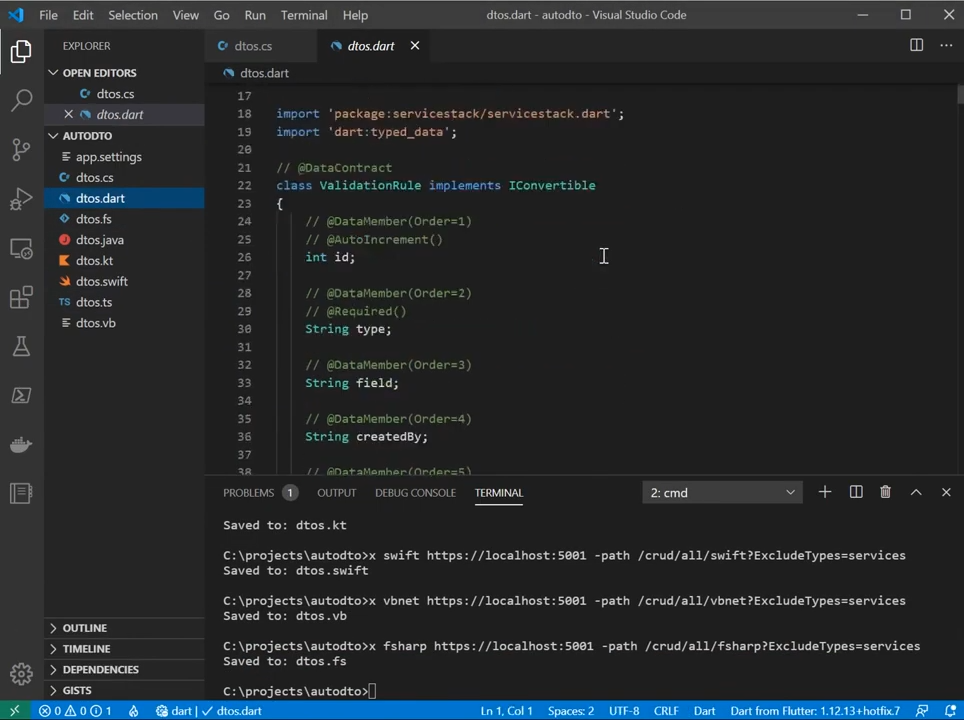

autodto - Generate Types for RDBMS Tables

An unintended consequence of AutoGen that's potentially appealing even to non .NET developers is that it's also a way to instantly generate Types for all RDBMS tables in all of ServiceStack's supported client languages. Thanks to being configurable in a Sharp App, this can be executed from the command-line using the dotnet tools:

$ dotnet tool install --global x

We can then use the mix feature to download the autodto gist containing a pre-configured app.settings, where the only configuration required is the RDBMS it should connect to, which you can specify in a single command when writing the gist, e.g:

$ x mix autodto -replace DIALECT=<dialect> -replace CONNECTION_STRING="<connection-string>"

Dialect: sqlite, sqlserver, postgres, mysql

Or if preferred the connection string can be referenced from an Environment variable with the $ prefix, e.g:

$ x mix autodto -replace DIALECT=postgres -replace CONNECTION_STRING=$TECHSTACKS_DB

YouTube: youtu.be/1dFqzrF1mV8

If you don't have an RDBMS readily available to test this on, you can also mix in a copy of the northwind.sqlite database & configure it with the same command:

$ x mix autodto northwind.sqlite -replace DIALECT=sqlite -replace CONNECTION_STRING=northwind.sqlite

Alternatively you can download the gist and configure it separately with:

$ x mix autodto

Then use a text editor to update app.settings with your RDBMS configuration:

```
debug true
name Auto DTO
defaultRedirect /metadata
features AutoQueryFeature
AutoQueryFeature { MaxLimit: 100 }
AutoQueryFeature.GenerateCrudServices { }

# Configure below. Supported dialects: sqlite, sqlserver, postgres, mysql
db sqlite
db.connection northwind.sqlite
```

Once configured, start this ServiceStack App with:

$ x

Then in another terminal you can download just the generated Types in each language you want, by excluding their Services:

$ x csharp https://localhost:5001 -path /crud/all/csharp?ExcludeTypes=services

$ x typescript https://localhost:5001 -path /crud/all/typescript?ExcludeTypes=services

$ x dart https://localhost:5001 -path /crud/all/dart?ExcludeTypes=services

$ x java https://localhost:5001 -path /crud/all/java?ExcludeTypes=services

$ x kotlin https://localhost:5001 -path /crud/all/kotlin?ExcludeTypes=services

$ x swift https://localhost:5001 -path /crud/all/swift?ExcludeTypes=services

$ x vbnet https://localhost:5001 -path /crud/all/vbnet?ExcludeTypes=services

$ x fsharp https://localhost:5001 -path /crud/all/fsharp?ExcludeTypes=services

Every language is customizable using its DTO Customization Options, so you could uncomment the GlobalNamespace option to generate types in your preferred namespace instead:

GlobalNamespace: TechStacks

Then regenerate it using the full language name above or their wrist-friendly 2-letter abbreviation:

x cs

x ts

x da

x ja

x kt

x sw

x vb

x fs

Or put these commands in a .bat or .sh script to automate re-generating all languages in a single command.
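
As one hypothetical way to produce such a .sh (or .bat) file, the TypeScript sketch below builds its contents from the language names and 2-letter abbreviations listed above. `LANGUAGES`, `downloadCommands()` and `regenScript()` are illustrative names, not part of the `x` tool; the `/crud/all/<lang>?ExcludeTypes=services` path is taken from the commands above, and saving or running the generated text is left to you.

```typescript
// Hypothetical helpers that build the text of a script for the `x` dotnet tool.
// Full language names map to the 2-letter abbreviations listed above.
const LANGUAGES: Record<string, string> = {
  csharp: "cs",
  typescript: "ts",
  dart: "da",
  java: "ja",
  kotlin: "kt",
  swift: "sw",
  vbnet: "vb",
  fsharp: "fs",
};

// Initial download of just the Types (Services excluded) for every language.
// The -path value is quoted so `?` isn't treated as a shell glob character.
function downloadCommands(baseUrl: string): string[] {
  return Object.keys(LANGUAGES).map(
    (lang) => `x ${lang} ${baseUrl} -path "/crud/all/${lang}?ExcludeTypes=services"`
  );
}

// Re-generate previously downloaded DTOs using the 2-letter abbreviations.
function regenScript(shebang = true): string {
  const lines = Object.keys(LANGUAGES).map((lang) => `x ${LANGUAGES[lang]}`);
  return (shebang ? ["#!/bin/sh", ...lines] : lines).join("\n") + "\n";
}
```

Pass `false` to `regenScript()` when writing a Windows .bat file, which has no shebang line.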

Introducing SharpData!

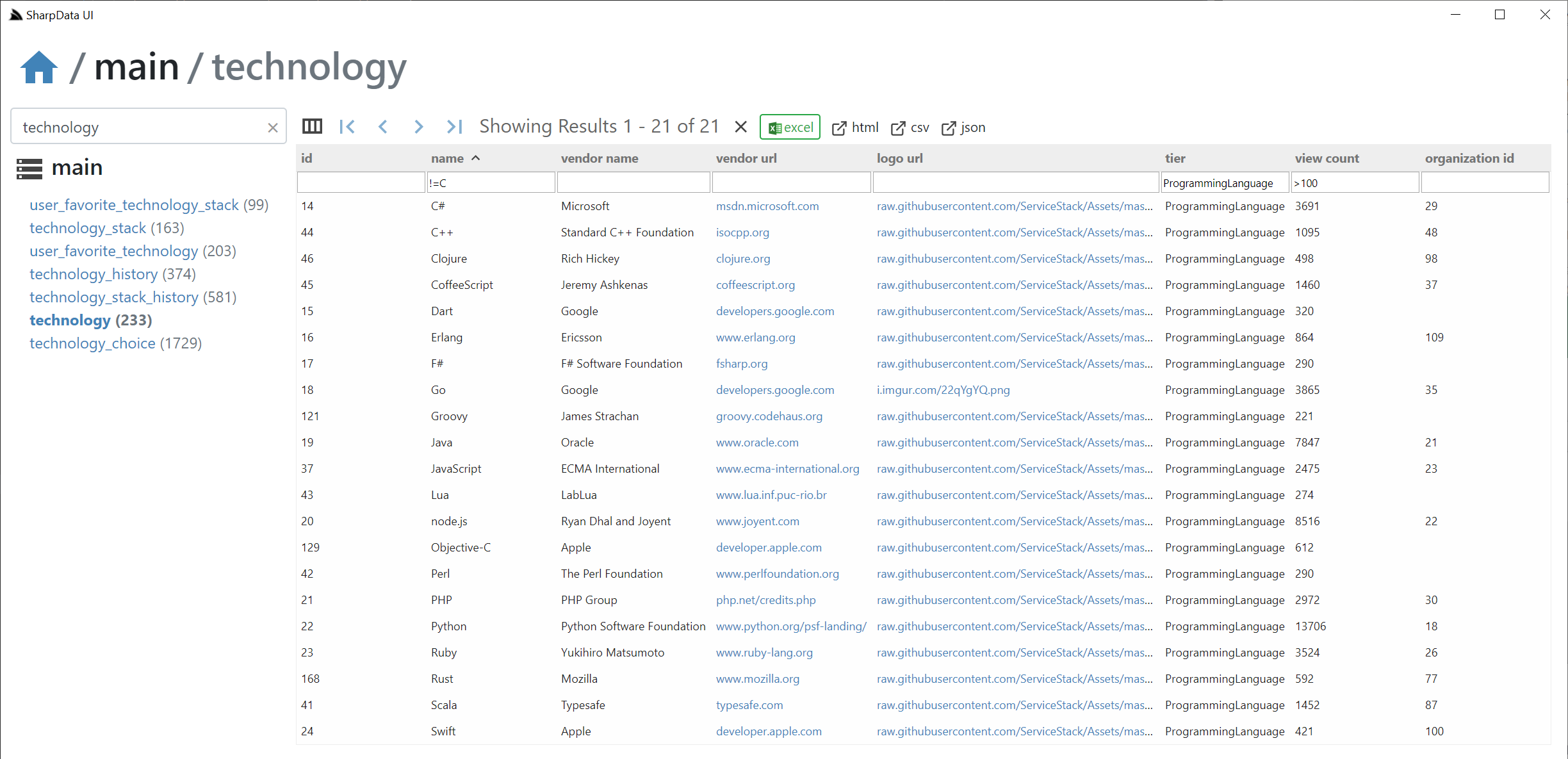

Before we delve into the typed world of AutoCrud, we'd like to introduce the SharpData .NET Core project - another generic tool (useful for non .NET devs) for providing an instant UI around multiple RDBMS's:

YouTube: youtu.be/GjVipOqwZMA

It makes use of the app dotnet tool for running Chromium Gist Desktop Apps on-the-fly without installation, from a single URL that can also mix in additional gists which can be used in SharpData to configure RDBMS's, copy SQLite databases and apply per-database customizations to add navigable deep links and customized UI Views to each table resultset.

Whilst SharpData supports connecting to most popular RDBMS's, it's especially useful for deploying an instant stand-alone UI with an embedded SQLite database which can be published independently in a gist and launched from a single URL.

For an example of this in action we've published customized gists for the Northwind and Chinook SQLite databases which after installing the latest app dotnet tool:

$ dotnet tool install -g app

Can be run from the link below in a Windows x64 Desktop App:

Or via command-line:

$ app open sharpdata mix northwind.sharpdata

$ app open sharpdata mix chinook.sharpdata

Cross platform using the x dotnet tool (in Default Browser):

$ x open sharpdata mix northwind.sharpdata

$ x open sharpdata mix chinook.sharpdata

Each of these options will download & run the latest version of SharpData along with a copy of the northwind.sharpdata or chinook.sharpdata gists on-the-fly containing the embedded SQLite DB along with any UI customizations.

Hosted as a .NET Core App

As NetCoreApps/SharpData is also a standard .NET Core project, it can also be deployed as a normal stand-alone .NET Core Web App:

https://sharpdata.netcore.io

Tiny footprint

An impressively capable .NET Core App that fits into a tiny 20kb .zip footprint thanks to the Gist Desktop App Architecture. Its small dynamic #Script & Vue TypeScript code-base also makes it highly customizable to tailor & further extend with App-specific requirements, making it suitable for offering advanced system users a quick, capable, customized read-only UI of your DBs.

SharpData started as a demonstration of how productive #Script can be in the many areas where dynamic languages offer far superior productivity than the typical .NET approach of using C# to type an entire code-base & models.

For example a single #Script page provides much of AutoQuery's functionality: an instant HTTP API (in all registered ServiceStack formats) around all registered RDBMS tables, in all OrmLite-supported RDBMS's, with support for custom fields, multiple querying options, paging and multiple OrderBy's, in a parameterized SQL query executed with OrmLite's async SQL DB APIs:

AutoQuery Script

/db/_db/_table/index.html

````
{{ {namedConnection:db} |> if (db && db != 'main') |> useDb }}

```code|quiet
var ignore = ['db','fields','format','skip','take','orderBy']
var fields = qs.fields ? qs.fields.split(',').map(x => sqlQuote(x)).join(',') : '*'
var sql = `SELECT ${fields} FROM ${sqlQuote(table)}`

var filters = []
var queryMap = qs.toObjectDictionary().withoutKeys(ignore)
#each queryMap.Keys.toList()
    var search = queryMap[it.sqlVerifyFragment()].sqlVerifyFragment();
    #if search == '=null' || search == '!=null'
        `${sqlQuote(it)} ${search=='=null' ? 'IS' : 'IS NOT'} NULL` |> addTo => filters
        queryMap[it] = null
    else if search.startsWith('=')
        `${sqlQuote(it)} = @${it}` |> addTo => filters
        queryMap[it] = search.substring(1).coerce()
    else if search.startsWith('<=') || search.startsWith('>=') || search.startsWith('!=')
        `${sqlQuote(it)} ${search.substring(0,2)} @${it}` |> addTo => filters
        queryMap[it] = search.substring(2).coerce()
    else if search.startsWith('<') || search.startsWith('>')
        `${sqlQuote(it)} ${search.substring(0,1)} @${it}` |> addTo => filters
        queryMap[it] = search.substring(1).coerce()
    else if search.endsWith(',')
        `${sqlQuote(it)} IN (${search.trimEnd(',').split(',').map(i=>i.toLong()).join(',')})` |> addTo=>filters
        queryMap[it] = null
    else if search.startsWith('%') || search.endsWith('%')
        `${sqlQuote(it).sqlCast('varchar')} LIKE @${it}` |> addTo => filters
    else
        `${sqlQuote(it).sqlCast('varchar')} = @${it}` |> addTo => filters
    /if
/each

#if !filters.isEmpty()
    sql = `${sql} WHERE ${filters.join(' AND ')}`
/if

#if qs.orderBy
    sql = `${sql} ORDER BY ${sqlOrderByFields(qs.orderBy)}`
/if

#if qs.skip || qs.take
    sql = `${sql} ${sqlLimit(qs.skip,qs.take)}`
/if

sql |> dbSelect(queryMap) |> return
```

{{ ifError |> show(sql) }}
{{htmlError}}
````

The _ prefixes in the path utilize Page Based Routing, enabling CoC-based Clean URL routes without needing to define & maintain separate routes, where the same script supports querying all registered multitenancy databases.

Instant Customizable RDBMS UI

The SharpData project essentially provides a UI around this script, surfacing its features & giving it instant utility. It ended up being so useful that it's become the quickest way to perform fast adhoc DB queries: it's easy to configure which RDBMS's & tables to show in a simple text file, its UI is easy to customize, it enables 1-click export into Excel, and its shortcut syntax support in column filters is a fast way to perform quick adhoc queries.

Quick Tour

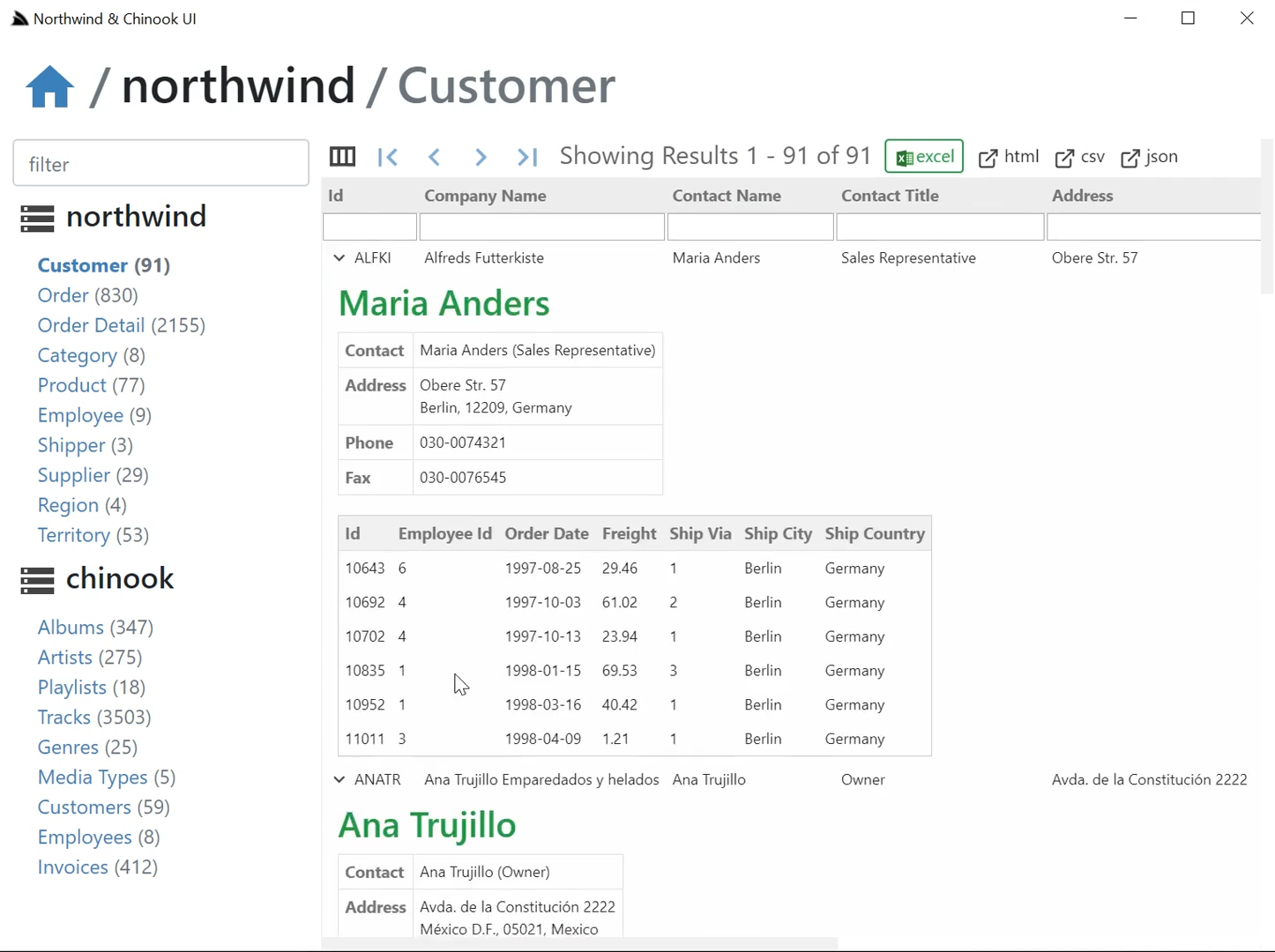

We'll quickly go through some of its features to give you an idea of its capabilities. From the above screenshot we can see some of its filtering capabilities. All results displayed in the UI are queried using the above sharpdata #Script HTTP API, which supports the following features:

Filters

All query string parameters except for db, fields, format, skip, take and orderBy are treated as filters, where you can:

- Use =null or !=null to search NULL columns
- Use a <=, <, >, >=, <> or != prefix to search with that operator
- Use a trailing comma (,) to perform an IN (values) search (integer columns only)
- Use a % suffix or prefix to perform a LIKE search
- Use an = prefix to perform a coerced "JS" search, for exact number, boolean, null and WCF date comparisons
- Otherwise it performs a "string equality" search by default, where columns are cast and compared as strings

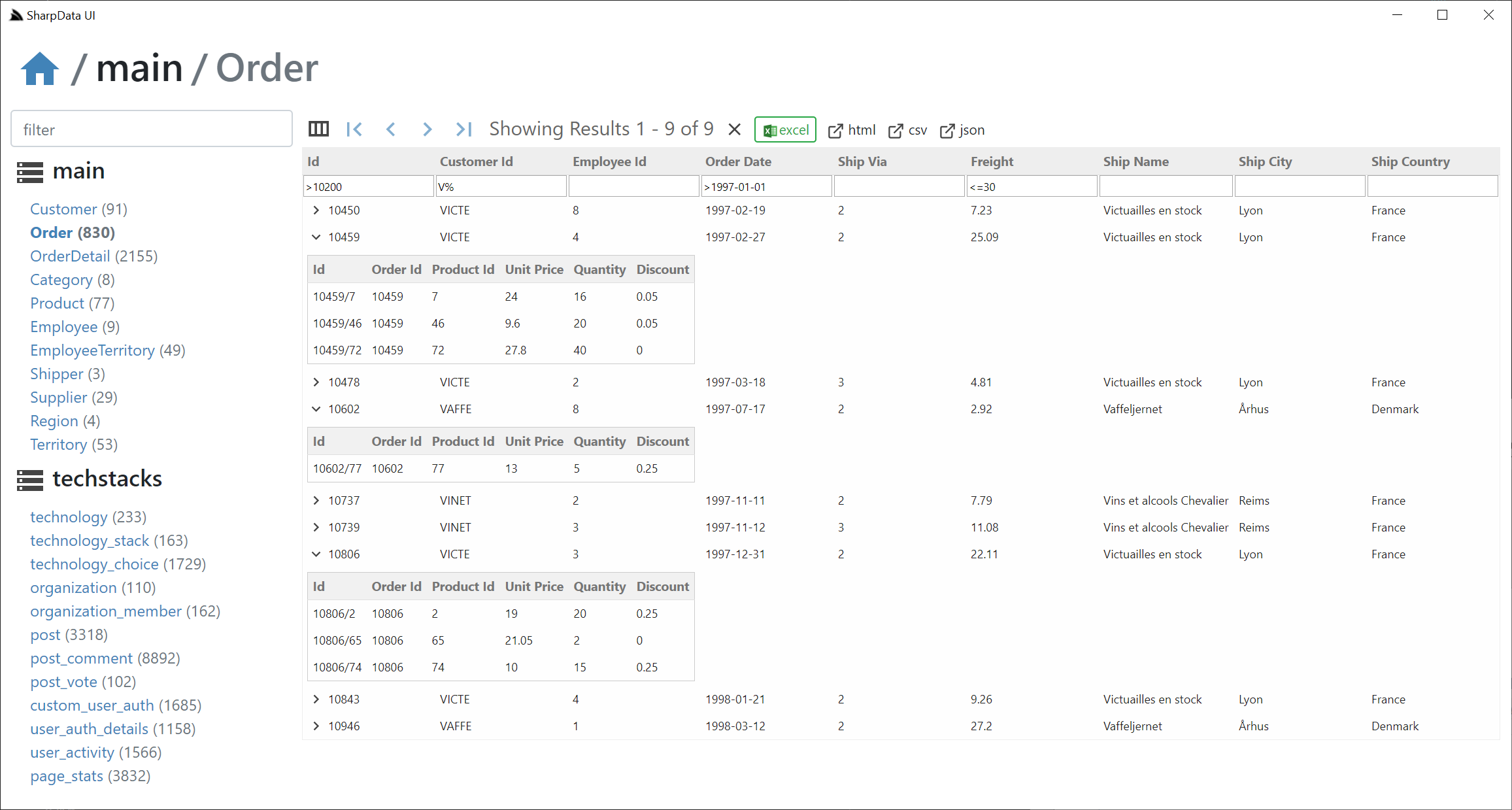

Here's the filtered list used in the above screenshot:

/db/main/Order?Id=>10200&CustomerId=V%&Freight=<=30&OrderDate=>1997-01-01
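
To make these filter conventions concrete, here is a simplified TypeScript sketch that turns a map of (already URL-decoded) query string values into a parameterized WHERE clause, following the branch order of the #Script page shown earlier. It is not SharpData's code. `buildWhere`, `toFilter` and `coerce` are hypothetical. It assumes ANSI double-quoted identifiers and `CAST(... AS VARCHAR)` for string comparisons, and its `coerce` only handles numbers, booleans and `null`, whereas real dialects (and #Script's `coerce`, which also handles WCF dates) differ. It also treats `<>` as a two-character operator, as the list above describes.

```typescript
// Simplified sketch of SharpData's column filter conventions (hypothetical, not its source).
// Assumptions: ANSI double-quoted identifiers, CAST(... AS VARCHAR) for string
// comparisons, and a minimal coerce() for numbers, booleans and null.
interface Filter {
  sql: string;
  param?: { name: string; value: unknown };
}

// Query string keys that control the query rather than filter it
const RESERVED = ["db", "fields", "format", "skip", "take", "orderBy"];

function quote(column: string): string {
  // Only allow plain identifiers so column names can't inject SQL
  if (!/^[A-Za-z_][A-Za-z0-9_]*$/.test(column)) throw new Error(`Invalid column: ${column}`);
  return `"${column}"`;
}

function coerce(s: string): unknown {
  if (s === "null") return null;
  if (s === "true" || s === "false") return s === "true";
  const n = Number(s);
  return s.trim() !== "" && !Number.isNaN(n) ? n : s;
}

function toFilter(column: string, search: string): Filter {
  const col = quote(column);
  const param = (value: unknown) => ({ name: column, value });

  if (search === "=null" || search === "!=null")
    return { sql: `${col} ${search === "=null" ? "IS" : "IS NOT"} NULL` };
  if (search.startsWith("="))
    return { sql: `${col} = @${column}`, param: param(coerce(search.substring(1))) };

  const op2 = ["<=", ">=", "!=", "<>"].find((op) => search.startsWith(op));
  if (op2)
    return { sql: `${col} ${op2} @${column}`, param: param(coerce(search.substring(2))) };
  if (search.startsWith("<") || search.startsWith(">"))
    return { sql: `${col} ${search[0]} @${column}`, param: param(coerce(search.substring(1))) };

  if (search.endsWith(",")) {
    // Trailing comma => IN (...) over integer values, inlined only after validation
    const ids = search.replace(/,+$/, "").split(",").map((s) => {
      const n = Number(s);
      if (s.trim() === "" || !Number.isInteger(n)) throw new Error(`IN filters only support integers: ${s}`);
      return n;
    });
    return { sql: `${col} IN (${ids.join(",")})` };
  }

  const asString = `CAST(${col} AS VARCHAR)`;
  if (search.startsWith("%") || search.endsWith("%"))
    return { sql: `${asString} LIKE @${column}`, param: param(search) };
  return { sql: `${asString} = @${column}`, param: param(search) };
}

function buildWhere(query: Record<string, string>): { where: string; params: Record<string, unknown> } {
  const filters: string[] = [];
  const params: Record<string, unknown> = {};
  for (const key of Object.keys(query)) {
    if (RESERVED.indexOf(key) >= 0) continue;
    const f = toFilter(key, query[key]);
    filters.push(f.sql);
    if (f.param) params[f.param.name] = f.param.value;
  }
  return { where: filters.length > 0 ? `WHERE ${filters.join(" AND ")}` : "", params };
}
```

Applied to the filtered list above, `buildWhere({ Id: ">10200", CustomerId: "V%", Freight: "<=30", OrderDate: ">1997-01-01" })` produces `"Id" > @Id AND CAST("CustomerId" AS VARCHAR) LIKE @CustomerId AND "Freight" <= @Freight AND "OrderDate" > @OrderDate`, with `Id` and `Freight` coerced to numbers. Values always travel as parameters, except IN lists, which are validated as integers before being inlined.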

Custom Field Selection

The column selection icon on the top left of the results lets you query a custom selection of columns, specified as a comma-delimited list using ?fields.

Multiple OrderBy's

You can use AutoQuery Syntax to specify multiple Order By's:

Paging

Use ?skip and ?take to page through a result set.

Format

Use ?format to specify which Content-Type to return the results in, e.g:

Multitenancy

You can specify which registered DB to search using the path info, use main to query the default database:

/db/<named-db>/<table>
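
Putting these query options together, a client could build API URLs with a small helper like the hypothetical `sharpDataUrl()` below. It's a sketch that assumes the parameter names described above (`fields`, `orderBy`, `skip`, `take`, `format`) plus raw filter values, all URL encoded with `encodeURIComponent`. The `-Freight` descending syntax in the comment is an assumption based on AutoQuery's OrderBy convention.

```typescript
// Hypothetical helper: builds a URL for the SharpData #Script HTTP API using the
// /db/<named-db>/<table> path and the query options described above.
interface SharpDataQuery {
  fields?: string[];                 // ?fields: comma-delimited column names
  orderBy?: string;                  // AutoQuery-style OrderBy, e.g. "-Freight,Id" (assumed)
  skip?: number;
  take?: number;
  format?: string;                   // e.g. "json" or "csv"
  filters?: Record<string, string>;  // raw filter syntax, e.g. { Freight: "<=30" }
}

function sharpDataUrl(db: string, table: string, q: SharpDataQuery = {}): string {
  const parts: string[] = [];
  const add = (key: string, value: string) =>
    parts.push(`${encodeURIComponent(key)}=${encodeURIComponent(value)}`);

  if (q.fields && q.fields.length > 0) add("fields", q.fields.join(","));
  if (q.orderBy) add("orderBy", q.orderBy);
  if (q.skip != null) add("skip", String(q.skip));
  if (q.take != null) add("take", String(q.take));
  if (q.format) add("format", q.format);

  const filters = q.filters || {};
  for (const key of Object.keys(filters)) add(key, filters[key]);

  // "main" queries the default database
  const path = `/db/${encodeURIComponent(db || "main")}/${encodeURIComponent(table)}`;
  return parts.length > 0 ? `${path}?${parts.join("&")}` : path;
}
```

The returned path is relative, so it would be resolved against wherever SharpData is hosted.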

Launching SharpData

To run SharpData in a .NET Core Desktop App you'll need the latest app dotnet tool:

$ dotnet tool update -g app

If you're on macOS/Linux you can use the x dotnet tool instead to view SharpData in your default browser.

Configure RDBMS from command-line

You can override which database to connect to by specifying it on the command line, e.g. here's an example of connecting to the https://techstacks.io RDBMS:

$ app open sharpdata -db postgres -db.connection $TECHSTACKS_DB

This will open SharpData listing all of TechStacks' RDBMS tables. If you have a lot of tables, the Sidebar filter provides a quick way to find the table you want, e.g:

app URL Schemes

What can be done with the open command on the command-line can also be done from a custom URL Scheme. This feature opens up a myriad of new possibilities, as app can open Gist Desktop Apps from Gists or from public & private GitHub repositories, downloading and launching Apps on the fly with custom arguments. This allows a single URL to run a never-installed Desktop App stored in a Gist & pass it custom params to enable deep linking.

With this, organizations could maintain a dashboard of links to their different Desktop Apps that anyone can access. This is especially useful as the only software needed to run any Sharp App is the app dotnet tool, which bundles all ServiceStack .dll's & dependencies (including Vue/React/Bootstrap, fontawesome and Material SVG Icon assets). As a result, the only files that need to be published are the App's specific resources, which is how Apps like SharpData can be compressed into a 20kb .zip. This tiny payload makes it viable to download the latest App on each run, removing the pain & friction of distributing updates, as everyone's already running the latest version every time it's run.

Should you need to (e.g. for a large Sharp App or when github.com is down), you can run your previously cached local copy of the App using run:

$ app run sharpdata

With Custom URL Schemes everyone with app installed can view any database they have network access to from specifying the db type and connection string in the URL:

app://sharpdata?db=postgres&db.connection={CONNECTION_STRING}

CONNECTION_STRING needs to be URL Encoded, e.g. with JS's encodeURIComponent()

or by specifying an Environment variable containing the connection string:

app://sharpdata?db=postgres&db.connection=$TECHSTACKS_DB
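
For example, an internal page listing database links could generate these URLs with a helper like the hypothetical `sharpDataAppUrl()` below. This minimal sketch URL encodes literal connection strings with `encodeURIComponent()` as suggested above and leaves a bare Environment variable reference such as `$TECHSTACKS_DB` untouched.

```typescript
// Hypothetical helper: builds an app:// URL that opens SharpData against an RDBMS.
// Literal connection strings are URL encoded; a bare Environment variable
// reference like $TECHSTACKS_DB is passed through as-is.
const ENV_VAR_REF = /^\$[A-Za-z_][A-Za-z0-9_]*$/;

function sharpDataAppUrl(dialect: string, connection: string): string {
  const conn = ENV_VAR_REF.test(connection) ? connection : encodeURIComponent(connection);
  return `app://sharpdata?db=${encodeURIComponent(dialect)}&db.connection=${conn}`;
}
```

For instance, `sharpDataAppUrl("postgres", "Server=localhost;User Id=test;Database=techstacks")` encodes `=`, `;` and spaces as `%3D`, `%3B` and `%20`.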

In addition to being downloaded and run on the fly, Sharp Apps can also take advantage of the dotnet tool's mix support to download another Gist's content into the Sharp App's working directory.

With this you can publish a custom dataset in an SQLite database, save it as a gist and generate a single URL that everyone can use to download the database and open it in SharpData, e.g:

It's possible to use the user-friendly northwind.sqlite alias here as it's published in the global mix.md directory where it links to the northwind.sqlite gist.

For your custom databases you can use the Gist Id instead. If you plan to use this feature a lot, you can override which mix.md document app sources its links from by specifying another Gist Id in the MIX_SOURCE Environment variable (or see below to create a local alias).

But if you're already mixing in an external gist, you may as well include a custom app.settings in the Gist so it's pre-configured with custom RDBMS registrations and table lists, e.g:

Which applies the northwind.sharpdata gist, which can also be referenced by Gist Id:

Alternatively you may prefer to publish it to a private GitHub repo instead of a Gist, which anyone can open with:

app://user/sharpdata-private?token={TOKEN}

The app dotnet tool will use the latest published GitHub release if there is one, otherwise it will use the master.zip archive. This lets you maintain a working master repo while retaining control over when to publish new versions of your custom SharpData App.
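
The release-or-master fallback can be summarized in a small sketch. It is illustrative only: `resolveArchiveUrl()` and the `Release` type are hypothetical (the fields mirror a subset of what GitHub's REST API returns for a release). It assumes "latest published" means the newest release that is neither a draft nor a prerelease, and it doesn't show how the app tool authenticates with the `token` parameter.

```typescript
// Illustrative sketch of the rule described above: use the latest published
// GitHub release if one exists, otherwise fall back to the master.zip archive.
// Not the app tool's actual implementation.
interface Release {
  tag_name: string;
  zipball_url: string;
  draft: boolean;
  prerelease: boolean;
  published_at: string; // ISO 8601 UTC timestamp
}

function resolveArchiveUrl(owner: string, repo: string, releases: Release[]): string {
  // Assumption: "published" means neither a draft nor a prerelease
  const published = releases
    .filter((r) => !r.draft && !r.prerelease)
    // ISO 8601 UTC timestamps order correctly as plain strings; newest first
    .sort((a, b) =>
      a.published_at < b.published_at ? 1 : a.published_at > b.published_at ? -1 : 0
    );
  return published.length > 0
    ? published[0].zipball_url
    : `https://github.com/${owner}/${repo}/archive/master.zip`;
}
```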

app local aliases

Wherever you can use a Gist Id, you can assign a local user-friendly alias to use instead. So if you had a custom sqlite database and sharpdata app.settings, you could assign it to a local db alias with:

$ app alias db 0ce0d5b828303f1cb4637450b563adbd

Which you'll be able to use in place of the Gist Id, e.g. via command-line:

$ app open sharpdata mix db

or via URL Scheme:

app://sharpdata?mix=db

Likewise the gist alias can also be used for referencing Gist Desktop Apps, e.g. we can assign the redis gist app to use our preferred alias:

$ app alias local-redis 6de7993333b457445793f51f6f520ea8

That we can open via command-line:

$ app open local-redis

Or URL Scheme:

app://local-redis

Or if we want to run our own modified copy of the Redis Desktop App, we can mix the Gist files to our local directory:

$ app mix local-redis

Make the changes we want, then run our local copy by running app (or x) without arguments:

$ app

Other alias commands include:

View all aliases

$ app alias

View single alias

$ app alias mydb

Remove an alias

$ app unalias mydb

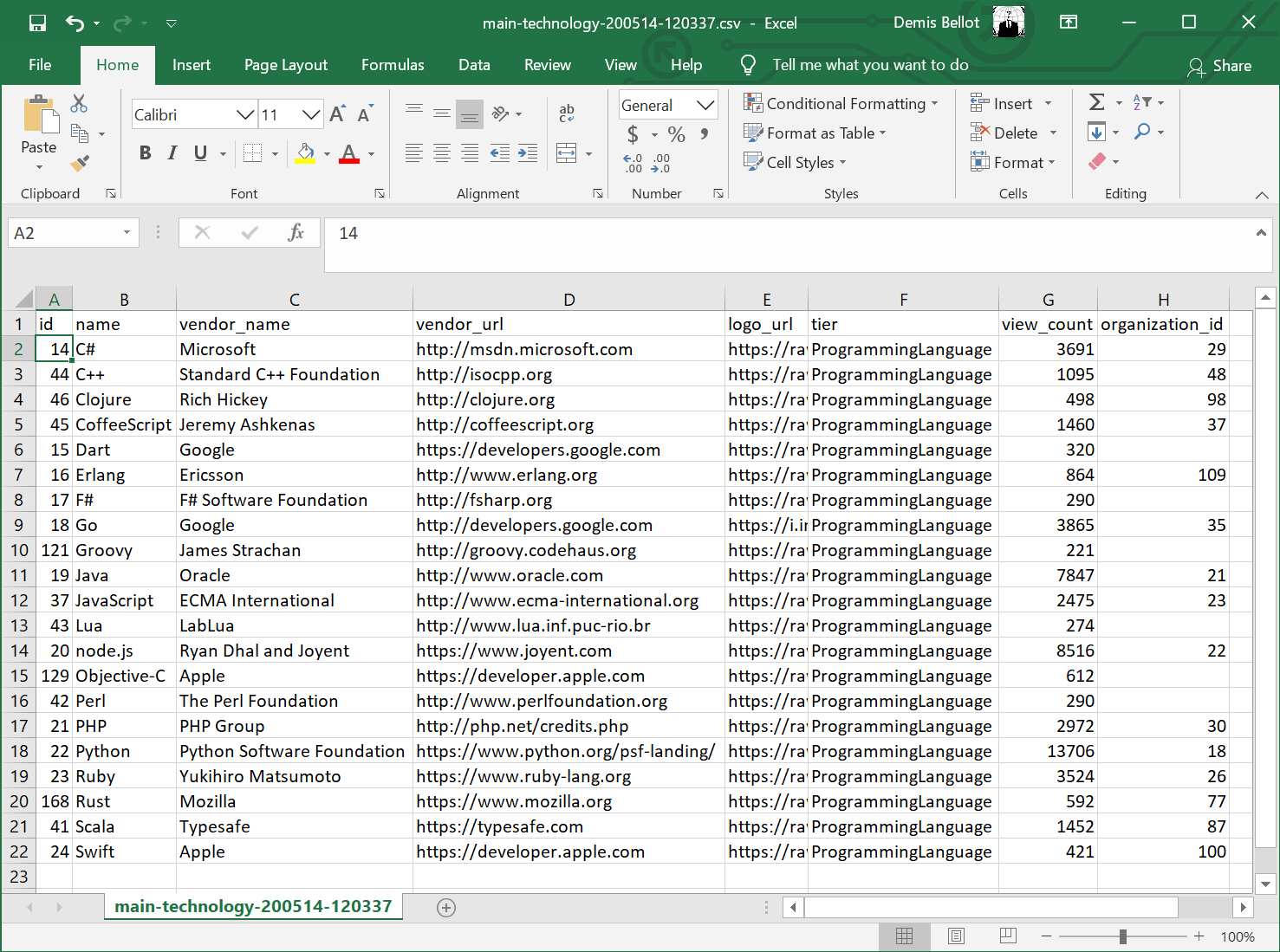

Open in Excel

SharpData detects if Excel is installed and lets you open the un-paged filtered resultset directly by clicking the Excel button.

This works seamlessly as it's able to bypass the browser download: the query is performed by the back-end .NET Core Server, which streams the response directly to the User's Downloads folder and launches it in Excel as soon as it's finished.

Custom SharpData UI

Each time a Gist Desktop App is opened it downloads and overrides the existing Gist with the latest version which it loads in a Gist VFS where any of its files can be overridden with a local copy.

As the App's working directory is preserved between restarts you can provide a custom app.settings at:

%USERPROFILE%\.sharp-apps\sharpdata\app.settings
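
The override behavior described above can be pictured as a layered (overlay) virtual file system, where the local working directory is consulted before the downloaded Gist. The sketch below is purely conceptual: `OverlayVfs` and its `Map`-based layers are hypothetical and are not ServiceStack's Gist VFS API.

```typescript
// Conceptual overlay VFS: layers are searched in order, so earlier layers
// (e.g. local files) override later ones (e.g. the downloaded Gist).
class OverlayVfs {
  private readonly layers: Map<string, string>[];

  constructor(layers: Map<string, string>[]) {
    this.layers = layers; // highest priority first
  }

  getFile(path: string): string | undefined {
    for (const layer of this.layers) {
      const content = layer.get(path);
      if (content !== undefined) return content;
    }
    return undefined;
  }

  // Union of all file paths across every layer, sorted
  listFiles(): string[] {
    const paths = new Set<string>();
    for (const layer of this.layers) layer.forEach((_content, path) => paths.add(path));
    return Array.from(paths).sort();
  }
}

const gistFiles = new Map<string, string>([
  ["app.settings", "debug false"],
  ["custom/northwind.js", "// UI customizations from the Gist"],
]);
const localFiles = new Map<string, string>([["app.settings", "debug true"]]);
const vfs = new OverlayVfs([localFiles, gistFiles]);
```

Here `vfs.getFile("app.settings")` returns the local `"debug true"`, while `custom/northwind.js`, which has no local copy, still comes from the Gist.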

Custom app.settings

Where you can perform basic customizations like which RDBMS's and tables you want to be able to access, e.g:

```
debug false
name Northwind & TechStacks UI
appName sharpdata

db.connections[northwind] { db:sqlite, connection:'northwind.sqlite' }
db.connections[techstacks] { db:postgres, connection:$TECHSTACKS_DB }

args.tables Customer,Order,OrderDetail,Category,Product,Employee,EmployeeTerritory,Shipper,Supplier,Region,Territory
args.tables_techstacks technology,technology_stack,technology_choice,organization,organization_member,post,post_comment,post_vote,custom_user_auth,user_auth_details,user_activity,page_stats
```

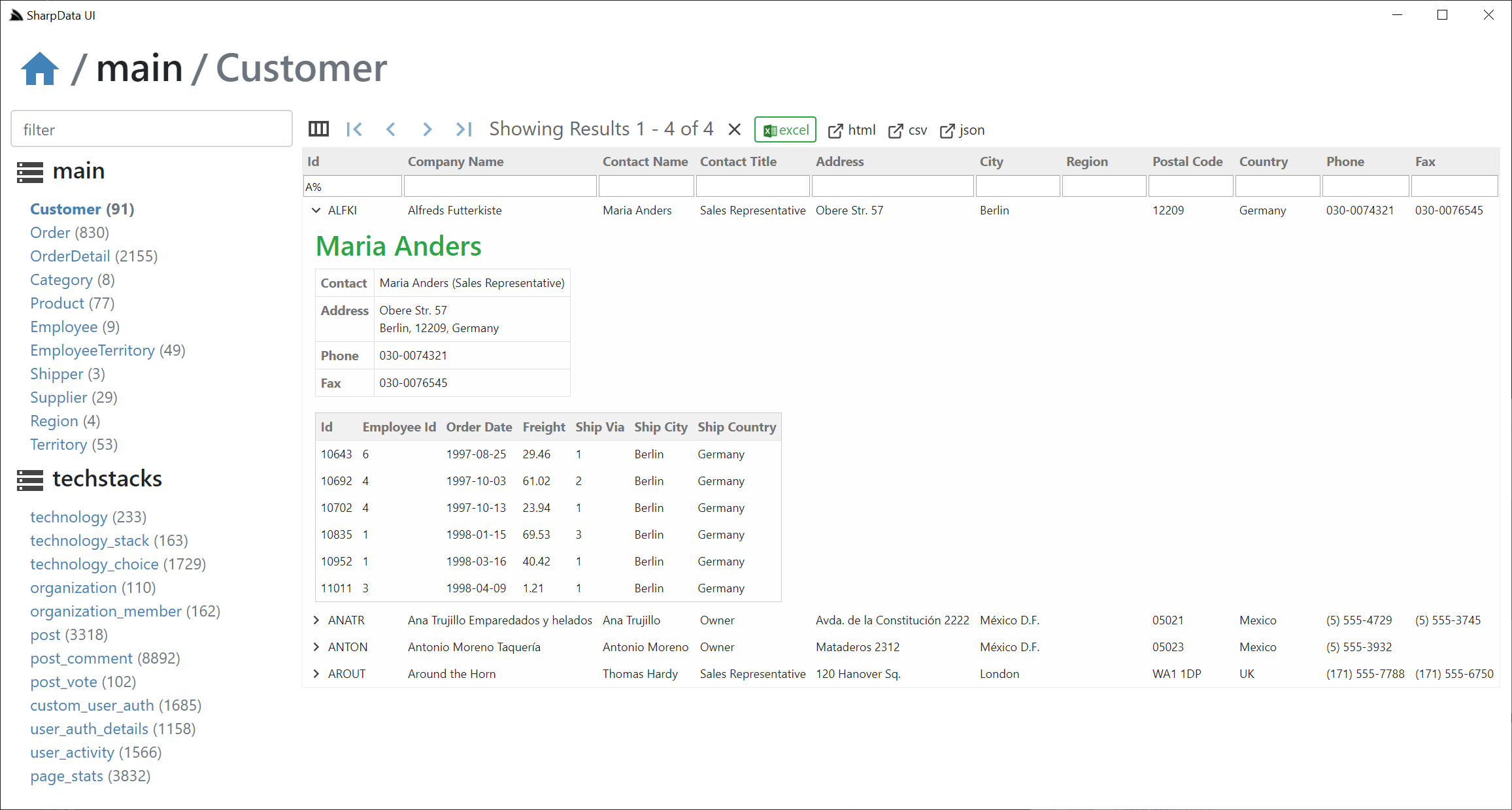

Which will display both RDBMS Databases, showing only the user-specified tables in app.settings above:

Advanced Customizations

More advanced customizations can be added by dropping TypeScript/JavaScript source files in the /custom folder. This is how the northwind.sharpdata and chinook.sharpdata mix gists enable Customized Views for the Northwind & Chinook databases, via their dbConfig registrations below:

chinook

```typescript
dbConfig('chinook', {
    showTables: 'albums,artists,playlists,tracks,genres,media_types,customers,employees,invoices'.split(','),
    tableName: splitPascalCase,
    links: {
        albums: {
            ArtistId: (id:number) => `artists?filter=ArtistId:${id}`
        },
        employees: {
            ReportsTo: (id:number) => `employees?filter=EmployeeId:${id}`
        },
        invoices: {
            CustomerId: (id:number) => `customers?filter=CustomerId:${id}`
        },
        tracks: {
            AlbumId: (id:number) => `albums?filter=AlbumId:${id}`,
            MediaTypeId: (id:number) => `media_types?filter=MediaTypeId:${id}`,
            GenreId: (id:number) => `genres?filter=GenreId:${id}`,
        }
    },
    rowComponents: {
        albums: Album,
        artists: Artist,
        playlists: Playlist,
    }
});
```

northwind

```typescript
dbConfig('northwind', {
    showTables: 'Customer,Order,OrderDetail,Category,Product,Employee,Shipper,Supplier,Region'.split(','),
    tableName: splitPascalCase,
    links: {
        Order: {
            CustomerId: (id:string) => `Customer?filter=Id:${id}`,
            EmployeeId: (id:string) => `Employee?filter=Id:${id}`,
            ShipVia: (id:number) => `Shipper?filter=Id:${id}`,
        },
        OrderDetail: {
            OrderId: (id:string) => `Order?filter=Id:${id}`,
            ProductId: (id:string) => `Product?filter=Id:${id}`,
        },
        Product: {
            SupplierId: (id:number) => `Supplier?filter=Id:${id}`,
            CategoryId: (id:number) => `Category?filter=Id:${id}`,
        },
        Territory: {
            RegionId: (id:number) => `Region?filter=Id:${id}`,
        },
    },
    rowComponents: {
        Order,
        Customer,
    }
});
```

These db customizations let you specify which RDBMS tables to display & in which order, the function used to format table names, which columns to linkify & any custom Row Components for different tables.
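
To illustrate how such a configuration can drive the UI, the sketch below resolves a cell's deep link from a `links` map and applies `showTables` ordering with a table-name formatter. The `DbConfig` type, `linkFor()`, `visibleTables()` and `splitPascalCaseSketch()` are hypothetical stand-ins written for this example, not SharpData's implementation of `dbConfig` or `splitPascalCase`.

```typescript
// Hypothetical shape of a dbConfig registration, mirroring the options above.
type LinkFn = (id: any) => string;

interface DbConfig {
  showTables?: string[];
  tableName?: (name: string) => string;
  links?: Record<string, Record<string, LinkFn>>;
}

// Stand-in for a PascalCase splitter: "OrderDetail" -> "Order Detail"
function splitPascalCaseSketch(name: string): string {
  return name.replace(/([a-z0-9])([A-Z])/g, "$1 $2");
}

// Returns the relative deep link for a cell, or null if the column isn't linkified
function linkFor(config: DbConfig, table: string, column: string, value: unknown): string | null {
  const byColumn = config.links ? config.links[table] : undefined;
  const fn = byColumn ? byColumn[column] : undefined;
  return fn && value != null ? fn(value) : null;
}

// showTables controls both which tables are listed and their order
function visibleTables(config: DbConfig, allTables: string[]): string[] {
  const names = config.showTables
    ? config.showTables.filter((t) => allTables.indexOf(t) >= 0)
    : allTables;
  const label = config.tableName || ((n: string) => n);
  return names.map((n) => label(n));
}
```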

Deploying Customizations

When deploying as a .NET Core project the customizations are deployed with your /wwwroot as normal.

To make customizations available to load with the SharpData Gist Desktop App you'll need to publish the directory of customizations to a gist. Here are the customizations for the northwind.sharpdata and chinook.sharpdata gists:

/dist-mix

- /chinook
  - /custom
    - chinook.js - UI Customizations
  - app.settings - Custom App Settings
  - chinook.sqlite - Embedded SQLite database
- /northwind
  - /custom
    - northwind.js - UI Customizations
  - app.settings - Custom App Settings
  - northwind.sqlite - Embedded SQLite database

You can publish a directory of files to a GitHub Gist using the x publish command with a GitHub AccessToken that has write access to the gist you want to write to, e.g:

$ cd northwind

$ x publish -token %TOKEN%

Viewing Customizations

When published these Gist Customizations can be viewed by gist id directly or by a user friendly gist mix or local alias:

- app://sharpdata?mix=0ce0d5b828303f1cb4637450b563adbd

- app://sharpdata?mix=96b10369daf94897531810841cb097f2

Custom Row Components

Whilst a tabular grid view might be a natural UI for browsing a database for devs, we can do better since we have the full UI source code of the Vue components. A filtered tabular view makes it fast to find the record you're interested in, but it's not ideal for quickly finding related information about an Entity.

To provide a more customized UX for different App UIs, SharpData includes support for "Row Components" (defined in /wwwroot/custom) to be able to quickly drill down & view richer info on any record.

For example when viewing an Order, it's natural to want to view the Order Details with it, enabled with the custom Vue component registration below:

```typescript
@Component({ template:
    `<div v-if="id">
        <jsonviewer :value="details" />
    </div>
    <div v-else class="alert alert-danger">Order Id needs to be selected</div>`
})
class Order extends RowComponent {
    details:any[] = [];

    get id() { return this.row.Id; }

    async mounted() {
        this.details = await sharpData(this.db,'OrderDetail',{ OrderId: this.id });
    }
}
```

All Row components are injected with the db and table properties, the entire row object that was selected, as well as the Column Schema definition for that table. Inside the component you're free to display anything; in this case we're using the sharpData helper to call the server #Script HTTP API and fetch all OrderDetail entries for this order.
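
Outside of SharpData's own UI, the same HTTP API could be called with a small helper shaped like `sharpData(db, table, args)`. The sketch below is hypothetical and is not SharpData's helper. It assumes the API returns JSON when `format=json` is passed, and it takes the fetch function as a parameter, so it can use the browser's `fetch` or a test double.

```typescript
// Minimal response shape this sketch relies on (satisfied by the Fetch API's Response)
interface ResponseLike {
  ok: boolean;
  status: number;
  json(): Promise<unknown>;
}
type FetchLike = (url: string) => Promise<ResponseLike>;

// Hypothetical sharpData-style helper: queries /db/<db>/<table> with filter args
async function sharpDataSketch(
  fetchFn: FetchLike,
  db: string,
  table: string,
  args: Record<string, string | number> = {}
): Promise<any[]> {
  const query = ["format=json"].concat(
    Object.keys(args).map((k) => `${encodeURIComponent(k)}=${encodeURIComponent(String(args[k]))}`)
  );
  const url = `/db/${encodeURIComponent(db)}/${encodeURIComponent(table)}?${query.join("&")}`;
  const res = await fetchFn(url);
  if (!res.ok) throw new Error(`SharpData request failed (${res.status}): ${url}`);
  return (await res.json()) as any[];
}
```

In a browser this might be called as `await sharpDataSketch((url) => fetch(url), "main", "OrderDetail", { OrderId: 10248 })`.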

If the resultset is filtered to exclude the Order's Id PK column, it can't fetch its referenced data, so it displays an error instead.

The jsonviewer component used is similar to ServiceStack's HTML5 auto pages to quickly view contents of any object.

The registerRowComponent(db,table,VueComponent,componentName) API is used to register this component with SharpData to make it available to render any order.

With the Order component registered we can now drill down into any Order to view its Order Details:

You're free to render any kind of UI in the row component, e.g. here's the Customer.ts row component used to render a richer view for Customers:

```typescript
@Component({ template:
    `<div v-if="id" class="pl-2">
        <h3 class="text-success">{{customer.ContactName}}</h3>
        <table class="table table-bordered" style="width:auto">
            <tr>
                <th>Contact</th>
                <td>{{ customer.ContactName }} ({{ customer.ContactTitle }})</td>
            </tr>
            <tr>
                <th>Address</th>
                <td>
                    <div>{{ customer.Address }}</div>
                    <div>{{ customer.City }}, {{ customer.PostalCode }}, {{ customer.Country }}</div>
                </td>
            </tr>
            <tr>
                <th>Phone</th>
                <td>{{ customer.Phone }}</td>
            </tr>
            <tr v-if="customer.Fax">
                <th>Fax</th>
                <td>{{ customer.Fax }}</td>
            </tr>
        </table>
        <jsonviewer :value="orders" />
    </div>
    <div v-else class="alert alert-danger">Customer Id needs to be selected</div>`
})
class Customer extends RowComponent {
    customer:any = null;
    orders:any[] = [];

    get id() { return this.row.Id; }

    async mounted() {
        this.customer = (await sharpData(this.db,this.table,{ Id: this.id }))[0];
        const fields = 'Id,EmployeeId,OrderDate,Freight,ShipVia,ShipCity,ShipCountry';
        this.orders = await sharpData(this.db,'Order',{ CustomerId: this.id, fields });
    }
}
```

Which looks like:

SharpData .NET Core Project

Whilst NetCoreApps/SharpData can live a charmed life as a Desktop App, it's also just a regular ServiceStack .NET Core App with a Startup.cs and AppHost that can be developed, published and deployed as you're used to. Here's an instance of it deployed as a .NET Core App on Linux:

sharpdata.netcore.io

For the best experience we recommend running against local network databases.

It's a unique ServiceStack App in that it doesn't contain any ServiceStack Services. It only uses functionality already built into ServiceStack, with #Script for its HTTP APIs and a Vue SPA for its UI, so no .dll's need to be deployed with it.

It uses the same Vue SPA solution as vue-lite to avoid npm's size & complexity: you only need to run TypeScript's tsc -w to enable its live-reload dev UX, which provides instant feedback during development.

Another of its unique traits is that instead of manually including all the Vue framework .js libraries, it references the new ServiceStack.Desktop.dll for its Vue framework libraries and Material Design SVG icons, which are referenced as normal file references:

```html
{{ [
    `/lib/js/vue/vue.min.js`,
    `/lib/js/vue-router/vue-router.min.js`,
    `/lib/js/vue-class-component/vue-class-component.min.js`,
    `/lib/js/vue-property-decorator/vue-property-decorator.min.js`,
    `/lib/js/@servicestack/desktop/servicestack-desktop.min.js`,
    `/lib/js/@servicestack/client/servicestack-client.min.js`,
    `/lib/js/@servicestack/vue/servicestack-vue.min.js`,
] |> map => `<script src="${it}"></script>` |> joinln |> raw }}
```
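If the #Script pipeline syntax is unfamiliar, the TypeScript sketch below shows roughly what the expression produces. Each path is mapped into a script tag, and the tags are joined with newlines, as joinln does. The scriptTags name is purely illustrative. raw has no equivalent here, since it only stops #Script from HTML-encoding the output.

```typescript
// Hypothetical helper mirroring the #Script pipeline above
function scriptTags(paths: string[]): string {
  return paths
    .map(src => `<script src="${src}"></script>`) // like `map => ...`
    .join("\n");                                  // like `joinln`: one tag per line
}

const tags = scriptTags([
  "/lib/js/vue/vue.min.js",
  "/lib/js/vue-router/vue-router.min.js",
]);
console.log(tags);
```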

But instead of needing to exist on disk & be deployed with your project, these files are served from the embedded resources in ServiceStack.Desktop.dll. Only the bundled assets need to be deployed with your project. They're produced by the built-in NUglify support in the dotnet tools, which creates a highly optimized/minified bundle without relying on any npm tooling when publishing the .NET Core App:

```xml
<Target Name="Bundle" BeforeTargets="AfterPublish">
    <Exec Command="x run _bundle.ss -to /bin/Release/netcoreapp3.1/publish/wwwroot" />
</Target>
```

The included /typings are just the TypeScript definitions for each library which TypeScript uses for its static analysis & its great dev UX in IDEs & VSCode, but are only needed during development and not deployed with the project.

Publish to Gist Desktop App

The primary way SharpData is distributed is as a Gist Desktop App, where it provides instant utility by running on a user's local machine inside a native Chromium Desktop App. This makes it suitable for a much broader use-case as a fast, lightweight, always up-to-date Desktop App with deeper Windows integration, all packaged in a tiny 20kb .zip footprint. There's no need to provision servers, set up CI or manage cloud hosting resources. You can simply run a script to update a Gist, and its latest features are immediately available to your end users the next time it's run.

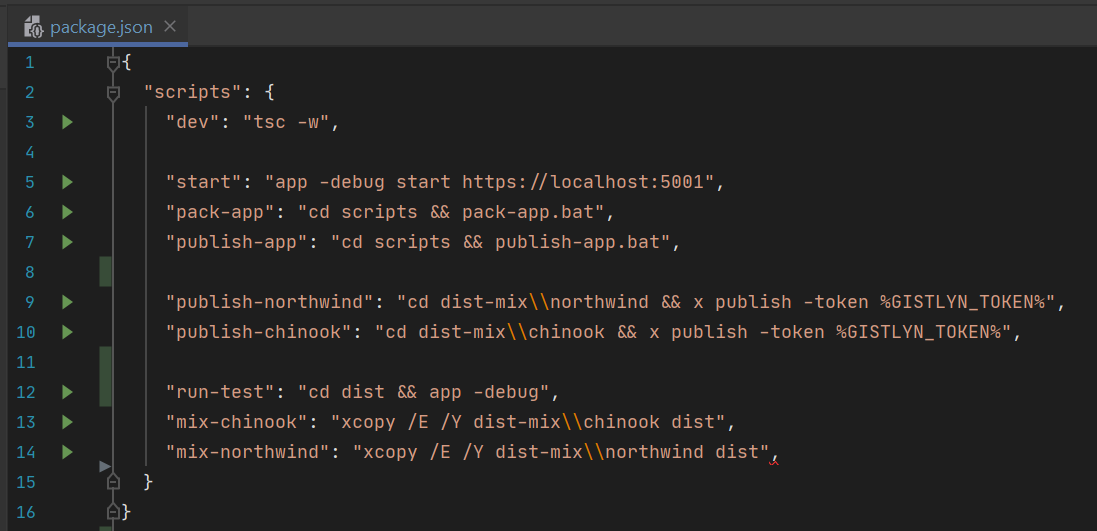

To run, test & publish it as a Desktop App you can use the pre-made scripts in package.json. Rider provides a nice UX here as it lets you run each individual script directly from its JSON editor:

Essentially, to package it into a Sharp App you just need to run the pack script, which will bundle & copy all required assets into the /dist folder. You can then test it locally in a .NET Core Desktop App by running app in that folder:

```bash
$ cd dist
$ app
```

The init-test script just copies an example northwind.sqlite database and sample app.settings so you have something to test it with if you need it.

The publish-app script is for publishing it to a Gist, which requires a GitHub AccessToken with write access to the Gist User Account you want to publish it to. Adding an appName and description to app.settings will publish it to the Global App Registry. This makes it publicly discoverable and allows anyone to open your App using your user-friendly appName alias; otherwise they can run it using the Gist Id or Gist URL.

Alternatively the contents of the dist/ folder can be published to a GitHub repo (public or private) and run with:

```bash
$ app open <user>/<repo>
```

Or link to it with its custom URL Scheme:

```
app://<user>/<repo>
```

If it's in a private repo, users will need to either provide an AccessToken in the GITHUB_TOKEN Environment variable or use the -token argument:

```bash
$ app open <user>/<repo> -token <token>
```

Or with the URL Scheme:

```
app://<user>/<repo>?token=<token>
```

RDBMS Configuration

When running as a .NET Core App you'd need to register which RDBMS's you want to use with OrmLite's configuration. For example, the screenshot above uses an SQLite northwind.sqlite database and the https://techstacks.io PostgreSQL Database, which are registered with:

```csharp
container.Register<IDbConnectionFactory>(c => new OrmLiteConnectionFactory(
    MapProjectPath("~/northwind.sqlite"), SqliteDialect.Provider));

var dbFactory = container.Resolve<IDbConnectionFactory>();
dbFactory.RegisterConnection("techstacks",
    Environment.GetEnvironmentVariable("TECHSTACKS_DB"),
    PostgreSqlDialect.Provider);
```

By default it shows all Tables in each RDBMS, but you can limit it to only show a user-defined list of tables with #Script Arguments:

```csharp
Plugins.Add(new SharpPagesFeature {
    //...
    Args = {
        //Only display user-defined list of tables:
        ["tables"] = "Customer,Order,OrderDetail,Category,Product,Employee,EmployeeTerritory,Shipper,Supplier,Region,Territory",
        ["tables_techstacks"] = "technology,technology_stack,technology_choice,organization,organization_member,post,post_comment,post_vote,custom_user_auth,user_auth_details,user_activity,page_stats",
    }
});
```

When running as a Sharp App it's instead configured in its app.settings. Here are the equivalent settings for the above configuration:

```
# Configure below. Supported dialects: sqlite, mysql, postgres, sqlserver
db sqlite
db.connection northwind.sqlite
# db.connections[techstacks] { db:postgres, connection:$TECHSTACKS_DB }

args.tables Customer,Order,OrderDetail,Category,Product,Employee,EmployeeTerritory,Shipper,Supplier,Region,Territory
args.tables_techstacks technology,technology_stack,technology_choice,organization,organization_member,post,post_comment,post_vote,custom_user_auth,user_auth_details,user_activity,page_stats
```

Feedback

We hope SharpData proves useful in some capacity. It can help you quickly develop and ship a UI to stakeholders, or serve as a template for .NET Core Apps that you can distribute as Sharp Apps. It's also an example for exploring the delivery and platform potential of URL schemes and install-less Desktop Apps, or simply inspiration for areas where #Script shines & the different kinds of Apps you can create with it.

Whilst app is Windows 64 only, you can use the cross-platform x tool and its xapp:// URL scheme to run Sharp Apps on macOS/Linux; they just won't have access to any of its Windows Integration features.

AutoQuery CRUD!

AutoQuery Services gain new declarative superpowers that let them implement much of a CRUD Service's logic declaratively. This includes support for multi-tenancy, optimistic concurrency, declarative validation, and Auto Mapping external Request/Response DTO properties to data model properties. It also includes auto populating properties using full #Script Expressions, which can be used, for example, to populate timestamps, authenticated user information, new UUIDs, etc.

Just like AutoQuery, CRUD Services are ServiceStack Services where you can continue using the same functionality to specify optimal user-defined routes for HTTP APIs, same Request/Response and Attribute filters to apply custom logic and continue enjoying the entire ecosystem around ServiceStack Services including being able to invoke them via gRPC, MQ endpoints and its rich client ecosystem for enabling end-to-end Typed APIs with Add ServiceStack Reference.

AutoQuery Services are fast & emit a clean, optimal "pure serialized POCO" wire-format. They're built on OrmLite's high-performance APIs, and all AutoQuery APIs are async by default. They also offer native sync APIs if you need to enlist any of AutoQuery's functionality in custom sync methods (that are unable to be converted into viral async APIs).

Importantly, AutoQuery Services are "future-proofed" and can be overridden with a custom implementation. That implementation can either augment the existing AutoQuery functionality with custom behavior (e.g. if it's not possible to implement declaratively), or replace it entirely. A full replacement doesn't break the Service's design contract & existing client integrations, should the Service later need to be reimplemented to use alternative data sources.

Limitations of typical Auto querying Solutions

This is ultimately where many auto querying solutions fall down. They're typically executed by black-box binary implementations that only understand opaque query languages normal Services wouldn't support, are exposed on unnatural routes you wouldn't use, and return unclean, verbose wire formats normal Services wouldn't return. So when you need to replace their implementation-specific APIs, it's often not feasible to reverse engineer a new implementation that matches the existing Service contract. Instead you'd need to resort to creating a new incompatible API, breaking existing clients and violating the System's encapsulation, which should be one of the core goals of Service design.

Creating AutoQuery CRUD Services

Just like AutoQuery, you only need to provide the typed Request DTO definitions for your DB Table APIs and AutoQuery automatically provides the implementation for the Service.

To enlist Auto CRUD behavior your Request DTOs need to implement one of the following interfaces which dictates the behavior of the Service:

- ICreateDb<Table> - Create new Table Entry
- IUpdateDb<Table> - Update existing Table Entry
- IPatchDb<Table> - Partially update existing Table Entry
- IDeleteDb<Table> - Delete existing Table Entry

All Request DTOs also require either an IReturn<T> or IReturnVoid marker interface to specify the return type of the Service.

You can use the built-in IReturn<EmptyResponse> for an "empty" response, whereas IReturnVoid returns "no" response.
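To make the difference between the four interfaces concrete, here's a small in-memory TypeScript model of them. It's a sketch under one stated assumption: an Update writes every field its Request DTO defines, so unset fields become null, while a Patch writes only the fields the caller supplied. The Table class and its method names are hypothetical and not part of ServiceStack.

```typescript
// Illustrative in-memory model of the four AutoQuery CRUD behaviors (not ServiceStack code)
type Row = Record<string, unknown>;

class Table {
  private rows = new Map<number, Row>();
  private nextId = 1;

  // ICreateDb: insert a new row, generating an Id like an [AutoIncrement] column
  create(fields: Row): number {
    const id = this.nextId++;
    this.rows.set(id, { ...fields, Id: id });
    return id;
  }

  // IUpdateDb (assumed semantics): write every field the Request DTO defines,
  // so fields the caller left unset are overwritten with null
  update(id: number, dtoFields: string[], values: Row): void {
    const row = this.get(id);
    for (const field of dtoFields) row[field] = values[field] ?? null;
  }

  // IPatchDb: write only the fields the caller actually supplied
  patch(id: number, values: Row): void {
    const row = this.get(id);
    for (const [field, value] of Object.entries(values)) {
      if (value !== undefined) row[field] = value;
    }
  }

  // IDeleteDb: remove the row, returning the number of rows affected
  remove(id: number): number {
    return this.rows.delete(id) ? 1 : 0;
  }

  get(id: number): Row {
    const row = this.rows.get(id);
    if (!row) throw new Error(`Row ${id} not found`);
    return row;
  }
}

const table = new Table();
const id = table.create({ FirstName: "Kurt", LastName: "Cobain", Age: 27 });

table.patch(id, { LastName: "Patched" });  // FirstName and Age are untouched
const afterPatch = { ...table.get(id) };

table.update(id, ["FirstName", "LastName", "Age"], { FirstName: "Kurt" });
const afterUpdate = { ...table.get(id) }; // LastName and Age are now null
```

The Patch vs Update split is why IPatchDb suits forms that only send changed fields, while IUpdateDb suits clients that always send the complete entity.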

Let's go through a simple example, starting with a simple POCO OrmLite data model we want to add to our RDBMS:

```csharp
public class Rockstar
{
    [AutoIncrement]
    public int Id { get; set; }
    public string FirstName { get; set; }
    public string LastName { get; set; }
    public int? Age { get; set; }
    public DateTime DateOfBirth { get; set; }
    public DateTime? DateDied { get; set; }
    public LivingStatus LivingStatus { get; set; }
}
```

We can create a Service that inserts a new Rockstar by defining all the properties we want to allow API consumers to provide when creating a new Rockstar:

```csharp
public class CreateRockstar : ICreateDb<Rockstar>, IReturn<CreateRockstarResponse>
{
    public string FirstName { get; set; }
    public string LastName { get; set; }
    public int? Age { get; set; }
    public DateTime DateOfBirth { get; set; }
}

public class CreateRockstarResponse
{
    public int Id { get; set; } // Id is auto populated with RDBMS generated Id
    public ResponseStatus ResponseStatus { get; set; }
}
```

When ServiceStack starts it generates the implementation for this Service, which can now insert Rockstars using your populated Request DTO:

```csharp
var client = new JsonServiceClient(baseUrl);

client.Post(new CreateRockstar {
    FirstName = "Kurt",
    LastName = "Cobain",
    Age = 27,
    DateOfBirth = new DateTime(1967,2,20), // DateTime(year, month, day)
});
```

Similarly you can define "Update" and "Delete" Services the same way, e.g:

```csharp
public class UpdateRockstar : Rockstar,
    IUpdateDb<Rockstar>, IReturn<UpdateRockstarResponse> {}

public class UpdateRockstarResponse
{
    public int Id { get; set; }          // Primary Key of the updated Rockstar
    public Rockstar Result { get; set; } // selects & returns latest DB Rockstar
    public ResponseStatus ResponseStatus { get; set; }
}
```

By convention if your Response DTO contains any of these properties it will be automatically populated:

- T Id - The Primary Key
- T Result - The POCO you want to return (can be a subset of DB model)
- int Count - Return the number of rows affected (Deletes can have >1)
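The response conventions above can be modeled in a few lines of TypeScript. This sketch fills Id, Result and Count only when the Response DTO declares them, mirroring the rule that only properties present on the Response DTO are populated. The populateResponse and CrudOutcome names are hypothetical, and this is not ServiceStack's implementation.

```typescript
// Hypothetical Response DTOs; fields are initialized so they exist at runtime
class CreateRockstarResponse { Id = 0; }
class UpdateRockstarResponse { Id = 0; Result: Record<string, unknown> | null = null; }
class DeleteRockstarResponse { Count = 0; }

interface CrudOutcome {
  id: number;                   // Primary Key of the affected row
  row: Record<string, unknown>; // latest version of the row
  rowsAffected: number;
}

// Populate Id, Result and Count only when the Response DTO declares them
function populateResponse<T extends object>(response: T, outcome: CrudOutcome): T {
  const r = response as unknown as Record<string, unknown>;
  if ("Id" in r) r.Id = outcome.id;
  if ("Result" in r) r.Result = outcome.row;
  if ("Count" in r) r.Count = outcome.rowsAffected;
  return response;
}

const outcome: CrudOutcome = { id: 7, row: { Id: 7, FirstName: "Kurt" }, rowsAffected: 1 };
const created = populateResponse(new CreateRockstarResponse(), outcome);
const updated = populateResponse(new UpdateRockstarResponse(), outcome);
const deleted = populateResponse(new DeleteRockstarResponse(), outcome);
```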

Delete Services need only a Primary Key, e.g:

```csharp
public class DeleteRockstar : IDeleteDb<Rockstar>, IReturnVoid
{
    public int Id { get; set; }
}
```

And to query the Rockstar table you have the full feature set of AutoQuery, giving you a complete set of CRUD Services without needing to provide any implementations.

Advanced CRUD Example

Let's now explore a more advanced example that implements Audit information as well as layered support for multi-tenancy, to see how you can easily compose features.

Let's say you have an interface that all tables containing Audit information implement:

```csharp
public interface IAudit
{
    DateTime CreatedDate { get; set; }
    string CreatedBy { get; set; }
    string CreatedInfo { get; set; }
    DateTime ModifiedDate { get; set; }
    string ModifiedBy { get; set; }
    string ModifiedInfo { get; set; }
    DateTime? SoftDeletedDate { get; set; }
    string SoftDeletedBy { get; set; }
    string SoftDeletedInfo { get; set; }
}
```

It's not required, but it's also useful to have a concrete base table which could be annotated like:

```csharp
public abstract class AuditBase : IAudit
{
    public DateTime CreatedDate { get; set; }
    [Required]
    public string CreatedBy { get; set; }
    [Required]
    public string CreatedInfo { get; set; }

    public DateTime ModifiedDate { get; set; }
    [Required]
    public string ModifiedBy { get; set; }
    [Required]
    public string ModifiedInfo { get; set; }

    [Index] //Check if Deleted
    public DateTime? SoftDeletedDate { get; set; }
    public string SoftDeletedBy { get; set; }
    public string SoftDeletedInfo { get; set; }
}
```

We can then create a base Request DTO that all Audit Create Services will implement:

```csharp
[ValidateIsAuthenticated]
[AutoPopulate(nameof(IAudit.CreatedDate), Eval = "utcNow")]
[AutoPopulate(nameof(IAudit.CreatedBy), Eval = "userAuthName")] //or userAuthId
[AutoPopulate(nameof(IAudit.CreatedInfo), Eval = "`${userSession.DisplayName} (${userSession.City})`")]
[AutoPopulate(nameof(IAudit.ModifiedDate), Eval = "utcNow")]
[AutoPopulate(nameof(IAudit.ModifiedBy), Eval = "userAuthName")] //or userAuthId
[AutoPopulate(nameof(IAudit.ModifiedInfo), Eval = "`${userSession.DisplayName} (${userSession.City})`")]
public abstract class CreateAuditBase<Table,TResponse> : ICreateDb<Table>, IReturn<TResponse> {}
```

These all call #Script Methods which you can add/extend yourself.

The *Info properties are a superfluous example showing that you can evaluate any #Script expression. Typically you'd only save the User Id or Username.

AutoPopulate

The [AutoPopulate] attribute tells AutoCrud that you want the DB Table to automatically populate these properties, which can be populated using any of the following attribute properties:

- Value - A constant value that can be used in C# Attributes, e.g. Value = "Foo"
- Expression - A lightweight #Script Expression that results in a constant value that's only evaluated once and cached globally, e.g. Expression = "date(2001,1,1)". It's useful for values that can't be defined in C# Attributes, like DateTime, and can be any #Script Method.
- Eval - A #Script Expression that's cached per request, e.g. Eval = "utcNow" calls the utcNow Script method, which returns DateTime.UtcNow. The result is cached for that request, so all other utcNow expressions will return the same exact value.
- NoCache - Don't cache the expression, evaluate it each time.
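The difference between these options is mostly about caching. The TypeScript sketch below models it with a global cache for Expression, a per-request cache for Eval, and no cache for NoCache. The evaluate callback is a hypothetical stand-in for running a #Script expression. Parse (AST) caching isn't modeled, and none of these names are ServiceStack APIs.

```typescript
type PopulateMode = "Value" | "Expression" | "Eval" | "NoCache";

// Shared by every request: `Expression` results are evaluated once and cached globally
const globalCache = new Map<string, unknown>();

// Per-request state: `Eval` results are cached here for the lifetime of one request
class RequestContext {
  readonly items = new Map<string, unknown>();
}

// `source` is the constant (Value) or the expression text; `evaluate` stands in
// for running that #Script expression
function resolve(mode: PopulateMode, source: string, evaluate: () => unknown, req: RequestContext): unknown {
  switch (mode) {
    case "Value":
      return source; // constant returned as-is
    case "Expression":
      if (!globalCache.has(source)) globalCache.set(source, evaluate());
      return globalCache.get(source);
    case "Eval":
      if (!req.items.has(source)) req.items.set(source, evaluate());
      return req.items.get(source);
    case "NoCache":
      return evaluate();
  }
}

let calls = 0;
const utcNow = () => ++calls; // a different result on every call, like a clock

const req1 = new RequestContext();
const a = resolve("Eval", "utcNow", utcNow, req1);
const b = resolve("Eval", "utcNow", utcNow, req1);    // same request: cached, equals a
const req2 = new RequestContext();
const c = resolve("Eval", "utcNow", utcNow, req2);    // new request: re-evaluated
const d = resolve("NoCache", "utcNow", utcNow, req2); // never cached

const e1 = resolve("Expression", "date(2001,1,1)", () => "2001-01-01", req1);
const e2 = resolve("Expression", "date(2001,1,1)", () => "ignored", req2); // global cache hit
```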

AutoCrud makes extensive use of #Script expressions for much of its declarative functionality. It always executes their cached ASTs, so expressions are only parsed once and are still fast to evaluate even when their results are not cached.

Let's now layer on additional generic functionality by inheriting and extending the base class. For example, if we want our table to support Multitenancy we could extend it with:

```csharp
[AutoPopulate(nameof(IAuditTenant.TenantId), Eval = "Request.Items.TenantId")]
public abstract class CreateAuditTenantBase<Table,TResponse>
    : CreateAuditBase<Table,TResponse> {}
```

Where TenantId is added in a Global Request Filter (e.g. after inspecting the authenticated UserSession to determine the tenant they belong to), e.g:

```csharp
const string TenantId = nameof(TenantId);

void SetTenant(IRequest req, IResponse res, object dto)
{
    var userSession = req.SessionAs<AuthUserSession>();
    if (userSession.IsAuthenticated)
    {
        req.SetItem(TenantId, userSession.City switch {
            "London" => 10,
            "Perth" => 11,
            //...
            _ => 100,
        });
    }
}

GlobalRequestFilters.Add(SetTenant);        // HTTP Requests
GlobalMessageRequestFilters.Add(SetTenant); // MQ Requests
```

Now we can easily implement custom "Audited" and "Multi Tenant" CRUD Services by inheriting these base Services.

Here's an example of our custom Table that implements our AuditBase class with a TenantId to capture the Tenant the record should be saved to:

```csharp
public class RockstarAuditTenant : AuditBase
{
    [Index]
    public int TenantId { get; set; }
    [AutoIncrement]
    public int Id { get; set; }
    public string FirstName { get; set; }
    public string LastName { get; set; }
    public int? Age { get; set; }
    public DateTime DateOfBirth { get; set; }
    public DateTime? DateDied { get; set; }
    public LivingStatus LivingStatus { get; set; }
}
```

Our service can now implement our base Audit & Multitenant enabled service:

```csharp
public class CreateRockstarAuditTenant
    : CreateAuditTenantBase<RockstarAuditTenant, CreateRockstarResponse>
{
    public string FirstName { get; set; }
    public string LastName { get; set; }
    public int? Age { get; set; }
    public DateTime DateOfBirth { get; set; }
}
```

And all the decorated properties will be automatically populated when creating the Rockstar with CreateRockstarAuditTenant, e.g:

```csharp
client.Post(new CreateRockstarAuditTenant {
    FirstName = "Kurt",
    LastName = "Cobain",
    Age = 27,
    DateOfBirth = new DateTime(1967,2,20), // DateTime(year, month, day)
});
```

We can create the same base classes for Updates:

```csharp
[ValidateIsAuthenticated]
[AutoPopulate(nameof(IAudit.ModifiedDate), Eval = "utcNow")]
[AutoPopulate(nameof(IAudit.ModifiedBy), Eval = "userAuthName")] //or userAuthId
[AutoPopulate(nameof(IAudit.ModifiedInfo), Eval = "`${userSession.DisplayName} (${userSession.City})`")]
public abstract class UpdateAuditBase<Table,TResponse>
    : IUpdateDb<Table>, IReturn<TResponse> {}

[AutoFilter(nameof(IAuditTenant.TenantId), Eval="Request.Items.TenantId")]
public abstract class UpdateAuditTenantBase<Table,TResponse>
    : UpdateAuditBase<Table,TResponse> {}

public class UpdateRockstarAuditTenant
    : UpdateAuditTenantBase<RockstarAuditTenant, RockstarWithIdResponse>
{
    public int Id { get; set; }
    public string FirstName { get; set; }
    public LivingStatus? LivingStatus { get; set; }
}
```

Note the [AutoPopulate] properties only appear on the Data Model, not the external Request DTO, since we don't want external API consumers to populate them.

For Apps that prefer to never delete rows and instead mark records as deleted so an audit trail is retained, we can implement "Soft Deletes" using an UPDATE to populate the SoftDelete* fields behind-the-scenes:

```csharp
[ValidateIsAuthenticated]
[AutoPopulate(nameof(IAudit.SoftDeletedDate), Eval = "utcNow")]
[AutoPopulate(nameof(IAudit.SoftDeletedBy), Eval = "userAuthName")] //or userAuthId
[AutoPopulate(nameof(IAudit.SoftDeletedInfo), Eval = "`${userSession.DisplayName} (${userSession.City})`")]
public abstract class SoftDeleteAuditBase<Table,TResponse>
    : IUpdateDb<Table>, IReturn<TResponse> {}

[AutoFilter(QueryTerm.Ensure, nameof(IAuditTenant.TenantId), Eval = "Request.Items.TenantId")]
public abstract class SoftDeleteAuditTenantBase<Table,TResponse>
    : SoftDeleteAuditBase<Table,TResponse> {}

public class SoftDeleteAuditTenant
    : SoftDeleteAuditTenantBase<RockstarAuditTenant, RockstarWithIdResponse>
{
    public int Id { get; set; }
}
```

To implement a "Real" permanently destructive DELETE you would instead implement IDeleteDb<T>:

```csharp
[ValidateIsAuthenticated]
[AutoFilter(QueryTerm.Ensure, nameof(IAuditTenant.TenantId), Eval = "Request.Items.TenantId")]
public class RealDeleteAuditTenant
    : IDeleteDb<RockstarAuditTenant>, IReturn<RockstarWithIdResponse>
{
    public int Id { get; set; }
    public int? Age { get; set; }
}
```
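Here's a small in-memory TypeScript sketch contrasting the two approaches. A soft delete is an UPDATE that stamps the SoftDeleted* fields and keeps the row, while a real delete removes the row permanently. Both only touch rows in the caller's tenant. The softDelete/realDelete helpers and the AuditedRow shape are hypothetical simplifications of the C# types above.

```typescript
interface AuditedRow {
  Id: number;
  TenantId: number;
  FirstName: string;
  SoftDeletedDate: Date | null;
  SoftDeletedBy: string | null;
}

// Soft delete: an UPDATE that stamps the SoftDeleted* fields; the row is kept
function softDelete(rows: AuditedRow[], id: number, tenantId: number, userName: string, now: Date): number {
  let count = 0;
  for (const row of rows) {
    if (row.Id === id && row.TenantId === tenantId) { // tenant filter is always applied
      row.SoftDeletedDate = now;
      row.SoftDeletedBy = userName;
      count++;
    }
  }
  return count;
}

// Real delete: permanently removes matching rows, also scoped to the tenant
function realDelete(rows: AuditedRow[], id: number, tenantId: number): AuditedRow[] {
  return rows.filter(row => !(row.Id === id && row.TenantId === tenantId));
}

let rows: AuditedRow[] = [
  { Id: 1, TenantId: 10, FirstName: "Kurt", SoftDeletedDate: null, SoftDeletedBy: null },
  { Id: 2, TenantId: 11, FirstName: "Jimi", SoftDeletedDate: null, SoftDeletedBy: null },
];

const otherTenant = softDelete(rows, 2, 10, "admin", new Date()); // 0: row 2 belongs to tenant 11
const ownTenant = softDelete(rows, 1, 10, "admin", new Date());   // 1: row 1 is stamped, not removed
const stampedBy = rows[0].SoftDeletedBy;
rows = realDelete(rows, 1, 10);                                    // row 1 is now gone for good
```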

Multi RDBMS Services

As they're just regular ServiceStack Services, everything you're used to that works with normal Services also works with the new Auto Crud Services. To recap, you can annotate the DB Model with the [NamedConnection] attribute to specify which registered named connection AutoQuery should use:

```csharp
[NamedConnection("Reporting")]
public class NamedRockstar : Rockstar { } //DB Model
```

Where all AutoQuery Services for that data model will query the Reporting database instead:

```csharp
public class CreateNamedRockstar : RockstarBase,
    ICreateDb<NamedRockstar>, IReturn<RockstarWithIdAndResultResponse>
{
    public int Id { get; set; }
}

public class UpdateNamedRockstar : RockstarBase,
    IUpdateDb<NamedRockstar>, IReturn<RockstarWithIdAndResultResponse>
{
    public int Id { get; set; }
}
```

Alternatively the [ConnectionInfo] attribute can be used on Service implementations. As AutoQuery Services don't have one, you'd need to provide custom implementations that delegate to their respective Auto Crud API, e.g:

```csharp
[ConnectionInfo(NamedConnection = MyDatabases.Reporting)]
public class MyReportingServices(IAutoQueryDb autoQuery) : Service
{
    public Task<object> Any(CreateConnectionInfoRockstar request) =>
        autoQuery.CreateAsync(request, Request);

    public Task<object> Any(UpdateConnectionInfoRockstar request) =>
        autoQuery.UpdateAsync(request, Request);
}
```

AutoFilter

If you're creating Soft Delete & Multi tenant services you'll want to ensure that every query only returns records in their tenant and doesn't return deleted items, which we can implement using an [AutoFilter], e.g:

```csharp
[ValidateIsAuthenticated]
[AutoFilter(QueryTerm.Ensure, nameof(IAudit.SoftDeletedDate), Template = SqlTemplate.IsNull)]
[AutoFilter(QueryTerm.Ensure, nameof(IAuditTenant.TenantId), Eval = "Request.Items.TenantId")]
public abstract class QueryDbTenant<From, Into> : QueryDb<From, Into> {}
```

The [AutoFilter] lets you add pre-configured filters to the query. QueryTerm.Ensure utilizes OrmLite's new Ensure() APIs, which force this filter to always be applied, even if the query contains other OR conditions.

This base class then lets you create concrete queries that don't return soft deleted rows and only return rows from the same tenant as the authenticated user, e.g:

```csharp
public class QueryRockstarAudit : QueryDbTenant<RockstarAuditTenant, Rockstar>
{
    public int? Id { get; set; }
}
```
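To see why Ensure matters, consider how the filters combine with a caller's own OR conditions. The TypeScript sketch below assumes ensured filters are grouped this way: the caller's conditions are wrapped in parentheses and each ensured filter is ANDed onto the whole group, so no OR can widen the results past the tenant or soft-delete filters. buildWhere is a hypothetical helper, not OrmLite's API. It interpolates values only for readability; real queries should use parameters.

```typescript
interface Condition {
  sql: string;
  op: "AND" | "OR"; // how this condition joins the previous one
}

// Joins the caller's conditions as written, then ANDs each ensured filter
// onto the whole group so no OR can bypass them
function buildWhere(conditions: Condition[], ensure: string[]): string {
  const userSql = conditions
    .map((c, i) => (i === 0 ? c.sql : `${c.op} ${c.sql}`))
    .join(" ");
  const parts: string[] = [];
  if (userSql) parts.push(`(${userSql})`);
  for (const filter of ensure) parts.push(`(${filter})`);
  return parts.length > 0 ? `WHERE ${parts.join(" AND ")}` : "";
}

const tenantId = 10; // e.g. the value stored in Request.Items.TenantId
const where = buildWhere(
  [
    { sql: `"Id" = 1`, op: "AND" },
    { sql: `"Age" > 20`, op: "OR" },
  ],
  [`"SoftDeletedDate" IS NULL`, `"TenantId" = ${tenantId}`],
);
// WHERE ("Id" = 1 OR "Age" > 20) AND ("SoftDeletedDate" IS NULL) AND ("TenantId" = 10)
```

Without the grouping, naively appending the filters would produce "Id" = 1 OR "Age" > 20 AND "TenantId" = 10. Because AND binds tighter than OR, that would return row 1 regardless of its tenant.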

To coincide with AutoCRUD there's also support for declarative validation which thanks to #Script lets you define your Fluent Validation Rules by annotating your Request DTO properties. As it's essentially a different way to define Fluent Validation Rules, it still needs Validation enabled to run:

```csharp
Plugins.Add(new ValidationFeature());
```

AutoMap and AutoDefault

The [AutoDefault] attribute allows you to specify default values that the Data Model should be populated with. It uses the same #Script expression support available in [AutoPopulate] to populate constant values, cached constant expressions or the results of fully evaluated expressions.

The [AutoMap] attribute gives you the flexibility of maintaining external property names that differ from their internal data models, while still being able to declaratively map them.

Here's an example ICreateDb<T> AutoCrud Service that makes use of both these attributes to achieve its desired behavior:

```csharp
public class CreateRockstarAutoMapDefault : ICreateDb<Rockstar>, IReturn<RockstarWithIdResponse>
{
    [AutoMap(nameof(Rockstar.FirstName))]
    public string MapFirstName { get; set; }

    [AutoMap(nameof(Rockstar.LastName))]
    public string MapLastName { get; set; }

    [AutoMap(nameof(Rockstar.Age))]
    [AutoDefault(Value = 21)]
    public int? MapAge { get; set; }

    [AutoMap(nameof(Rockstar.DateOfBirth))]
    [AutoDefault(Expression = "date(2001,1,1)")]
    public DateTime MapDateOfBirth { get; set; }

    [AutoMap(nameof(Rockstar.DateDied))]
    [AutoDefault(Eval = "utcNow")]
    public DateTime? MapDateDied { get; set; }

    [AutoMap(nameof(Rockstar.LivingStatus))]
    [AutoDefault(Value = LivingStatus.Dead)]
    public LivingStatus? MapLivingStatus { get; set; }
}
```
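Conceptually, [AutoMap] renames a Request DTO property to its Data Model property, and [AutoDefault] fills in a value when the caller left it unset. The TypeScript sketch below models that with a hypothetical PropertyRule map. It assumes defaults only apply to missing (null or undefined) values, and it copies only the properties listed in the rules.

```typescript
// Hypothetical per-property metadata, equivalent to [AutoMap] and [AutoDefault]
interface PropertyRule {
  mapTo?: string;               // target Data Model property, like [AutoMap(nameof(...))]
  defaultValue?: () => unknown; // fallback value, like [AutoDefault(...)]
}

type Dict = Record<string, unknown>;

// Copies each listed Request DTO property onto the Data Model, renaming it and
// applying its default when the caller left it unset (null or undefined)
function toDataModel(dto: Dict, rules: Record<string, PropertyRule>): Dict {
  const model: Dict = {};
  for (const [prop, rule] of Object.entries(rules)) {
    let value = dto[prop];
    if ((value === undefined || value === null) && rule.defaultValue) value = rule.defaultValue();
    if (value !== undefined) model[rule.mapTo ?? prop] = value;
  }
  return model;
}

const rules: Record<string, PropertyRule> = {
  MapFirstName: { mapTo: "FirstName" },
  MapAge: { mapTo: "Age", defaultValue: () => 21 },
  MapLivingStatus: { mapTo: "LivingStatus", defaultValue: () => "Dead" },
};

const model = toDataModel({ MapFirstName: "Kurt", MapAge: 27 }, rules);
// { FirstName: "Kurt", Age: 27, LivingStatus: "Dead" }
```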

Custom Complex Mapping

Another opportunity to apply more complex custom mapping logic, before resorting to creating an actual Service implementation, is to make use of ServiceStack's built-in Auto Mapping Populator API. It intercepts an AutoMapping conversion between 2 types and applies custom logic after the ConvertTo<T> or PopulateWith<T> APIs, e.g:

```csharp
AutoMapping.RegisterPopulator((Dictionary<string,object> target, CreateRockstar source) =>
{
    if (!IsAlive(source)) // IsAlive() is your own app-specific logic
    {
        target[nameof(Rockstar.LivingStatus)] = LivingStatus.Dead;
    }
});
```
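The populator pattern itself is simple: run the default conversion first, then any custom callbacks registered for that type. Here's a TypeScript sketch of that flow. registerPopulator and convert are hypothetical names, not ServiceStack APIs, and the DateDied rule stands in for the app-specific IsAlive() check.

```typescript
type Dict = Record<string, unknown>;
type Populator = (target: Dict, source: Dict) => void;

// Hypothetical registry of custom mapping logic, keyed by the source type's name
const populators = new Map<string, Populator[]>();

function registerPopulator(sourceType: string, populator: Populator): void {
  const list = populators.get(sourceType) ?? [];
  list.push(populator);
  populators.set(sourceType, list);
}

// Default conversion copies matching properties, then any registered populators run
function convert(sourceType: string, source: Dict): Dict {
  const target: Dict = { ...source };
  for (const populator of populators.get(sourceType) ?? []) populator(target, source);
  return target;
}

// Stand-in for the app-specific IsAlive() check: a Rockstar with a DateDied is dead
registerPopulator("CreateRockstar", (target, source) => {
  if (source.DateDied != null) target.LivingStatus = "Dead";
});

const dead = convert("CreateRockstar", { FirstName: "Kurt", DateDied: "1994-04-05" });
const alive = convert("CreateRockstar", { FirstName: "Dave" });
```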

Auto Guids

In addition to supporting [AutoIncrement] to insert records with Auto Incrementing Ids, you can use [AutoId] to insert entities with RDBMS generated UUIDs where they're supported; otherwise OrmLite populates them with Guid.NewGuid().

Note: usage of inheritance isn't required & has the same behavior as using explicit properties

```csharp
public abstract class RockstarBase
{
    public string FirstName { get; set; }
    public string LastName { get; set; }
    public int? Age { get; set; }
    public DateTime DateOfBirth { get; set; }
}

public class Rockstar : RockstarBase
{
    [AutoId]
    public Guid Id { get; set; }
}

public class CreateRockstarWithAutoGuid : RockstarBase, ICreateDb<Rockstar>, IReturn<RockstarWithIdResponse>
{
}
```

Or if you prefer for Id's to always be populated with Guid.NewGuid(), remove [AutoId] and populate it with [AutoPopulate] instead:

```csharp
[AutoPopulate(nameof(Rockstar.Id), Eval = "nguid")]
public class CreateRockstarWithAutoGuid : RockstarBase, ICreateDb<Rockstar>, IReturn<RockstarWithIdResponse>
{
}
```

Optimistic Concurrency

We can declaratively add support for OrmLite's Optimistic Concurrency by including a ulong RowVersion property on Auto Crud Request/Response DTOs and Data Models, e.g:

```csharp
// Data Model
public class RockstarVersion : RockstarBase
{
    [AutoIncrement]
    public int Id { get; set; }
    public ulong RowVersion { get; set; }
}

public class CreateRockstarVersion : RockstarBase, ICreateDb<RockstarVersion>,
    IReturn<RockstarWithIdAndRowVersionResponse> { }

public class UpdateRockstarVersion : RockstarBase, IPatchDb<RockstarVersion>,
    IReturn<RockstarWithIdAndRowVersionResponse>
{
    public int Id { get; set; }
    public ulong RowVersion { get; set; }
}

// Response DTO
public class RockstarWithIdAndRowVersionResponse
{
    public int Id { get; set; }
    public ulong RowVersion { get; set; } // same ulong type as the Data Model
    public ResponseStatus ResponseStatus { get; set; }
}
```

AutoQuery will populate the RowVersion in Response DTOs. It must be provided whenever making changes to that entity: the update will fail if no RowVersion was provided or if the entity has since been modified:

```csharp
var createResponse = client.Post(new CreateRockstarVersion {
    FirstName = "Original",
    LastName = "Version",
    Age = 20,
    DateOfBirth = new DateTime(2001,7,1),
    LivingStatus = LivingStatus.Dead,
});

// throws OptimisticConcurrencyException: No RowVersion provided
client.Patch(new UpdateRockstarVersion {
    Id = createResponse.Id,
    LastName = "UpdatedVersion",
});

// succeeds if "Original Version" wasn't modified otherwise throws OptimisticConcurrencyException
var response = client.Patch(new UpdateRockstarVersion {
    Id = createResponse.Id,
    LastName = "UpdatedVersion",
    RowVersion = createResponse.RowVersion,
});
```
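The RowVersion check behaves like a compare-and-swap. The TypeScript sketch below rejects a patch with no RowVersion or a stale one, and bumps the version after each successful change. patchWithVersion and OptimisticConcurrencyError are hypothetical names. In a real RDBMS the comparison would typically happen atomically in the UPDATE statement's WHERE clause rather than in application code.

```typescript
class OptimisticConcurrencyError extends Error {}

interface VersionedRow {
  Id: number;
  LastName: string;
  RowVersion: number;
}

// Applies the change only when the caller's RowVersion matches the stored one,
// then bumps the version so any other stale copies are rejected
function patchWithVersion(row: VersionedRow, changes: { LastName?: string }, rowVersion?: number): VersionedRow {
  if (rowVersion === undefined) throw new OptimisticConcurrencyError("No RowVersion provided");
  if (rowVersion !== row.RowVersion) throw new OptimisticConcurrencyError("Row was modified");
  if (changes.LastName !== undefined) row.LastName = changes.LastName;
  row.RowVersion++;
  return row;
}

const stored: VersionedRow = { Id: 1, LastName: "Version", RowVersion: 1 };
const seenVersion = stored.RowVersion; // returned to the client in the Create response
const errors: string[] = [];

try {
  patchWithVersion(stored, { LastName: "UpdatedVersion" }); // no RowVersion
} catch (e) {
  errors.push((e as Error).message);
}

patchWithVersion(stored, { LastName: "UpdatedVersion" }, seenVersion); // succeeds, RowVersion is now 2

try {
  patchWithVersion(stored, { LastName: "Stale" }, seenVersion); // stale RowVersion
} catch (e) {
  errors.push((e as Error).message);
}
```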

MQ Auto Crud Requests

As Auto Crud Services are just ServiceStack Services, they can partake in its ecosystem of features, like being able to invoke Services via MQ. However, some extra consideration is needed to account for the differences between HTTP and MQ Requests.

First, whatever filters you've added to populate IRequest.Items, like the tenant Id, you'll also need to register in GlobalMessageRequestFilters so they're executed for MQ Requests as well:

```csharp
GlobalRequestFilters.Add(SetTenant);        // HTTP Requests
GlobalMessageRequestFilters.Add(SetTenant); // MQ Requests
```

Secondly, Auth Information is typically sent in the HTTP Request Headers, but it needs to be included in the Request DTO to send Authenticated MQ Requests. Request DTOs can either implement IHasSessionId for normal Session Auth Providers, e.g:

```csharp
public class CreateRockstarAuditTenant
    : CreateAuditTenantBase<RockstarAuditTenant, RockstarWithIdAndResultResponse>, IHasSessionId
{
    public string SessionId { get; set; } //Authenticate MQ Requests
    //...
}
```

Alternatively they can implement IHasBearerToken for stateless Bearer Token Auth providers like JWT or API Keys.

If you're publishing an MQ Request inside an HTTP Service, you can use the PopulateRequestDtoIfAuthenticated extension method, which populates the Request DTO from the Authenticated HTTP Request, e.g:

```csharp
public class AutoCrudMqServices : Service
{
    public void Any(CreateRockstarAuditTenantMq request)
    {
        var mqRequest = request.ConvertTo<CreateRockstarAuditTenant>();
        Request.PopulateRequestDtoIfAuthenticated(mqRequest);
        PublishMessage(mqRequest);
    }
}
```

In this case if using Background MQ, it will execute the CreateRockstarAuditTenant request in a background thread, populating the MQ Request Context with the session identified by the IRequest.GetSessionId().
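Populating the Request DTO from the Authenticated HTTP Request amounts to copying the caller's auth details onto the MQ Request DTO, but only onto the SessionId or BearerToken properties the DTO opts into. This TypeScript sketch models that with hypothetical types (HttpAuth, SessionDto, PlainDto) and a hypothetical populateIfAuthenticated function. It isn't the ServiceStack implementation.

```typescript
// Auth details carried by the incoming HTTP request (hypothetical shape)
interface HttpAuth {
  isAuthenticated: boolean;
  sessionId?: string;
  bearerToken?: string;
}

// Copies the caller's auth onto a Request DTO before it's published to an MQ,
// but only onto the properties the DTO declares
function populateIfAuthenticated<T extends object>(auth: HttpAuth, dto: T): T {
  if (!auth.isAuthenticated) return dto;
  const d = dto as unknown as { SessionId?: string; BearerToken?: string };
  if ("SessionId" in d && auth.sessionId) d.SessionId = auth.sessionId;
  if ("BearerToken" in d && auth.bearerToken) d.BearerToken = auth.bearerToken;
  return dto;
}

// Simplified stand-ins for Request DTOs; fields are initialized so they exist at runtime
class SessionDto { SessionId?: string = undefined; FirstName = "Kurt"; }
class PlainDto { FirstName = "Kurt"; }

const auth: HttpAuth = { isAuthenticated: true, sessionId: "abc123" };
const withSession = populateIfAuthenticated(auth, new SessionDto());
const plain = populateIfAuthenticated(auth, new PlainDto());
const anonymous = populateIfAuthenticated({ isAuthenticated: false, sessionId: "abc123" }, new SessionDto());
```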

Publishing Requests to OneWay Endpoint

You can also send MQ requests directly by publishing to the OneWay HTTP endpoint. If your AppHost is registered with an MQ Server, it will publish the message to the MQ and auto populate Request DTOs that implement IHasSessionId or IHasBearerToken. This works either implicitly, when sent from an Authenticated client:

```csharp
var authResponse = authClient.Post(new Authenticate {
    provider = "credentials",
    UserName = "admin@email.com",
    Password = "p@55wOrd",
    RememberMe = true,
});

authClient.SendOneWay(new CreateRockstarAuditTenant {
    FirstName = nameof(CreateRockstarAuditTenant),
    LastName = "SessionId",
    Age = 20,
    DateOfBirth = new DateTime(2002,2,2),
});
```

Or from an anonymous client with the explicit BearerToken or SessionId properties populated, e.g:

```csharp
client.SendOneWay(new CreateRockstarAuditMqToken {
    BearerToken = jwtUserToken,
    FirstName = nameof(CreateRockstarAuditMqToken),
    LastName = "JWT",
    Age = 20,
    DateOfBirth = new DateTime(2002,2,2),
});
```

To save populating the BearerToken in each request, you can set it once on the Service Client which will automatically populate it on Request DTOs:

```csharp
client.BearerToken = jwtUserToken;
```

Declarative Validation

To facilitate greater declarative functionality around ServiceStack Services, this release also introduces support for declarative validation where all existing Fluent Validation Property Validators can be annotated on Request DTOs using typed validation attributes which are decoupled from their Validator implementation so they're suitable to be annotated on impl-free Service Model DTOs and exported in Add ServiceStack Reference Types.

As they're decoupled, they can eventually be used to implement instant validation feedback on clients without server round trips.

The validators are incorporated into ServiceStack's existing Fluent Validation model so it works with existing UI form binding.
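Because the rules are plain declarative metadata, evaluating them doesn't require server-side validator classes. As a rough illustration of how a client could eventually give instant feedback, here's a TypeScript sketch that evaluates a hypothetical rule format. The Rule type is an assumption, not ServiceStack's metadata format. In this sketch only NotEmpty rejects a missing value, mirroring how Fluent Validation's other property validators skip nulls.

```typescript
// Hypothetical, declarative rule metadata a client might receive for a Request DTO property
type Rule =
  | { kind: "NotEmpty" }
  | { kind: "InclusiveBetween"; from: number; to: number }
  | { kind: "ExactLength"; length: number }
  | { kind: "Matches"; pattern: string };

// Returns the kinds of the rules the value fails (an empty array means valid)
function validate(value: unknown, rules: Rule[]): string[] {
  const missing = value === null || value === undefined;
  const failures: string[] = [];
  for (const rule of rules) {
    let ok = true;
    switch (rule.kind) {
      case "NotEmpty":
        ok = !missing && value !== "";
        break;
      case "InclusiveBetween":
        ok = missing || (typeof value === "number" && value >= rule.from && value <= rule.to);
        break;
      case "ExactLength":
        ok = missing || (typeof value === "string" && value.length === rule.length);
        break;
      case "Matches":
        ok = missing || (typeof value === "string" && new RegExp(rule.pattern).test(value));
        break;
    }
    if (!ok) failures.push(rule.kind);
  }
  return failures;
}

const ageRules: Rule[] = [{ kind: "NotEmpty" }, { kind: "InclusiveBetween", from: 10, to: 20 }];
const valid = validate(15, ageRules);             // []
const outOfRange = validate(25, ageRules);        // ["InclusiveBetween"]
const missingAge = validate(undefined, ageRules); // ["NotEmpty"]
```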

Property Validators

The new Property Validator attributes provide an alternative way to apply Request DTO validation rules. The best way to demonstrate them is to show the same example implemented using the Fluent Validation APIs:

```csharp
public class ExampleValidatorsValidator : AbstractValidator<ExampleValidators>
{
    public ExampleValidatorsValidator()
    {
        RuleFor(x => x.CreditCard).CreditCard();
        RuleFor(x => x.Email).EmailAddress();
        RuleFor(x => x.Empty).Empty();
        RuleFor(x => x.Equal).Equal("Equal");
        RuleFor(x => x.ExclusiveBetween).ExclusiveBetween(10, 20);
        RuleFor(x => x.GreaterThanOrEqual).GreaterThanOrEqualTo(10);
        RuleFor(x => x.GreaterThan).GreaterThan(10);
        RuleFor(x => x.InclusiveBetween).InclusiveBetween(10, 20);
        RuleFor(x => x.Length).Length(10);
        RuleFor(x => x.LessThanOrEqual).LessThanOrEqualTo(10);
        RuleFor(x => x.LessThan).LessThan(10);
        RuleFor(x => x.NotEmpty).NotEmpty();
        RuleFor(x => x.NotEqual).NotEqual("NotEqual");
        RuleFor(x => x.Null).Null();
        RuleFor(x => x.ScalePrecision).ScalePrecision(1,1);
        RuleFor(x => x.RegularExpression).Matches(@"^[a-z]*$");
    }
}
```

For each property validator above, you can instead use a typed Property Validation Attribute named in the format [Validate*]:

public class ExampleValidators : ICreateDb<ExampleValidator>, IReturn<EmptyResponse>
{
    [ValidateCreditCard]
    public string CreditCard { get; set; }

    [ValidateEmail]
    public string Email { get; set; }

    [ValidateEmpty]
    public string Empty { get; set; }

    [ValidateEqual("Equal")]
    public string Equal { get; set; }

    [ValidateLessThan(10)]
    public int LessThan { get; set; }

    [ValidateLessThanOrEqual(10)]
    public int LessThanOrEqual { get; set; }

    [ValidateGreaterThan(10)]
    public int GreaterThan { get; set; }

    [ValidateGreaterThanOrEqual(10)]
    public int GreaterThanOrEqual { get; set; }

    [ValidateExclusiveBetween(10, 20)]
    public int ExclusiveBetween { get; set; }

    [ValidateInclusiveBetween(10, 20)]
    public int InclusiveBetween { get; set; }

    [ValidateExactLength(10)]
    public string Length { get; set; }

    [ValidateNotEmpty]
    public string NotEmpty { get; set; }

    [ValidateNotEqual("NotEqual")]
    public string NotEqual { get; set; }

    [ValidateNull]
    public string Null { get; set; }

    [ValidateScalePrecision(1,1)]
    public decimal ScalePrecision { get; set; }

    [ValidateRegularExpression("^[a-z]*$")]
    public string RegularExpression { get; set; }
}

All Typed Validator Attributes above are just typed subclass wrappers around the generic [Validate] attribute, e.g. the implementation of [ValidateLessThan] is just:

public class ValidateLessThanAttribute : ValidateAttribute
{
    public ValidateLessThanAttribute(int value) : base($"LessThan({value})") { }
}

So the Typed Validators above are equivalent to using the untyped generic [Validate] attribute below:

public class ExampleValidators : ICreateDb<ExampleValidator>, IReturn<EmptyResponse>
{
    [Validate("CreditCard")]
    public string CreditCard { get; set; }

    [Validate("Email")]
    public string Email { get; set; }

    [Validate("Empty")]
    public string Empty { get; set; }

    [Validate("Equal('Equal')")]
    public string Equal { get; set; }

    [Validate("ExclusiveBetween(10, 20)")]
    public int ExclusiveBetween { get; set; }

    [Validate("GreaterThanOrEqual(10)")]
    public int GreaterThanOrEqual { get; set; }

    [Validate("GreaterThan(10)")]
    public int GreaterThan { get; set; }

    [Validate("InclusiveBetween(10, 20)")]
    public int InclusiveBetween { get; set; }

    [Validate("ExactLength(10)")]
    public string Length { get; set; }

    [Validate("LessThanOrEqual(10)")]
    public int LessThanOrEqual { get; set; }

    [Validate("LessThan(10)")]
    public int LessThan { get; set; }

    [Validate("NotEmpty")]
    public string NotEmpty { get; set; }

    [Validate("NotEqual('NotEqual')")]
    public string NotEqual { get; set; }

    [Validate("Null")]
    public string Null { get; set; }

    [Validate("RegularExpression('^[a-z]*$')")]
    public string RegularExpression { get; set; }

    [Validate("ScalePrecision(1,1)")]
    public decimal ScalePrecision { get; set; }
}

Where each Validator Expression is a #Script Expression that returns a Fluent Validation IPropertyValidator defined in the built-in ValidateScripts.cs:

public class ValidateScripts : ScriptMethods
{
    public IPropertyValidator Null() => new NullValidator();
    public IPropertyValidator Empty() => new EmptyValidator(null);
    public IPropertyValidator Empty(object defaultValue) => new EmptyValidator(defaultValue);
    public IPropertyValidator Equal(object value) => new EqualValidator(value);
    public IPropertyValidator NotNull() => new NotNullValidator();
    public IPropertyValidator NotEmpty() => new NotEmptyValidator(null);
    public IPropertyValidator NotEmpty(object defaultValue) => new NotEmptyValidator(defaultValue);
    public IPropertyValidator NotEqual(object value) => new NotEqualValidator(value);
    public IPropertyValidator CreditCard() => new CreditCardValidator();
    public IPropertyValidator Email() => new AspNetCoreCompatibleEmailValidator();
    public IPropertyValidator Length(int min, int max) => new LengthValidator(min, max);
    public IPropertyValidator ExactLength(int length) => new ExactLengthValidator(length);
    public IPropertyValidator MaximumLength(int max) => new MaximumLengthValidator(max);
    public IPropertyValidator MinimumLength(int min) => new MinimumLengthValidator(min);
    public IPropertyValidator InclusiveBetween(IComparable from, IComparable to) =>
        new InclusiveBetweenValidator(from, to);
    public IPropertyValidator ExclusiveBetween(IComparable from, IComparable to) =>
        new ExclusiveBetweenValidator(from, to);
    public IPropertyValidator LessThan(int value) => new LessThanValidator(value);
    public IPropertyValidator LessThanOrEqual(int value) => new LessThanOrEqualValidator(value);
    public IPropertyValidator GreaterThan(int value) => new GreaterThanValidator(value);
    public IPropertyValidator GreaterThanOrEqual(int value) => new GreaterThanOrEqualValidator(value);
    public IPropertyValidator ScalePrecision(int scale, int precision) =>
        new ScalePrecisionValidator(scale, precision);
    public IPropertyValidator RegularExpression(string regex) =>
        new RegularExpressionValidator(regex, RegexOptions.Compiled);
}

Validated Validator Expressions

Despite using untyped string expressions, Validator expressions still provide early error detection: on Startup each #Script expression is evaluated and verified to resolve to a valid IPropertyValidator instance, otherwise Startup fails with an Exception.

If the returned instance is valid, it's merged with any other AbstractValidator<T> that may also be defined for the same Request DTO Type, letting you mix n' match declarative attributes with Fluent Validation rules.
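The fail-fast idea can be illustrated with a small, self-contained sketch. It is not ServiceStack's implementation: instead of evaluating each expression with #Script and checking for an IPropertyValidator, it assumes a hypothetical `registry` of known validator names and the argument counts they accept, plus a deliberately naive `Parse` helper that only understands `Name` or `Name(arg1, arg2)` forms (no nested calls, quoted commas or [] arrays). Every declared expression is checked once up front, so a typo surfaces as an exception at startup rather than on the first request.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for the registered ScriptMethods:
// validator name -> the argument counts it accepts
var registry = new Dictionary<string, int[]>
{
    ["NotNull"] = new[] { 0 },
    ["Empty"] = new[] { 0, 1 },
    ["LessThan"] = new[] { 1 },
    ["InclusiveBetween"] = new[] { 2 },
};

// Naive parser for "Name" or "Name(arg1, arg2)"; it does NOT handle
// nested calls, quoted arguments containing commas or [] array notation
(string Name, int Arity) Parse(string expr)
{
    var open = expr.IndexOf('(');
    if (open < 0) return (expr.Trim(), 0);
    var args = expr.Substring(open + 1).TrimEnd().TrimEnd(')');
    var arity = string.IsNullOrWhiteSpace(args) ? 0 : args.Split(',').Length;
    return (expr.Substring(0, open).Trim(), arity);
}

// Checks every declared expression once, failing fast on the first invalid one
void VerifyAtStartup(IEnumerable<string> expressions)
{
    foreach (var expr in expressions)
    {
        var (name, arity) = Parse(expr);
        if (!registry.TryGetValue(name, out var arities) || !arities.Contains(arity))
            throw new InvalidOperationException($"Invalid validator expression: {expr}");
    }
}

VerifyAtStartup(new[] { "NotNull", "LessThan(10)", "InclusiveBetween(13,100)" }); // passes

try
{
    VerifyAtStartup(new[] { "LessThan(10)", "LesThan(10)" }); // typo in the 2nd expression
}
catch (InvalidOperationException ex)
{
    Console.WriteLine(ex.Message); // Invalid validator expression: LesThan(10)
}
```

Validating eagerly trades a little startup time for never shipping a misspelled rule that would otherwise only fail when its Request DTO is first validated.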

Defining Multiple Validators

You can specify that multiple Property Validators should be applied within a single Validator expression by using [] Array notation. Alternatively, you can apply multiple Validate attributes and use C# syntax to combine them on a single line:

public class ExampleValidators
{
    [Validate("[NotNull,InclusiveBetween(13,100)]")]
    public int? ValidateAge { get; set; }

    [ValidateNotNull,ValidateInclusiveBetween(13,100)]
    public int? TypedAge { get; set; }
}

Registering Custom Declarative Validators

As [Validate*] attributes just execute a Script Method, they're easily extended by defining and registering your own, e.g:

public class MyValidateScripts : ScriptMethods
{
    public IPropertyValidator Custom(int arg) => new MyCustomValidator(arg);
}

Which can be registered either directly on your Script Pages plugin, if your AppHost uses one:

Plugins.Add(new SharpPagesFeature {
    ScriptMethods = { new MyValidateScripts() }
});

Otherwise you can add it to the AppHost's new ScriptContext, which otherwise defaults to an empty ScriptContext:

ScriptContext.ScriptMethods.Add(new MyValidateScripts());

ScriptContext also returns SharpPagesFeature if registered, in which case both registration examples are equivalent.

After which you'll immediately be able to use it with the [Validate] attribute:

[Validate("Custom(1)")]
public int Test { get; set; }

Likewise you can create a typed Validate attribute around it which you can use instead:

public class ValidateCustomAttribute : ValidateAttribute
{
    public ValidateCustomAttribute(int arg) : base($"Custom({arg})") { }
}

//...

[ValidateCustom(1)]
public int Test { get; set; }

Custom Script Validation

Fluent Validation Validators are a nice model for defining reusable validation rules, however they can require a bit of boilerplate if you only need a one-off validation check. In these cases we can use an even lighter-weight solution by defining the validation condition inline with #Script, specifying it in the Condition attribute property, e.g:

public class ExampleValidators : ICreateDb<ExampleValidator>, IReturn<EmptyResponse>
{
    [Validate(Condition = "it.isOdd()")]
    public int IsOddCondition { get; set; }

    [Validate(Condition = "it.isOdd() && it.log10() > 2")]
    public int IsOddAndOverTwoDigitsCondition { get; set; }

    [Validate(Condition = "it.isOdd() || it.log10() > 2")]
    public int IsOddOrOverTwoDigitsCondition { get; set; }
}

Script Conditions are valid if they return a truthy value and have access to the following arguments within their Expression:

- Request: IRequest
- dto: Request DTO
- field: Property Name
- it: Property Value
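To make the example conditions concrete, here is a rough C# equivalent of what they check for a few sample values. It assumes `isOdd()` means "not divisible by 2" and that `log10()` is the base-10 logarithm (mirrored here with `Math.Log10`), so `it.log10() > 2` only holds for values greater than 100. The real checks are evaluated by #Script at runtime, not by this code.

```csharp
using System;

// Assumed C# equivalents of the #Script methods used in the conditions
bool IsOdd(int it) => it % 2 != 0;
bool IsOver2Digits(int it) => Math.Log10(it) > 2;

// [Validate(Condition = "it.isOdd() && it.log10() > 2")]
bool IsOddAndOverTwoDigits(int it) => IsOdd(it) && IsOver2Digits(it);

// [Validate(Condition = "it.isOdd() || it.log10() > 2")]
bool IsOddOrOverTwoDigits(int it) => IsOdd(it) || IsOver2Digits(it);

foreach (var value in new[] { 7, 98, 101, 200 })
{
    Console.WriteLine($"{value}: and={IsOddAndOverTwoDigits(value)}, or={IsOddOrOverTwoDigits(value)}");
}
// 7: and=False, or=True
// 98: and=False, or=False
// 101: and=True, or=True
// 200: and=False, or=True
```

A value fails validation whenever its condition evaluates to false, e.g. 200 would be rejected by IsOddAndOverTwoDigitsCondition but accepted by IsOddOrOverTwoDigitsCondition.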

If you're reusing the same Expressions, a nice way to maintain them is in a static class, where you can use the AllConditions and AnyConditions helper properties to compose individual checks, e.g:

public static class ValidationConditions
{
    public const string IsOdd = "it.isOdd()";
    public const string IsOver2Digits = "it.log10() > 2";
}

public class ExampleValidators : ICreateDb<ExampleValidator>, IReturn<EmptyResponse>
{
    [Validate(Condition = ValidationConditions.IsOdd)]
    public int IsOddCondition { get; set; }

    [Validate(AllConditions = new[]{ ValidationConditions.IsOdd, ValidationConditions.IsOver2Digits })]
    public int IsOddAndOverTwoDigitsCondition { get; set; }

    [Validate(AnyConditions = new[]{ ValidationConditions.IsOdd, ValidationConditions.IsOver2Digits })]
    public int IsOddOrOverTwoDigitsCondition { get; set; }
}
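Comparing this with the earlier inline example suggests AllConditions and AnyConditions behave like joining the individual conditions with && and || respectively. The minimal sketch below shows that composition using a hypothetical `Combine` helper (not ServiceStack's code), assuming each condition is wrapped in parentheses before joining. The grouping matters: a condition that contains its own || keeps its meaning when combined with &&.

```csharp
using System;
using System.Linq;

// Hypothetical helper: parenthesizes each non-empty condition and joins them
// with the given operator, so operators inside one condition can't change
// how it binds to its neighbours
string Combine(string op, params string[] conditions) =>
    string.Join($" {op} ", conditions
        .Where(c => !string.IsNullOrWhiteSpace(c))
        .Select(c => $"({c})"));

const string IsOdd = "it.isOdd()";
const string IsOver2Digits = "it.log10() > 2";

// Roughly what AllConditions / AnyConditions would produce for the DTO above
Console.WriteLine(Combine("&&", IsOdd, IsOver2Digits)); // (it.isOdd()) && (it.log10() > 2)
Console.WriteLine(Combine("||", IsOdd, IsOver2Digits)); // (it.isOdd()) || (it.log10() > 2)

// Grouping preserves intent when a condition contains its own operator
Console.WriteLine(Combine("&&", "it > 0 || it < -10", IsOdd)); // (it > 0 || it < -10) && (it.isOdd())
```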

Despite not using a Validator, all #Script Conditions are executed using a custom Fluent Validation IPredicateValidator (called ScriptConditionValidator), so they're able to slot right in with all other Property Validators.

Custom Error Codes and Messages

The other aspects of validators that can be overridden declaratively are the ErrorCode and Error Message returned in ServiceStack's structured Error Response, specified using the ErrorCode and Message attribute properties:

public class ExampleValidators : ICreateDb<ExampleValidator>, IReturn<EmptyResponse>
{
    [ValidateNotNull(ErrorCode = "ZError")]
    public string CustomErrorCode { get; set; }

    // Overrides both ErrorCode & Message
    [ValidateInclusiveBetween(1,2, ErrorCode = "ZError",
        Message = "{PropertyName} has to be between {From} and {To}, you: {PropertyValue}")]
    public int CustomErrorCodeAndMessage { get; set; }

    // Overrides ErrorCode & uses Message from Validators
    [ValidateNotNull(ErrorCode = "RuleMessage")]
    public string ErrorCodeRule { get; set; }

    [Validate(Condition = ValidationConditions.IsOdd)]
    public int IsOddCondition { get; set; }