ServiceStack turns 10!

Before we get into unpacking this release we're happy to announce that we've now eclipsed 10 years since our first commit!

When you've been working on the same piece of code or art work for a long enough time you can look through it as a time lens back to that first commit, the developer you were, the visions, inspiration and motivations you had. Enough years pass that it eclipses a generation, remembering the person you were then and comparing with who you are now, how we grew as developers, as people, with different skills, careers, motivations, perspectives and life priorities.

As I take this moment to reflect back, it's reassuring to see that whilst the environments and ecosystems around us have all changed, ServiceStack's code has always stayed true to its time-tested original vision. Its core focus, Services, still provides the most value and software reuse, and their interface is still your system's most important. Regardless of the technology used to implement them, its goal continues to be to make Services simple to implement and simple to consume from wherever they're called, so their benefits can be realized.

It might be clear now but it wasn't in the era ServiceStack was created in, where most software was being given the "Enterprise" label and focused on surrounding itself with "Enterprise features". In Java, Enterprise Java Beans were being marketed as the pinnacle of Enterprise Software development whilst in .NET we were told we needed to use SOAP in order to develop Web Services. Experiencing first-hand the friction they inflicted in large code-bases became the catalyst for starting ServiceStack - a clean, friction-free Services Framework for developing evolving message-based APIs, that even then already shipped with multiple JSON/XML serialization formats and REST/HTTP and SOAP endpoints out-of-the-box, including generic SOAP clients to ease transitions from legacy code bases.

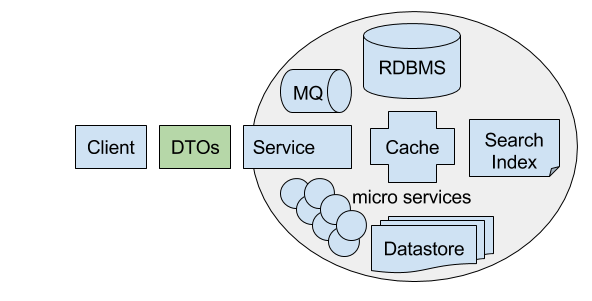

DTO-first Services on the outside

From the outset ServiceStack embraced and built upon the importance of the Data Transfer Object (DTO) and Remote Facade patterns for the development of its robust coarse-grained, message-based Services, the importance of using Model-first logic-less DTOs for developing well-defined, evolvable, serialization-agnostic Services ensuring they can be consumed in any format, in any language and client - where they utilized the Gateway pattern, a large part to why ServiceStack Services are so easily consumed from all its reusable generic Typed Service Clients in different languages, all in the same way, where only DTOs are needed to enable its end-to-end Typed APIs.
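As a rough illustration of the idea, the sketch below shows how a single generic client can serve any request DTO when each DTO declares its own response type. It is a minimal sketch rather than ServiceStack's actual client: `IReturn`, `TypedClient`, `Transport` and the in-memory `fakeTransport` are all names assumed for this example, and the transport is synchronous only to keep it short.

```typescript
// A request DTO names itself and knows which response type it returns.
// All names in this sketch are illustrative assumptions, not ServiceStack's API.
interface IReturn<TResponse extends object> {
    getTypeName(): string;
    createResponse(): TResponse;
}

// Logic-less DTOs: plain data that can be serialized in any format.
class HelloResponse {
    result = '';
}

class Hello implements IReturn<HelloResponse> {
    name: string;
    constructor(name: string) { this.name = name; }
    getTypeName(): string { return 'Hello'; }
    createResponse(): HelloResponse { return new HelloResponse(); }
}

// The transport only moves serialized messages; it knows nothing about DTO types.
type Transport = (typeName: string, json: string) => string;

// One generic gateway for every DTO: no per-service proxy stubs are needed.
class TypedClient {
    private transport: Transport;
    constructor(transport: Transport) { this.transport = transport; }

    send<T extends object>(request: IReturn<T>): T {
        const json = this.transport(request.getTypeName(), JSON.stringify(request));
        return Object.assign(request.createResponse(), JSON.parse(json) as Partial<T>);
    }
}

// In-memory stand-in for a remote endpoint so the sketch runs without a server.
const fakeTransport: Transport = (typeName, json) => {
    if (typeName !== 'Hello') throw new Error(`Unknown message: ${typeName}`);
    const dto = JSON.parse(json) as { name: string };
    return JSON.stringify({ result: `Hello, ${dto.name}!` });
};

const typedClient = new TypedClient(fakeTransport);
const helloResponse = typedClient.send(new Hello('World')); // typed as HelloResponse
```

Because the response type is inferred from the request DTO, adding a new Service only requires new DTOs; the client itself stays unchanged.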

Even today, most generated client proxy solutions still generate their clients RPC proxy stubs coupled with their types, creating additional friction whilst limiting its flexibility and reuse.

Clean architecture on the inside

Instead of WCF's dependence on complicated configuration and tooling, ServiceStack opted for a convention-based, configuration-free approach that, in addition to adopting a simpler, cleaner, more robust approach to developing Services on the outside, also encouraged a cleaner development model on the inside where the DTOs' only dependency was on ServiceStack.Interfaces - a dependency and implementation-free Assembly containing abstractions of all ServiceStack providers. This allowed decoupling your Host project, containing all your App's configuration and concrete dependencies, from your Services logic, which would only bind to substitutable and testable interfaces. Your AppHost could then freely switch to different concrete Caching, Configuration or Logging providers without impacting your Services implementation, which is itself decoupled from your ServiceModel DTOs, which have no concrete dependencies.

This same time-tested blue-print, giving ServiceStack Projects a structured base to build on, continues to be our recommended Physical Project Structure that's adopted by most of our project templates.

So what started out as a System.Web ASP.NET 3/3.5 code-base 10 years ago has now evolved to where its single modular code-base can support running your ServiceStack Services on any of .NET Framework v4.5+ and .NET Core's most popular Hosts, where they're available in a myriad of serialization formats and accessible from a number of popular MQ Servers and SOAP Endpoints (should you still need to support legacy integrations), whilst the first-class end-to-end typed support for all popular Mobile and Desktop platforms enables maximum utility and productivity for everyone consuming your Services.

Thank You!

I'd like to take this milestone to thank the thousands of Customers we've had the pleasure to serve and to see our Software used, which has amassed more than 27,000,000 total downloads on NuGet, and to see the uptick in adoption of some of our unique features like Add ServiceStack Reference which has been used more than 88,000 times to generate native Typed DTOs across its 8 supported languages.

It's rewarding to see value being created with our software and the small part we've played in its success, we're especially grateful to continue serving our long term Customers who've supported us since ServiceStack became a permanent full-time effort in 2013 and I look forward to continue working on enhancing the value and provide more first-class integrations around your ServiceStack Services including access to the latest modern development technologies in the simplest and most productive way we can make it, including continuing to develop our own innovations where it can reduce friction or be able to deliver a simpler and more enjoyable experience - many of which we're happy to introduce to you today.

And with that we have another jam-packed release with exciting features across the board. If you haven't got enough time to go through it all today, feel free to jump directly to the features you're interested in:

Table of Contents

- ASP.NET Core on .NET Framework

- New Vue and React "lite" ASP.NET Core Templates

- Introducing new "lite" npm-free project templates

- Light on Complexity, Big on Features

- vue-lite

- react-lite

- Development workflow

- Update TypeScript DTOs

- Integrated Bundling

- Pre-compiled minified production bundles

- Minified bundles with cache breakers

- Available in Razor Helpers

- vue-lite Project Template features

- react-lite Project Template features

- React Global State Management

- "lite" Project Structure

- Updating "lite" project dependencies

- #Script!

- World Validation

- "No touch" Host Configuration

- Auto Mapping

- Page Based Routing in Razor!

- web tool

- web new - .NET's missing project template system

- web + - customize mix/match projects from gists!

- SourceLink Enabled Packages

- Authentication

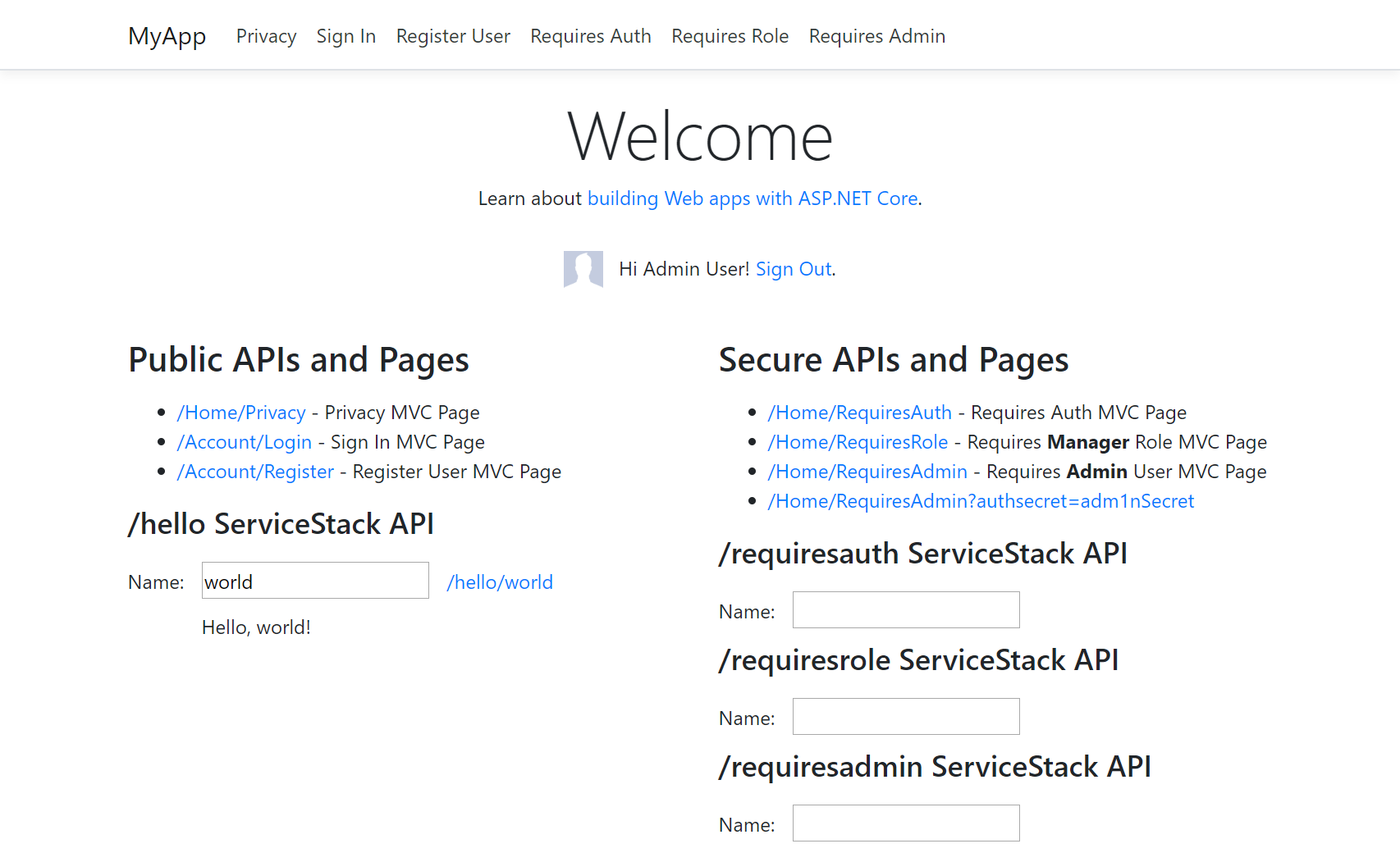

- Using ServiceStack Auth in MVC

- Using ASP.NET Identity Auth in ServiceStack

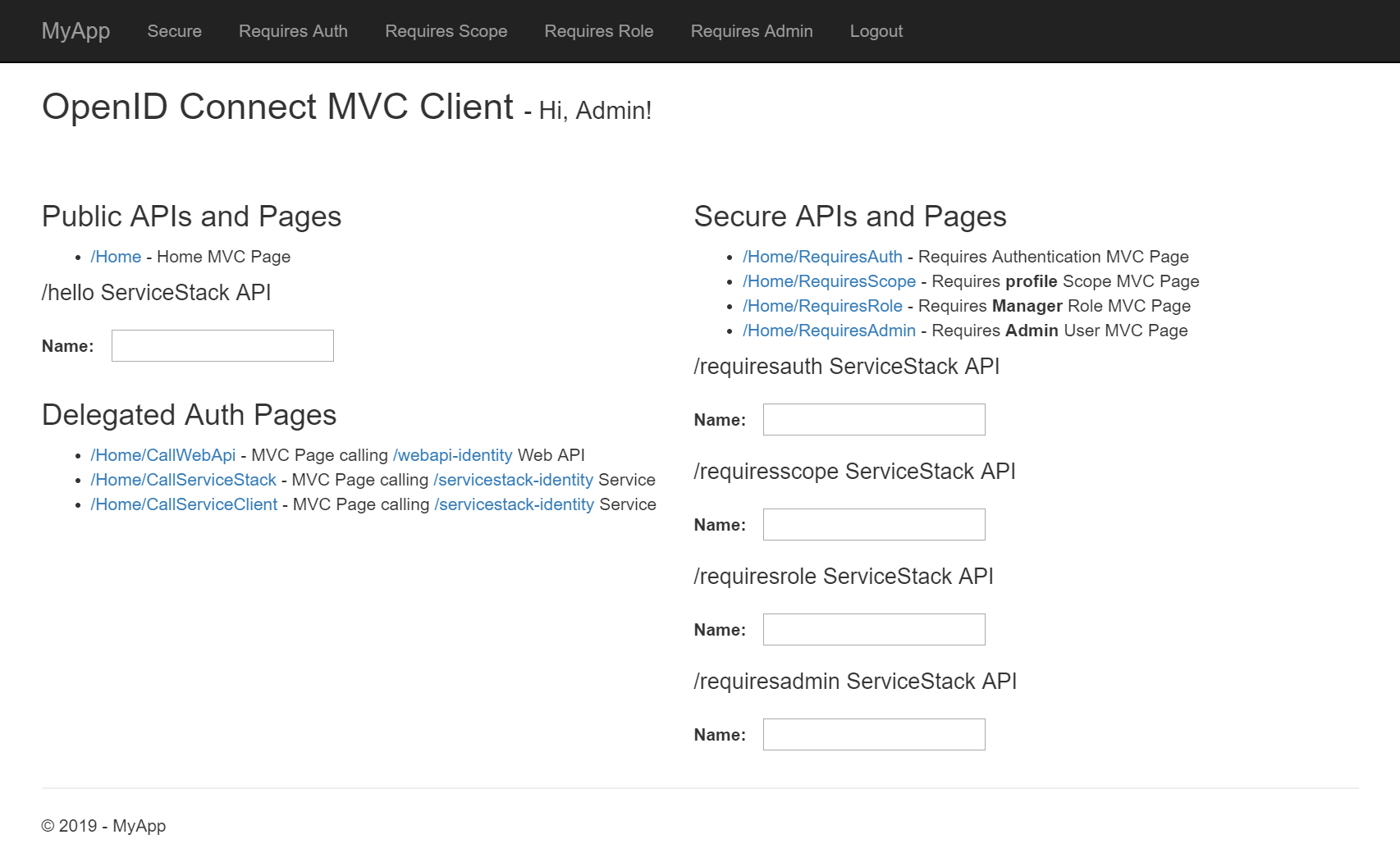

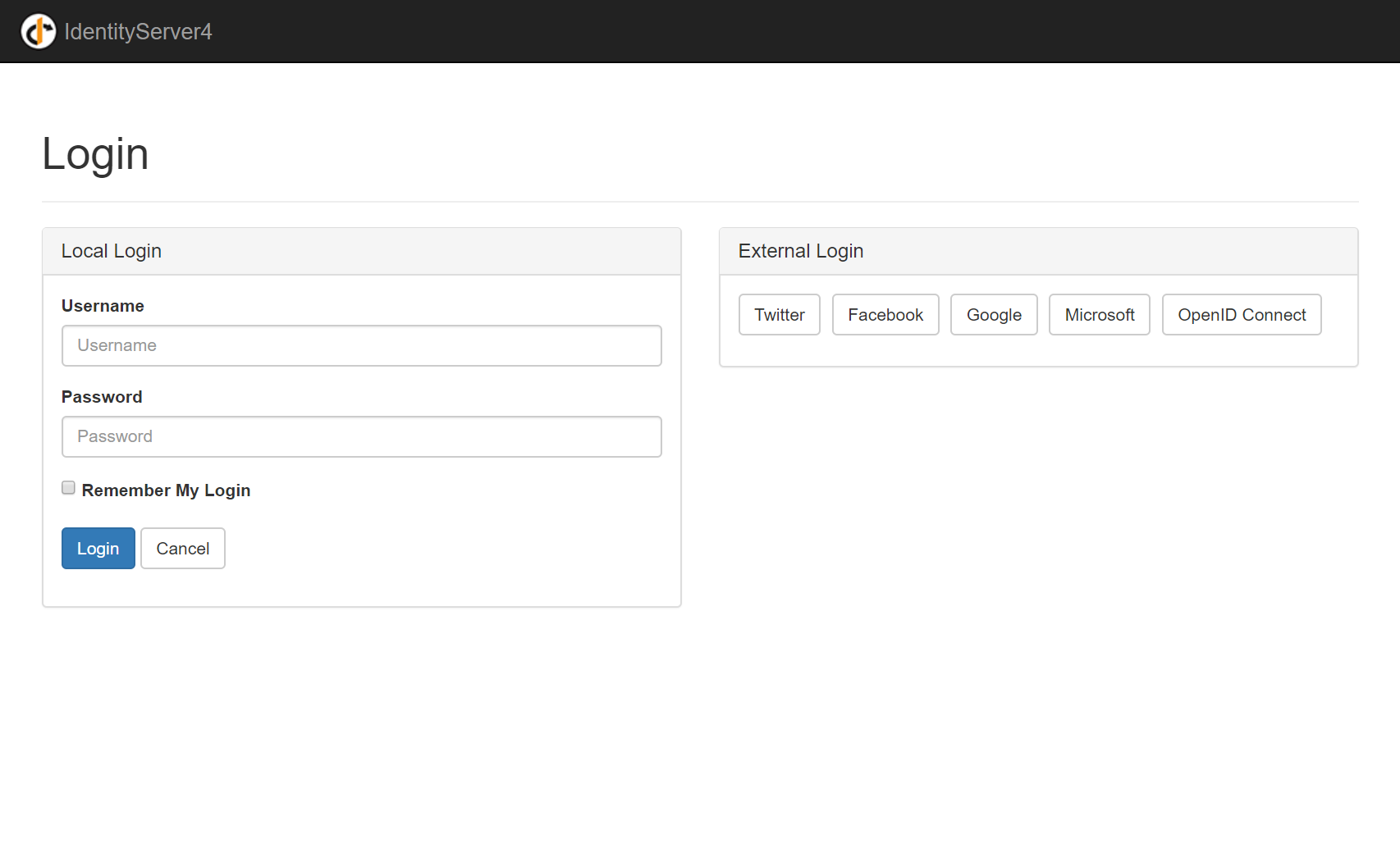

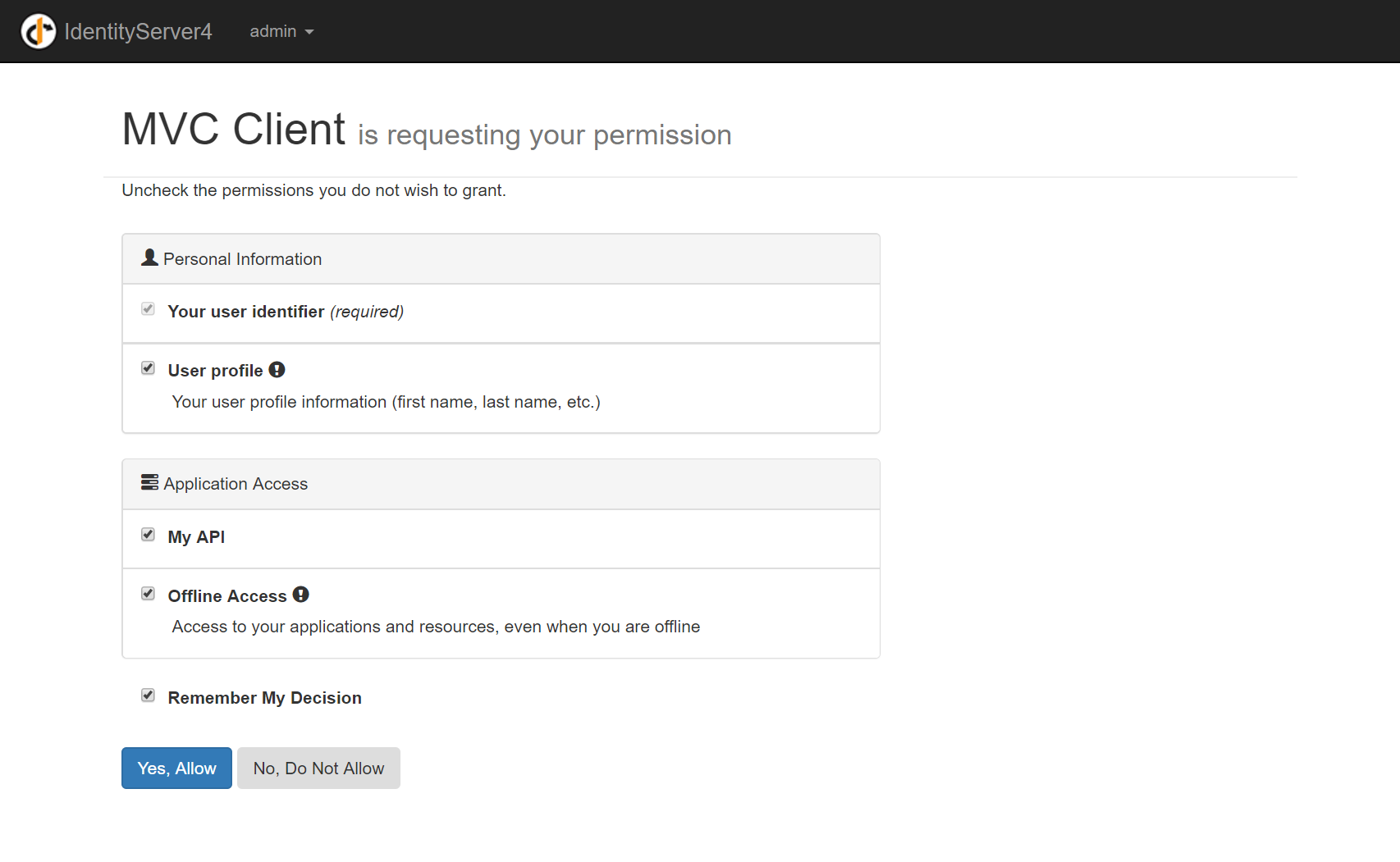

- Using IdentityServer4 Auth in ServiceStack

- ServiceStack

- New Auth Providers

- Microsoft Graph Auth Provider

- New Claim APIs

- IServiceProvider Request Extensions

- Enable Same Site Cookies

- Cookie Filters

- Secure Cookies enabled by default

- Override Authorization HTTP Header

- GET Authenticate Requests are disabled by default

- UserSession validation

- Intercept Service Requests

- Fluent Validation

- Auto Batching

- Hot Reload

- Image Utils

- Enum Utils

- Open API Feature

- Disable Auto HTML Pages

- TypeScript

- Messaging

- OrmLite

- INSERT INTO SELECT

- PostgreSQL Rich Data Types

- Hstore support

- JSON data types

- New KeyValuePair<K,V> top-level APIs

- SELECT Constant Expressions

- SELECT DISTINCT in SelectMulti

- New TableAlias replaces JoinAlias

- GetTableNames and GetTableNamesWithRowCounts APIs

- Dapper updated

- DB Scripts can open different connections

- Redis

- ServiceStack.Text

- ServiceStack.Azure

ASP.NET Core on .NET Framework

Another important development since our last release was Microsoft's announcement that it would stop supporting new versions of ASP.NET Core on the .NET Framework. Whilst we strongly disagreed with this decision, which would've excluded a large class of the existing ecosystem from participating in the new ASP.NET Core development model and prevented many staged migrations to .NET Core from commencing, we're happy to see ASP.NET Core 2.1 LTS will enjoy the same indefinite level of support as the rest of the .NET Framework - which should come as great news to the 1/3 of our Customers who are still creating new ASP.NET Core on FX Project Templates.

Whilst this announcement sends a clear message that new development on .NET Framework has effectively been put on product life support, ASP.NET Core 2.1 LTS is still a great rock-solid platform to build on if you're unable to jump directly to .NET Core immediately or if you want to get off .NET Core's major version release train and build upon a stable LTS platform.

ASP.NET Core - still our top recommendation for .NET Framework

If you need to stay on the .NET Framework, we'd still recommend using the newer ASP.NET Core 2.1 over classic ASP.NET System.Web projects as it's cleaner, lighter, more flexible and future proof. Unlike Microsoft web frameworks, ServiceStack is a single code-base which supports running on multiple platforms so your ServiceStack Services can enjoy near perfect source-code compatibility when and if you choose to move to .NET Core in future.

So whilst Microsoft is stopping new development of ASP.NET Core on .NET Framework, we're not. Our supported packages have standardized on multi-targeting both .NET v4.5+ and .NET Standard 2.0, which is supported natively on ASP.NET Core 2.1.

ServiceStack's multi-targeted Packages

We've opted early on to shun classic ASP.NET providers and use our own clean Session, Caching, Configuration, Logging providers all of which automatically support .NET Standard 2.0 given they're clean library implementations without .NET Framework-only dependencies.

The only features which we can't offer .NET Standard 2.0 builds for are when they referenced external packages which don't offer .NET Standard 2.0 builds, the major examples being:

- ServiceStack.Authentication.OAuth2 which depends on DotNetOpenAuth, of which we've rewritten the last remaining popular OAuth providers in this release to not have any dependencies and

- ServiceStack.Razor which depends on the .NET Framework Microsoft.AspNet.Razor, which we've rewritten on top of ASP.NET Core MVC and which in this release gained features that eclipse the .NET Framework implementation with the exciting new Page-based routing feature.

Future proofed and continually developed

But otherwise all our own home-grown innovations like #Script (fka ServiceStack Templates) naturally support .NET Framework and .NET Core and run everywhere ServiceStack does, including within classic ASP.NET MVC Controllers, which wasn't a design goal but a natural consequence of developing clean libraries without external dependencies or reliance on external tooling.

This is to say that ASP.NET Core 2.1 LTS is still a fantastic rock-solid platform to run your .NET Framework workloads when you need to which will continue to receive enhancements and new features with each ServiceStack release courtesy of being derived from the same shared code-base which will enable seamless migrations to .NET Core should you wish to in future.

Subpar experiences

It's not always a friction-free experience as there have been frequent reports of runtime Assembly binding issues which are not always correctly handled by NuGet package installs and may require manual binding redirects. In general, upgrading to the latest .NET Framework will mitigate these issues.

Also you'll miss out on some niceties like the Microsoft.AspNetCore.App meta-package reference, as a result we recommend starting from one of our ASP.NET Core Framework project Templates which contains all the individual package references needed to get started which we've expanded in this release to include a couple of exciting new project templates...

New Vue and React "lite" ASP.NET Core Templates

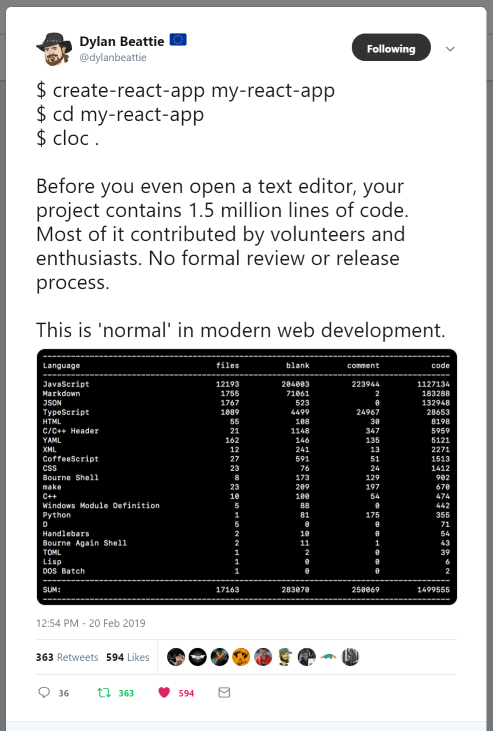

Developing Single Page Apps can often feel like a compromise, on the one hand premier JS frameworks like Vue and React have offered unprecedented simplicity and elegance in developing rich and reactive Single Page Apps on the Web, on the other hand you have to start from:

This is the result of npm's culture for hyper modularization into micro modules where often these dependencies only contain a single function, in some cases something as simple as checking if a number is positive is an npm package all by itself with a single function requiring 3 additional dependencies.

Regardless of the reasons touted for micro modules, they have many negative side-effects where each of these dependencies opens a possible vector making your project susceptible to breaking changes, potential vulnerabilities or potentially malicious code if any of the packages in your dependency tree becomes compromised by a bad actor. The resulting matrix of dependencies often requires you to use complicated tools like Webpack to manage them, which itself can grow to accumulate bespoke complex configuration to manage your projects builds which can quickly become obsolete with each new major Webpack version.

There are also packages that have shunned this trend like typescript - a wonderfully capable Typed superset of JavaScript that assists in maintaining a new class of large-scale code-bases - a clear counter-example showing that you can maintain large, high quality code bases and build highly functional and capable libraries without micro modules.

Introducing new "lite" npm-free project templates

The question we keep asking ourselves is how can ServiceStack make modern Web Development simpler, the natural choice was to provide pre-configured Webpack-powered SPA Project Templates - bringing the recommended SPA development model for all popular SPA frameworks to .NET, which we've been doing successfully and seamlessly integrated with ServiceStack for years.

However the next leap in simplicity won't come from adding additional tooling to manage the complexity, it will come from removing the underlying complexity entirely. Fortunately one of the targets all premier SPA frameworks offer is encapsulated UMD packages, so they can be referenced as a single include in online IDEs like codepen.io and in simple Web Apps that want to gradually adopt these frameworks whilst avoiding the complexity of maintaining an npm build system.

These UMD packages let us return to the simple era of web development where we can reference libraries using simple script includes - which is the strategy embraced in ServiceStack's new "lite" project templates.

Light on Complexity, Big on Features

Surprisingly whilst we're able to rid ourselves of the complexity of maintaining an npm-based build system, we're still able to enjoy many of the features that make SPA development with Webpack a joy:

- Integrated hot-reloading

- Advanced JavaScript language features

- Continue developing with same componentized development model as done when using Webpack

- Future proofed to use optimal ES6 source code

- TypeScript with runtime type-checking verification and auto-complete

- Incremental compilation

- TypeScript declarations are included for all default packages

- Smart, effortless bundling and minification

- Optimal unminified during development and minified for production

- No reliance on external tooling necessary, but can use same bundling configuration in website _layout.html if pre-compilation is preferred

Essentially the goal of the "lite" templates is to provide the richest suite of functionality possible with the least amount of complexity. TypeScript was adopted because it runs as a non-invasive global tool with no dependencies that lets us take advantage of the latest JavaScript language features and develop in modern JavaScript without compromises, using the same source code as a fully-fledged npm Webpack build system, should you wish to upgrade to one in future.

Install

All ServiceStack Project Templates can now be created with our web (or app) .NET Core tool:

$ dotnet tool install -g web

If you previously had an existing web tool installed, update it to the latest version with:

$ dotnet tool update -g web

vue-lite

Browse source code, view vue-lite.web-templates.io live demo and install for .NET Core with:

$ web new vue-lite ProjectName

Alternatively you can create an ASP.NET Core 2.1 LTS project on .NET Framework with:

$ web new vue-lite-corefx ProjectName

react-lite

Browse source code, view react-lite.web-templates.io live demo and install for .NET Core with:

$ web new react-lite ProjectName

Alternatively you can create an ASP.NET Core 2.1 LTS project on .NET Framework with:

$ web new react-lite-corefx ProjectName

Development workflow

All that's needed for client development is to run TypeScript in "watch" mode:

$ tsc -w

Which monitors any changes to any .ts files and incrementally compiles their .js files on save. ServiceStack's built-in static files hot-reloading detects changes to any .js files and automatically reloads the page.

For Server C# development, start your .NET Web App in a new Terminal window with:

$ dotnet watch run

Using watch run will monitor changes to C# source files and automatically rebuild and restart the Server.

Update TypeScript DTOs

After changing your ServiceStack Services, you can re-generate their Typed TypeScript DTOs with:

$ web ts

Which will recursively update and re-generate all *dto.ts in the current and sub directories.

Integrated Bundling

The way to eliminate needing a build and module system comes down to including dependencies in dependent order, which is what ServiceStack's new bundling APIs help with. We'll walk through the vue-lite template to see how this is easily done.

All the bundling logic for all .css and .js resources is contained within the _layout.html page below:

/wwwroot/_layout.html

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8">

<meta name="viewport" content="width=device-width, initial-scale=1.0">

<meta http-equiv="X-UA-Compatible" content="ie=edge">

<title>{{ title ?? 'MyApp' }}</title>

{{ (debug ? '' : '.min') | assignTo: min }}

{{ ['/assets/css/'] | bundleCss({minify:!debug, cache:!debug, disk:!debug, out:`/css/bundle${min}.css`}) }}

</head>

<body>

<i hidden>{{ '/js/hot-fileloader.js' | ifDebugIncludeScript }}</i>

{{page}}

{{ [

`/lib/vue/dist/vue${min}.js`,

`/lib/vue-router/dist/vue-router${min}.js`,

'/lib/vue-class-component/vue-class-component.js',

'/lib/vue-property-decorator/vue-property-decorator.umd.js',

'/lib/@servicestack/client/servicestack-client.umd.js',

] | bundleJs({ minify:!debug, cache:!debug, disk:!debug, out:`/js/lib.bundle${min}.js` }) }}

<script>

var ALIASES = {

'vue': { default: Vue },

'vue-router': { default: VueRouter },

'vue-class-component': VueClassComponent

};

window.exports = {};

window.require = function(name) {

return ALIASES[name] || exports[name] || window[name] || exports;

};

</script>

{{ [

'content:/src/components/',

'content:/src/shared/',

'content:/src/',

] | bundleJs({ minify:!debug, cache:!debug, disk:!debug, out:`/js/bundle${min}.js` }) }}

{{ scripts | raw }}

</body>

</html>

Bundling happens on-the-fly at runtime when the index.html page is requested which is embedded in its nearest _layout.html (above).

CSS Bundling

The first bundle created is the .css bundle that's appropriately located in the <head/> section of the HTML page.

How and where the bundle is written depends on whether the page is loaded in Development (debug) or Release mode:

{{ var min = debug ? '' : '.min' }}

{{ ['/assets/css/'] |> bundleCss({ minify:!debug, cache:!debug, disk:!debug, out:`/css/bundle${min}.css` }) }}

Bundling Options

The bundler will include all target resources specified on the left of bundleCss using the behavior as specified in the argument options on the right:

- minify - whether to minify the .css files before bundling

- cache - whether to use the previous cached version if exists

- disk - whether to save the output bundle to disk or in the In Memory FileSystem

- bundle - whether to bundle all .css in a single file or emit individual <link /> imports

- out - virtual file path where to save the bundle (defaults to /css/bundle{.min}.css)

During development (in DebugMode) this will create an unminified bundle, ignoring any previously cached version, which is saved to the In Memory FileSystem at /css/bundle.css.

Whereas in Release mode it will create a minified bundle, with all subsequent requests using the pre-bundled asset written at /css/bundle.min.css.

No tooling or pre-compilation is required prior to deployment as the bundler will automatically create one if it doesn't already exist.
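To make the two modes concrete, the following sketch derives the same option values the layout passes to bundleCss from a single debug flag. The `BundleOptions` interface and `cssBundleOptions` function are hypothetical names used only for illustration; they mirror the #Script expression above rather than any ServiceStack API.

```typescript
// Hypothetical shape mirroring the options passed to bundleCss in the layout.
interface BundleOptions {
    minify: boolean; // minify sources before bundling
    cache: boolean;  // reuse a previously generated bundle if one exists
    disk: boolean;   // write to disk instead of the in-memory file system
    out: string;     // virtual path of the generated bundle
}

// Derives the options from the debug flag, as the layout's expressions do.
function cssBundleOptions(debug: boolean): BundleOptions {
    const min = debug ? '' : '.min';
    return { minify: !debug, cache: !debug, disk: !debug, out: `/css/bundle${min}.css` };
}

const devOptions = cssBundleOptions(true);      // out is '/css/bundle.css'
const releaseOptions = cssBundleOptions(false); // out is '/css/bundle.min.css'
```

Driving every option from one flag means the same layout works unchanged in both Development and Release.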

All virtual paths are from the wwwroot/ WebRoot. Paths ending with a / indicate to include all .css files in that directory, which are included in DirectoryInfo (alphabetical) order.

If for example you wanted to include your App's default.css before bootstrap.css you can specify it first, where it will be included first and ignored in subsequent references, e.g:

{{ [

'/assets/css/default.css',

'/assets/css/'

] |> bundleCss }}
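The ordering rule can be sketched in a few lines of TypeScript. This is only an approximation of the behavior described above, not the bundler's implementation: `resolveCssSources` and the in-memory `cssFiles` list are hypothetical, and the sketch assumes a directory source only picks up files directly inside that directory.

```typescript
// Hypothetical in-memory stand-in for the files under wwwroot/.
const cssFiles = [
    '/assets/css/site.css',
    '/assets/css/bootstrap.css',
    '/assets/css/default.css',
];

// Expands sources in the order given. A source ending in '/' contributes the
// .css files directly in that directory in alphabetical order; any file that
// was already included by an earlier source is skipped.
function resolveCssSources(sources: string[], allFiles: string[]): string[] {
    const ordered: string[] = [];
    for (const source of sources) {
        const matches = source.endsWith('/')
            ? allFiles
                .filter(f => f.startsWith(source)
                    && f.endsWith('.css')
                    && !f.slice(source.length).includes('/'))
                .sort()
            : allFiles.filter(f => f === source);
        for (const file of matches) {
            if (!ordered.includes(file)) ordered.push(file);
        }
    }
    return ordered;
}

// default.css is listed explicitly, so it comes first and is not repeated
// when the directory is expanded afterwards.
const cssOrder = resolveCssSources(['/assets/css/default.css', '/assets/css/'], cssFiles);
```

Here `cssOrder` starts with default.css, followed by bootstrap.css and site.css.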

Hot Reloading of Static Resources

The script below enables hot-reloading during development:

<i hidden>{{ '/js/hot-fileloader.js' |> ifDebugIncludeScript }}</i>

Where it will automatically reload the page if it detects any modifications to any .html, .js or .css files, configured with:

if (Config.DebugMode)

{

Plugins.Add(new HotReloadFeature {

DefaultPattern = "*.html;*.js;*.css",

VirtualFiles = VirtualFiles // Monitor ContentRoot to detect changes in /src

});

}

The page placeholder is where the page will be rendered inside the Layout template:

{{page}}

JavaScript Library Bundling

The layout creates 2 JavaScript bundles, the first containing all 3rd Party libraries used in the App which is written to /js/lib.bundle{.min}.js using the same bundling options as the bundleCss above:

{{ [

`/lib/vue/dist/vue${min}.js`,

`/lib/vue-router/dist/vue-router${min}.js`,

'/lib/vue-class-component/vue-class-component.js',

'/lib/vue-property-decorator/vue-property-decorator.umd.js',

'/lib/@servicestack/client/servicestack-client.umd.js',

] |> bundleJs({ minify:!debug, cache:!debug, disk:!debug, out:`/js/lib.bundle${min}.js` }) }}

Register UMD Module Mappings

After importing the libraries we need to make the globals registered by the UMD dependencies available under the module name they are imported from.

When they don't match they need to be explicitly registered in the ALIASES object:

<script>

var ALIASES = {

'vue': { default: Vue },

'vue-router': { default: VueRouter },

'vue-class-component': VueClassComponent

};

window.exports = {};

window.require = function(name) {

return ALIASES[name] || exports[name] || window[name] || exports;

};

</script>

Since Vue is imported as a default import:

import Vue from 'vue';

It's expected for require("vue").default to return the module assigned to the Vue global:

(global = global || self, global.Vue = factory());

Dependencies like vue-property-decorator.umd.js and servicestack-client.umd.js that already register themselves under their expected "vue-property-decorator" and "@servicestack/client" module names don't need any manual mappings.
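The lookup can be exercised outside a browser with a small sketch. The `globals` object below stands in for `window`, and its values are placeholder objects rather than the real libraries; `requireShim` is a hypothetical name that follows the same lookup order as the layout's window.require.

```typescript
// Stand-ins for the globals that the UMD builds register on window.
// The values are placeholders for this sketch, not the real libraries.
const globals: Record<string, unknown> = {
    Vue: { name: 'Vue' },
    VueRouter: { name: 'VueRouter' },
    VueClassComponent: { name: 'VueClassComponent' },
    // Already registered under its module name, so it needs no alias.
    '@servicestack/client': { name: 'servicestack-client' },
};

// Module name -> value that require() should return when the global name differs.
const aliases: Record<string, unknown> = {
    'vue': { default: globals['Vue'] },
    'vue-router': { default: globals['VueRouter'] },
    'vue-class-component': globals['VueClassComponent'],
};

// Shared object that the App's own compiled modules write their exports to.
const moduleExports: Record<string, unknown> = {};

// Same lookup order as the layout: aliases, then exports, then globals,
// falling back to the shared exports object for any other name.
function requireShim(name: string): unknown {
    return aliases[name] || moduleExports[name] || globals[name] || moduleExports;
}

// `import Vue from 'vue'` compiles to a read of require('vue').default.
const vueModule = requireShim('vue') as { default: unknown };
const defaultIsVueGlobal = vueModule.default === globals['Vue'];

// A name that matches its registration resolves without any alias.
const clientModule = requireShim('@servicestack/client');
```

Any name that isn't found, such as a relative import between App source files, falls through to the shared exports object, which is why the App's modules can see each other's exports once they are bundled in dependent order.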

App Source Code Bundling

The last js bundle created is your App's source code which also needs to be imported in dependent order, both vue-lite and react-lite project templates share the same structure so their bundle configuration is identical where /src/components contains each page defined as a separate component, the /src/shared contains any shared functionality used by the different components whilst the base /src folder contains your App's entry point:

{{ [

'content:/src/components/',

'content:/src/shared/',

'content:/src/',

] |> bundleJs({ minify:!debug, cache:!debug, disk:!debug, out:`/js/bundle${min}.js` }) }}

Bundling Path Options

The content: prefix specifies that the virtual path is from the ContentRoot directory, in this case so your App source code is maintained outside of the wwwroot/ WebRoot.

Possible values include:

- web: - Web Root folder (default)

- content: - Content Root folder

- filesystem: - The FileSystem VFS provider in the Web Root's cascading Virtual File Sources

- memory: - The Memory VFS provider in the Web Root's cascading Virtual File Sources
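As an illustration of how such prefixes could be interpreted, the sketch below splits an optional source prefix from a virtual path and falls back to the Web Root when none is given. `parseBundlePath`, `BundlePath` and `VfsSource` are hypothetical names for this example only; the bundler's real parsing may differ.

```typescript
type VfsSource = 'web' | 'content' | 'filesystem' | 'memory';

const VFS_SOURCES: VfsSource[] = ['web', 'content', 'filesystem', 'memory'];

interface BundlePath {
    source: VfsSource; // which virtual file source to read from
    path: string;      // virtual path within that source
}

// Splits an optional "source:" prefix from a virtual path, defaulting to 'web'.
function parseBundlePath(virtualPath: string): BundlePath {
    const colon = virtualPath.indexOf(':');
    if (colon > 0) {
        const prefix = virtualPath.slice(0, colon) as VfsSource;
        if (VFS_SOURCES.includes(prefix)) {
            return { source: prefix, path: virtualPath.slice(colon + 1) };
        }
    }
    return { source: 'web', path: virtualPath };
}

const appSource = parseBundlePath('content:/src/components/'); // content root, '/src/components/'
const webAsset = parseBundlePath('/assets/css/');              // web root (default), '/assets/css/'
```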

Finally the scripts argument is written (unencoded) after the library and App Source code bundles, where it contains any additional scripts that individual pages want to include at the bottom of the page:

{{ scripts | raw }}

Pre-compiled minified production bundles

Whilst not required you can copy the exact same bundling configuration in your _layout.html above into a separate /wwwroot/_bundle.ss script:

{{* run in host project directory with `web run wwwroot/_bundle.ss` *}}

{{ var debug = false }}

{{ var min = debug ? '' : '.min' }}

{{ [`/css/bundle${min}.css`,`/js/lib.bundle${min}.js`,`/js/bundle${min}.js`] |> map => fileDelete(it) |> end }}

{{* Copy same bundle definitions from _layout.html as-is *}}

{{ ['/assets/css/'] |> bundleCss({ minify:!debug, cache:!debug, disk:!debug, out:`/css/bundle${min}.css` }) }}

{{ [

`/lib/vue/dist/vue${min}.js`,

`/lib/vue-router/dist/vue-router${min}.js`,

'/lib/vue-class-component/vue-class-component.js',

'/lib/vue-property-decorator/vue-property-decorator.umd.js',

'/lib/@servicestack/client/servicestack-client.umd.js',

] |> bundleJs({ minify:!debug, cache:!debug, disk:!debug, out:`/js/lib.bundle${min}.js` }) }}

{{ [

'content:/src/components/',

'content:/src/shared/',

'content:/src/',

] |> bundleJs({ minify:!debug, cache:!debug, disk:!debug, out:`/js/bundle${min}.js` }) }}

Then run it with:

$ web run wwwroot/_bundle.ss

Which will create the production bundles, minify any bundles that aren't already minified and write them to disk, with the written paths visible in the #Script _bundle.ss output:

<link rel="stylesheet" href="/css/bundle.min.css">

<script src="/js/lib.bundle.min.js"></script>

<script src="/js/bundle.min.js"></script>

The bundles created by running _bundle.ss benefit from more advanced compression courtesy of the web tool's use of NUglify's smarter and more advanced JS, CSS and HTML minifiers.

If you encounter any issues you can revert back to using ServiceStack's built-in JSMin and CssMinifier implementations by adding these script arguments at the top of your _bundle.ss script:

<!--

jsMinifier ServiceStack

cssMinifier ServiceStack

-->

Minified bundles with cache breakers

Cache Breaker support is available with the [hash] placeholder, which we only want to include in minified bundles.

In this case we need to perform a file pattern search to find and delete any existing generated bundles:

{{* run in host project directory with `web run wwwroot/_bundle.ss` *}}

{{ false | assignTo: debug }}

{{ (debug ? '' : '[hash].min') | assignTo: min }}

{{ [`/css/bundle${min}.css`,`/js/lib.bundle${min}.js`,`/js/bundle${min}.js`]

| map => filesFind(replace(it,'[hash]','.*'))

| flatten

| map => fileDelete(it.VirtualPath) | end }}

{{* Copy same bundle definitions from _layout.html as-is *}}

{{ ['/assets/css/'] | bundleCss({ minify:!debug, cache:!debug, disk:!debug, out:`/css/bundle${min}.css` }) }}

{{ [

`/lib/vue/dist/vue${min}.js`,

`/lib/vue-router/dist/vue-router${min}.js`,

'/lib/vue-class-component/vue-class-component.js',

'/lib/vue-property-decorator/vue-property-decorator.umd.js',

'/lib/@servicestack/client/servicestack-client.umd.js',

] | bundleJs({ minify:!debug, cache:!debug, disk:!debug, out:`/js/lib.bundle${min}.js` }) }}

{{ [

'content:/src/components/',

'content:/src/shared/',

'content:/src/',

] | bundleJs({ minify:!debug, cache:!debug, disk:!debug, out:`/js/bundle${min}.js` }) }}

Running the _bundle.ss script again will then output minified bundles with cache breakers:

<link rel="stylesheet" href="/css/bundle.1549858174979.min.css">

<script src="/js/lib.bundle.155190192923.min.js"></script>

<script src="/js/bundle.1551907971028.min.js"></script>

When using [hash] cache breakers the bundle APIs will use any existing generated bundles they find, so you'll need to ensure that any older minified assets are removed (as done in the above script).
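The find-and-delete step can be sketched as plain string matching. This is an illustration under stated assumptions: judging by the output above, [hash] expands to a dot followed by a numeric stamp, and `expandHash`, `escapeRegExp` and `findGenerated` are hypothetical helpers rather than part of the bundling APIs.

```typescript
// Expands the [hash] placeholder. The "dot + numeric stamp" format is an
// assumption of this sketch, inferred from the generated file names above.
function expandHash(pathTemplate: string, stamp: number): string {
    return pathTemplate.replace('[hash]', `.${stamp}`);
}

// Escapes characters that have a special meaning in a regular expression.
function escapeRegExp(text: string): string {
    return text.replace(/[.*+?^${}()|[\]\\]/g, '\\$&');
}

// Returns the previously generated bundles matching the template, whatever
// their stamp. Templates without [hash] only match their exact path.
function findGenerated(pathTemplate: string, existing: string[]): string[] {
    if (!pathTemplate.includes('[hash]')) {
        return existing.filter(path => path === pathTemplate);
    }
    const [before, after] = pathTemplate.split('[hash]');
    const pattern = new RegExp(`^${escapeRegExp(before)}\\.\\d+${escapeRegExp(after)}$`);
    return existing.filter(path => pattern.test(path));
}

const bundleTemplate = '/js/bundle[hash].min.js';
const staleBundles = findGenerated(bundleTemplate, [
    '/js/bundle.1551907971028.min.js',     // older build of this bundle
    '/js/lib.bundle.1551901929230.min.js', // a different bundle
    '/js/bundle.min.js',                   // no cache breaker
]);
const freshBundle = expandHash(bundleTemplate, 1560000000000);
```

Only the first path is reported as stale, so deleting `staleBundles` before writing `freshBundle` leaves exactly one generated bundle per template.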

Available in Razor Helpers

The same bundling implementations as above are also available in ServiceStack Razor as Html.BundleJs(), Html.BundleCss() and Html.BundleHtml(), where they can be used like:

@Html.BundleJs(new BundleOptions {

Sources = {

"content:/src/components/",

"content:/src/shared/",

"content:/src/",

},

Minify = !DebugMode,

Cache = !DebugMode,

SaveToDisk = !DebugMode,

OutputTo = $"/js/bundle{min}.js",

})

vue-lite Project Template features

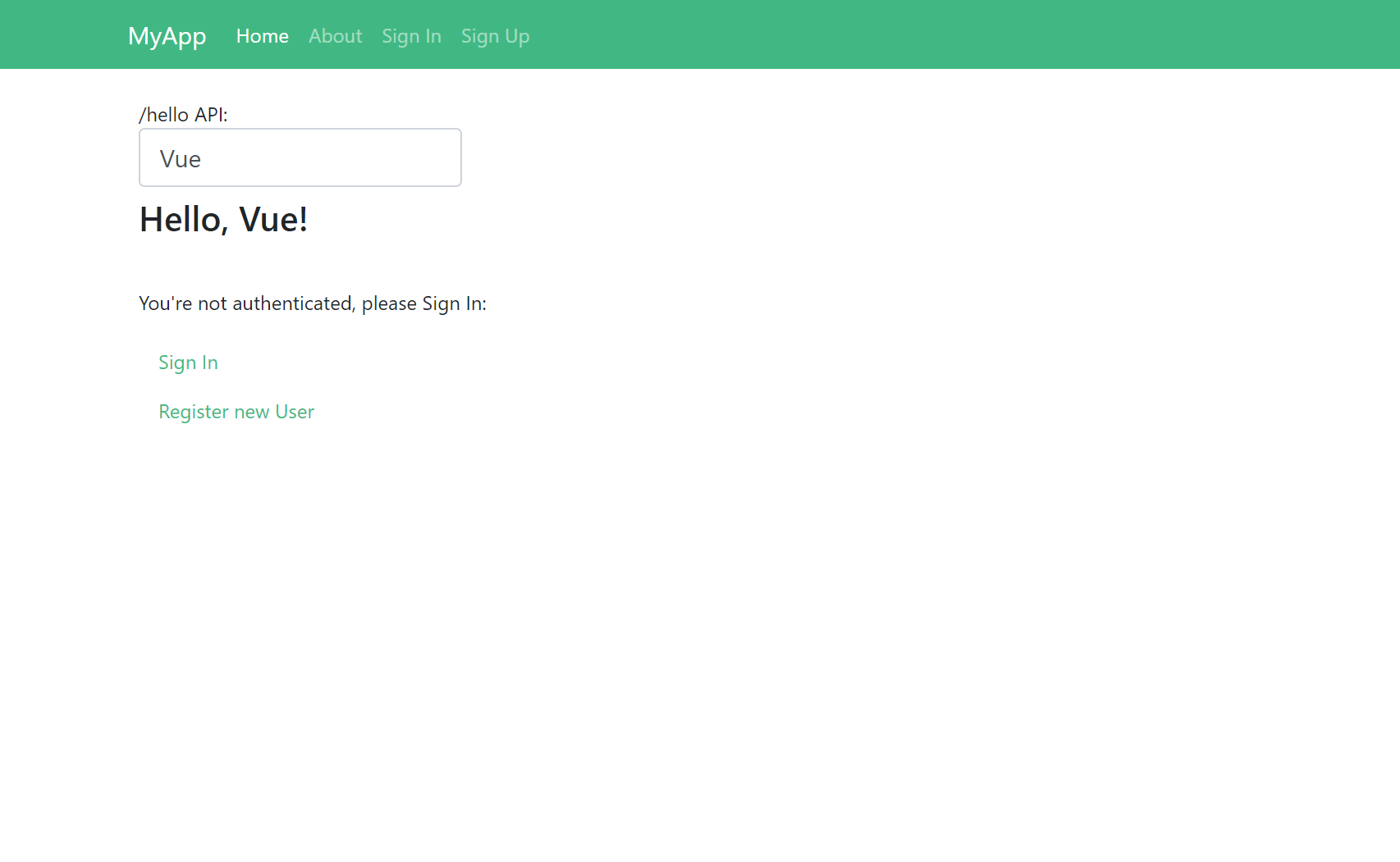

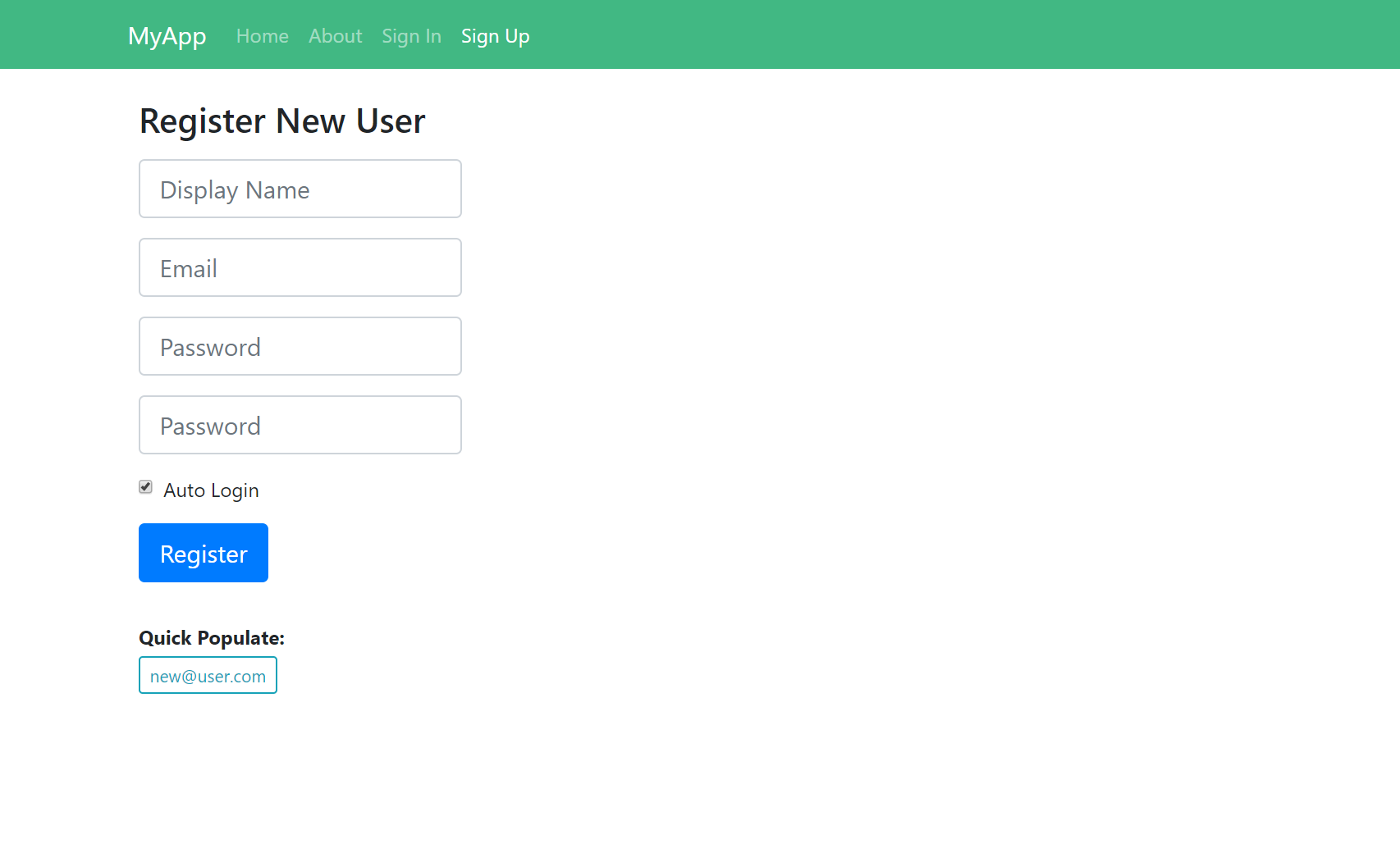

vue-lite comes pre-configured with a lot of the functionality needed in most Single Page Apps including client-side routing in /shared/router.ts and Sign In and Registration pages, both of which are integrated with ServiceStack's declarative form validation and auto-binding.

Form Validation Example

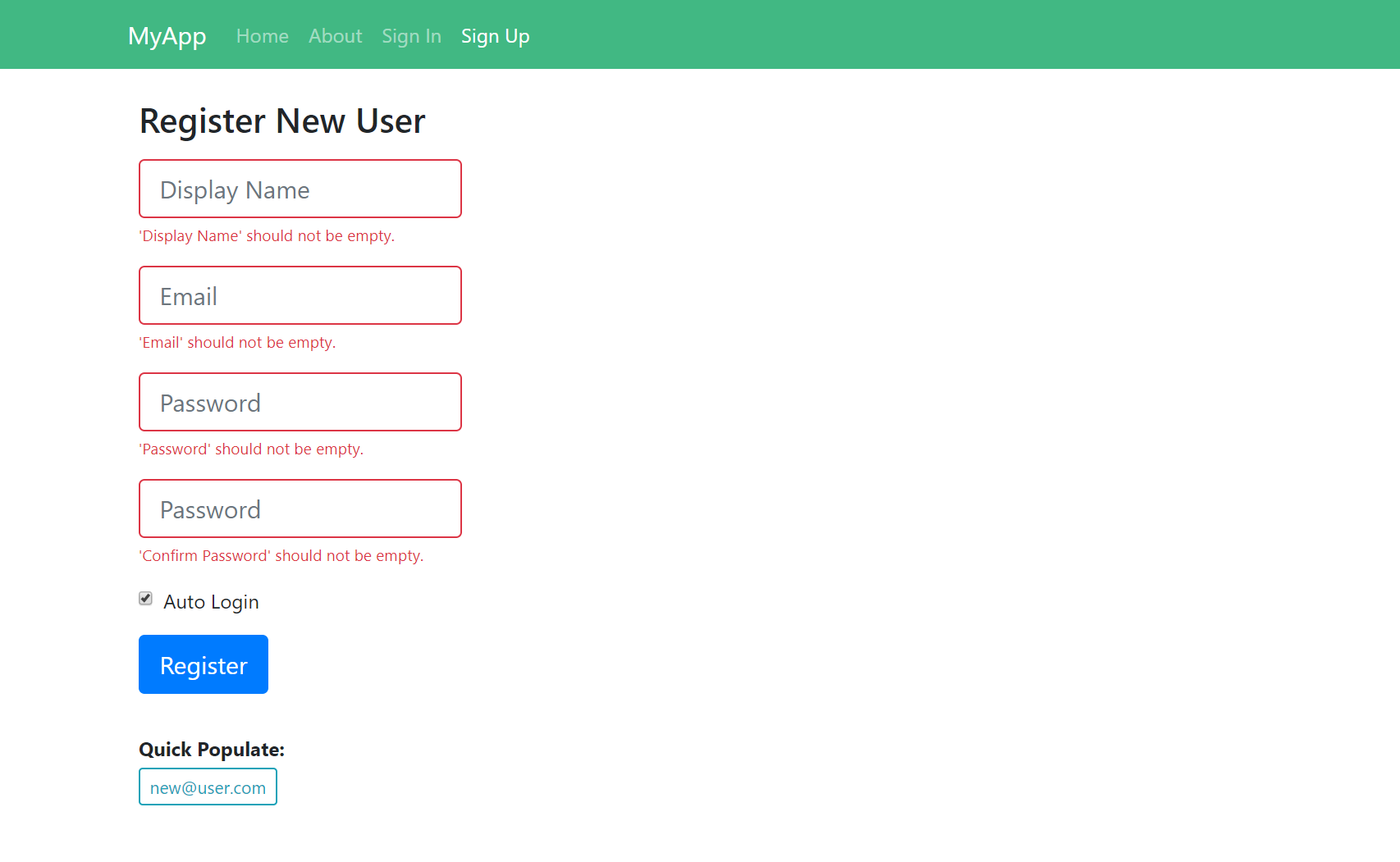

The Sign Up Page shows a typical example of auto-form validation with ServiceStack which can be developed using clean declarative markup:

@Component({ template:

`<div>

<h3>Register New User</h3>

<form ref="form" @submit.prevent="submit" :class="{ error:responseStatus, loading }" >

<div class="form-group">

<ErrorSummary except="displayName,email,password,confirmPassword" :responseStatus="responseStatus" />

</div>

<div class="form-group">

<Input name="displayName" v-model="displayName" placeholder="Display Name" :responseStatus="responseStatus" />

</div>

<div class="form-group">

<Input name="email" v-model="email" placeholder="Email" :responseStatus="responseStatus" />

</div>

<div class="form-group">

<Input type="password" name="password" v-model="password" placeholder="Password" :responseStatus="responseStatus" />

</div>

<div class="form-group">

<Input type="password" name="confirmPassword" v-model="confirmPassword" placeholder="Password" :responseStatus="responseStatus" />

</div>

<div class="form-group">

<CheckBox name="autoLogin" v-model="autoLogin" :responseStatus="responseStatus">

Auto Login

</CheckBox>

</div>

<div class="form-group">

<button class="btn btn-lg btn-primary" type="submit">Register</button>

</div>

<div class="pt-3">

<b>Quick Populate:</b>

<p class="pt-1">

<a class="btn btn-outline-info btn-sm" href="javascript:void(0)" @click.prevent="newUser('new@user.com')">new@user.com</a>

</p>

</div>

</form>

</div>`

})

Which renders into the following Bootstrap Form UI:

All custom controls used are defined in /shared/controls.ts which encapsulate the label and input controls and their validation error bindings within reusable Vue components.
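The binding those controls perform can be approximated with two small lookups against the error response. The `ResponseStatus` and `ResponseError` shapes below list only the fields this sketch relies on, and `fieldError` and `summaryError` are hypothetical helpers; the template's own controls may resolve errors differently.

```typescript
// Only the fields this sketch relies on.
interface ResponseError {
    fieldName: string;
    errorCode: string;
    message: string;
}

interface ResponseStatus {
    errorCode: string;
    message: string;
    errors?: ResponseError[];
}

// Hypothetical helper: the message to display beside a given input, if any.
function fieldError(status: ResponseStatus | null, fieldName: string): string | undefined {
    const errors = status?.errors ?? [];
    const match = errors.find(e => e.fieldName.toLowerCase() === fieldName.toLowerCase());
    return match?.message;
}

// Hypothetical helper: the summary message, suppressed when one of the
// excepted fields already displays the error beside its own input.
function summaryError(status: ResponseStatus | null, except: string[]): string | undefined {
    if (!status) return undefined;
    const shownByField = (status.errors ?? []).some(e =>
        except.some(name => name.toLowerCase() === e.fieldName.toLowerCase()));
    return shownByField ? undefined : status.message;
}

// Sample error for an empty email field (values made up for illustration).
const sampleStatus: ResponseStatus = {
    errorCode: 'NotEmpty',
    message: "'Email' must not be empty.",
    errors: [{ fieldName: 'Email', errorCode: 'NotEmpty', message: "'Email' must not be empty." }],
};

const emailMessage = fieldError(sampleStatus, 'email');       // shown beside the email input
const passwordMessage = fieldError(sampleStatus, 'password'); // undefined: no error for this field
const summaryMessage = summaryError(sampleStatus, ['displayName', 'email']); // undefined: email shows it
```

Passing the same responseStatus to every control is what lets each one pick out its own error without the page wiring them up individually.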

Validation Error Binding

All validation errors are sourced from the Component's this.responseStatus reactive property, populated by any Exceptions thrown when using ServiceStack's TypeScript JsonServiceClient, which in this case is used to register the user by sending the Register Request DTO generated in /shared/dtos.ts:

export class SignUp extends Vue {

displayName = ''

email = ''

password = ''

confirmPassword = ''

autoLogin = true

loading = false

responseStatus = null

async submit() {

try {

this.loading = true;

this.responseStatus = null;

const response = await client.post(new Register({

displayName: this.displayName,

email: this.email,

password: this.password,

confirmPassword: this.confirmPassword,

autoLogin: this.autoLogin,

}));

await checkAuth();

redirect('/');

} catch (e) {

this.responseStatus = e.responseStatus || e;

} finally {

this.loading = false;

}

}

newUser(email:string) {

const names = email.split('@');

this.displayName = toPascalCase(names[0]) + " " + toPascalCase(splitOnFirst(names[1],'.')[0]);

this.email = email;

this.password = this.confirmPassword = 'p@55wOrd';

}

}

This is all it takes to render any server validation errors against their respective fields which we can test by submitting an empty form:
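To make the field binding more concrete, here's a minimal sketch of how a control could pick its own error out of a ServiceStack ResponseStatus. The fieldError helper and the sample data are hypothetical and only illustrate the lookup; they are not the implementation used in /shared/controls.ts:

```typescript
// Shape of ServiceStack's ResponseStatus error DTO as serialized to JSON.
interface ResponseError {
    errorCode: string;
    fieldName: string;
    message: string;
}
interface ResponseStatus {
    errorCode: string;
    message: string;
    errors?: ResponseError[];
}

// Hypothetical helper: returns the error message for a field, or undefined
// when the field has no error. Names are compared case-insensitively since
// server property names are often PascalCase while form names are camelCase.
function fieldError(status: ResponseStatus | null, fieldName: string): string | undefined {
    if (!status || !status.errors) return undefined;
    const name = fieldName.toLowerCase();
    const match = status.errors.find(e => e.fieldName.toLowerCase() === name);
    return match ? match.message : undefined;
}

// Made-up sample of what submitting an empty form might return.
const sample: ResponseStatus = {
    errorCode: 'NotEmpty',
    message: "'Email' must not be empty.",
    errors: [
        { errorCode: 'NotEmpty', fieldName: 'Email', message: "'Email' must not be empty." },
        { errorCode: 'NotEmpty', fieldName: 'Password', message: "'Password' must not be empty." },
    ],
};

const emailError = fieldError(sample, 'email');      // message for the email field
const nameError = fieldError(sample, 'displayName'); // undefined, no error for this field
```

Each Input only needs its own name and the shared responseStatus to decide whether to render an error.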

Vue Global State Management

Instead of immediately reaching for Vuex, we've kept the templates "lite" by leveraging existing

functionality built into the core libraries. So for global state management we're using a global Vue instance as a pub/sub EventBus that

our decoupled components use to update global state and listen for events.

This is used by checkAuth to post an empty Authenticate DTO to ServiceStack to check if the user is still authenticated on the server, which, depending on whether they're authenticated, either returns basic session info or fails with a 401 error response, which the pub/sub event listeners use to update its global state:

export const store:Store = {

isAuthenticated: false,

userSession: null,

};

class EventBus extends Vue {

store = store

}

export var bus = new EventBus({ data: store });

bus.$on('signin', (userSession:AuthenticateResponse) => {

bus.$set(store, 'isAuthenticated', true);

bus.$set(store, 'userSession', userSession);

})

export const checkAuth = async () => {

try {

bus.$emit('signin', await client.post(new Authenticate()));

} catch (e) {

bus.$emit('signout');

}

}
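The pub/sub pattern above doesn't depend on anything Vue-specific. As a rough sketch, the same flow can be reproduced with a tiny hand-rolled bus; MiniBus, appStore and the Session shape below are hypothetical stand-ins for the template's EventBus, store and AuthenticateResponse:

```typescript
type Handler = (payload?: unknown) => void;

// Minimal stand-in for the $on/$emit pair used by the template's EventBus.
class MiniBus {
    private handlers = new Map<string, Handler[]>();

    on(event: string, handler: Handler): void {
        const list = this.handlers.get(event) ?? [];
        list.push(handler);
        this.handlers.set(event, list);
    }

    emit(event: string, payload?: unknown): void {
        for (const handler of this.handlers.get(event) ?? []) handler(payload);
    }
}

interface Session { userName: string }

const appStore: { isAuthenticated: boolean; userSession: Session | null } = {
    isAuthenticated: false,
    userSession: null,
};

const miniBus = new MiniBus();

// Listeners own the global state changes; emitters only announce what happened.
miniBus.on('signin', session => {
    appStore.isAuthenticated = true;
    appStore.userSession = session as Session;
});
miniBus.on('signout', () => {
    appStore.isAuthenticated = false;
    appStore.userSession = null;
});

miniBus.emit('signin', { userName: 'new@user.com' });
const afterSignIn = { ...appStore };   // snapshot while signed in
miniBus.emit('signout');
const afterSignOut = { ...appStore };  // snapshot after signing out
```

Components that emit events never touch the store directly, which is what keeps them decoupled.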

react-lite Project Template features

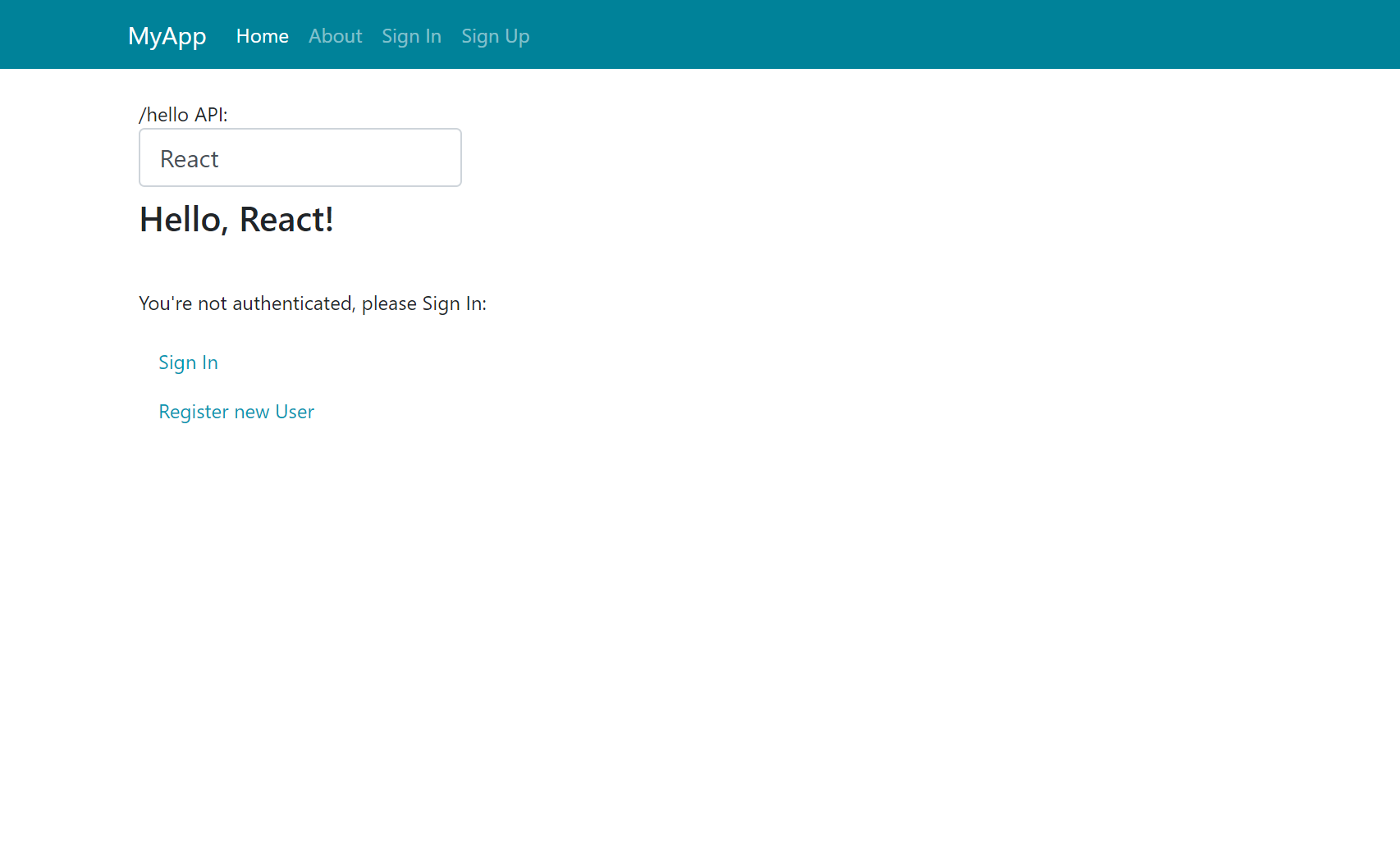

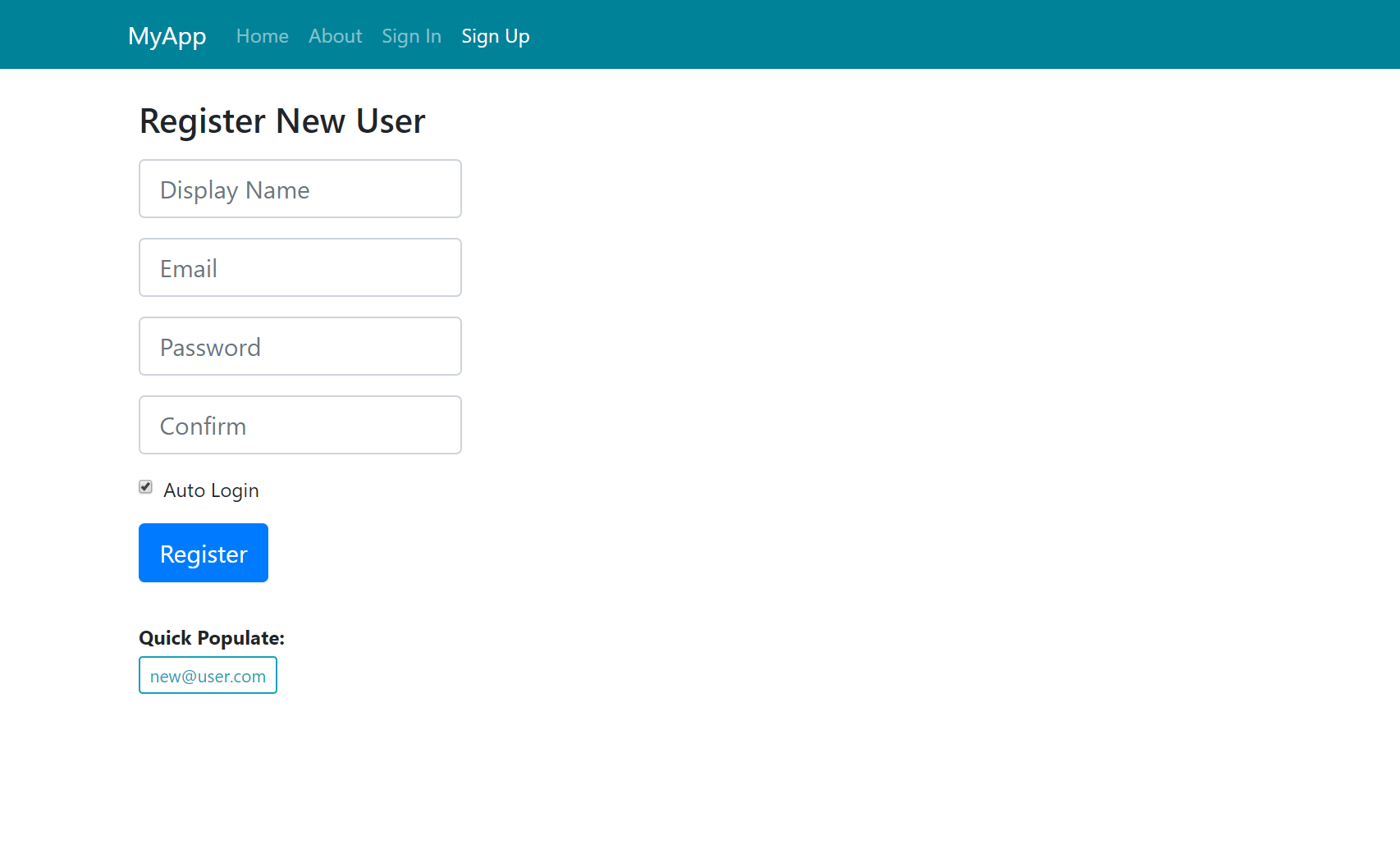

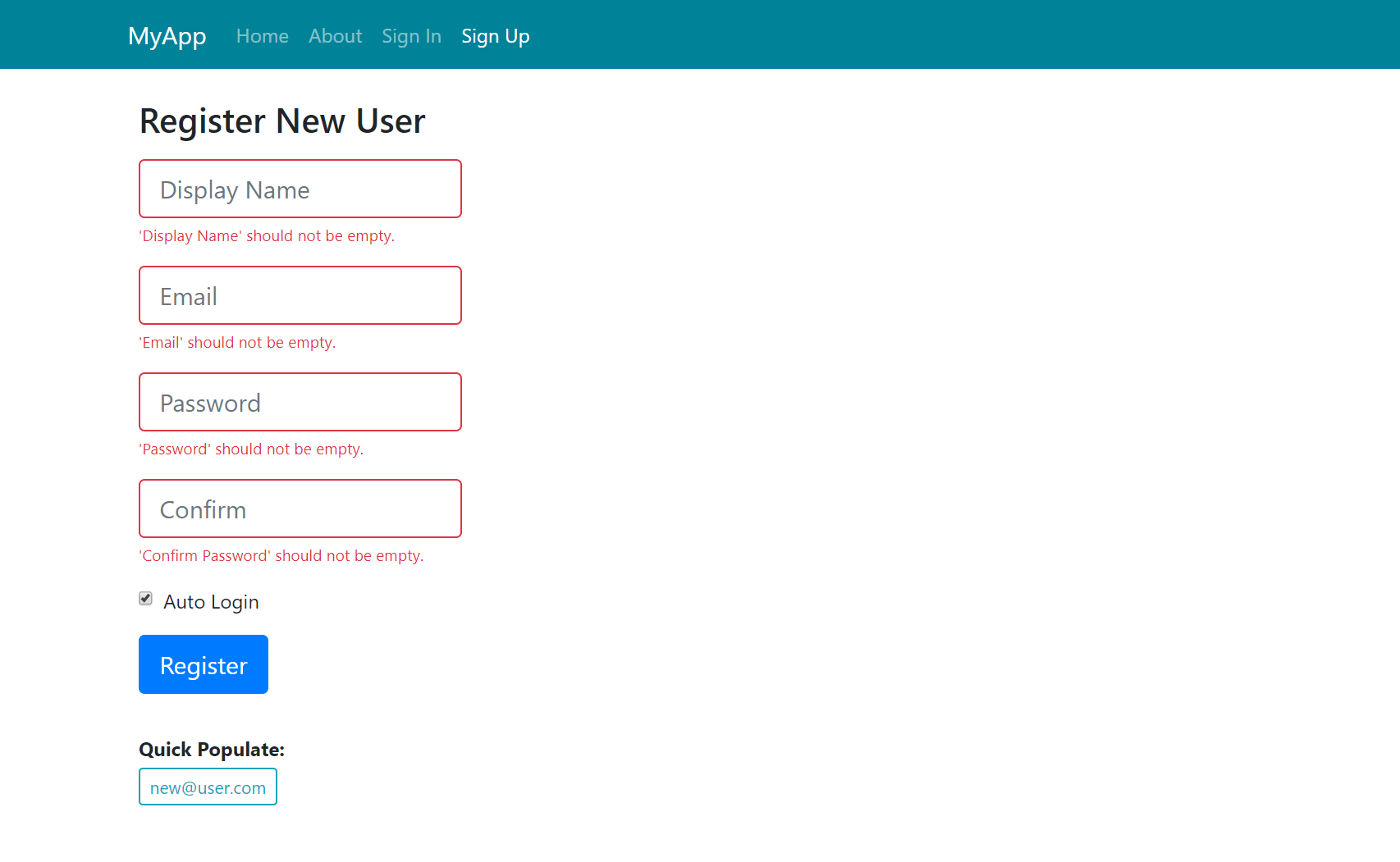

The react-lite template is functionally equivalent to vue-lite but created using the latest React features. For client-side routing we use React Router's declarative markup defined in main.tsx.

All components are written as Functional Components and make use of React's new Hooks functionality which enables functional components to retain local state. Just like vue-lite, all high-level controls are encapsulated into reusable functional components defined in /shared/controls.tsx, which end up retaining similar markup to vue-lite despite their completely different implementations:

export const SignUpImpl: React.SFC<any> = ({ history }) => {

const {state, dispatch} = useContext(StateContext);

const [loading, setLoading] = useState(false);

const [responseStatus, setResponseStatus] = useState(null);

const [displayName, setDisplayName] = useState('');

const [email, setEmail] = useState('');

const [password, setPassword] = useState('');

const [confirmPassword, setConfirmPassword] = useState('');

const [autoLogin, setAutoLogin] = useState(true);

const newUser = (email:string) => {

const names = email.split('@');

setDisplayName(toPascalCase(names[0]) + " " + toPascalCase(splitOnFirst(names[1],'.')[0]));

setEmail(email);

setPassword('p@55wOrd');

setConfirmPassword('p@55wOrd');

}

const submit = async () => {

try {

setLoading(true);

setResponseStatus(null);

const response = await client.post(new Register({

displayName,

email,

password,

confirmPassword,

autoLogin,

}));

await checkAuth(dispatch);

setLoading(false);

(history as History).push('/');

} catch (e) {

setResponseStatus(e.responseStatus || e);

setLoading(false);

}

};

return (<div>

<h3>Register New User</h3>

<form className={classNames({error:responseStatus, loading})}

onSubmit={async e => { e.preventDefault(); await submit(); }}>

<div className="form-group">

<ErrorSummary responseStatus={responseStatus} except={'displayName,email,password,confirmPassword'} />

</div>

<div className="form-group">

<Input type="text" name="displayName" value={displayName} onChange={setDisplayName} responseStatus={responseStatus} placeholder="Display Name" />

</div>

<div className="form-group">

<Input type="text" name="email" value={email} onChange={setEmail} responseStatus={responseStatus} placeholder="Email" />

</div>

<div className="form-group">

<Input type="password" name="password" value={password} onChange={setPassword} responseStatus={responseStatus} placeholder="Password" />

</div>

<div className="form-group">

<Input type="password" name="confirmPassword" value={confirmPassword} onChange={setConfirmPassword} responseStatus={responseStatus} placeholder="Confirm" />

</div>

<div className="form-group">

<CheckBox name="autoLogin" checked={autoLogin} onChange={setAutoLogin} responseStatus={responseStatus}>

Auto Login

</CheckBox>

</div>

<div className="form-group">

<button className="btn btn-lg btn-primary" type="submit">Register</button>

</div>

<div className="pt-3">

<b>Quick Populate:</b>

<p className="pt-1">

<a className="btn btn-outline-info btn-sm" href="javascript:void(0)" onClick={() => newUser('new@user.com')}>new@user.com</a>

</p>

</div>

</form>

</div>);

}

export const SignUp = withRouter(SignUpImpl);

Which renders the same Bootstrap form UI:

Despite React and Vue's stylistic differences the ServiceStack integration remains the same where the populated Register Request DTO

in /shared/dtos.ts is used to register the User with any failures used to

populate the responseStatus local state where it's reactively referenced in all Input components to render field validation errors against their targeted control:

React Global State Management

Likewise with global state management we've leveraged existing functionality instead of depending on an external state library like Redux or MobX.

Instead react-lite uses React's new useReducer hook within a global StateContext which is made available to all components using React's Context, where they're used to dispatch actions that mutate global state:

const initialState: State = {

isAuthenticated: false,

userSession: null

};

const reducer = (state:State, action:Action) => {

switch (action.type) {

case 'signin':

return { ...state, isAuthenticated:true, userSession:action.data };

case 'signout':

return { ...state, isAuthenticated:false, userSession:null };

default:

throw new Error();

}

}

export const StateContext = createContext({} as Context);

export const StateProvider = (props:any) => {

const [state, dispatch] = useReducer(reducer, initialState);

return (<StateContext.Provider value={ { state, dispatch } }>{props.children}</StateContext.Provider>);

}

type Dispatch = React.Dispatch<Action>;

export const checkAuth = async (dispatch:Dispatch) => {

try {

dispatch({ type: 'signin', data: await client.post(new Authenticate()) });

} catch (e) {

dispatch({ type: 'signout' });

}

};
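Because a reducer is a pure function, it can be exercised without React at all. The sketch below restates the same two transitions with a discriminated Action union; the Session shape and the reduce name are assumptions made for illustration:

```typescript
interface Session { userName: string }
interface State { isAuthenticated: boolean; userSession: Session | null }

// A discriminated union lets the compiler check that 'signin' carries data.
type Action =
    | { type: 'signin'; data: Session }
    | { type: 'signout' };

// Pure function: returns a new State and never mutates the one passed in.
const reduce = (state: State, action: Action): State => {
    switch (action.type) {
        case 'signin':
            return { ...state, isAuthenticated: true, userSession: action.data };
        case 'signout':
            return { ...state, isAuthenticated: false, userSession: null };
    }
};

const start: State = { isAuthenticated: false, userSession: null };
const signedIn = reduce(start, { type: 'signin', data: { userName: 'new@user.com' } });
const signedOut = reduce(signedIn, { type: 'signout' });
```

Since each call returns a fresh object, start is left untouched after both transitions.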

"lite" Project Structure

Unlike most other project templates which follow our Recommended Physical Project Structure, the "lite" project templates are all within a single project as it's more suitable for smaller projects and can be developed using lightweight IDEs like VS Code which don't work well with multi-project solutions.

So what would've been separate projects are maintained in separate folders:

Where they still retain the same source code and namespaces and can easily be moved out into a different project when wanting to upgrade to a multi-project solution.

Updating "lite" project dependencies

We've also enabled a novel approach for updating your "lite" project 3rd Party dependencies where instead of everyone maintaining their own bespoke configuration and a tool like libman for updating their local dependencies, vue-lite projects can just run:

$ web +vue-lite-lib

To update their vue-lite projects with the latest JS libraries and TypeScript definitions used in the default project template.

For react-lite projects, run:

$ web +react-lite-lib

We'll cover how this works in more detail when we announce our web tool's new capabilities below.

Empty MemoryVirtualFiles now registered in VirtualFileSources

To enable shadowing of the WebRoot cascading Virtual File Sources, an empty MemoryVirtualFiles has been added to

InsertVirtualFileSources by default where it gets inserted at the start of VirtualFileSources, i.e:

new AppHost {

InsertVirtualFileSources = { new MemoryVirtualFiles() }

}

If needed, the individual Memory and FileSystem VFS providers in the WebRoot VFS Sources can be accessed with:

var memFs = appHost.VirtualFileSources.GetMemoryVirtualFiles();

var diskFs = appHost.VirtualFileSources.GetFileSystemVirtualFiles();

Which are also available from the HostContext singleton:

- HostContext.MemoryVirtualFiles - WebRoot MemoryVirtualFiles

- HostContext.FileSystemVirtualFiles - WebRoot FileSystem

The WebRoot Directory and ContentRoot Directories are also available from:

- HostContext.RootDirectory - WebRoot wwwroot/

- HostContext.ContentRootDirectory - ContentRoot /
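The shadowing that the inserted MemoryVirtualFiles enables comes down to lookup order: the first source that has a file wins. A simplified model of that cascade is sketched below; FileSource, MemorySource and resolveFile are hypothetical names and not ServiceStack's Virtual File System API:

```typescript
// Hypothetical, simplified model of a virtual file source: path -> contents.
interface FileSource {
    getFile(path: string): string | undefined;
}

class MemorySource implements FileSource {
    private files = new Map<string, string>();
    writeFile(path: string, contents: string): void { this.files.set(path, contents); }
    getFile(path: string): string | undefined { return this.files.get(path); }
}

// Returns the file from the first source that has it, so earlier sources
// shadow later ones.
function resolveFile(sources: FileSource[], path: string): string | undefined {
    for (const source of sources) {
        const file = source.getFile(path);
        if (file !== undefined) return file;
    }
    return undefined;
}

const disk = new MemorySource();    // stands in for the FileSystem source
disk.writeFile('/index.html', 'from disk');
disk.writeFile('/about.html', 'about from disk');

const memory = new MemorySource();  // the empty in-memory source, inserted first
const sources: FileSource[] = [memory, disk];

const before = resolveFile(sources, '/index.html');     // served from disk
memory.writeFile('/index.html', 'from memory');
const after = resolveFile(sources, '/index.html');      // now shadowed by memory
const untouched = resolveFile(sources, '/about.html');  // still served from disk
```

An empty source at the front changes nothing until something is written to it, which is why it's safe to register by default.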

Sharp Script

sharpscript.net

#Script (fka ServiceStack Templates)

As we continue enhancing ServiceStack's scripting support with exciting new features, it no longer made sense to call our dynamic scripting language

"Templates" which is just one of the many use-cases #Script enables.

#Script is typical of a popular dynamic template language you'd find in other platforms, using the ubiquitously familiar mix of

JavaScript Expressions which for increased wrist-friendly readability can be easily composed

together using the Unix | operator as embraced by Vue.js filters and

Angular's Template Expressions

whilst the Script Statement Blocks adopt the universally adopted Handlebars-like syntax that's ideal for

rendering dynamic pages.
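As a rough mental model of the pipe syntax, each method on the right receives the value on its left as its first argument, followed by any arguments written in the call. The sketch below imitates that with a plain reduce; pipeline, Step and the two sample methods are illustrative names only and say nothing about how #Script is implemented:

```typescript
type Method = (target: unknown, ...args: unknown[]) => unknown;
interface Step { method: Method; args?: unknown[] }

// Feeds the running value into each step as the first argument.
function pipeline(seed: unknown, steps: Step[]): unknown {
    return steps.reduce(
        (value, step) => step.method(value, ...(step.args ?? [])),
        seed);
}

// Two sample methods written for this sketch.
const upper: Method = target => String(target).toUpperCase();
const append: Method = (target, suffix) => String(target) + String(suffix);

// Similar in spirit to a script expression like: {{ 'hi' | upper | append('!') }}
const piped = pipeline('hi', [
    { method: upper },
    { method: append, args: ['!'] },
]);
```

Reading left to right, 'hi' becomes 'HI' and then 'HI!', the same order the expression is written in.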

#Script is contained within the pure ServiceStack.Common library which, as it doesn't require any compilation or reliance on any external build tools, is embeddable within any .NET v4.5 or .NET Standard 2.0 App, even within Environments that don't allow Reflection.Emit thanks to the cascading implementations of Reflection Utils.

#Script is also completely customizable where all the script methods and blocks can easily be removed or shadowed and replaced to create your

own DSL language. Alternatively you can use its AST parsing APIs directly to create, parse and evaluate ASTs

from free-form JavaScript expressions.

Optimal for generating HTML and Live Scripting Environments

We're staunch proponents for using typed languages like C# for developing compiler-checked server software but we prefer using dynamic languages for creating UIs which are typically constantly changing, single purpose "end-user scripts" where we believe it's more valuable to have a flexible and highly iterative and productive workflow than be confronted with the friction and delays imposed by a static type system - that's especially cumbersome in text generation tasks like dynamic HTML pages. We see this as the main reason why innovative Reactive UI frameworks like React and Vue don't work well translated in C# where the friction and boilerplate imposed by conforming to static and generic typed structures inhibits the productivity and fast iteration that dynamic languages enjoy.

Unrestricted flexibility

The flexibility, extensibility, expendability of #Script ensures we can use it anywhere, e.g. the same UI logic and controls we use to render

dynamic HTML pages can also be re-used inside Services to render Emails and run in stand-alone scripts. It also becomes trivial to unit test

any partial fragments and functionality in isolation where the ScriptContext can easily be re-created and any functionality simulated.

As #Script is not shackled to external tooling or constrained by MVC Razor conventions it's unrestricted with which features and capabilities

we can add to #Script - where it's already being used to power a number of exciting scenarios.

Sharp Apps

In our last release we saw how we can use #Script to build Sharp Apps in real-time:

Sharp APIs

#Script is also the fastest way to create APIs in .NET, which can also be created in real-time without compilation where you can use page based

routing to define your API at /hello/_name/index.html (or /hello/_name.html)

that just returns a JS object literal:

{{ { result: `Hello, ${name}!` } | return }}

Which returns the same JSON wire-format as the equivalent ServiceStack Service:

[Route("/hello/{Name}")]

public class Hello : IReturn<HelloResponse>

{

public string Name { get; set; }

}

public class HelloResponse

{

public string Result { get; set; }

}

public class HelloService : Service

{

public object Any(Hello request) => new HelloResponse { Result = $"Hello, {request.Name}!" };

}

Which also supports standard HTTP Content Negotiation available in all registered Content Types:

- /hello/World?format=html

- /hello/World?format=json

- /hello/World?format=xml

- /hello/World?format=csv

- /hello/World?format=jsv

Note: as Sharp APIs are untyped they don't benefit from ServiceStack's metadata features around its Typed Services

Sharp Scripts

In addition to being a versatile utility tool belt, our web (and app) .NET Core tools also serve as a #Script runner. We've seen a

glimpse of this with _bundle.ss script above which is run with web run {script}:

$ web run wwwroot/_bundle.ss

Sharp Scripts are run in the same context and have access to the same functionality and features as a Sharp App, including extensibility via custom plugins. They can run stand-alone, independent of an app.settings config file; instead the app settings configuration can be added in its page arguments to enable or configure any features.

Let's go through a couple of different possibilities we can do with scripts:

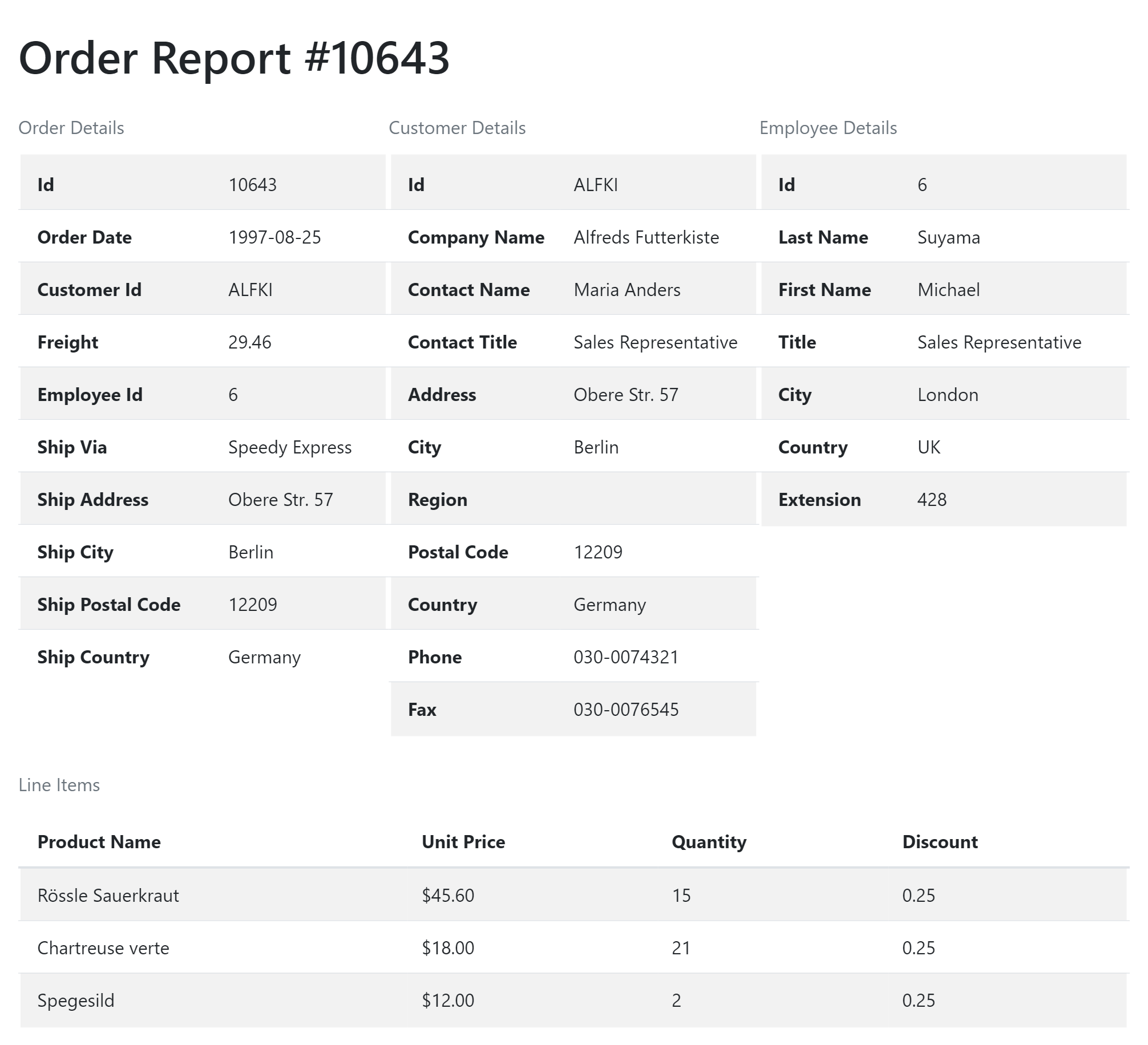

Adhoc reports

Scripts can use the built-in Database Scripts to be able to run queries against any sqlite, sqlserver, mysql and postgres database and quickly view data snapshots using the built-in

HTML Scripts, e.g:

<!--

db sqlite

db.connection ~/../apps/northwind.sqlite

-->

<link rel="stylesheet" href="https://stackpath.bootstrapcdn.com/bootstrap/4.3.1/css/bootstrap.min.css">

<style>body {padding:1em} caption{caption-side:top;}</style>

<h1 class="py-2">Order Report #{{id}}</h1>

{{ `SELECT o.Id, OrderDate, CustomerId, Freight, e.Id as EmployeeId, s.CompanyName as ShipVia,

ShipAddress, ShipCity, ShipPostalCode, ShipCountry

FROM "Order" o

INNER JOIN

Employee e ON o.EmployeeId = e.Id

INNER JOIN

Shipper s ON o.ShipVia = s.Id

WHERE o.Id = @id`

| dbSingle({ id }) | assignTo: order }}

{{#with order}}

{{ "table table-striped" | assignTo: className }}

<style>table {border: 5px solid transparent} th {white-space: nowrap}</style>

<div style="display:flex">

{{ order | htmlDump({ caption: 'Order Details', className }) }}

{{ `SELECT * FROM Customer WHERE Id = @CustomerId`

| dbSingle({ CustomerId }) | htmlDump({ caption: `Customer Details`, className }) }}

{{ `SELECT Id, LastName, FirstName, Title, City, Country, Extension FROM Employee WHERE Id=@EmployeeId`

| dbSingle({ EmployeeId }) | htmlDump({ caption: `Employee Details`, className }) }}

</div>

{{ `SELECT p.ProductName, ${sqlCurrency("od.UnitPrice")} UnitPrice, Quantity, Discount

FROM OrderDetail od

INNER JOIN

Product p ON od.ProductId = p.Id

WHERE OrderId = @id`

| dbSelect({ id })

| htmlDump({ caption: "Line Items", className }) }}

{{else}}

{{ `There is no Order with id: ${id}` }}

{{/with}}

Specifying Script Arguments

The above script generates a static HTML page and can be invoked with any number of named arguments after the script name. In this case it generates a report for Northwind Order #10643, saves it to 10643.html and opens it in the OS's default browser:

$ web run script.html -id 10643 > 10643.html && start 10643.html

Which looks like:

textDump

Generating static .html pages can quickly produce reports that look good enough to share with others, but if you just want to see snapshot info at a glance or be able to share it in text-based mediums like email or chat channels, you can replace htmlDump with textDump where it will instead output GitHub Flavored Markdown tables, e.g:

<!--

db sqlite

db.connection ~/../apps/northwind.sqlite

-->

{{ `SELECT o.Id, OrderDate, CustomerId, Freight, e.Id as EmployeeId, s.CompanyName as ShipVia,

ShipAddress, ShipCity, ShipPostalCode, ShipCountry

FROM "Order" o

INNER JOIN

Employee e ON o.EmployeeId = e.Id

INNER JOIN

Shipper s ON o.ShipVia = s.Id

WHERE o.Id = @id`

| dbSingle({ id }) | assignTo: order }}

{{#with order}}

{{ order | textDump({ caption: 'Order Details' }) }}

{{ `SELECT p.ProductName, ${sqlCurrency("od.UnitPrice")} UnitPrice, Quantity, Discount

FROM OrderDetail od

INNER JOIN

Product p ON od.ProductId = p.Id

WHERE OrderId = @id`

| dbSelect({ id })

| textDump({ caption: "Line Items" })

}}

{{ `SELECT ${sqlCurrency("(od.UnitPrice * Quantity)")} AS OrderTotals

FROM OrderDetail od

INNER JOIN

Product p ON od.ProductId = p.Id

WHERE OrderId = @id

ORDER BY 1 DESC`

| dbSelect({ id })

| textDump({ rowNumbers: false }) }}

{{else}}

{{ `There is no Order with id: ${id}` }}

{{/with}}

As the output is human-readable we can view it directly without a browser:

$ web run script.ss -id 10643

Which will output:

| Order Details ||

|------------------|----------------|

| Id | 10643 |

| Order Date | 1997-08-25 |

| Customer Id | ALFKI |

| Freight | 29.46 |

| Employee Id | 6 |

| Ship Via | Speedy Express |

| Ship Address | Obere Str. 57 |

| Ship City | Berlin |

| Ship Postal Code | 12209 |

| Ship Country | Germany |

Line Items

| # | Product Name | Unit Price | Quantity | Discount |

|---|-------------------|------------|----------|----------|

| 1 | Rössle Sauerkraut | $45.60 | 15 | 0.25 |

| 2 | Chartreuse verte | $18.00 | 21 | 0.25 |

| 3 | Spegesild | $12.00 | 2 | 0.25 |

| Order Totals |

|--------------|

| $684.00 |

| $378.00 |

| $24.00 |

And because they're GitHub Flavored Markdown Tables they can be embedded directly in Markdown docs (like this) where it renders as:
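To show what goes into producing a padded Markdown table like the ones above, here's a small sketch that renders an array of rows. toMarkdownTable is a hypothetical function written for illustration and doesn't reproduce textDump's captions, row numbers or header styles:

```typescript
type Row = Record<string, string | number>;

// Renders rows as a GitHub Flavored Markdown table, padding each column to
// the width of its longest cell so the raw text stays readable.
function toMarkdownTable(rows: Row[]): string {
    if (rows.length === 0) return '';
    const headers = Object.keys(rows[0]);
    const cells = rows.map(row => headers.map(h => String(row[h])));
    // A divider needs at least 3 dashes to be a valid table in GFM.
    const widths = headers.map((h, i) =>
        Math.max(h.length, 3, ...cells.map(r => r[i].length)));
    const pad = (text: string, width: number) => text + ' '.repeat(width - text.length);
    const line = (values: string[]) =>
        '| ' + values.map((v, i) => pad(v, widths[i])).join(' | ') + ' |';
    const divider = '|' + widths.map(w => '-'.repeat(w + 2)).join('|') + '|';
    return [line(headers), divider, ...cells.map(line)].join('\n');
}

const table = toMarkdownTable([
    { Product: 'Spegesild', Quantity: 2 },
    { Product: 'Chartreuse verte', Quantity: 21 },
]);
```

Padding is only for human readers; Markdown renderers accept the table with or without it.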

AWS Dashboards

The comprehensive built-in scripts coupled with ServiceStack's agnostic providers like the Virtual File System makes it easy to quickly query infrastructure resources like all Tables and Row counts in managed AWS RDS Instances or Search for static Asset resources in S3 Buckets.

<!--

db postgres

db.connection $AWS_RDS_POSTGRES

files s3

files.config {AccessKey:$AWS_S3_ACCESS_KEY,SecretKey:$AWS_S3_SECRET_KEY,Region:us-east-1,Bucket:rockwind}

-->

{{ dbTableNamesWithRowCounts | textDump({ caption: 'Tables' }) }}

{{ `SELECT "Id", "CustomerId", "EmployeeId", "OrderDate" from "Order" ORDER BY "Id" DESC ${sqlLimit(5)}`

| dbSelect | textDump({ caption: 'Last 5 Orders', headerStyle:'None' }) }}

{{ contentAllRootDirectories | map => `${it.Name}/`

| union(map(contentAllRootFiles, x => x.Name))

| textDump({ caption: 'Root Files and Folders' }) }}

{{ find ?? '*.html' | assignTo: find }}

{{ find | contentFilesFind | map => it.VirtualPath | take(15)

| textDump({ caption: `Files matching: ${find}` }) }}

You can use $NAME to move confidential information out of public scripts where it will be replaced with Environment

Variables. Then run the script as normal and optionally override the find pattern for files you want to search for:

$ web run script-aws.ss -find *.png

Where it displays a dashboard of activity from your AWS resources: containing all Tables with their Row Counts,

adhoc queries like your last 5 Orders, The Root files and Folders available in your S3 Bucket and any matching resources

from your find search pattern:

| Tables ||

|--------------------|------|

| Order Detail | 2155 |

| Order | 830 |

| Customer | 91 |

| Product | 77 |

| Territory | 53 |

| Region | 0 |

| Shipper | 0 |

| Supplier | 0 |

| Category | 0 |

| Employee | 0 |

| Employee Territory | 0 |

Last 5 Orders

| # | Id | CustomerId | EmployeeId | OrderDate |

|---|-------|------------|------------|------------|

| 1 | 11077 | RATTC | 1 | 1998-05-06 |

| 2 | 11076 | BONAP | 4 | 1998-05-06 |

| 3 | 11075 | RICSU | 8 | 1998-05-06 |

| 4 | 11074 | SIMOB | 7 | 1998-05-06 |

| 5 | 11073 | PERIC | 2 | 1998-05-05 |

| Root Files and Folders |

|------------------------|

| api/ |

| northwind/ |

| rockstars/ |

| index.html |

| web.aws.settings |

| web.postgres.settings |

| web.sqlite.settings |

| web.sqlserver.settings |

| Files matching: *.png |

|-----------------------------------------|

| assets/img/logo-32.png |

| rockstars/img/green_dust_scratch.png |

| rockstars/img/rip_jobs.png |

| rockstars/img/tileable_wood_texture.png |
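The $NAME placeholders in the script's configuration header amount to a text substitution step before the config is used. A minimal sketch of that idea follows; expandEnv is a hypothetical helper, the key values are made up, and leaving unknown names untouched is an assumption of this sketch rather than documented behavior:

```typescript
// Replaces $NAME tokens with values from `env`. Unknown names are left
// as-is, which is an assumption made for this sketch.
function expandEnv(text: string, env: Record<string, string | undefined>): string {
    return text.replace(/\$([A-Z_][A-Z0-9_]*)/g,
        (token, name: string) => env[name] ?? token);
}

// Made-up values standing in for real environment variables.
const fakeEnv: Record<string, string | undefined> = {
    AWS_S3_ACCESS_KEY: 'key123',
    AWS_S3_SECRET_KEY: 'secret456',
};

const config = expandEnv(
    '{AccessKey:$AWS_S3_ACCESS_KEY,SecretKey:$AWS_S3_SECRET_KEY,Region:us-east-1,Bucket:rockwind}',
    fakeEnv);
```

The environment is passed in as a parameter so the function can be tested without touching the real process environment.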

Azure Dashboards

The nice thing about #Script late-binding and cloud agnostic providers is that with just different configuration we

can use the exact same script to query an Azure managed SQL Server Database and Azure Blob File Storage:

<!--

db sqlserver

db.connection $AZURE_SQL_CONNECTION_STRING

files azure

files.config {ConnectionString:$AZURE_BLOB_CONNECTION_STRING,ContainerName:rockwind}

-->

{{ dbTableNamesWithRowCounts | textDump({ caption: 'Tables' }) }}

{{ `SELECT "Id", "CustomerId", "EmployeeId", "OrderDate" from "Order" ORDER BY "Id" DESC ${sqlLimit(5)}`

| dbSelect | textDump({ caption: 'Last 5 Orders', headerStyle:'None' }) }}

{{ contentAllRootDirectories | map => `${it.Name}/`

| union(map(contentAllRootFiles, x => x.Name))

| textDump({ caption: 'Root Files and Folders' }) }}

{{ find ?? '*.html' | assignTo: find }}

{{ find | contentFilesFind | map => it.VirtualPath | take(5)

| textDump({ caption: `Files matching: ${find}` }) }}

Live #Script with web watch

What's even nicer than the fast feedback of running adhoc scripts is the instant feedback you get from being able to "watch" the same script!

To watch a script just replace run with watch:

$ web watch script-aws.ss -find *.png

The ability to run stand-alone adhoc scripts in an extensible dynamic scripting language feels like you're using a "developer enhanced" SQL Studio, where you can combine queries from multiple data sources, manipulate them with LINQ and quickly pipe results to dump utils to combine them in the same output for instant visualization.

#Script scripts can also be easily shared, maintained in gists and run on all the Win/OSX/Linux operating systems that .NET Core runs on.

Live Transformations

Another area where "watched" scripts can shine is as a "companion scratch pad" assistant during development that you can quickly switch to and instantly test out live code fragments, calculations and transformations, e.g. it ends up being a great way to test out markdown syntax and NUglify's advanced compression using our new minifyjs and minifycss Script Blocks:

<!--

debug false

-->

Markdown:

{{#markdown}}

## Title

> quote

Paragraph with [a link](https://example.org).

{{/markdown}}

JS:

{{#minifyjs}}

function add(left, right) {

return left + right;

}

add(1, 2);

{{/minifyjs}}

CSS:

{{#minifycss}}

body {

background-color: #ffffff;

}

{{/minifycss}}

Then run with:

$ web watch livepad.ss

Which starts a live watched session that re-renders itself on save, initially with:

Markdown:

<h2 id="title">Title</h2>

<blockquote>

<p>quote</p>

</blockquote>

<p>Paragraph with <a href="https://example.org">a link</a>.</p>

JS:

function add(n,t){return n+t}add(1,2)

CSS:

body{background-color:#fff}

Live Session

Usage in .NET

To evaluate #Script in .NET you'll first create the ScriptContext containing all functionality and features your Scripts have access to:

var context = new ScriptContext {

Args = { ... }, // Global Arguments available to all Scripts, Pages, Partials, etc

Plugins = { ... }, // Encapsulated Features, e.g. Markdown, Protected or ServiceStack Features

ScriptMethods = { ... }, // Additional Methods

ScriptBlocks = { ... }, // Additional Script Blocks

FilterTransformers = { ... }, // Additional Stream Transformers

ScanTypes = { ... }, // Auto register Methods, Blocks and Code Page Types

ScanAssemblies = { ... }, // Auto register all Methods, Blocks and Code Page Types in Assembly

PageFormats = { ... }, // Additional Text Document Formats

}.Init();

Then call EvaluateScript() to evaluate the script and capture its rendered output in a string:

string output = context.EvaluateScript("The time is now: {{ now | dateFormat('HH:mm:ss') }}");

Evaluating Scripts with return values

Scripts can render text as above or they can return values using the return method, which can be accessed using Evaluate():

var result = context.Evaluate("1 + 1 = {{ 1 + 1 | return }}."); //= 2

The generic version utilizes ServiceStack's powerful built-in conversion utils to convert the return value into your preferred type, e.g:

double result = context.Evaluate<double>("1 + 1 = {{ return(1 + 1) }}."); //= 2.0

string result = context.Evaluate<string>("1 + 1 = {{ return(1 + 1) }}."); //= "2"

But can also be used for more powerful conversions like converting an Object Dictionary into your preferred POCO:

var result = context.Evaluate<Customer>("{{`select * from customer where id=@id` | dbSingle({id}) | return }}",

new ObjectDictionary {

["id"] = 1

});

Optimized for .NET

To enable JS-like dynamism when binding to .NET methods, #Script automatically converts arguments for Types that don't match.

One of the effects of this is that you can define a single method with double params:

class MyMethods : ScriptMethods

{

public double add(double a, double b) => a + b;

}

And be able to call it with any .NET numeric type, e.g:

var context = new ScriptContext { ScriptMethods = { new MyMethods() } }.Init();

context.EvaluateScript("{{ add(1,1) }}");

Where it will convert all int arguments into double before executing your method.

For improved performance the Default scripts arithmetic and Math methods avoid any numeric conversions themselves by using DynamicNumber which delegates to the optimal concrete arithmetic methods.

Auto Async I/O and Stream Transformations

#Script makes it easy to write composable, intent-based self-documenting code, e.g. it's clear that the expression below makes a database call

to fetch a URL from the qotd table, downloads the URL Contents, transforms its markdown contents and assigns the results to the quote argument:

{{ 'select url from qotd where id = @id'

| dbScalar({ id }) | urlContents | markdown | assignTo: quote }}

How it does it becomes an implementation detail, e.g. with this naive implementation below it will make a Sync DB call then

download the entire URL contents before passing it to the markdown() method to convert it to HTML:

class MyMethods : ScriptMethods

{

public string urlContents(string url) => url.GetStringFromUrl();

public string markdown(string markdown) => MarkdownConfig.Transform(markdown);

}

var context = new ScriptContext {

InsertScriptMethods = {

new MyMethods(),

new DbScripts(),

}

}.Init();

But without changing any of the script code we can use the more optimal built-in implementation:

var context = new ScriptContext {

Plugins = { new MarkdownScriptPlugin() },

ScriptMethods = { new DbScriptsAsync() },

}.Init();

Where dbScalar is now an async API that returns a Task<object> which is automatically awaited before the async urlContents method

is called which makes an Async I/O HTTP Call to asynchronously write the response to the OutputStream before it's passed to the markdown

Filter Transformer which reads markdown from an async Input Stream

and returns a Stream of HTML, the rendered text output is then captured and stored in the quote string argument.

So whilst both implementations end up with the same result, they achieve it differently where no additional boilerplate is required to enlist the more performant async streaming implementation above.
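The reason the script doesn't need to change is that the pipeline awaits whatever each step returns, whether it's a plain value or a pending result. The sketch below shows the same idea with Promises; runPipeline and the stub stages are hypothetical, make no network or database calls, and don't model #Script's stream transformers:

```typescript
type Stage = (input: unknown) => unknown | Promise<unknown>;

// Runs stages in order, awaiting each result. Awaiting a non-Promise value
// simply yields that value, so sync and async stages can be mixed freely.
async function runPipeline(seed: unknown, stages: Stage[]): Promise<unknown> {
    let value = seed;
    for (const stage of stages) {
        value = await stage(value);
    }
    return value;
}

// Stub stages: the sync and async lookups compute the same URL.
const lookupUrlSync: Stage = id => `https://example.org/quotes/${id}.md`;
const lookupUrlAsync: Stage = async id => `https://example.org/quotes/${id}.md`;
const fetchStub: Stage = async url => `# Quote from ${url}`;
const toHtmlStub: Stage = md => String(md).replace(/^# (.*)$/, '<h1>$1</h1>');

// The stage list reads the same either way; only the lookup differs.
const syncFirst = runPipeline(1, [lookupUrlSync, fetchStub, toHtmlStub]);
const asyncFirst = runPipeline(1, [lookupUrlAsync, fetchStub, toHtmlStub]);
```

Swapping lookupUrlSync for lookupUrlAsync changes how the work is done but not the calling code or the result.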

Breaking Changes

There were 2 major changes which can cause breaking changes in #Script:

ServiceStack.Script rebrand

Despite the re-branding to #Script we were able to retain most source-code compatibility where the previous "Old APIs" under ServiceStack.Templates

are now deprecated stubs

that inherit the new APIs under ServiceStack.Script. All deprecation messages contain the newer classes that you should move to.

Some classes couldn't be duplicated, like if you were using PageResult in your Services. They now require adding:

using ServiceStack.Script;

Which is effectively the only change that ServiceStack Templates needed to run on the latest version.

ServiceStack Templates still uses and documents the old ServiceStack.Templates APIs whilst sharpscript.net is the new website for #Script, which has been converted to use and document the new ServiceStack.Script APIs.

To verify minimal disruption to existing APIs, most were converted into unit tests in BAK_CompatTemplateTests.cs.

Migration to new Script APIs

Migrating to the new APIs is fairly straightforward:

- Change using ServiceStack.Templates; to using ServiceStack.Script;

- Any classes with TemplatePage* have been renamed to SharpPage*

- Any other class with a Template* prefix has been renamed to Script*

This change doesn't affect any of your existing #Script source code whose existing syntax and available filters/methods remains unchanged.

New Terminology

The primary rationale for the rebranding was so we're better able to label, describe and document all of #Script's different features more easily, so when referring to the Templates View Engine we're now calling it #Script Pages, which is a better corollary to "Razor Pages" which it provides an alternative to.

Other re-branded features:

- API Pages are now called Sharp APIs

- Web Apps are now called Sharp Apps

- Template Filters are now called Script Methods

- Template Blocks are now called Script Blocks

The collection of methods you inject in your scripts like TemplateRedisFilters and TemplateDbFilters are now referred to as "Scripts"

where they've been renamed to RedisScripts and DbScripts.

Request Params are no longer imported by default

A major change that will require changing existing scripts is that Request Parameters are no longer imported by default and will need to be explicitly accessed or imported.

Previously you could access the ?id=1 queryString param in your page with:

{{ id }}

This now needs to be explicitly accessed using the new query or shorter qs alias:

{{ qs.id }}

For HTTP Form Data Params use:

{{ form.id }}

importRequestParams

The least disruption to existing Pages would be to specify a white-list of arguments you want to import at the top of your page:

{{ 'id,name,age' | importRequestParams }}

Or if preferred, you can specify a collection of param names instead:

{{ ['id','name','age'] | importRequestParams }}
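Conceptually, a white-list import copies only the named request params into the page's arguments and ignores everything else. Here's a minimal sketch of that behavior; importParams is a hypothetical function, and having FormData take precedence over QueryString is an assumption of this sketch:

```typescript
type Params = Record<string, string>;

// Copies only white-listed request params into page arguments. Accepts
// either a comma-delimited string or an array of names. FormData taking
// precedence over QueryString is an assumption made for this sketch.
function importParams(allow: string | string[], query: Params, form: Params): Params {
    const names = typeof allow === 'string'
        ? allow.split(',').map(n => n.trim())
        : allow;
    const pageArgs: Params = {};
    for (const name of names) {
        const value = form[name] ?? query[name];
        if (value !== undefined) pageArgs[name] = value;
    }
    return pageArgs;
}

const query: Params = { id: '1', debug: 'true' };
const form: Params = { name: 'Alice' };

// `debug` is present in the request but is not imported.
const fromString = importParams('id,name,age', query, form);
const fromArray = importParams(['id', 'name', 'age'], query, form);
```

Anything not on the list, like debug here, never becomes a page argument, which is the point of making imports explicit.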

Allow all Request Params in a page

There's a local nuclear option that you can use to temporarily restore previous behavior in adhoc pages by calling importRequestParams

without any arguments:

{{ importRequestParams }}

Which you can add at the top of adhoc pages to import all QueryString and FormData params as page arguments.

Allow all Request Params Globally

There's also the unrecommended global nuclear option of reverting to the previous behavior and always importing all Request Params in all pages:

Plugins.Add(new SharpPagesFeature {

ImportRequestParams = true

});

No impact on page based routing

This doesn't impact page based routing as the path info arguments are explicitly declared in the file or directory name, e.g:

- /posts/_slug.html called from /posts/A still populates slug with A

- /posts/_slug/edit.html called from /posts/A/edit still populates slug with A
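The two examples above can be modeled as segment-by-segment matching where any page path segment starting with an underscore captures the request segment in the same position. The matchPage function below is a hypothetical sketch of that rule and ignores details like index.html pages:

```typescript
// Matches a request path against a page path. Page segments starting with
// '_' capture the corresponding request segment as a named argument; all
// other segments must match exactly.
function matchPage(pagePath: string, requestPath: string): Record<string, string> | null {
    const pageSegs = pagePath.replace(/\.html$/, '').split('/').filter(s => s.length > 0);
    const reqSegs = requestPath.split('/').filter(s => s.length > 0);
    if (pageSegs.length !== reqSegs.length) return null;

    const args: Record<string, string> = {};
    for (let i = 0; i < pageSegs.length; i++) {
        if (pageSegs[i].startsWith('_')) {
            args[pageSegs[i].substring(1)] = reqSegs[i];
        } else if (pageSegs[i] !== reqSegs[i]) {
            return null;
        }
    }
    return args;
}

const view = matchPage('/posts/_slug.html', '/posts/A');            // captures slug
const edit = matchPage('/posts/_slug/edit.html', '/posts/A/edit');  // captures slug
const miss = matchPage('/posts/_slug/edit.html', '/posts/A');       // no match
```

Because the argument name comes from the file or directory name, nothing needs to be imported from the request for it to be available.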

Request Param Methods

To make accessing the Request Params as easy as possible we've added a number of new methods to access them in a variety of different ways:

| Query Methods | Returns | Description |

|---|---|---|

| formQuery(name) | -> string | FormData[name] ?? QueryString[name] |

| formQueryValues(name) | -> string[] | FormData[name] |

| httpParam(name) | -> string | Headers[X-name] ?? QueryString[name] ?? FormData[name] ?? Item[name] |

| queryString | -> string | $"?{QueryString.ToString()}" |

| queryDictionary | -> Dictionary | QueryString.ToObjectDictionary() |

| formDictionary | -> Dictionary | FormData.ToObjectDictionary() |

| formValue(name) | -> string | if (hasError) FormData[name] ?? QueryString[name] |

| formValues(name) | -> string[] | if (hasError) FormData[name] ?? QueryString[name] |

| formCheckValue(name) | -> bool | formValue(name) in [ "true", "t", "on", "1" ] |
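To make the fallback order in the table easier to follow, here is a minimal plain C# model of three of the methods. It is a sketch under stated assumptions rather than ServiceStack's implementation: the `RequestParamSketch` class is hypothetical, ordinary dictionaries stand in for the request's Headers, QueryString, FormData and Item collections, and the exact (case-sensitive) comparison in `IsChecked` is an assumption.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical model of the lookup order listed in the table above.
// The dictionaries stand in for the request's Headers, QueryString, FormData and Item collections.
public static class RequestParamSketch
{
    // Returns the value for name, or null when the source is missing or has no such key
    static string Get(IDictionary<string, string> source, string name) =>
        source != null && source.TryGetValue(name, out var value) ? value : null;

    // formQuery(name): FormData[name] ?? QueryString[name]
    public static string FormQuery(IDictionary<string, string> form,
        IDictionary<string, string> query, string name) =>
        Get(form, name) ?? Get(query, name);

    // httpParam(name): Headers[X-name] ?? QueryString[name] ?? FormData[name] ?? Item[name]
    public static string HttpParam(IDictionary<string, string> headers,
        IDictionary<string, string> query, IDictionary<string, string> form,
        IDictionary<string, string> items, string name) =>
        Get(headers, "X-" + name) ?? Get(query, name) ?? Get(form, name) ?? Get(items, name);

    // formCheckValue(name): true when the value is one of "true", "t", "on" or "1"
    // (case-sensitive matching is an assumption of this sketch)
    public static bool IsChecked(string value) =>
        value == "true" || value == "t" || value == "on" || value == "1";
}
```

Note how `FormQuery` prefers FormData over the QueryString, whereas `HttpParam` checks an `X-` prefixed header first and then prefers the QueryString over FormData.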

Here's a flavor of how to use the different APIs above:

{{ `?${qs}` | addQueryString({ qs3:3}) }}

{{ queryString | addQueryString({ qs3:3}) }}

{{ qs | toObjectDictionary | addItem({ qs3:3 }) | toQueryString }}

{{ queryDictionary | addItem({ qs3:3 }) | toQueryString }}

{{ queryDictionary | addItem(pair('qs3',3)) | toQueryString }}

Which all return the same result:

?qs1=1&qs2=2&qs3=3

?qs1=1&qs2=2&qs3=3

?qs1=1&qs2=2&qs3=3

?qs1=1&qs2=2&qs3=3

?qs1=1&qs2=2&qs3=3

As they all return a string they can be further manipulated with the various URL handling methods:

{{ queryDictionary | addItem({ qs3:3 }) | toQueryString | addQueryString({ qs4:4 }) }}

{{ queryDictionary | addItem({ qs3:3 }) | toQueryString | setQueryString({ qs1:5 }) }}

{{ queryDictionary | addItem({ qs3:3 }) | toQueryString | addHashParams({ qs4:4 }) }}

{{ queryDictionary | toQueryString | addHashParams({qs4:4}) | setHashParams({qs4:5}) }}

Which returns:

?qs1=1&qs2=2&qs3=3&qs4=4

?qs1=5&qs2=2&qs3=3

?qs1=1&qs2=2&qs3=3#qs4=4

?qs1=1&qs2=2#qs4=5
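The difference between the add and set variants is that adding appends a new param whereas setting overwrites an existing one in place. The following plain C# sketch models that distinction for a simple query string. `QueryStringSketch` and its methods are hypothetical names for illustration, not the #Script methods themselves, and the sketch assumes values need no URL encoding and that the input has no `#` fragment.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical model of "add" vs "set" semantics on a query string such as "?qs1=1&qs2=2".
// Assumes values need no URL encoding and that the input contains no "#" fragment.
public static class QueryStringSketch
{
    // Appends key=value without checking whether the key already exists
    public static string AddQueryParam(string queryString, string key, string value) =>
        queryString + (queryString.Contains("?") ? "&" : "?") + key + "=" + value;

    // Overwrites the first existing key in place, or appends it when it's missing
    public static string SetQueryParam(string queryString, string key, string value)
    {
        var pairs = queryString.TrimStart('?')
            .Split(new[] { '&' }, StringSplitOptions.RemoveEmptyEntries)
            .Select(pair => pair.Split(new[] { '=' }, 2))
            .Select(kv => new KeyValuePair<string, string>(kv[0], kv.Length > 1 ? kv[1] : ""))
            .ToList();

        var updated = new KeyValuePair<string, string>(key, value);
        var index = pairs.FindIndex(p => p.Key == key);
        if (index >= 0)
            pairs[index] = updated; // keep the param's original position
        else
            pairs.Add(updated);

        return "?" + string.Join("&", pairs.Select(p => p.Key + "=" + p.Value));
    }
}
```

Under these assumptions, adding `qs4=4` to `?qs1=1&qs2=2&qs3=3` appends it to the end, while setting `qs1=5` on the same input replaces the existing `qs1` value, mirroring the first two results above.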

World Validation

One message we continually try to reiterate is the importance of Services (aka APIs) having a well-defined coarse-grained Services Contract which serves as the interface into your system that all external consumers bind to, making it the most important contract in your system.

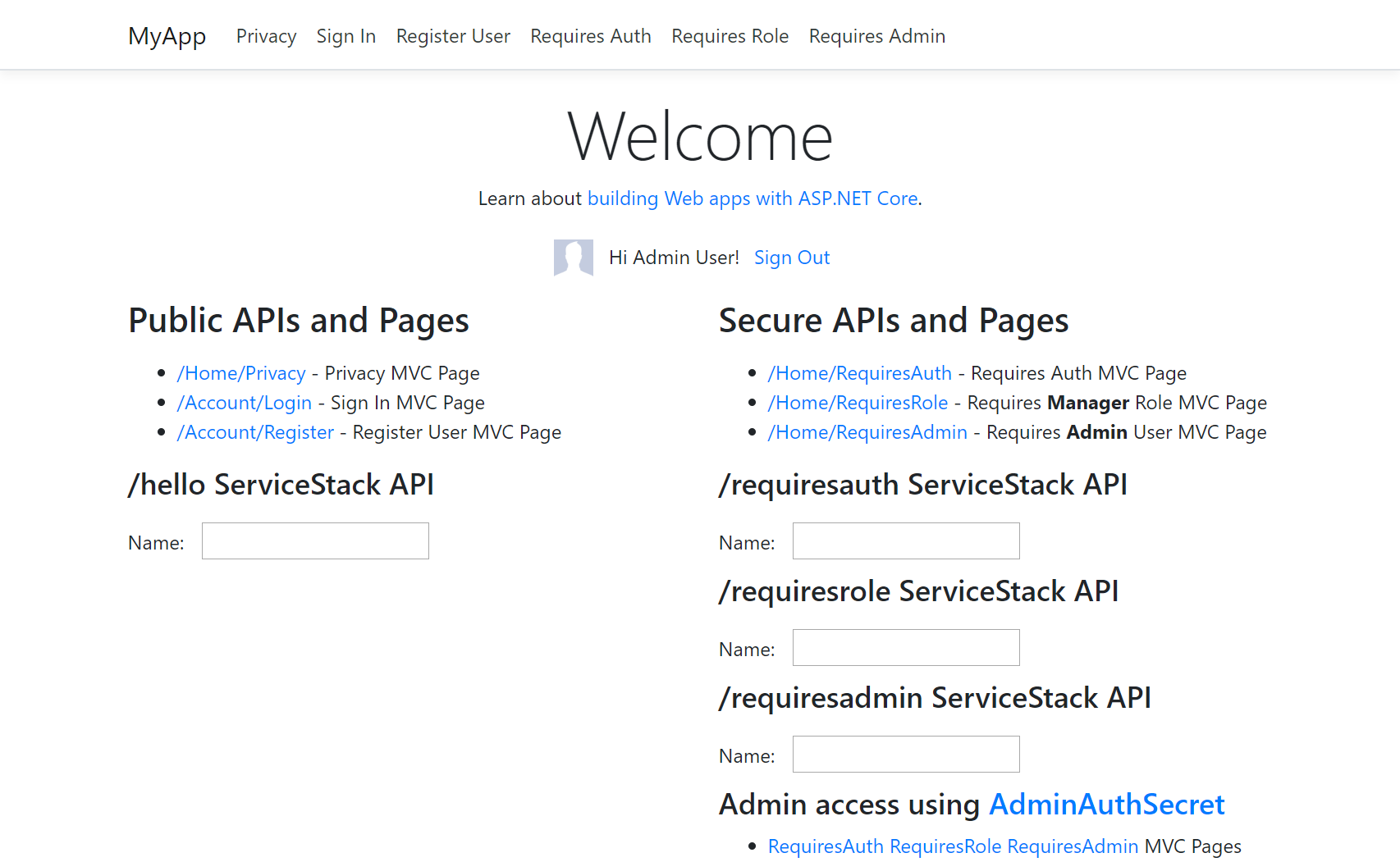

Benefits of Services

This is the development model ServiceStack has always promoted and what most of its features are centered around, where your Services Contract is defined by decoupled impl-free DTOs. If your Services retain this property then they'll be able to encapsulate any of your system's capabilities, however complex, and make them available remotely to all consumers with never any more complexity than the cost of a Service call.

This is where the true value of Services is derived: they're the ultimate form of encapsulating complexity and offer the highest level of software reuse. ServiceStack amplifies your Services' capabilities by making them available in multiple Hosting Options, serialization formats, MQ and SOAP endpoints to enable more seamless integrations in a variety of different scenarios, including native end-to-end Typed APIs for the most popular Web, Mobile and Desktop Apps that reduce the effort and complexity required to call your Services in all consumers, multiplicatively increasing the value provided.

API First Development Model

The practice .NET has always dictated is that you need to maintain separate controllers and logic for your HTML UIs and a different controller for your HTTP APIs. Apart from forcing code duplication, doing this breaks your system's well-defined Service Contracts: any custom logic in your MVC Controllers and Razor pages becomes another entry point into your system, so your system capabilities are no longer all available to all clients and some are only available when using a browser to navigate MVC pages.

Whereas in ServiceStack there are only Services, which are written with pure logic that's unopinionated as to what clients are calling it, with clean Request DTOs received as Inputs that typically return clean Response DTOs as outputs. HTML is then just another serialization format, providing a View of your Services or serving as a bundled UI that works on top of your existing Services, in all cases calling the same well tested and defined Services that all other clients use.

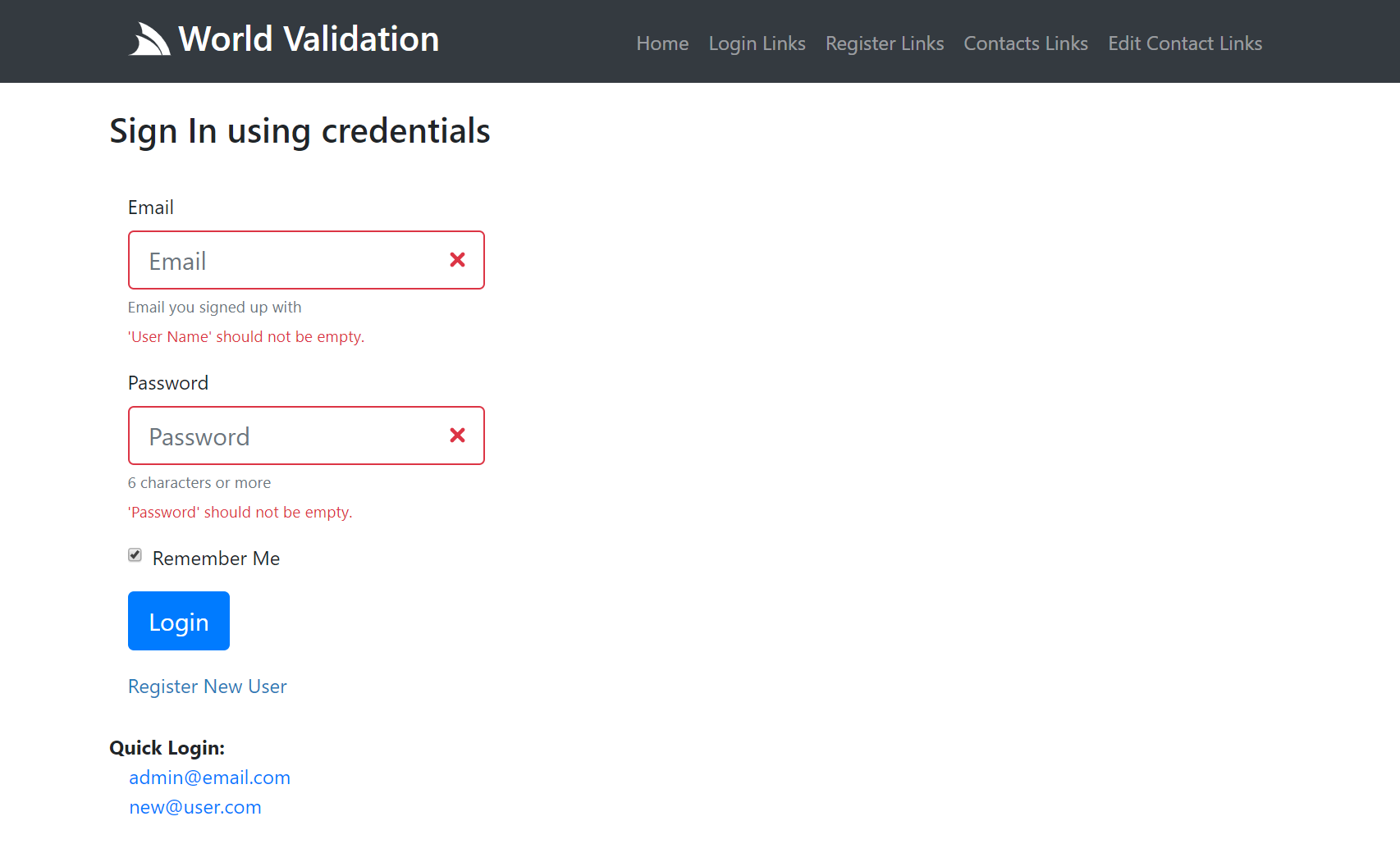

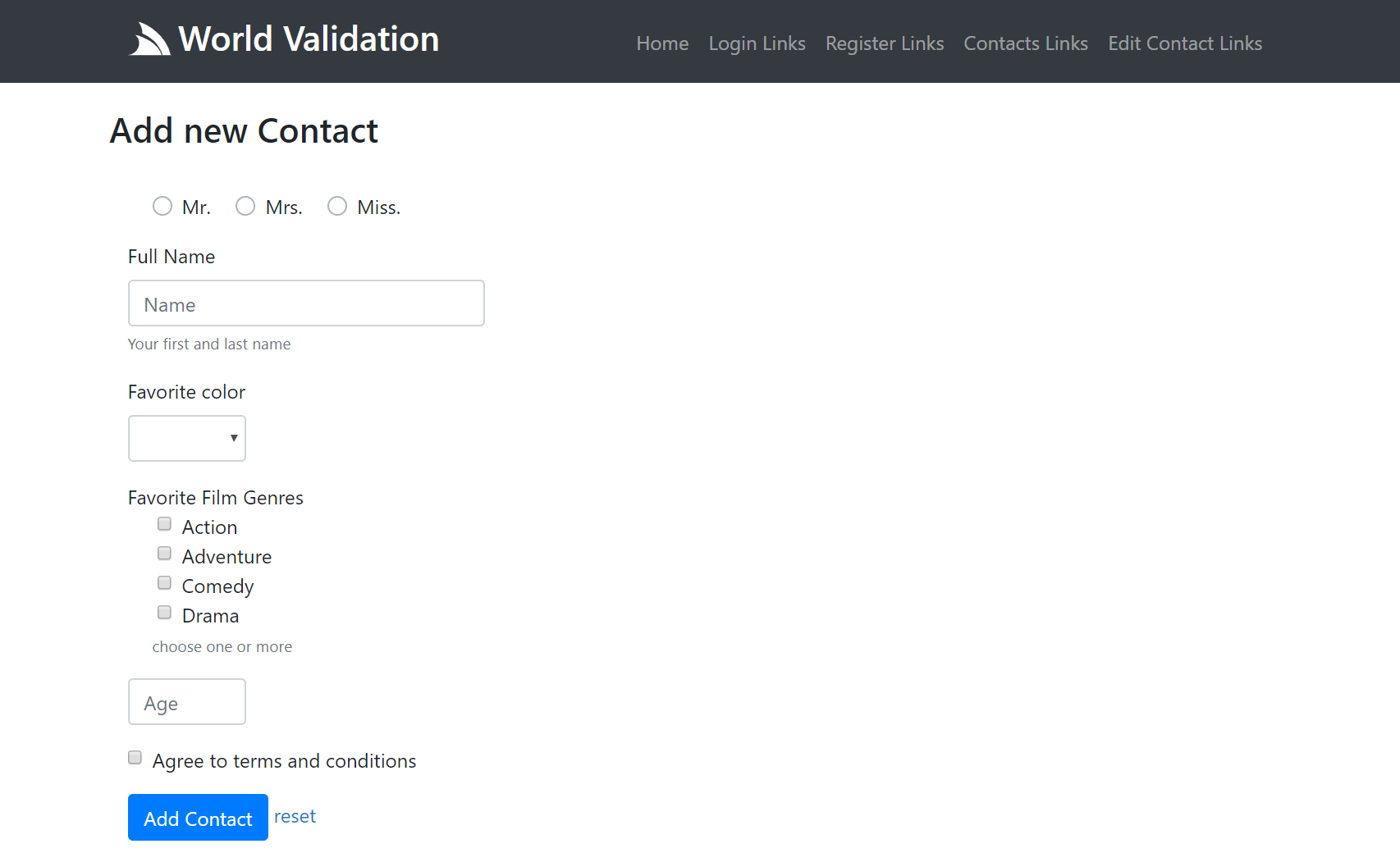

Validation from all the things

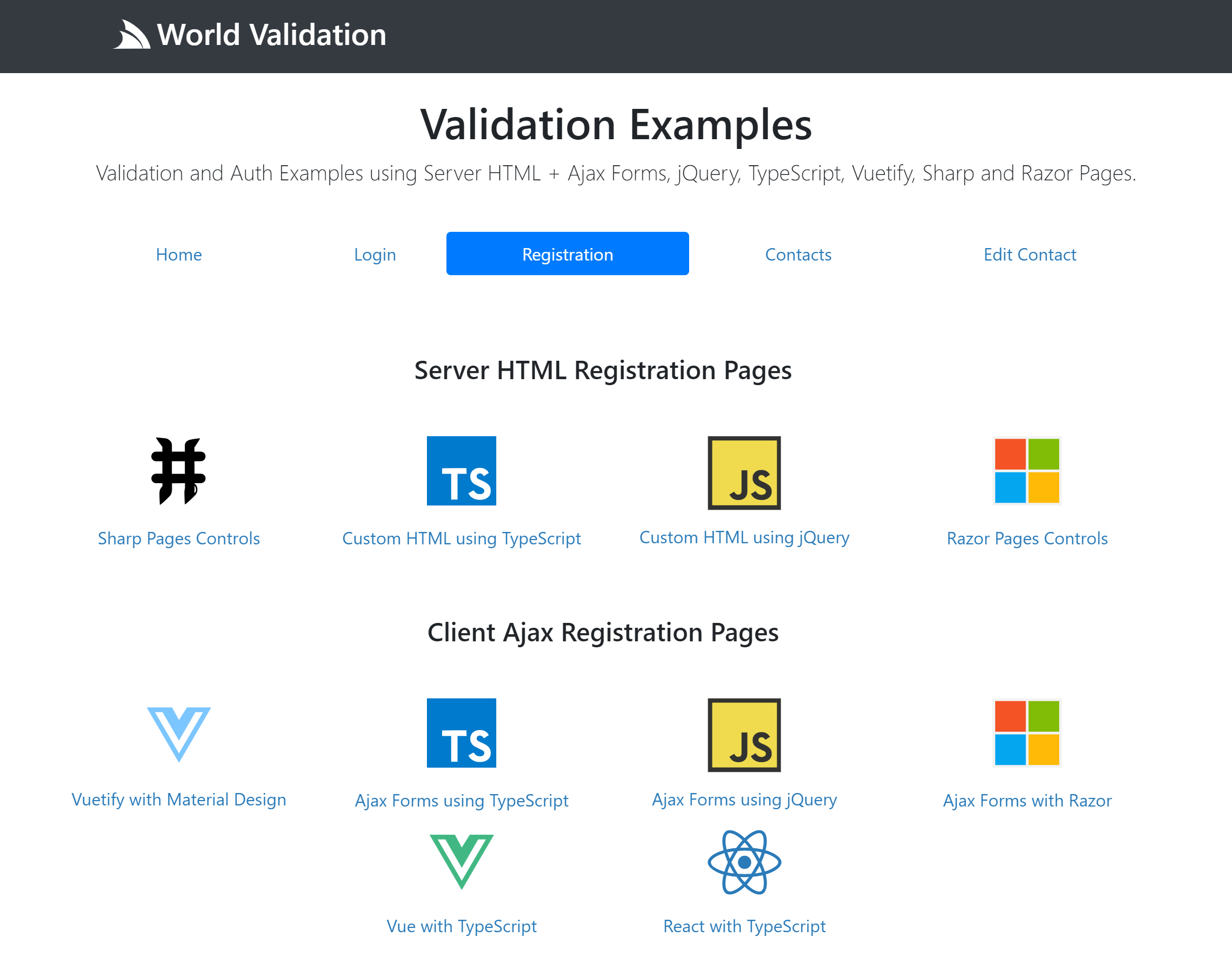

To better demonstrate the benefits of this approach and to show there's no loss of flexibility, we've created the new Validation .NET Core App which uses the same pure unopinionated ServiceStack Services to support 8 different HTML UI strategies including server HTML Rendered and Ajax Client forms, multiple View Engines, multiple layouts - all utilizing the same Services and declarative Fluent Validation.

View Source on GitHub NetCoreApps/Validation

It should be noted that these are just examples of different HTML UIs. With no additional effort, all ServiceStack Services automatically provide native integrations into all popular Mobile and Desktop Apps with Add ServiceStack Reference.

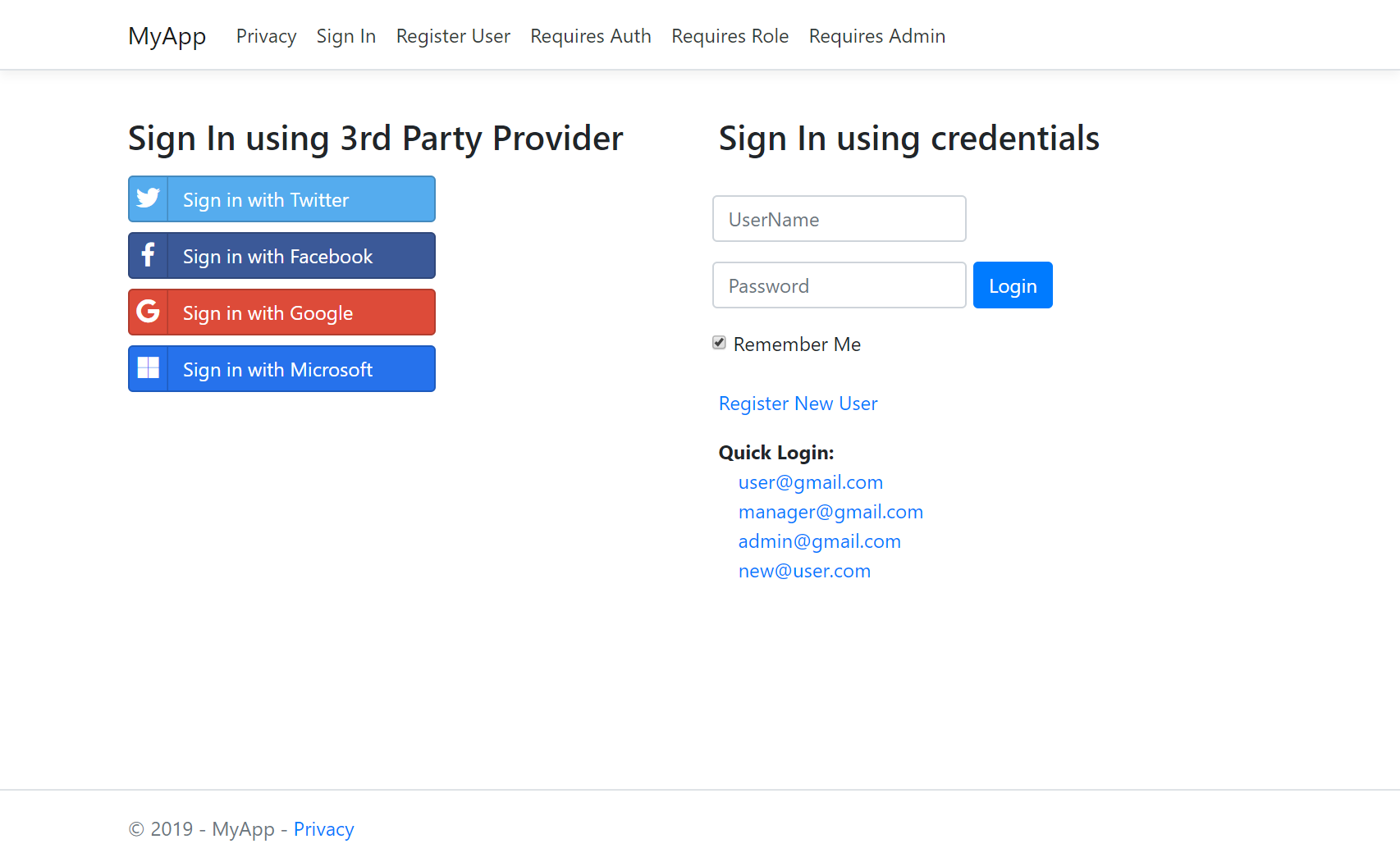

About

The Validation App covers a typical example you'd find in most Apps, including Login and Registration Forms to Sign In and Register new Users who are then able to access the same protected Services to maintain their own private contact lists. It's a compact example that tries to cover a lot of use-cases typical in a real-world App, including maintaining a separate Data and DTO Model and using C# idioms like Enums for defining a finite list of options which are re-used to populate its HTML UI.

The UI for the same App is re-implemented in 8 popular Web Development approaches, each integrated with ServiceStack's validation.

As of this writing there are 4 different server HTML generated strategies that use HTML Form Posts to call back-end Services:

Server Rendered HTML UIs

- /server - #Script Pages using Server Controls

- /server-ts - Server HTML enhanced with TypeScript

- /server-jquery - Server HTML enhanced with jQuery

- /server-razor - ServiceStack.Razor using Razor Helpers

Client HTML UIs

The Client Examples use Ajax Forms and the TypeScript JsonServiceClient to send TypeScript dtos.ts generated with TypeScript Add ServiceStack Reference:

- /vuetify - Vue App using Vuetify's Material Design Controls using ServiceClient Requests

- /client-ts - TypeScript UI using Ajax Forms and ServiceClient Requests

- /client-jquery - JavaScript UI using jQuery Ajax Requests

- /client-razor - Client jQuery Ajax Requests rendered by Razor pages

The source code for each of the different strategies is encapsulated within its folder above, except for the Razor examples which need to maintain their shared resources in the /Views folder (representative of the friction and restrictions when working with Razor).

Server Implementation

This is the shared backend Server implementation that all UIs are using:

All Auth Configuration is encapsulated within a "no-touch" IConfigureAppHost plugin that's run once on Startup:

// Run before AppHost.Configure()

public class ConfigureAuth : IConfigureAppHost

{

public void Configure(IAppHost appHost)

{

var AppSettings = appHost.AppSettings;

appHost.Plugins.Add(new AuthFeature(() => new CustomUserSession(),

new IAuthProvider[] {

new CredentialsAuthProvider(), //Enable UserName/Password Credentials Auth

}));

appHost.Plugins.Add(new RegistrationFeature()); //Enable /register Service

//override the default registration validation with your own custom implementation

appHost.RegisterAs<CustomRegistrationValidator, IValidator<Register>>();

appHost.Register<ICacheClient>(new MemoryCacheClient()); //Store User Sessions in Memory

appHost.Register<IAuthRepository>(new InMemoryAuthRepository()); //Store Authenticated Users in Memory

CreateUser(appHost, "admin@email.com", "Admin User", "p@55wOrd", roles:new[]{ RoleNames.Admin });

}

// Add initial Users to the configured Auth Repository

public void CreateUser(IAppHost appHost, string email, string name, string password, string[] roles)

{

var authRepo = appHost.TryResolve<IAuthRepository>();

var newAdmin = new UserAuth { Email = email, DisplayName = name };

var user = authRepo.CreateUserAuth(newAdmin, password);

authRepo.AssignRoles(user, roles);

}

}

// Typed class to store additional metadata in the User's Session

public class CustomUserSession : AuthUserSession {}

// Custom Validator to add custom validators to built-in /register Service requiring DisplayName and ConfirmPassword

public class CustomRegistrationValidator : RegistrationValidator

{

public CustomRegistrationValidator()

{

RuleSet(ApplyTo.Post, () =>

{

RuleFor(x => x.DisplayName).NotEmpty();

RuleFor(x => x.ConfirmPassword).NotEmpty();

});

}

}

These are all the Services and Validators used in this App. Extension methods are used to DRY reusable code and a Custom Auto Mapping handles conversion between the Contact Data Model and Contact DTO:

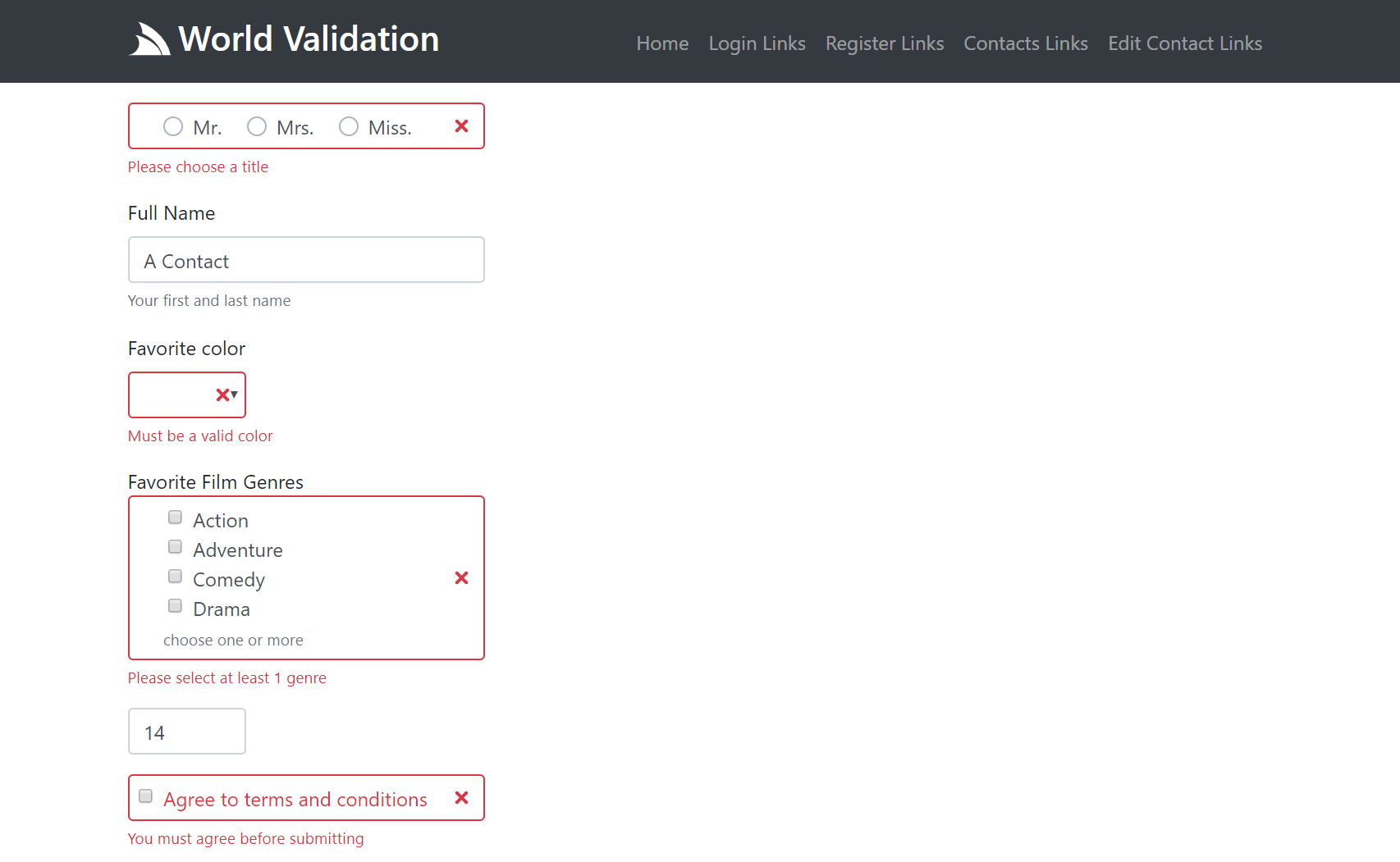

public class CreateContactValidator : AbstractValidator<CreateContact>

{

public CreateContactValidator()

{

RuleFor(r => r.Title).NotEqual(Title.Unspecified).WithMessage("Please choose a title");

RuleFor(r => r.Name).NotEmpty();

RuleFor(r => r.Color).Must(x => x.IsValidColor()).WithMessage("Must be a valid color");

RuleFor(r => r.FilmGenres).NotEmpty().WithMessage("Please select at least 1 genre");

RuleFor(r => r.Age).GreaterThan(13).WithMessage("Contacts must be older than 13");

RuleFor(x => x.Agree).Equal(true).WithMessage("You must agree before submitting");

}

}

[Authenticate] // Limit to Authenticated Users

[ErrorView(nameof(CreateContact.ErrorView))] // Display ErrorView for HTML requests resulting in an Exception

[DefaultView("/server/contacts")] // Render custom HTML View for HTML Requests

public class ContactServices : Service

{

private static int Counter = 0;

internal static readonly ConcurrentDictionary<int, Data.Contact> Contacts = new ConcurrentDictionary<int, Data.Contact>();

public object Any(GetContacts request)

{

var userId = this.GetUserId();

return new GetContactsResponse

{

Results = Contacts.Values

.Where(x => x.UserAuthId == userId)

.OrderByDescending(x => x.Id)

.Map(x => x.ConvertTo<Contact>())

};

}

public object Any(GetContact request) =>

Contacts.TryGetValue(request.Id, out var contact) && contact.UserAuthId == this.GetUserId()

? (object)new GetContactResponse { Result = contact.ConvertTo<Contact>() }

: HttpError.NotFound($"Contact was not found");

public object Any(CreateContact request)

{

var newContact = request.ConvertTo<Data.Contact>();

newContact.Id = Interlocked.Increment(ref Counter);

newContact.UserAuthId = this.GetUserId();

newContact.CreatedDate = newContact.ModifiedDate = DateTime.UtcNow;

var contacts = Contacts.Values.ToList();

var alreadyExists = contacts.Any(x => x.UserAuthId == newContact.UserAuthId && x.Name == request.Name);

if (alreadyExists)

throw new ArgumentException($"You already have a contact named '{request.Name}'", nameof(request.Name));

Contacts[newContact.Id] = newContact;