Highly Available Redis

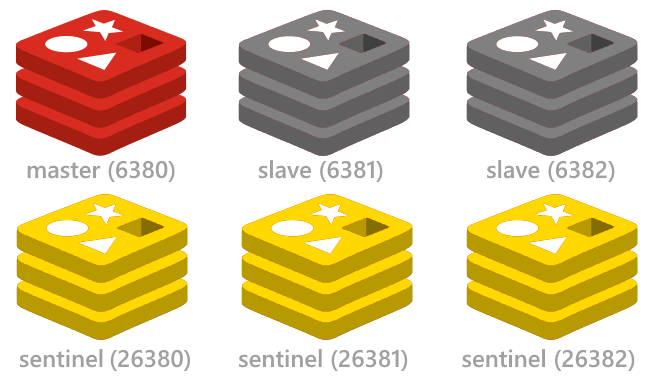

Redis Sentinel is the official recommendation for running a highly available Redis configuration. It runs a number of additional Redis Sentinel processes that actively monitor the existing Redis master and slave instances to ensure each one is working as expected. If the sentinels determine by consensus that the master is no longer available, they automatically fail over and promote one of the replicated slaves as the new master. The sentinels also maintain an authoritative list of available Redis instances, which gives clients a central repository for discovering the instances they can connect to.
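The sketch below makes the consensus step concrete. It illustrates Redis Sentinel's documented sdown/odown rules and is not Redis or ServiceStack source code. The `SentinelQuorum` class and its methods are hypothetical. A master counts as objectively down once at least `quorum` sentinels report it down, and a failover also needs a majority of the known sentinels to authorize the sentinel that performs it:

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical illustration of Sentinel's consensus rules, not a real API
public static class SentinelQuorum
{
    // Each entry is one sentinel's subjective view: true = "master is down" (sdown).
    // The master is objectively down (odown) once at least `quorum` sentinels agree.
    public static bool IsObjectivelyDown(IEnumerable<bool> sentinelReportsMasterDown, int quorum)
    {
        return sentinelReportsMasterDown.Count(isDown => isDown) >= quorum;
    }

    // Starting the failover also needs votes from a majority of all known sentinels
    public static bool HasMajority(int votes, int totalSentinels)
    {
        return votes > totalSentinels / 2;
    }
}
```

For example, with 3 sentinels and a quorum of 2, a single sentinel that loses sight of the master can't trigger a failover on its own.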

Support for Redis Sentinel has been added with the RedisSentinel class. It listens to the available Sentinels to source its list of available master, slave and other sentinel Redis instances. It then uses this list to configure and maintain the Redis Client Managers and initiates any failovers as they're reported.

RedisSentinel Usage

To use the new Sentinel support, you don't populate the Redis Client Managers with the connection strings of the master and slave instances. Instead, you create a single RedisSentinel instance configured with the connection strings of the running Redis Sentinels:

var sentinelHosts = new[]{ "sentinel1", "sentinel2:6390", "sentinel3" };

var sentinel = new RedisSentinel(sentinelHosts, masterName: "mymaster");

This is a typical example of configuring a RedisSentinel that references 3 sentinel hosts, i.e. the minimum number for a highly available setup that can survive any single node failing.

It's also configured to look at the mymaster configuration set (the default master group).

Redis Sentinels can monitor more than 1 master / slave group, each with a different master group name.

The default port for sentinels is 26379 (when unspecified) and as RedisSentinel can auto-discover other sentinels, the minimum configuration required is just:

var sentinel = new RedisSentinel("sentinel1");
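The default-port rule can be shown with a small hypothetical helper. It isn't part of the RedisSentinel API and doesn't handle IPv6 literals. A sentinel host that has no port falls back to 26379:

```csharp
using System;

// Hypothetical helper, not part of the RedisSentinel API
public static class SentinelHostParser
{
    // Port Redis Sentinel listens on when none is specified
    public const int DefaultSentinelPort = 26379;

    // Splits "host" or "host:port"; IPv6 literals aren't handled in this sketch
    public static (string Host, int Port) Parse(string hostString)
    {
        var parts = hostString.Split(':');
        var port = parts.Length > 1 ? int.Parse(parts[1]) : DefaultSentinelPort;
        return (parts[0], port);
    }
}
```

For example, `Parse("sentinel2:6390")` returns `("sentinel2", 6390)` and `Parse("sentinel1")` returns `("sentinel1", 26379)`.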

Scanning for and auto-discovering other Sentinels can be disabled with:

sentinel.ScanForOtherSentinels = false;

Start monitoring Sentinels

Once configured, you can start monitoring the Redis Sentinel servers and access the pre-configured client manager with:

IRedisClientsManager redisManager = sentinel.Start();

As before, this can be registered in your preferred IoC as a singleton instance:

container.Register<IRedisClientsManager>(c => sentinel.Start());

Advanced Sentinel Configuration

By default, RedisSentinel manages a configured PooledRedisClientManager instance. It resolves master Redis clients for the read/write GetClient() API and slaves for the read-only GetReadOnlyClient() API.

This can be changed to use the newer RedisManagerPool with:

sentinel.RedisManagerFactory = (master,slaves) => new RedisManagerPool(master);

Custom Redis Connection String

The host the RedisSentinel is configured with only applies to that Sentinel Host. You can still use the flexibility of Redis Connection Strings to configure the individual Redis Clients by specifying a custom HostFilter:

sentinel.HostFilter = host => "{0}?db=1&RetryTimeout=5000".Fmt(host);

This returns clients configured to use Database 1 and a Retry Timeout of 5 seconds (used by the new Auto Retry feature).

Other RedisSentinel Configuration

The above covers the Sentinel configuration that would typically be used. Nearly every other aspect of RedisSentinel behavior can be customized with the configuration below:

| Property | Description |
|---|---|
| OnSentinelMessageReceived | Fired when the Sentinel worker receives a message from the Sentinel Subscription |
| OnFailover | Fired when Sentinel fails over the Redis Client Manager to a new master |
| OnWorkerError | Fired when the Redis Sentinel Worker connection fails |
| IpAddressMap | Map internal redis host IP's returned by Sentinels to its external IP |
| ScanForOtherSentinels | Whether to routinely scan for other sentinel hosts (default true) |
| RefreshSentinelHostsAfter | What interval to scan for other sentinel hosts (default 10 mins) |
| WaitBetweenFailedHosts | How long to wait after failing before connecting to next redis instance (default 250ms) |
| MaxWaitBetweenFailedHosts | How long to retry connecting to hosts before throwing (default 60s) |
| WaitBeforeForcingMasterFailover | How long after consecutive failed attempts to force failover (default 60s) |
| ResetWhenSubjectivelyDown | Reset clients when Sentinel reports redis is subjectively down (default true) |
| ResetWhenObjectivelyDown | Reset clients when Sentinel reports redis is objectively down (default true) |
| SentinelWorkerConnectTimeoutMs | The Max Connection time for Sentinel Worker (default 100ms) |
| SentinelWorkerSendTimeoutMs | Max TCP Socket Send time for Sentinel Worker (default 100ms) |
| SentinelWorkerReceiveTimeoutMs | Max TCP Socket Receive time for Sentinel Worker (default 100ms) |

Configure Redis Sentinel Servers

We've also created the redis-config project to simplify setting up and running a highly available multi-node Redis Sentinel configuration. It includes start/stop scripts that instantly set up the minimal highly available Redis Sentinel configuration on a single (or multiple) Windows, OSX or Linux servers. This single-server/multi-process configuration is ideal for getting a working sentinel configuration on a single dev workstation or remote server.

The redis-config repository also includes the MS OpenTech Windows redis binaries and doesn't require any software installation.

Windows Usage

To run the included Sentinel configuration, clone the redis-config repo on the server you want to run it on:

git clone https://github.com/ServiceStack/redis-config.git

Then start 1x Master, 2x Slaves and 3x Sentinel redis-servers with:

cd redis-config\sentinel3\windows

start-all.cmd

Shut down the started instances with:

stop-all.cmd

If you're running the redis processes locally on your dev workstation the minimal configuration to connect to the running instances is just:

var sentinel = new RedisSentinel("127.0.0.1:26380");

container.Register(c => sentinel.Start());

Localhost vs Network IP's

The sentinel configuration assumes all redis instances are running locally on 127.0.0.1.

You may instead want to run it on a remote server that all developers on your network can access. In that case you have two options. You can change the IP Address in the *.conf files to use the server's Network IP. Alternatively, you can leave the defaults and use the RedisSentinel IP Address Map feature to transparently map localhost IP's to the Network IP that each PC on your network can connect to.

E.g. if this is running on a remote server with a 10.0.0.9 Network IP, it can be configured with:

var sentinel = new RedisSentinel("10.0.0.9:26380") {

IpAddressMap = {

{"127.0.0.1", "10.0.0.9"},

}

};

container.Register(c => sentinel.Start());
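The IP Address Map rewrites the IP portion of each `host:port` that the Sentinels report before a client connects. The minimal sketch below shows that lookup. It uses a hypothetical `IpAddressMapper.MapHost` helper, not RedisSentinel's actual implementation:

```csharp
using System.Collections.Generic;

// Hypothetical helper illustrating IpAddressMap, not RedisSentinel's implementation
public static class IpAddressMapper
{
    // Rewrites the IP in "ip:port" (or a bare "ip") using the map;
    // hosts that aren't in the map are returned unchanged
    public static string MapHost(string hostAndPort, IDictionary<string, string> ipAddressMap)
    {
        var colonIndex = hostAndPort.LastIndexOf(':');
        var ip = colonIndex >= 0 ? hostAndPort.Substring(0, colonIndex) : hostAndPort;
        var portSuffix = colonIndex >= 0 ? hostAndPort.Substring(colonIndex) : "";

        return ipAddressMap.TryGetValue(ip, out var mappedIp)
            ? mappedIp + portSuffix
            : hostAndPort;
    }
}
```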

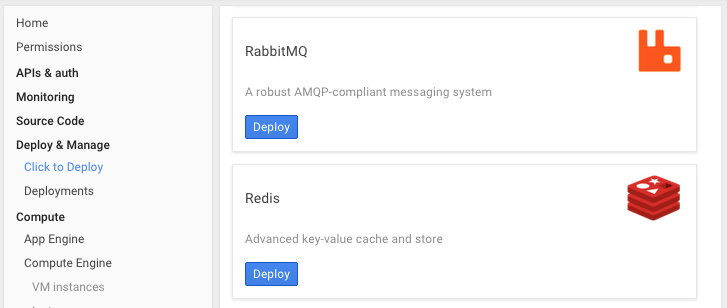

Google Cloud - Click to Deploy Redis

The easiest Cloud Service we've found for instantly setting up a multi-node Redis Sentinel Configuration is Google Cloud's Click to Deploy Redis feature, available from the Google Cloud Console under Deploy & Manage:

Clicking the Deploy button lets you configure the type, size and location where you want to deploy the Redis VM's. See the full Click to Deploy Redis guide for a walk-through on setting up and inspecting a highly available Redis configuration on Google Cloud.

Automatic Retries

Another feature we've added to improve reliability is Auto Retry. The RedisClient transparently retries Redis operations that failed due to Socket and I/O Exceptions, using an exponential backoff that starts at 10ms and continues up to the RetryTimeout of 3000ms. These defaults can be tweaked with:

RedisConfig.DefaultRetryTimeout = 3000;

RedisConfig.BackOffMultiplier = 10;

The RetryTimeout can also be configured on the connection string with ?RetryTimeout=3000.
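The exact retry schedule is internal to RedisClient. The hypothetical helper below computes the delays the defaults would produce under one assumption, which is not a documented formula: each delay starts at BackOffMultiplier milliseconds and doubles on every attempt until the accumulated wait would exceed RetryTimeout.

```csharp
using System.Collections.Generic;

// Hypothetical sketch of an exponential backoff schedule (assumed formula)
public static class RetryBackOff
{
    // Assumes delay(n) = backOffMultiplier * 2^n ms, stopping once the
    // total time spent waiting would exceed retryTimeoutMs
    public static List<int> DelaysMs(int retryTimeoutMs = 3000, int backOffMultiplier = 10)
    {
        var delays = new List<int>();
        var totalMs = 0;
        for (var attempt = 0; ; attempt++)
        {
            var delay = backOffMultiplier * (1 << attempt);
            if (totalMs + delay > retryTimeoutMs)
                break;
            delays.Add(delay);
            totalMs += delay;
        }
        return delays;
    }
}
```

Under these assumptions the defaults produce 8 delays (10ms, 20ms, 40ms ... 1280ms) that total 2550ms before the operation gives up.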

RedisConfig

The RedisConfig static class has been expanded to offer an alternative to Redis Connection Strings for configuring the default RedisClient settings. Each config option is documented on the RedisConfig class. The defaults are shown below:

class RedisConfig

{

DefaultConnectTimeout = -1

DefaultSendTimeout = -1

DefaultReceiveTimeout = -1

DefaultRetryTimeout = 3 * 1000

DefaultIdleTimeOutSecs = 240

BackOffMultiplier = 10

BufferLength = 1450

BufferPoolMaxSize = 500000

VerifyMasterConnections = true

HostLookupTimeoutMs = 200

AssumeServerVersion = null

DeactivatedClientsExpiry = TimeSpan.FromMinutes(1)

DisableVerboseLogging = false

}

One option you may want to set is AssumeServerVersion, which takes the version of the Redis Server you're running, e.g:

RedisConfig.AssumeServerVersion = 2812; //2.8.12

RedisConfig.AssumeServerVersion = 3030; //3.0.3

This is used to change the behavior of a few API's to use the most optimal Redis Operation for their server version. Setting this will save an additional INFO lookup each time a new RedisClient Connection is opened.

RedisStats

The new RedisStats class provides better visibility and introspection into your running instances:

| Stat | Description |
|---|---|
| TotalCommandsSent | Total number of commands sent |
| TotalFailovers | Number of times the Redis Client Managers have called FailoverTo(), either by Sentinel or manually |
| TotalDeactivatedClients | Number of times a Client was deactivated from the pool, either by FailoverTo() or exceptions on the client |
| TotalFailedSentinelWorkers | Number of times connecting to a Sentinel has failed |
| TotalForcedMasterFailovers | Number of times we've forced Sentinel to fail over to another master due to consecutive errors |
| TotalInvalidMasters | Number of times a reported Master that was connected to wasn't actually a Master |
| TotalNoMastersFound | Number of times no Masters could be found in any of the configured hosts |
| TotalClientsCreated | Number of Redis Client instances created with RedisConfig.ClientFactory |
| TotalClientsCreatedOutsidePool | Number of times a Redis Client was created outside of the pool, either due to overflow or because a reserved slot was overridden |
| TotalSubjectiveServersDown | Number of times Redis Sentinel reported a Subjective Down (sdown) |
| TotalObjectiveServersDown | Number of times Redis Sentinel reported an Objective Down (odown) |
| TotalRetryCount | Number of times a Redis Request was retried due to a Socket or Retryable exception |
| TotalRetrySuccess | Number of times a Request succeeded after it was retried |
| TotalRetryTimedout | Number of times a Retry Request failed after exceeding RetryTimeout |
| TotalPendingDeactivatedClients | Total number of deactivated clients that are pending being disposed |

You can get and print a dump of all the stats at any time with:

RedisStats.ToDictionary().PrintDump();

You can reset all stats back to 0 with RedisStats.Reset().

Injectable Resolver Strategy

To support the different host resolution behavior required for Redis Sentinel, we've decoupled the Redis Host Resolution behavior into an injectable strategy which can be overridden by implementing IRedisResolver and injected into any of the Redis Client Managers with:

redisManager.RedisResolver = new CustomHostResolver();

This is an advanced customization option that isn't expected to be commonly used, but it does let you use a custom strategy to change which Redis hosts to connect to. See the RedisResolverTests for more info.

New APIs

- PopItemsFromSet()

- DebugSleep()

- GetServerRole()

The RedisPubSubServer is now able to listen to a pattern of multiple channels with:

var redisPubSub = new RedisPubSubServer(redisManager) {

ChannelsMatching = new[] { "events.in.*", "events.out.*" }

};

redisPubSub.Start();

Deprecated APIs

- The SetEntry* API's have been deprecated in favor of the more appropriately named SetValue* API's

OrmLite Converters!

OrmLite has become a lot more customizable and extensible thanks to an internal redesign. All custom logic for handling different Field Types has been decoupled into individual Type Converters.

This redesign makes it possible to enhance or entirely replace how .NET Types are handled. OrmLite can now be extended to support new Types it has no knowledge about. The new support for SQL Server's SqlGeography, SqlGeometry and SqlHierarchyId Types takes advantage of this feature.

Despite the scope of this internal refactor, OrmLite's existing test suite (and a number of new tests) still pass for each supported RDBMS. The Firebird and VistaDB providers have also been greatly improved and now pass the existing test suite. RowVersion is the only feature not implemented in VistaDB, due to its lack of triggers.

Improved encapsulation, reuse, customization and debugging

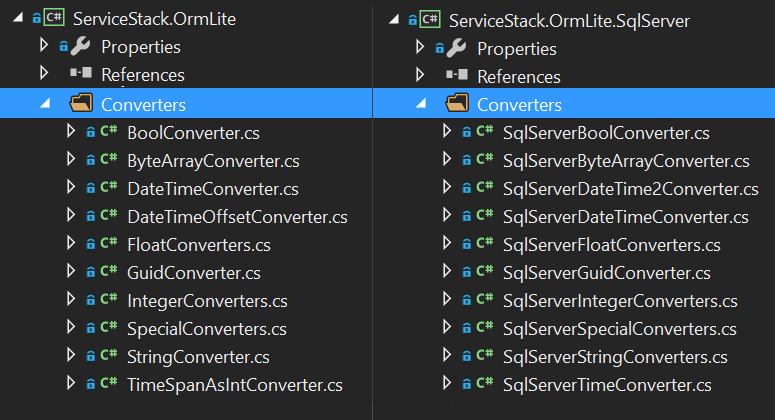

Converters allow for greater re-use. The common functionality for each type is maintained in ServiceStack.OrmLite/Converters, and any RDBMS-specific functionality can inherit the common converters and add whatever specialization that type requires. E.g. SQL Server-specific converters are maintained in ServiceStack.OrmLite.SqlServer/Converters. Each converter inherits shared functionality and only adds the custom logic required to support that Type in SQL Server.

Creating Converters

They also provide better encapsulation, since everything related to handling the field type is contained within a single class definition. A Converter is any class implementing IOrmLiteConverter. However, it's recommended to inherit from the OrmLiteConverter abstract class instead, which only requires overriding the minimum API's. These are the ColumnDefinition used when creating the Table definition and the ADO.NET DbType it should use in parameterized queries.

GuidConverter is an example of this:

public class GuidConverter : OrmLiteConverter

{

public override string ColumnDefinition

{

get { return "GUID"; }

}

public override DbType DbType

{

get { return DbType.Guid; }

}

}

For this to work in SQL Server, however, the ColumnDefinition should be UniqueIdentifier. Guids also need to be cast to UniqueIdentifier to be queried in an SQL Statement.

Therefore SQL Server requires a custom SqlServerGuidConverter to support Guids:

public class SqlServerGuidConverter : GuidConverter

{

public override string ColumnDefinition

{

get { return "UniqueIdentifier"; }

}

public override string ToQuotedString(Type fieldType, object value)

{

var guidValue = (Guid)value;

return string.Format("CAST('{0}' AS UNIQUEIDENTIFIER)", guidValue);

}

}

Registering Converters

To get OrmLite to use this new Custom Converter for SQL Server, the SqlServerOrmLiteDialectProvider just registers it in its constructor:

base.RegisterConverter<Guid>(new SqlServerGuidConverter());

This overrides the pre-registered GuidConverter to enable its extended functionality in SQL Server.

You also use the RegisterConverter<T>() API to register your own Custom GuidConverter on the RDBMS provider you want it to apply to, e.g. for SQL Server:

SqlServerDialect.Provider.RegisterConverter<Guid>(new MyCustomGuidConverter());

Resolving Converters

If needed, it can be later retrieved with:

IOrmLiteConverter converter = SqlServerDialect.Provider.GetConverter<Guid>();

var myGuidConverter = (MyCustomGuidConverter)converter;
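Without the ADO.NET plumbing, this register/resolve pattern is a per-dialect map from .NET Type to converter in which the last registration wins. The sketch below models the pattern with hypothetical `Sketch*` types so that it runs on its own. They are not OrmLite's classes:

```csharp
using System;
using System.Collections.Generic;
using System.Data;

// Hypothetical, stripped-down model of the converter pattern (not OrmLite's types)
public abstract class SketchConverter
{
    public abstract string ColumnDefinition { get; }
    public abstract DbType DbType { get; }
}

public class SketchGuidConverter : SketchConverter
{
    public override string ColumnDefinition => "GUID";
    public override DbType DbType => DbType.Guid;
}

// The RDBMS-specific converter only overrides what differs
public class SketchSqlServerGuidConverter : SketchGuidConverter
{
    public override string ColumnDefinition => "UniqueIdentifier";
}

public class SketchDialect
{
    private readonly Dictionary<Type, SketchConverter> converters = new Dictionary<Type, SketchConverter>();

    // Registering a converter for a Type replaces any previous registration
    public void RegisterConverter<T>(SketchConverter converter) => converters[typeof(T)] = converter;

    public SketchConverter GetConverter<T>() => converters[typeof(T)];
}
```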

Debugging Converters

Custom Converters can also enable a better debugging story where if you want to see what value gets retrieved from the database, you can override and add a breakpoint on the base method letting you inspect the value returned from the ADO.NET Data Reader:

public class MyCustomGuidConverter : SqlServerGuidConverter

{

public override object FromDbValue(Type fieldType, object value)

{

return base.FromDbValue(fieldType, value); //add breakpoint

}

}

Enhancing an existing Converter

For example, you may want to use Guid properties in your POCO's on legacy tables that stored Guids in VARCHAR columns. In that case you can add support for converting the returned strings back into Guids with:

public class MyCustomGuidConverter : SqlServerGuidConverter

{

public override object FromDbValue(Type fieldType, object value)

{

var strValue = value as string;

return strValue != null

? new Guid(strValue)

: base.FromDbValue(fieldType, value);

}

}

Override handling of existing Types

Another popular Use Case that Converters now enable is overriding built-in functionality based on preference. E.g. by default, TimeSpans are stored in the database as Ticks in a BIGINT column, since that's the most reliable way to retain the same TimeSpan value uniformly across all RDBMS's.

E.g. SQL Server's TIME data type can't store times of 24 hours or more, and its fractional-second precision depends on the scale it's declared with (up to 100ns with TIME(7)). If a TIME column is still preferred, it can now be enabled by registering the new SqlServerTimeConverter instead:

SqlServerDialect.Provider.RegisterConverter<TimeSpan>(new SqlServerTimeConverter {

Precision = 7

});
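Plain .NET can illustrate the trade-off. Ticks round-trip any TimeSpan exactly through a `long`, whereas SQL Server's TIME type only covers 00:00:00 to 23:59:59.9999999. The `TimeSpanStorage` helper below is hypothetical and only mirrors that reasoning:

```csharp
using System;

// Hypothetical helper illustrating BIGINT ticks vs SQL Server TIME
public static class TimeSpanStorage
{
    // Default behavior described above: persist TimeSpan as Ticks in a BIGINT column
    public static long ToDbValue(TimeSpan value) => value.Ticks;

    public static TimeSpan FromDbValue(long ticks) => TimeSpan.FromTicks(ticks);

    // SQL Server TIME only covers 00:00:00 to 23:59:59.9999999,
    // so negative or >= 24 hour TimeSpans can't be stored in it
    public static bool FitsInSqlServerTime(TimeSpan value) =>
        value >= TimeSpan.Zero && value < TimeSpan.FromDays(1);
}
```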

Customizable Field Definitions

Another benefit is that Converters allow easy customization. For example, the Precision property shown above creates tables that use the TIME(7) Column definition for TimeSpan properties.

RDBMS's without a native Guid type, like Oracle or Firebird, gave you a choice of how to save Guids. You could save them as text for better readability (the default) or in a more efficient compact binary format.

Previously this preference was maintained in a boolean flag, along with multiple Guid implementations hard-coded at different entry points within each DialectProvider. This complexity has now been removed. To store Guids in a compact binary format, you now register the preferred Converter implementation instead, e.g:

FirebirdDialect.Provider.RegisterConverter<Guid>(

new FirebirdCompactGuidConverter());
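The two Guid storage options differ only in representation. The readable text form is 36 characters and the compact binary form is 16 bytes, and both round-trip without loss. The sketch below is hypothetical and uses only the BCL. It is not FirebirdCompactGuidConverter's actual implementation:

```csharp
using System;

// Hypothetical helper contrasting text vs compact binary Guid storage
public static class GuidStorage
{
    // Text format: 36 readable characters, e.g. "00112233-4455-6677-8899-aabbccddeeff"
    public static string ToText(Guid value) => value.ToString();

    // Compact binary format: 16 bytes
    public static byte[] ToCompactBinary(Guid value) => value.ToByteArray();

    public static Guid FromCompactBinary(byte[] bytes) => new Guid(bytes);
}
```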

Changing String Column Behavior

String columns are another area improved by Converters. Previously, any available field customizations required maintaining state inside each provider. Now each customization is encapsulated within its Converter and can be modified directly on the Converter's concrete Type. This avoids polluting the surface area of the primary IOrmLiteDialectProvider, which used to gain a new API every time a customization option was added.

Now to customize the behavior of how strings are stored you can change them directly on the StringConverter, e.g:

StringConverter converter = OrmLiteConfig.DialectProvider.GetStringConverter();

converter.UseUnicode = true;

converter.StringLength = 100;

This changes the default column definitions for strings to NVARCHAR(100) for RDBMS's that support Unicode, or VARCHAR(100) for those that don't.

The GetStringConverter() API is just an extension method that wraps the generic GetConverter() API to return a concrete type:

public static StringConverter GetStringConverter(this IOrmLiteDialectProvider dialect)

{

return (StringConverter)dialect.GetConverter(typeof(string));

}

Typed extension methods are also provided for other popular types, including GetDecimalConverter() and GetDateTimeConverter(), which offer additional customizations.

Specify the DateKind in DateTimes

Better cohesion, more re-use and reduced internal state make it much simpler to implement new features that behave the same way across all supported RDBMS's. One new feature we've added as a result is the DateStyle customization on DateTimeConverter, which lets you change how Dates are persisted and populated, e.g:

DateTimeConverter converter = OrmLiteConfig.DialectProvider.GetDateTimeConverter();

converter.DateStyle = DateTimeKind.Local;

This saves DateTimes in the database and populates them back on data models as Local Time.

This is also available for Utc:

converter.DateStyle = DateTimeKind.Utc;

Default is Unspecified which doesn't do any conversions and just uses the DateTime returned by the ADO.NET provider.
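One way to picture DateStyle is as a conversion on save plus a Kind tag on read. The sketch below is an assumption about the behavior, not DateTimeConverter's code. It also assumes the ADO.NET provider returns DateTimes with DateTimeKind.Unspecified. `DateStyleSketch` is a hypothetical name:

```csharp
using System;

// Hypothetical model of DateStyle behavior (not DateTimeConverter's implementation)
public static class DateStyleSketch
{
    // Before saving: normalize the value to the configured DateStyle
    public static DateTime ToDbValue(DateTime value, DateTimeKind dateStyle)
    {
        if (dateStyle == DateTimeKind.Utc) return value.ToUniversalTime();
        if (dateStyle == DateTimeKind.Local) return value.ToLocalTime();
        return value; // Unspecified: no conversion
    }

    // After reading: assumes the provider returned DateTimeKind.Unspecified,
    // so the value is tagged with the configured kind without shifting it
    public static DateTime FromDbValue(DateTime dbValue, DateTimeKind dateStyle) =>
        dateStyle == DateTimeKind.Unspecified
            ? dbValue
            : DateTime.SpecifyKind(dbValue, dateStyle);
}
```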

Examples of how the different DateStyles behave are available in DateTimeTests.

SQL Server Special Type Converters!

Just as the groundwork for Converters was laid down, @KevinHoward from the ServiceStack Community noticed that OrmLite could now be extended to support new Types. He promptly contributed Converters for the SQL Server-specific SqlGeography, SqlGeometry and SqlHierarchyId Types!

Since these Types require an external dependency to the Microsoft.SqlServer.Types NuGet package they're contained in a separate NuGet package that can be installed with:

PM> Install-Package ServiceStack.OrmLite.SqlServer.Converters

Alternative Strong-named version:

PM> Install-Package ServiceStack.OrmLite.SqlServer.Converters.Signed

Once installed, all available SQL Server Types can be registered on your SQL Server Provider with:

SqlServerConverters.Configure(SqlServer2012Dialect.Provider);

Example Usage

After the Converters are registered, these Types can be treated like normal .NET Types, e.g:

SqlHierarchyId Example:

public class Node {

[AutoIncrement]

public long Id { get; set; }

public SqlHierarchyId TreeId { get; set; }

}

db.DropAndCreateTable<Node>();

var treeId = SqlHierarchyId.Parse("/1/1/3/"); // 0x5ADE is hex

db.Insert(new Node { TreeId = treeId });

var parent = db.Scalar<SqlHierarchyId>(db.From<Node>().Select("TreeId.GetAncestor(1)"));

parent.ToString().Print(); //= /1/1/

SqlGeography and SqlGeometry Example:

public class GeoTest {

public long Id { get; set; }

public SqlGeography Location { get; set; }

public SqlGeometry Shape { get; set; }

}

db.DropAndCreateTable<GeoTest>();

var geo = SqlGeography.Point(40.6898329,-74.0452177, 4326); // Statue of Liberty

// A simple line from (0,0) to (4,4) Length = SQRT(2 * 4^2)

var wkt = new System.Data.SqlTypes.SqlChars("LINESTRING(0 0, 4 4)".ToCharArray());

var shape = SqlGeometry.STLineFromText(wkt, 0);

db.Insert(new GeoTest { Id = 1, Location = geo, Shape = shape });

var dbShape = db.SingleById<GeoTest>(1).Shape;

new { dbShape.STEndPoint().STX, dbShape.STEndPoint().STY }.PrintDump();

Output:

{

STX: 4,

STY: 4

}

New SQL Server 2012 Dialect Provider

There's a new SqlServer2012Dialect.Provider that takes advantage of optimizations available in recent versions of SQL Server. It's now the recommended provider for SQL Server 2012 and later.

container.Register<IDbConnectionFactory>(c =>

new OrmLiteConnectionFactory(connString, SqlServer2012Dialect.Provider));

The new SqlServer2012Dialect uses SQL Server's new OFFSET and FETCH support for more optimal paged queries. These replace the Windowing Function hack that earlier versions of SQL Server required.
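For reference, this is the general shape of an OFFSET/FETCH paged query in SQL Server 2012+. The `SqlServer2012Paging.Page` helper is hypothetical, and its output won't necessarily match the SQL the dialect emits verbatim. OFFSET/FETCH is only valid after an ORDER BY clause:

```csharp
using System;

// Hypothetical helper showing the shape of SQL Server 2012+ paging
public static class SqlServer2012Paging
{
    public static string Page(string selectSql, string orderBy, int skip, int take)
    {
        // OFFSET/FETCH can only follow an ORDER BY clause
        if (string.IsNullOrWhiteSpace(orderBy))
            throw new ArgumentException("OFFSET/FETCH requires an ORDER BY clause", nameof(orderBy));

        return $"{selectSql} ORDER BY {orderBy} OFFSET {skip} ROWS FETCH NEXT {take} ROWS ONLY";
    }
}
```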

Nested Typed Sub SqlExpressions

Johann Klemmack has expanded the Sql.In() API to support nesting and combining multiple Typed SQL Expressions in a single SQL Query, e.g:

var usaCustomerIds = db.From<Customer>(c => c.Country == "USA").Select(c => c.Id);

var usaCustomerOrders = db.Select(db.From<Order>()

.Where(q => Sql.In(q.CustomerId, usaCustomerIds)));

Descending Indexes

Descending composite Indexes can be declared with:

[CompositeIndex("Field1", "Field2 DESC")]

public class Poco { ... }

Dapper Updated

The embedded version of Dapper in the ServiceStack.OrmLite.Dapper namespace has been upgraded to the latest version of Dapper and also includes Dapper's Async API's in .NET 4.5 builds.

CSV Support for dynamic Dapper results

The CSV Serializer also added support for Dapper's dynamic results:

IEnumerable<dynamic> results = db.Query("select * from Poco");

string csv = CsvSerializer.SerializeToCsv(results);

OrmLite CSV Example

OrmLite avoids dynamic and instead prefers the use of code-first POCO's, where the above example translates to:

var results = db.Select<Poco>();

var csv = results.ToCsv();

To query untyped results in OrmLite when no POCO's exist, you can read them into a generic Dictionary:

var results = db.Select<Dictionary<string,object>>("select * from Poco");

var csv = results.ToCsv();

Order By Random

The new OrderByRandom() API abstracts the differences in each RDBMS to return rows in a random order:

var randomRows = db.Select<Poco>(q => q.OrderByRandom());
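The differences that OrderByRandom() abstracts over are mainly the random functions each RDBMS provides. The hypothetical lookup below covers a few common databases. The exact SQL OrmLite generates for each dialect may differ:

```csharp
using System;

// Hypothetical lookup of random-ordering SQL per RDBMS
public static class RandomOrder
{
    public static string OrderByRandomSql(string rdbms)
    {
        switch (rdbms.ToLowerInvariant())
        {
            case "sqlserver": return "ORDER BY NEWID()";
            case "postgresql":
            case "sqlite": return "ORDER BY RANDOM()";
            case "mysql": return "ORDER BY RAND()";
            case "oracle": return "ORDER BY DBMS_RANDOM.VALUE";
            default: throw new NotSupportedException(rdbms);
        }
    }
}
```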

Other OrmLite Features

CreateTableIfNotExists returns true if a new table was created. This makes it convenient to populate only non-existing tables with new data on your Application StartUp, e.g:

if (db.CreateTableIfNotExists<Poco>()) {

AddSeedData(db);

}

- OrmLite Debug Logging includes DB Param names and values

- Char fields now use CHAR(1)

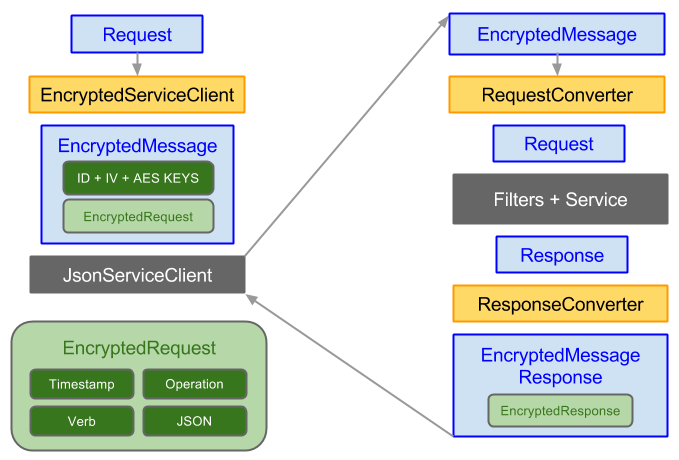

Encrypted Messaging

Encrypted Messages verified with HMAC SHA-256

The authenticity of Encrypted Messages is now verified with HMAC SHA-256, essentially following an Encrypt-then-MAC strategy. The change to the existing process is a new AES 256 Auth Key, which authenticates the encrypted data. The Auth Key is sent along with the Crypt Key, and both are encrypted with the Server's Public Key.
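The .NET BCL can illustrate the symmetric half of Encrypt-then-MAC. The Crypt Key encrypts with AES-256/CBC/PKCS7, and a separate Auth Key computes an HMAC SHA-256 tag over IV+ciphertext. On receipt, the tag is verified in constant time before anything is decrypted. This minimal sketch is not ServiceStack's implementation. It leaves out the RSA/OAEP step that wraps Kc and Ka, and `EncryptThenMac` is a hypothetical name:

```csharp
using System;
using System.Security.Cryptography;

// Hypothetical Encrypt-then-MAC sketch (symmetric part only, no RSA key wrapping)
public static class EncryptThenMac
{
    // Encrypts with AES-256/CBC/PKCS7, then appends HMAC SHA-256 over IV+ciphertext
    public static byte[] EncryptAndAuthenticate(byte[] plainText, byte[] cryptKey, byte[] authKey, byte[] iv)
    {
        byte[] cipherText;
        using (var aes = Aes.Create())
        {
            aes.Mode = CipherMode.CBC;
            aes.Padding = PaddingMode.PKCS7;
            using (var encryptor = aes.CreateEncryptor(cryptKey, iv))
                cipherText = encryptor.TransformFinalBlock(plainText, 0, plainText.Length);
        }

        var ivAndCipher = Concat(iv, cipherText);
        using (var hmac = new HMACSHA256(authKey))
            return Concat(ivAndCipher, hmac.ComputeHash(ivAndCipher)); // IV+(M)e+Tag
    }

    // Verifies the tag in constant time before decrypting anything
    public static byte[] VerifyAndDecrypt(byte[] message, byte[] cryptKey, byte[] authKey)
    {
        const int ivLength = 16, tagLength = 32;
        var ivAndCipher = message.AsSpan(0, message.Length - tagLength).ToArray();
        var tag = message.AsSpan(message.Length - tagLength).ToArray();

        using (var hmac = new HMACSHA256(authKey))
        {
            if (!CryptographicOperations.FixedTimeEquals(hmac.ComputeHash(ivAndCipher), tag))
                throw new CryptographicException("Message has been tampered with");
        }

        var iv = ivAndCipher.AsSpan(0, ivLength).ToArray();
        var cipherText = ivAndCipher.AsSpan(ivLength).ToArray();
        using (var aes = Aes.Create())
        {
            aes.Mode = CipherMode.CBC;
            aes.Padding = PaddingMode.PKCS7;
            using (var decryptor = aes.CreateDecryptor(cryptKey, iv))
                return decryptor.TransformFinalBlock(cipherText, 0, cipherText.Length);
        }
    }

    private static byte[] Concat(byte[] a, byte[] b)
    {
        var result = new byte[a.Length + b.Length];
        Buffer.BlockCopy(a, 0, result, 0, a.Length);
        Buffer.BlockCopy(b, 0, result, a.Length, b.Length);
        return result;
    }
}
```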

An updated version of this process is now:

- The client creates a new IEncryptedClient configured with the Server Public Key
- The client uses the IEncryptedClient to create an EncryptedMessage Request DTO, which:
  - Generates a new AES 256bit/CBC/PKCS7 Crypt Key (Kc), Auth Key (Ka) and IV
  - Encrypts the Crypt Key (Kc) and Auth Key (Ka) with the Server's Public Key padded with OAEP = (Kc+Ka+P)e
  - Authenticates (Kc+Ka+P)e with the IV using HMAC SHA-256 = IV+(Kc+Ka+P)e+Tag
  - Serializes the Request DTO to JSON packed with the current Timestamp, Verb and Operation = (M)
  - Encrypts (M) with the Crypt Key (Kc) and IV = (M)e
  - Authenticates (M)e with the Auth Key (Ka) and IV = IV+(M)e+Tag
  - Creates the EncryptedMessage DTO with the Server's KeyId, IV+(Kc+Ka+P)e+Tag and IV+(M)e+Tag
- The client uses the IEncryptedClient to send the populated EncryptedMessage to the remote Server

On the Server, the EncryptedMessagingFeature Request Converter processes the EncryptedMessage DTO:

- It uses the Private Key identified by KeyId, or the current Private Key if no KeyId was provided
- The Request Converter extracts IV+(Kc+Ka+P)e+Tag into IV and (Kc+Ka+P)e+Tag
- It decrypts (Kc+Ka+P)e+Tag with the Private Key into (Kc) and (Ka)
- The IV is checked against the nonce cache to verify it's never been used before, then cached
- IV+(Kc+Ka+P)e+Tag is verified with the Auth Key (Ka) to ensure it hasn't been tampered with
- IV+(M)e+Tag is verified with the Auth Key (Ka) to ensure it hasn't been tampered with
- IV+(M)e+Tag is decrypted using the Crypt Key (Kc) = (M)
- The timestamp is verified to be no older than EncryptedMessagingFeature.MaxRequestAge
- Any expired nonces are removed. (The timestamp and IV are used to prevent replay attacks)
- The JSON body is deserialized, and the resulting Request DTO is returned from the Request Converter
- The converted Request DTO is executed in ServiceStack's Request Pipeline as normal
- The EncryptedMessagingFeature Response Converter picks up the Response DTO:
  - Any Cookies set during the Request are removed
  - The Response DTO is serialized with the AES Key and returned in an EncryptedMessageResponse
- The IEncryptedClient decrypts the EncryptedMessageResponse with the AES Key
- The Response DTO is extracted and returned to the caller
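The server steps protect against replay with unique IV nonces plus a maximum request age. A small in-memory guard can model this. The `ReplayGuard` class is hypothetical and simplified: it isn't thread-safe, and it keys nonces by their Base64-encoded IV:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical replay guard modelled on the IV nonce + timestamp checks above
public class ReplayGuard
{
    private readonly TimeSpan maxRequestAge;
    private readonly Dictionary<string, DateTime> seenNonces = new Dictionary<string, DateTime>();

    public ReplayGuard(TimeSpan maxRequestAge) => this.maxRequestAge = maxRequestAge;

    // Returns true if the request should be accepted
    public bool TryAccept(byte[] iv, DateTime requestTimestampUtc, DateTime nowUtc)
    {
        // Remove expired nonces: replays that old fail the timestamp check anyway
        foreach (var expired in seenNonces.Where(x => nowUtc - x.Value > maxRequestAge).Select(x => x.Key).ToList())
            seenNonces.Remove(expired);

        if (nowUtc - requestTimestampUtc > maxRequestAge)
            return false; // request is too old

        var nonce = Convert.ToBase64String(iv);
        if (seenNonces.ContainsKey(nonce))
            return false; // IV was already used: replay

        seenNonces[nonce] = requestTimestampUtc;
        return true;
    }
}
```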

Support for versioning Private Keys with Key Rotations

The process above also introduced a new KeyId.

The KeyId is a human-readable string that identifies the Server's Public Key. It consists of the first 7 characters of the Public Key Modulus, which is visible when viewing the Private Key serialized as XML.

IEncryptedClient sends it automatically to tell the EncryptedMessagingFeature which Private Key should be used to decrypt the AES Crypt and Auth Keys.

By supporting multiple private keys, the Encrypted Messaging feature allows the seamless transition to a new Private Key without affecting existing clients who have yet to adopt the latest Public Key.

Transitioning to a new Private Key just involves adding the existing Private Key to the FallbackPrivateKeys collection while introducing a new Private Key, e.g:

Plugins.Add(new EncryptedMessagingFeature

{

PrivateKey = NewPrivateKey,

FallbackPrivateKeys = {

PreviousKey2015,

PreviousKey2014,

},

});
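The sketch below shows how a KeyId could be derived and used to choose between the current key and the fallback keys. It follows the description above: the first 7 characters of the Base64 Modulus, as shown in the key's XML form. `KeyIds` and its methods are hypothetical and not the EncryptedMessagingFeature API:

```csharp
using System;
using System.Collections.Generic;
using System.Security.Cryptography;

// Hypothetical KeyId derivation and key selection (not the EncryptedMessagingFeature API)
public static class KeyIds
{
    // First 7 chars of the Base64 Modulus, i.e. the <Modulus> value in the key's XML form
    public static string GetKeyId(RSAParameters key) =>
        Convert.ToBase64String(key.Modulus).Substring(0, 7);

    // Pick the key matching the client's KeyId, using the current key when none was sent
    public static RSAParameters ResolvePrivateKey(string keyId, RSAParameters current, IEnumerable<RSAParameters> fallbackKeys)
    {
        if (string.IsNullOrEmpty(keyId) || GetKeyId(current) == keyId)
            return current;

        foreach (var key in fallbackKeys)
            if (GetKeyId(key) == keyId)
                return key;

        throw new ArgumentException("Unknown KeyId: " + keyId);
    }
}
```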

Why Rotate Private Keys?

Anyone with a copy of the Private Key can decrypt encrypted messages, so rotating the Private Key that clients use limits the damage an adversary can do with a compromised key. If the current Private Key was somehow compromised, an attacker with access to the encrypted network packets could read every message encrypted with that key. This lasts only until the Server introduces a new Private Key and clients switch over to it.

Swagger UI

The Swagger Metadata backend has been upgraded to support the Swagger 1.2 Spec

Basic Auth added to Swagger UI

Users can call protected Services using the Username and Password fields in Swagger UI. Swagger sends these credentials with every API request using HTTP Basic Auth, which can be enabled in your AppHost with:

Plugins.Add(new AuthFeature(...,

new IAuthProvider[] {

new BasicAuthProvider(), //Allow Sign-ins with HTTP Basic Auth

}));
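HTTP Basic Auth itself is simple. The Authorization header is `Basic ` followed by the Base64 encoding of `username:password`, as defined in RFC 7617. The hypothetical helper below builds the value that Swagger UI would send:

```csharp
using System;
using System.Text;

// Hypothetical helper building an HTTP Basic Auth header value (RFC 7617)
public static class BasicAuth
{
    public static string AuthorizationHeader(string userName, string password) =>
        "Basic " + Convert.ToBase64String(Encoding.UTF8.GetBytes(userName + ":" + password));
}
```

For example, `AuthorizationHeader("Aladdin", "open sesame")` returns `Basic QWxhZGRpbjpvcGVuIHNlc2FtZQ==`.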

Alternatively, users can log in outside of Swagger to access protected Services in Swagger UI.

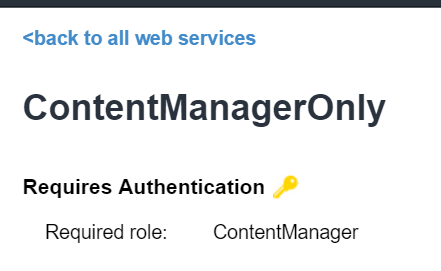

Auth Info displayed in Metadata Pages

Metadata pages now label protected Services. The metadata index page displays a yellow key next to each Service that requires Authentication:

Hovering over the key also shows which permissions or roles the Service needs.

The metadata detail pages also show this information and list the permissions/roles that are required (if any), e.g:

Java Native Types

Java Functional Utils

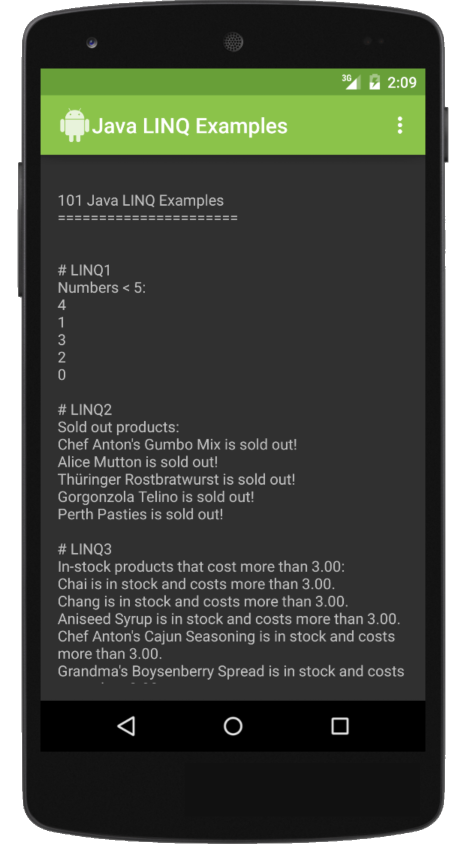

The Core Java Functional Utils required to run C#'s 101 LINQ Samples in Java have been added to the net.servicestack:client Java package. Because the package is compatible with Java 1.7, it also runs on Android:

Whilst noticeably more verbose than most languages, it enables a functional style of programming that provides an alternative to imperative programming with mutating collections and eases porting efforts of functional code which can be mapped to its equivalent core functional method.

New TreatTypesAsStrings option

Due to the unusual encoding of Guid bytes, it may be preferable to treat Guids as opaque strings instead, which makes them easier to compare with their original C# Guids.

This can be enabled with the new TreatTypesAsStrings option:

/* Options:

...

TreatTypesAsStrings: Guid

*/

Which will emit String data types for Guid properties that are deserialized back into .NET Guid's as strings.
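The "unusual encoding" refers to how .NET lays out Guid bytes. `Guid.ToByteArray()` stores the first three fields little-endian, so the byte order doesn't match the hex string. Here is a small BCL-only illustration. The `GuidBytes` helper is hypothetical:

```csharp
using System;

// Hypothetical helper showing .NET's Guid byte layout
public static class GuidBytes
{
    // Hex of Guid.ToByteArray(): the first three fields (4, 2 and 2 bytes)
    // are little-endian, the remaining 8 bytes keep their string order
    public static string ToHexBytes(Guid value) =>
        BitConverter.ToString(value.ToByteArray()).Replace("-", "").ToLowerInvariant();
}
```

For example, the Guid `00112233-4455-6677-8899-aabbccddeeff` is stored as the bytes `33221100554477668899aabbccddeeff`.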

Swift Native Types

All Swift reserved keywords are now escaped, allowing them to be used in DTO's.

Service Clients

All .NET Service Clients (inc. JsonHttpClient) can now send raw string, byte[] or Stream Request bodies in their custom Sync or Async API's, e.g:

string json = "{\"Key\":1}";

client.Post<SendRawResponse>("/sendraw", json);

byte[] bytes = json.ToUtf8Bytes();

client.Put<SendRawResponse>("/sendraw", bytes);

Stream stream = new MemoryStream(bytes);

await client.PostAsync<SendRawResponse>("/sendraw", stream);

Authentication

Community Azure Active Directory Auth Provider

Jacob Foshee from the ServiceStack Community has published a ServiceStack AuthProvider for Azure Active Directory.

To get started, Install it from NuGet with:

PM> Install-Package ServiceStack.Authentication.Aad

Then add the AadAuthProvider AuthProvider to your AuthFeature registration:

Plugins.Add(new AuthFeature(...,

new IAuthProvider[] {

new AadAuthProvider(AppSettings)

}));

See the docs on the Project's Homepage for instructions on configuring the Azure Active Directory OAuth Provider in your application's <appSettings/>.

MaxLoginAttempts

The MaxLoginAttempts feature has been moved out of OrmLiteAuthRepository and into a global option on the AuthFeature plugin, so it's now supported by all User Auth Repositories.

E.g. you can lock a User Account after 5 invalid login attempts with:

Plugins.Add(new AuthFeature(...) {

MaxLoginAttempts = 5

});
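As a rough model of MaxLoginAttempts, the hypothetical in-memory tracker below locks an account once the number of consecutive invalid logins reaches the limit. Two points are assumptions of this sketch: a successful login resets the count, and the state is held in memory. Real User Auth Repositories persist this state instead:

```csharp
using System.Collections.Generic;

// Hypothetical lockout tracker illustrating MaxLoginAttempts semantics
public class LoginAttemptTracker
{
    private readonly int maxLoginAttempts;
    private readonly Dictionary<string, int> invalidAttempts = new Dictionary<string, int>();
    private readonly HashSet<string> lockedUsers = new HashSet<string>();

    public LoginAttemptTracker(int maxLoginAttempts) => this.maxLoginAttempts = maxLoginAttempts;

    public bool IsLocked(string userName) => lockedUsers.Contains(userName);

    public void RecordLogin(string userName, bool succeeded)
    {
        if (IsLocked(userName)) return;

        // Assumption: a successful login resets the invalid attempt count
        if (succeeded) { invalidAttempts.Remove(userName); return; }

        invalidAttempts.TryGetValue(userName, out var count);
        invalidAttempts[userName] = ++count;
        if (count >= maxLoginAttempts)
            lockedUsers.Add(userName);
    }
}
```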

Generate New Session Cookies on Authentication

Previously, the Authentication provider only removed a User's Cookies after they explicitly logged out. The AuthFeature now also regenerates new Session Cookies each time users log in. You may have relied on users keeping the same cookies, e.g. to track anonymous user activity. If so, this behavior can be disabled with:

Plugins.Add(new AuthFeature(...) {

GenerateNewSessionCookiesOnAuthentication = false

});

ClientId and ClientSecret OAuth Config Aliases

OAuth Providers can now use ClientId and ClientSecret aliases instead of ConsumerKey and ConsumerSecret, e.g:

<appSettings>

<add key="oauth.twitter.ClientId" value="..." />

<add key="oauth.twitter.ClientSecret" value="..." />

</appSettings>

Error Handling

Custom Response Error Codes

You can customize the HTTP Response Body of C# Exceptions with IResponseStatusConvertible. You can also customize the HTTP Status Code by implementing IHasStatusCode:

public class Custom401Exception : Exception, IHasStatusCode

{

public int StatusCode

{

get { return 401; }

}

}

This is a more cohesive alternative to registering the equivalent StatusCode Mapping:

SetConfig(new HostConfig {

MapExceptionToStatusCode = {

{ typeof(Custom401Exception), 401 },

}

});
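Resolving the status code could look like the sketch below. It declares a local stand-in interface so that it compiles without ServiceStack. The precedence it uses is an assumption, not ServiceStack's documented order: first the exception's own StatusCode, then the type mapping, then 500.

```csharp
using System;
using System.Collections.Generic;

// Local stand-in for IHasStatusCode so the sketch compiles on its own
public interface IHasStatusCodeSketch
{
    int StatusCode { get; }
}

public class Custom401Exception : Exception, IHasStatusCodeSketch
{
    public int StatusCode => 401;
}

// Hypothetical resolver; the precedence below is an assumption
public static class StatusCodeResolver
{
    public static int Resolve(Exception ex, IDictionary<Type, int> mapExceptionToStatusCode)
    {
        if (ex is IHasStatusCodeSketch hasStatusCode)
            return hasStatusCode.StatusCode;
        if (mapExceptionToStatusCode.TryGetValue(ex.GetType(), out var statusCode))
            return statusCode;
        return 500;
    }
}
```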

Meta Dictionary on ResponseStatus and ResponseError

An IMeta Dictionary has been added to the ResponseStatus and ResponseError DTO's. It provides a placeholder for sending additional context with errors.

The Meta dictionary is populated automatically from the CustomState of any FluentValidation ValidationFailure whose CustomState is a Dictionary<string, string>.

Server Events

The new ServerEventsFeature.HouseKeepingInterval option controls the minimum interval at which SSE connections are scanned and expired subscriptions removed. The default is every 5 seconds.

There's no background Thread managing SSE connections, so the cleanup happens in the periodic SSE heartbeat handlers.

Update: An issue with this feature has been resolved in the v4.0.45 pre-release NuGet packages.

ServiceStack.Text

There are new convenient extension methods for Converting any POCO to and from Object Dictionary, e.g:

var dto = new User

{

FirstName = "First",

LastName = "Last",

Car = new Car { Age = 10, Name = "ZCar" },

};

Dictionary<string,object> map = dto.ToObjectDictionary();

User user = (User)map.FromObjectDictionary(typeof(User));

Like most Reflection API's in ServiceStack this is fairly efficient as it uses cached compiled delegates.
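For comparison, the sketch below is a plain reflection version of the same round trip. Unlike ServiceStack.Text, it doesn't cache compiled delegates, and it copies nested objects by reference. `ObjectDictionarySketch` is a hypothetical name:

```csharp
using System;
using System.Collections.Generic;

// Hypothetical reflection-based sketch (no cached delegates, shallow copy)
public static class ObjectDictionarySketch
{
    public static Dictionary<string, object> ToDictionary(object obj)
    {
        var map = new Dictionary<string, object>();
        foreach (var prop in obj.GetType().GetProperties())
            if (prop.CanRead && prop.GetIndexParameters().Length == 0)
                map[prop.Name] = prop.GetValue(obj);
        return map;
    }

    public static T FromDictionary<T>(Dictionary<string, object> map) where T : new()
    {
        var obj = new T();
        foreach (var prop in typeof(T).GetProperties())
            if (prop.CanWrite && prop.GetIndexParameters().Length == 0
                && map.TryGetValue(prop.Name, out var value))
                prop.SetValue(obj, value);
        return obj;
    }
}

public class Car { public int Age { get; set; } public string Name { get; set; } }

public class User { public string FirstName { get; set; } public string LastName { get; set; } public Car Car { get; set; } }
```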

There's also an extension method for adding types to List<Type>, e.g:

var types = new List<Type>()

.Add<User>()

.Add<Car>();

Which is a cleaner equivalent to:

var types = new List<Type>();

types.Add(typeof(User));

types.Add(typeof(Car));
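An extension method along these lines would enable the chained `Add<T>()` calls. This is a sketch with a hypothetical class name, and ServiceStack's own version may differ. The instance method `List<Type>.Add(Type)` can't take type arguments, so the compiler resolves `Add<User>()` to the generic extension method:

```csharp
using System;
using System.Collections.Generic;

// Hypothetical sketch of a chainable Add<T>() extension for List<Type>
public static class TypeListExtensions
{
    // Adds typeof(T) and returns the same list so calls can be chained
    public static List<Type> Add<T>(this List<Type> types)
    {
        types.Add(typeof(T));
        return types;
    }
}
```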