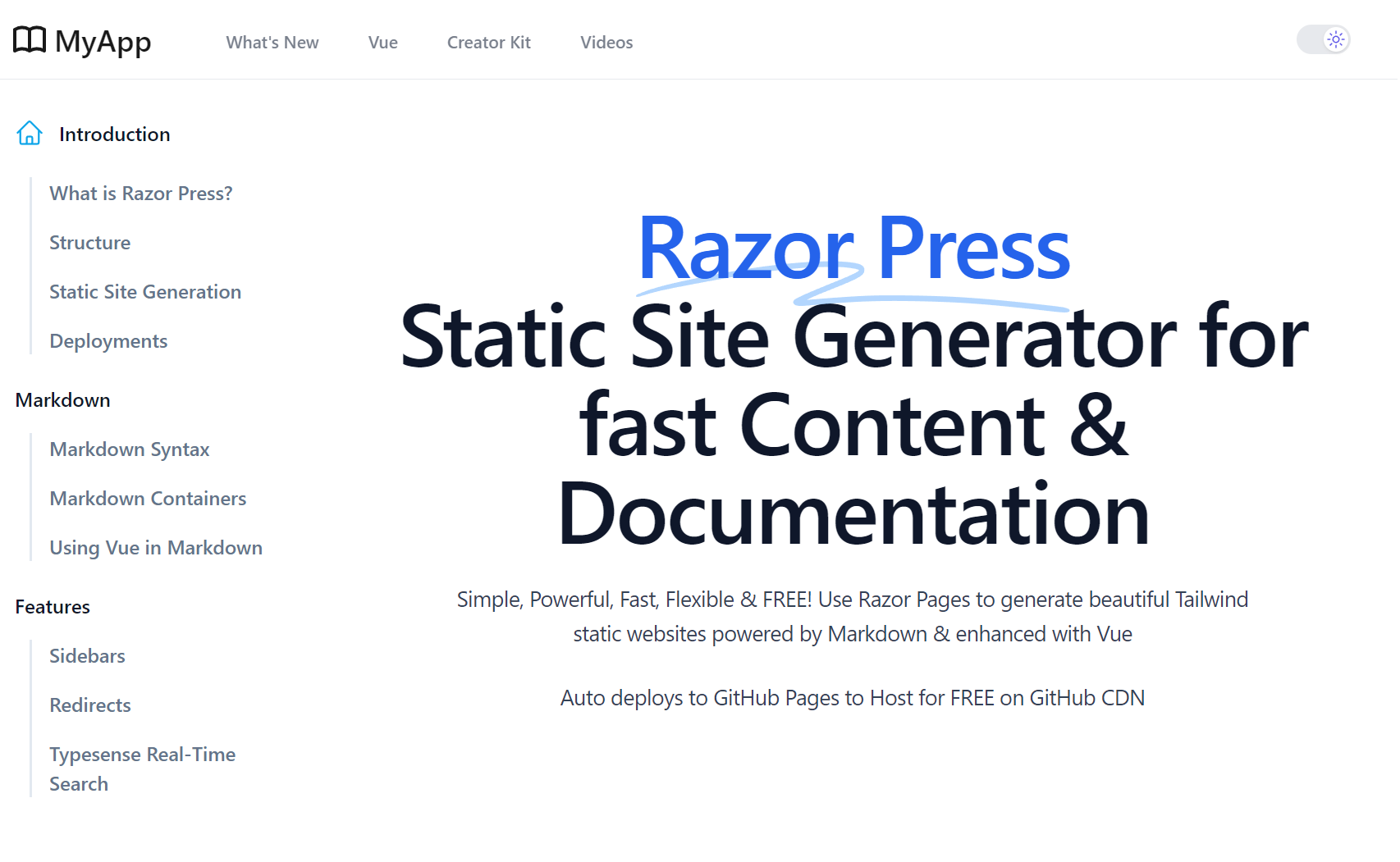

Introducing Razor Press


SSG Razor Pages alternative to VitePress & Jekyll for creating beautiful docs

Razor Press is a Razor Pages and Markdown powered alternative to Ruby's Jekyll & Vue's VitePress that's ideal for generating fast, static, content-centric & documentation websites. Inspired by VitePress, it's designed to effortlessly create documentation from content written in Markdown, rendered with C# Razor Pages and beautifully styled with tailwindcss and @tailwindcss/typography.

The resulting statically generated HTML pages can be deployed anywhere and hosted by any HTTP Server or CDN. By default it includes GitHub Actions to deploy it to your GitHub Repo's gh-pages branch, where it's hosted for FREE on the GitHub Pages CDN, which can be easily configured to use your Custom Domain.

Install Razor Press

Download a new Razor Press Project with your preferred Project name:

Alternatively you can install a new project template using the x dotnet tool:

x new razor-press ProjectName

Use Cases

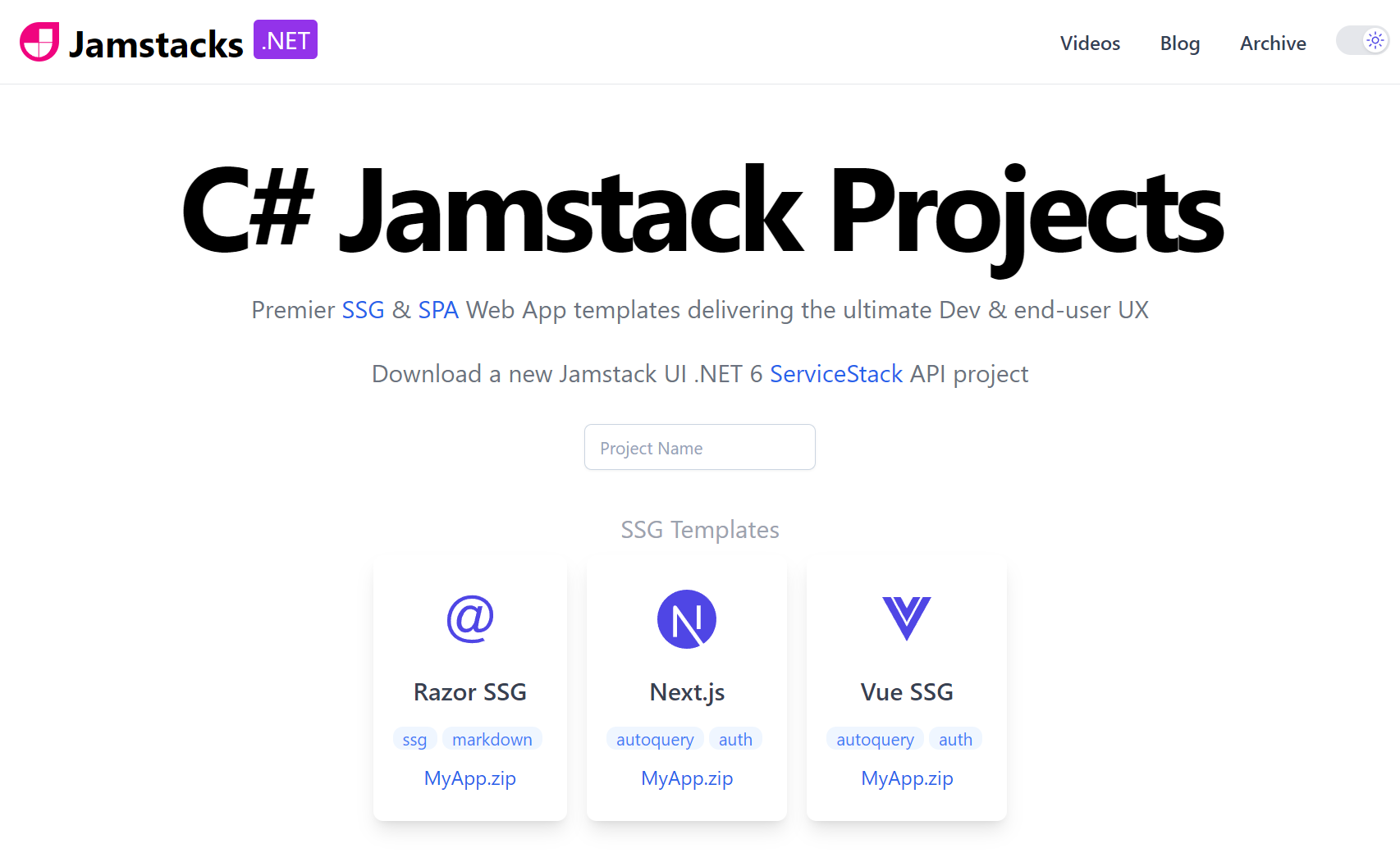

Razor Press utilizes the same technology as Razor SSG, the template we recommend for developing any statically generated site with Razor, like Blogs, Portfolios and Marketing Sites, as it includes more Razor & Markdown features like blogs and integration with Creator Kit - a companion OSS project that offers the tools any static website can use to reach and retain users, from managing subscriber mailing lists to moderating a feature-rich comments system.

Some examples built with Razor SSG include:

Documentation

Razor Press is instead optimized for creating documentation and content-centric websites, with built-in features useful for documentation websites including:

- Customizable Sidebar Menus

- Document Maps

- Document Page Navigation

- Autolink Headers

Markdown Extensions

- Markdown Content Includes

- Tip, Info, Warning, Danger sections

- Copy and Shell command widgets

But given Razor Press and Razor SSG share the same implementation, their features are easily transferable, e.g. the What's New and Videos sections were copied from Razor SSG as they're also useful in documentation websites.

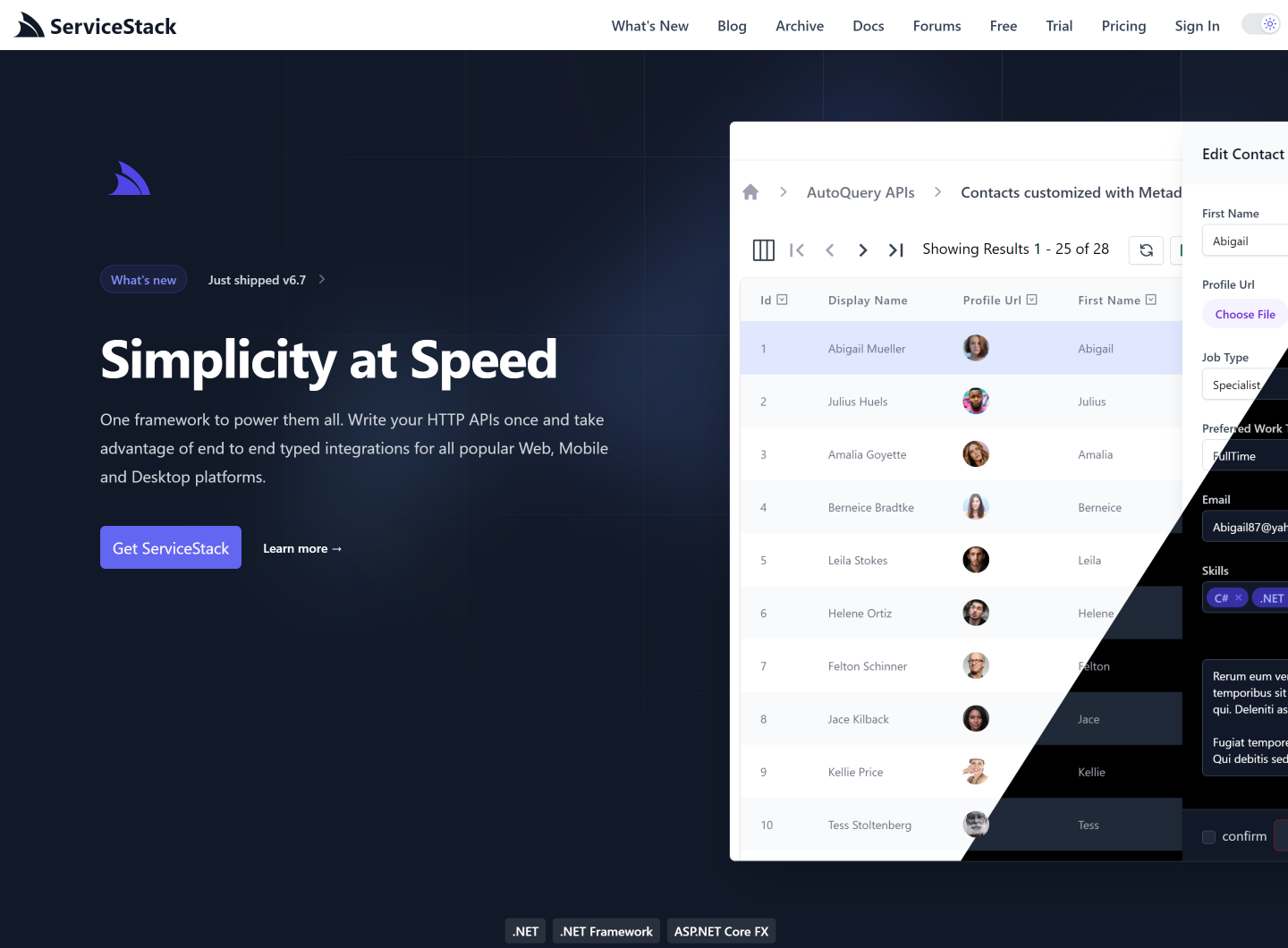

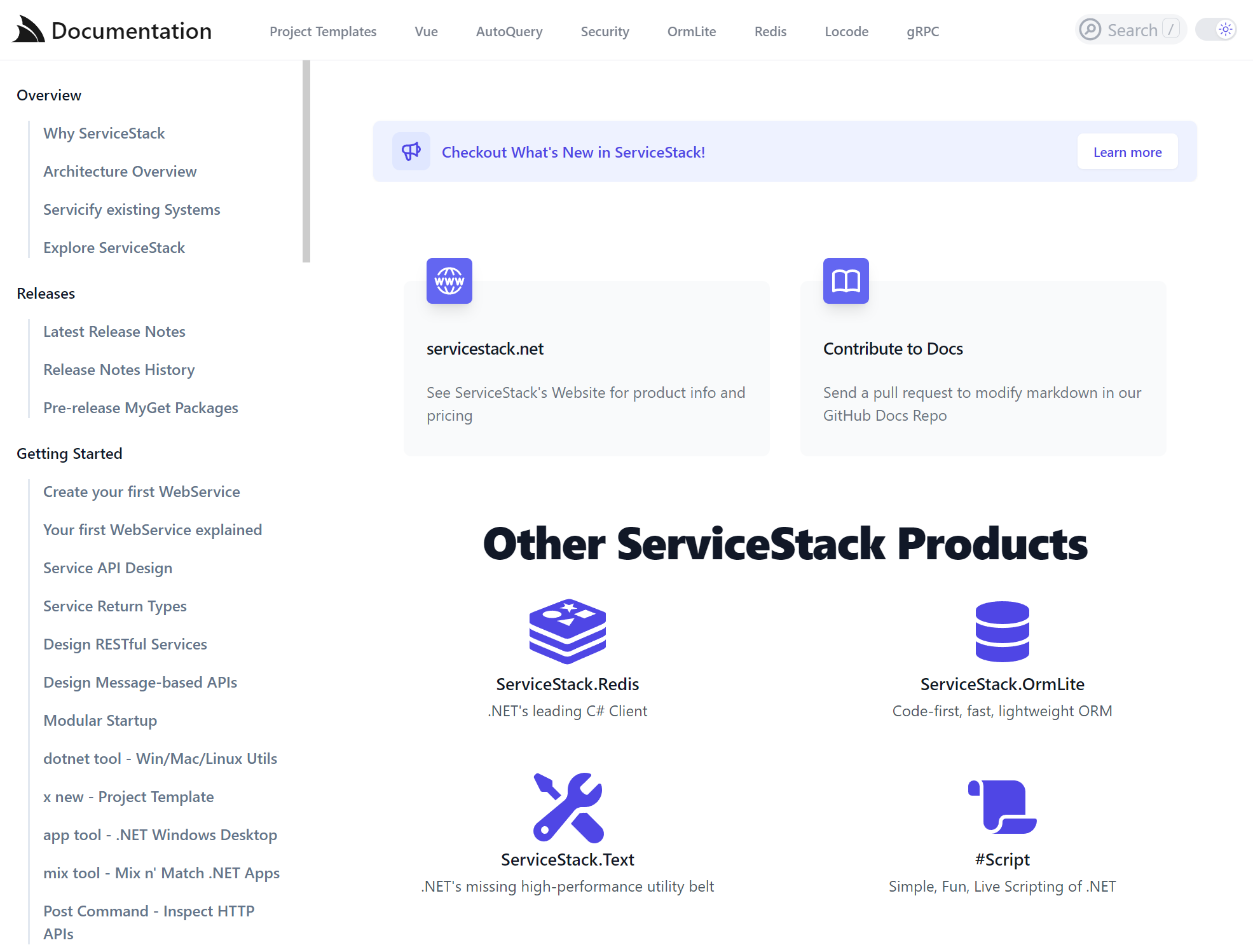

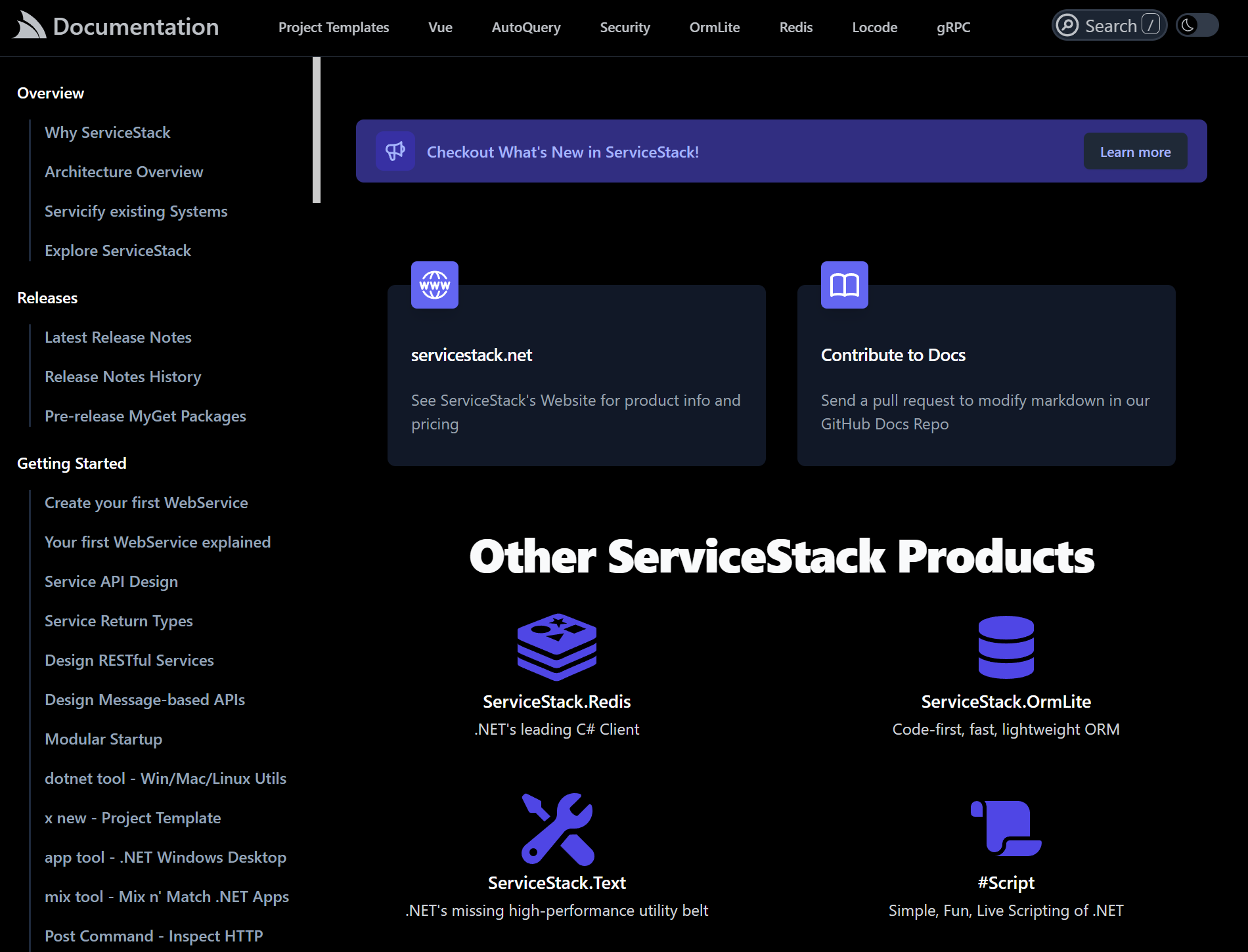

docs.servicestack.net ported to Razor Press


Following in the footsteps of porting the servicestack.net website from Jekyll to Razor SSG, we've decided to also take control over our last active VitePress website and port docs.servicestack.net to Razor Press. This gives us complete control over its implementation, allowing us to resolve any issues and add any features ourselves as needed, while freeing us from the complexity and brittleness of the npm ecosystem with a more robust C# and Razor Pages SSG based implementation.

VitePress Issues

Our 500 page docs.servicestack.net started experiencing growing pains under VitePress in the form of rendering issues that we believe stem from VitePress's SSR/SPA hydration model, which for maximum performance converts the initially downloaded SSR content into an SPA to speed up navigation between pages.

Several pages began to randomly show duplicate content and sometimes not display the bottom section of the page at all. For a while we worked around these issues by running custom JavaScript to detect and remove duplicate content from the DOM after the page loaded, as well as moving the bottom fragments of pages with missing content into separate includes and external Vue components.

However, as detecting and working around these issues across all our documentation became too time-consuming, it was time to consider a more permanent and effective solution.

Porting to Razor SSG

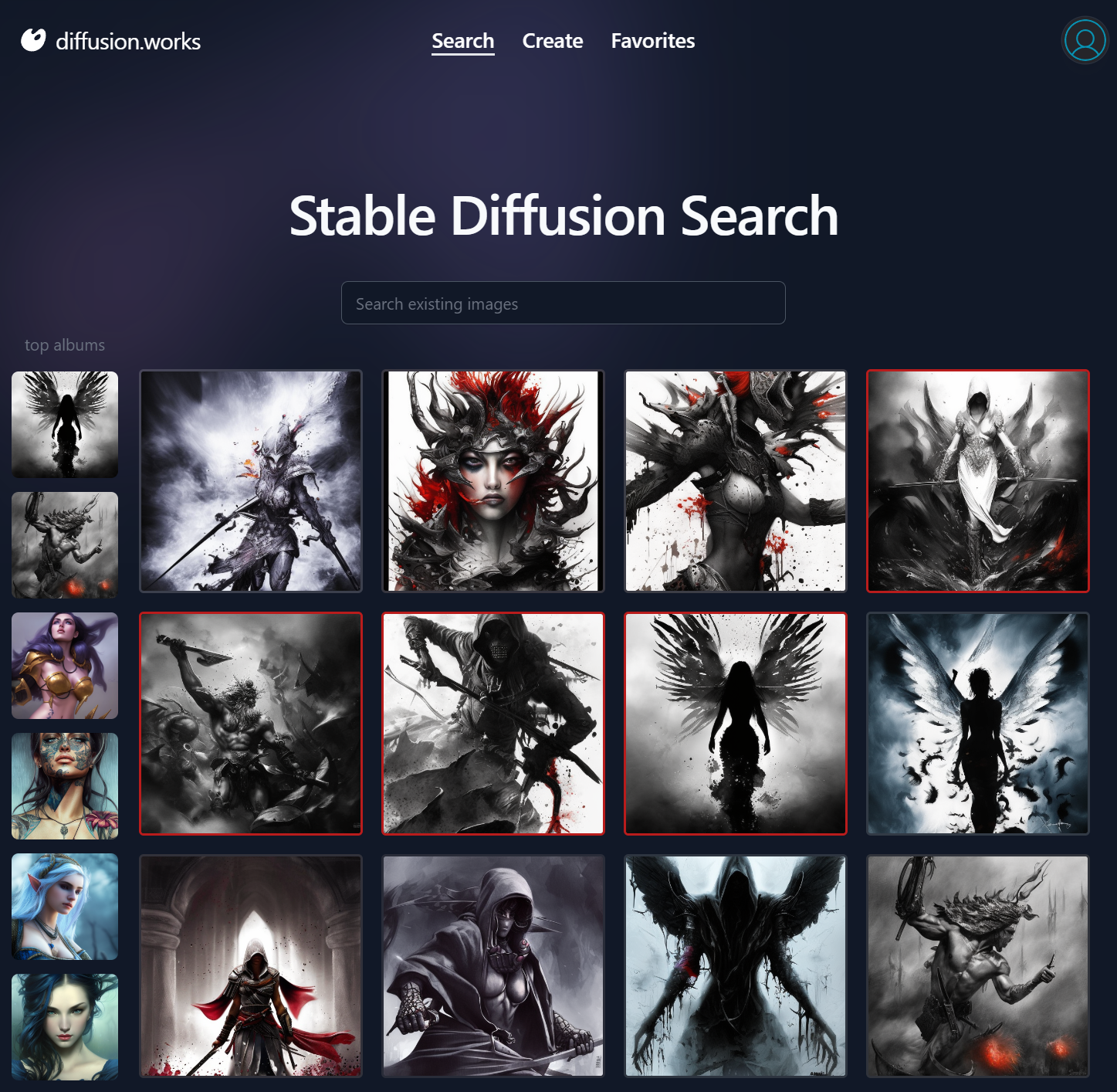

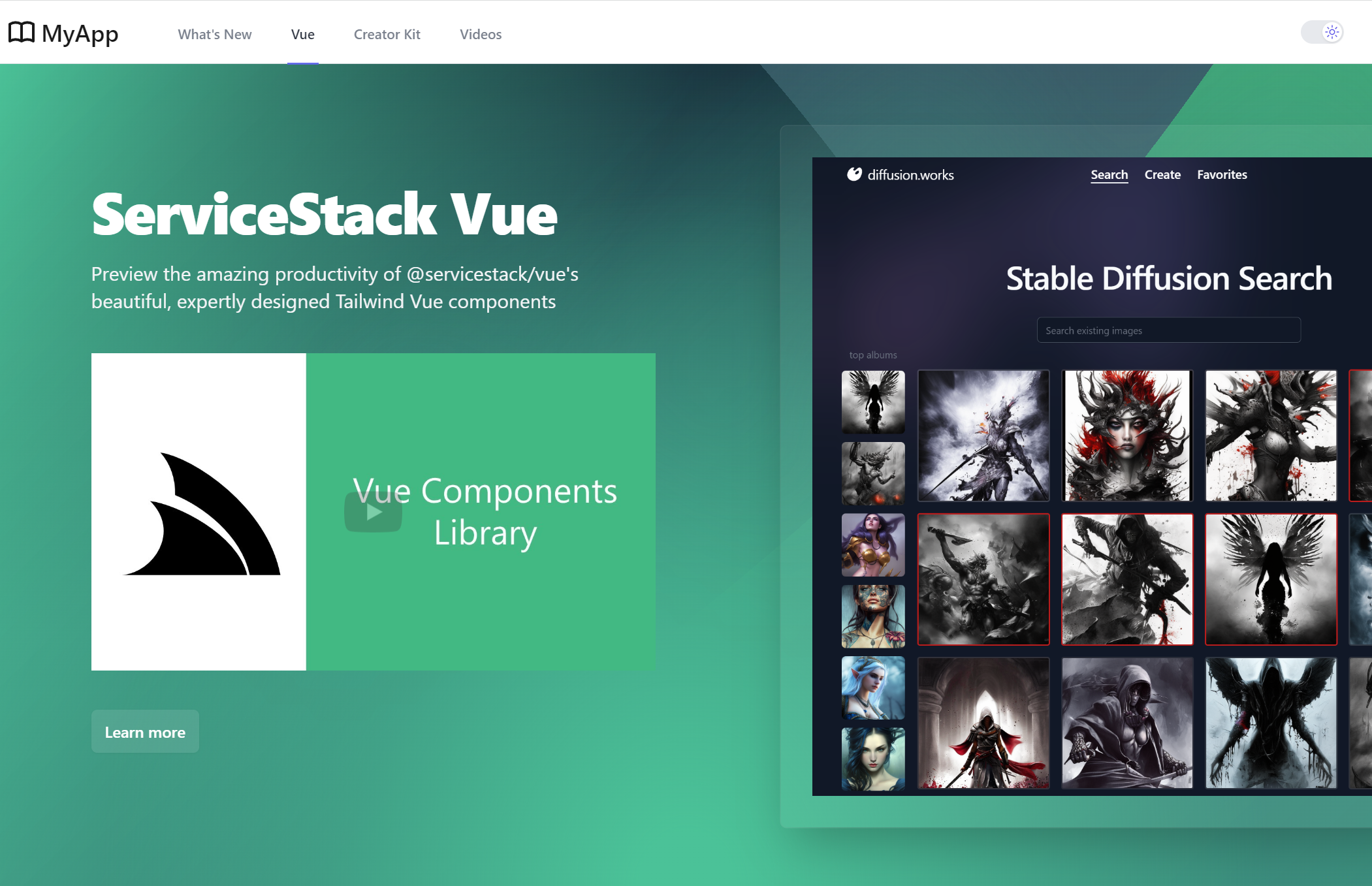

Given we had already spent time & effort porting docs.servicestack.net from Jekyll to VitePress less than 2 years ago, and after the success we had rapidly porting servicestack.net to Razor SSG and creating Vue Stable Diffusion with Razor SSG in a fraction of the time it took to develop the equivalent Blazor Diffusion, it was clear we should do the same for our documentation website.

Porting docs.servicestack.net ended up being a fairly straightforward process that was completed in just a few days, with most of the time spent reimplementing the VitePress features we used as C# and Markdig Extensions, creating a new Responsive Tailwind Layout and adding support for Dark Mode, which was never previously supported.

Fortunately none of VitePress's SSR/SPA hydration issues manifested in the port, which adopted the cleaner, traditional architecture of generating clean HTML from Markdown and Razor Pages that's enhanced on the client-side with Vue.

We're extremely happy with the result, a much lighter and cleaner HTML generated site that now supports Dark Mode!

Razor Pages Benefits

The new Razor SSG implementation now benefits from Razor Pages' flexible layouts and partials, where pages can optionally be implemented in just Markdown, Razor or a hybrid mix of both. The Vue splash page is an example of this, implemented in a custom /Vue/Index.cshtml Razor Page.

Other benefits include a new Documentation Map feature with live scroll updating, displayed on the right side of each documentation page.

New in Razor SSG

We've continued improving the Razor SSG template for static generated websites and Blogs.

RSS Feed

Razor SSG websites now generate a valid RSS Feed for their blog to support readers who'd prefer to read blog posts in their favorite RSS reader.
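For readers unfamiliar with the format, an RSS 2.0 feed is an XML document whose channel holds one item per post. The JavaScript sketch below renders one from a list of posts. It is not Razor SSG's own implementation: the buildRssFeed helper, the post fields (title, slug, summary, date) and the /posts/{slug} URL layout are all hypothetical.

```javascript
// Escape the five XML special characters so post text can't break the feed markup
function escapeXml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// Hypothetical helper: render an RSS 2.0 document with one <item> per blog post
function buildRssFeed({ title, link, description, posts }) {
  const items = posts.map(post => {
    const url = `${link}/posts/${post.slug}`; // assumed URL layout
    return [
      "<item>",
      `<title>${escapeXml(post.title)}</title>`,
      `<link>${escapeXml(url)}</link>`,
      `<guid>${escapeXml(url)}</guid>`,
      `<description>${escapeXml(post.summary)}</description>`,
      // RSS uses RFC 822 style dates, e.g. "Tue, 01 Aug 2023 00:00:00 GMT"
      `<pubDate>${new Date(post.date).toUTCString()}</pubDate>`,
      "</item>",
    ].join("");
  });
  return [
    '<?xml version="1.0" encoding="utf-8"?>',
    '<rss version="2.0"><channel>',
    `<title>${escapeXml(title)}</title>`,
    `<link>${escapeXml(link)}</link>`,
    `<description>${escapeXml(description)}</description>`,
    ...items,
    "</channel></rss>",
  ].join("\n");
}
```

Escaping every text field matters because post titles and summaries routinely contain characters like & and <, which would otherwise make the feed invalid XML.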

New Markdown Containers

All Razor Press's Markdown Containers are also available in Razor SSG websites for enabling rich, wrist-friendly consistent markup in your Markdown pages, e.g:

::: info
This is an info box.
:::

::: tip
This is a tip.
:::

::: warning
This is a warning.
:::

::: danger
This is a dangerous warning.
:::

:::copy
Copy Me!
:::


See Markdown Containers docs for more examples and how to implement your own Custom Markdown containers.
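To show roughly how a container block maps to HTML, here is a simplified, line-based JavaScript sketch. It is not the Markdig extension Razor Press uses: the renderContainers function and the custom-block CSS class names are assumptions, and container bodies are emitted as plain text rather than rendered as Markdown.

```javascript
// Titles shown for the alert-style containers; other types (e.g. copy) get no title
const CONTAINER_TITLES = new Map([
  ["info", "INFO"],
  ["tip", "TIP"],
  ["warning", "WARNING"],
  ["danger", "DANGER"],
]);

// Hypothetical line-based transform of "::: type" ... ":::" blocks into <div> wrappers
function renderContainers(markdown) {
  const out = [];
  let type = null;   // container type currently open, or null
  let openLine = ""; // original opening line, kept in case the block is never closed
  let body = [];
  for (const line of markdown.split("\n")) {
    const trimmed = line.trim();
    const start = trimmed.match(/^:::\s*(\w+)$/);
    if (type === null && start) {
      type = start[1];
      openLine = line;
      body = [];
    } else if (type !== null && trimmed === ":::") {
      const title = CONTAINER_TITLES.get(type);
      const heading = title ? `<p class="custom-block-title">${title}</p>` : "";
      // Body is kept as plain text; a real implementation would render it as Markdown
      const text = body.filter(l => l.trim() !== "").join("\n");
      out.push(`<div class="custom-block ${type}">${heading}<p>${text}</p></div>`);
      type = null;
    } else if (type !== null) {
      body.push(line);
    } else {
      out.push(line);
    }
  }
  // An unterminated container is emitted unchanged
  if (type !== null) out.push(openLine, ...body);
  return out.join("\n");
}
```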

Support for Includes

Markdown fragments can be added to _pages/_include, a special folder rendered with Pages/Includes.cshtml using an Empty Layout, so they can be included in other Markdown and Razor Pages or fetched on demand with Ajax.

Markdown Fragments can then be included inside other Markdown documents with the ::include inline container, e.g:

::include vue/formatters.md::

Where it will be replaced with the rendered HTML contents of the fragment maintained in _pages/_include.
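Conceptually, the ::include container is a text substitution. The following JavaScript sketch assumes the fragments have already been rendered to HTML and are keyed by their path relative to _pages/_include; the expandIncludes name and the choice to leave unknown includes untouched are illustrative, not Razor Press's documented behavior.

```javascript
// Hypothetical sketch: replace each line consisting of "::include <path>::" with its pre-rendered fragment
function expandIncludes(markdown, fragments) {
  // [ \t]* rather than \s* so the pattern never swallows the following newline
  return markdown.replace(/^::include[ \t]+(\S+?)::[ \t]*$/gm, (match, path) =>
    // Leave the directive untouched when no fragment exists for that path
    fragments.has(path) ? fragments.get(path) : match
  );
}
```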

Include Markdown in Razor Pages

Markdown Fragments can also be included in Razor Pages using the custom MarkdownTagHelper.cs <markdown/> tag:

<markdown include="vue/formatters.md"></markdown>

Inline Markdown in Razor Pages

Alternatively markdown can be rendered inline with:

<markdown>
## Using Formatters

Your App and custom templates can also utilize @servicestack/vue's
[built-in formatting functions](/vue/use-formatters).
</markdown>

Meta Headers support for Twitter cards and Improved SEO

Blog Posts and Pages now include additional <meta> HTML headers to enable support for Twitter Cards in both Twitter and Meta's new threads.net.
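For reference, Twitter Cards are driven by twitter:* meta tags, while many other platforms read Open Graph og:* tags. The JavaScript sketch below builds both from a post's metadata; the exact set of tags Razor SSG emits may differ, and the socialMetaTags helper and its input fields are hypothetical.

```javascript
// Escape characters that would break out of a double-quoted HTML attribute
function escapeAttr(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

// Hypothetical helper: Twitter Card (name="twitter:*") and Open Graph (property="og:*") tags for a post
function socialMetaTags({ title, description, imageUrl, url }) {
  const tags = [
    ["name", "twitter:card", "summary_large_image"],
    ["name", "twitter:title", title],
    ["name", "twitter:description", description],
    ["name", "twitter:image", imageUrl],
    ["property", "og:type", "article"],
    ["property", "og:url", url],
    ["property", "og:title", title],
    ["property", "og:description", description],
    ["property", "og:image", imageUrl],
  ];
  return tags
    .map(([attr, key, value]) => `<meta ${attr}="${key}" content="${escapeAttr(value)}">`)
    .join("\n");
}
```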

Posts can include Vue Components

Blog Posts can now embed any global Vue Components directly in their Markdown, e.g:

<getting-started></getting-started>

Just like Pages and Docs, they can also include specific JavaScript .mjs or .css files in the /wwwroot/posts folder which will only be loaded for that post.

Now posts that need it can dynamically load large libraries like Chart.js and use them inside a custom Vue component, e.g:

import { ref, onMounted } from "vue"
import { addScript } from "@servicestack/client"

const addChartsJs = await addScript('../lib/js/chart.js')

const ChartJs = {
    template:`<div><canvas ref="chart"></canvas></div>`,
    props:['type','data','options'],
    setup(props) {
        const chart = ref()
        onMounted(async () => {
            await addChartsJs
            const options = props.options || {
                responsive: true,
                legend: { position: "top" }
            }
            // Chart is the global defined by the chart.js script loaded above
            new Chart(chart.value, {
                type: props.type || "bar",
                data: props.data,
                options,
            })
        })
        return { chart }
    }
}

export default {
    components: { ChartJs }
}

Which allows the post to embed Chart.js charts using the custom <chart-js> Vue component and a JS Object literal, e.g:

<chart-js :data="{
    labels: [
        //...
    ],
    datasets: [
        //...
    ]
}"></chart-js>

Which the Bulk Insert Performance Blog Post uses extensively to embed its Chart.js Bar charts.

Light and Dark Mode Query Params

You can link to the Dark and Light modes of your Razor SSG website with the ?dark and ?light query string params.
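A client-side check for these params could look like the following JavaScript sketch. The resolveColorScheme name, the fallback argument and the rule that ?dark wins when both params are present are assumptions, not Razor SSG's documented behavior.

```javascript
// Hypothetical sketch: pick a color scheme from ?dark / ?light, else use the fallback preference
function resolveColorScheme(search, fallback = "light") {
  const params = new URLSearchParams(search); // a leading "?" is ignored
  if (params.has("dark")) return "dark";      // assumed precedence when both are present
  if (params.has("light")) return "light";
  return fallback;
}
```

In a browser the result could then toggle Tailwind's class-based dark mode, e.g. document.documentElement.classList.toggle("dark", resolveColorScheme(location.search) === "dark"), assuming the site uses Tailwind's class strategy for dark mode.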

Blog Post Authors can have threads.net and Mastodon links

The social links for Blog Post Authors can now include threads.net and mastodon.social links, e.g:

{
    "AppConfig": {
        "BlogImageUrl": "https://servicestack.net/img/logo.png",
        "Authors": [
            {
                "Name": "Lucy Bates",
                "Email": "lucy@email.org",
                "ProfileUrl": "img/authors/author1.svg",
                "TwitterUrl": "https://twitter.com/lucy",
                "ThreadsUrl": "https://threads.net/@lucy",
                "GitHubUrl": "https://github.com/lucy",
                "MastodonUrl": "https://mastodon.social/@lucy"
            }
        ]
    }
}

RDBMS Bulk Inserts

The latest release of OrmLite includes Bulk Insert implementations for each supported RDBMS that use the most efficient way to insert large amounts of data, encapsulated behind OrmLite's new BulkInsert API:

db.BulkInsert(rows);

Bulk Insert Implementations

The optimal implementation differs for each RDBMS:

- PostgreSQL - Uses PostgreSQL's COPY command via Npgsql's Binary Copy import

- MySql - Uses the MySqlBulkLoader feature where data is written to a temporary CSV file that's imported directly by MySqlBulkLoader

- MySqlConnector - Uses MySqlConnector's MySqlBulkLoader implementation, which makes use of its SourceStream feature to avoid writing to a temporary file

- SQL Server - Uses SQL Server's SqlBulkCopy feature, which imports data written to an in-memory DataTable

- SQLite - SQLite doesn't have a specific import feature, instead Bulk Inserts are performed using batches of Multiple Rows Inserts to reduce I/O calls down to a configurable batch size

- Firebird - Is also implemented using Multiple Rows Inserts within an EXECUTE BLOCK, configurable up to Firebird's maximum of 256 statements

SQL Multiple Row Inserts

All RDBMS's also support SQL's Multiple Row Inserts feature, an efficient and compact way of inserting multiple rows within a single INSERT statement:

INSERT INTO Contact (Id, FirstName, LastName, Age) VALUES
(1, 'John', 'Doe', 27),
(2, 'Jane', 'Doe', 42);
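To illustrate how such a statement can be built with inline values instead of parameters, here's a simplified JavaScript sketch. It isn't OrmLite's implementation: multiRowInsert and sqlLiteral are hypothetical, only strings, finite numbers and null are handled, table and column names are assumed to be trusted identifiers, and real dialects need extra care (e.g. MySQL may also treat backslashes as escape characters).

```javascript
// Render a JS value as an inline SQL literal (simplified: strings, finite numbers and null only)
function sqlLiteral(value) {
  if (value === null || value === undefined) return "NULL";
  if (typeof value === "number") {
    if (!Number.isFinite(value)) throw new Error(`Cannot inline non-finite number: ${value}`);
    return String(value);
  }
  // Standard SQL escapes a single quote inside a string literal by doubling it
  if (typeof value === "string") return `'${value.replace(/'/g, "''")}'`;
  throw new Error(`Unsupported value type: ${typeof value}`);
}

// Build one multiple-row INSERT statement for rows that share the same columns
function multiRowInsert(table, columns, rows) {
  if (rows.length === 0) throw new Error("At least one row is required");
  const values = rows.map(row => `(${columns.map(c => sqlLiteral(row[c])).join(", ")})`);
  return `INSERT INTO ${table} (${columns.join(", ")}) VALUES\n${values.join(",\n")};`;
}
```

Because no parameters are bound, the statement size is limited only by the server's maximum query length and any row-count limit, rather than by a per-statement parameter limit.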

Normally OrmLite APIs use parameterized statements, however for Bulk Inserts it uses inline serialized values to construct and send large SQL INSERT statements that avoid the RDBMS's max parameter limits. If preferred, this SQL mode can be used instead of the default optimal implementation:

db.BulkInsert(rows, new BulkInsertConfig {
    Mode = BulkInsertMode.Sql
});

Batch Size

Multiple Row Inserts are sent in batches of 1000 (the maximum for SQL Server), Firebird uses a maximum of 256, whilst other RDBMS's can be configured to use larger batch sizes:

db.BulkInsert(rows, new BulkInsertConfig {
    BatchSize = 1000
});
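Splitting rows into batches is the simple part of this scheme. The JavaScript sketch below (a hypothetical toBatches helper, not OrmLite's code) chunks rows so that each batch could be sent as one Multiple Row INSERT, defaulting to 1000 rows to match the SQL Server limit mentioned above.

```javascript
// Split rows into consecutive batches of at most batchSize rows each
function toBatches(rows, batchSize = 1000) {
  if (!Number.isInteger(batchSize) || batchSize < 1) {
    throw new RangeError("batchSize must be a positive integer");
  }
  const batches = [];
  for (let i = 0; i < rows.length; i += batchSize) {
    batches.push(rows.slice(i, i + batchSize)); // the last batch may be smaller
  }
  return batches;
}
```

For example, 2,500 rows become batches of 1000, 1000 and 500, i.e. three round trips instead of 2,500 single-row inserts.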

Bulk Insert Benchmarks

To test the performance of Bulk Inserts we've run a number of benchmarks across macOS, Linux and Windows in our Bulk Insert Performance blog post.

The relative performances from the Apple M2 macOS benchmarks give some indication of the performance benefits of Bulk Inserts you can expect, confirming that they offer much better performance when inserting a significant number of rows, where they're up to 138x more efficient than Single Row Inserts when inserting just 1,000 rows.

Relative performance for Inserting 1,000 records:

| Database | Bulk Inserts | Multiple Rows Inserts | Single Row Inserts |
|---|---|---|---|
| PostgreSQL | 1x | 1.32x | 57.04x |
| MySqlConnector | 1x | 1.04x | 137.78x |
| MySql | 1x | 1.16x | 131.47x |
| SqlServer | 1x | 6.61x | 74.19x |

Relative performance for Inserting 10,000 records:

| Database | Bulk Inserts | Multiple Rows Inserts |
|---|---|---|
| PostgreSQL | 1x | 3.37x |
| MySqlConnector | 1x | 1.24x |
| MySql | 1x | 1.52x |
| SqlServer | 1x | 9.36x |

Relative performance for Inserting 100,000 records:

| Database | Bulk Inserts | Multiple Rows Inserts |
|---|---|---|
| PostgreSQL | 1x | 3.68x |
| MySqlConnector | 1x | 2.04x |
| MySql | 1x | 2.31x |
| SqlServer | 1x | 10.14x |

They also show that the batched Multiple Row Inserts mode is another good option for inserting large numbers of rows, staying within 3.7x of the optimal Bulk Insert implementations for all but SQL Server, where it's an order of magnitude slower than using SqlBulkCopy.
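The ratios in the three tables can be compared directly. This short JavaScript snippet copies the Multiple Rows Inserts columns from the tables above and computes the largest ratio outside SQL Server alongside the smallest SQL Server ratio; it only reuses the published figures and doesn't reproduce the benchmarks.

```javascript
// Multiple Rows Inserts times relative to Bulk Inserts (1x), copied from the tables above
const multipleRowsInserts = {
  1000: { PostgreSQL: 1.32, MySqlConnector: 1.04, MySql: 1.16, SqlServer: 6.61 },
  10000: { PostgreSQL: 3.37, MySqlConnector: 1.24, MySql: 1.52, SqlServer: 9.36 },
  100000: { PostgreSQL: 3.68, MySqlConnector: 2.04, MySql: 2.31, SqlServer: 10.14 },
};

// Collect every ratio for the databases accepted by the filter, across all row counts
function ratios(filter) {
  return Object.values(multipleRowsInserts).flatMap(table =>
    Object.entries(table).filter(([db]) => filter(db)).map(([, ratio]) => ratio)
  );
}

const worstOutsideSqlServer = Math.max(...ratios(db => db !== "SqlServer")); // 3.68 (PostgreSQL, 100,000 rows)
const bestSqlServer = Math.min(...ratios(db => db === "SqlServer"));        // 6.61 (1,000 rows)
```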

Transaction SavePoints

A savepoint is a special mark inside a transaction that allows all commands that are executed after it was established to be rolled back, restoring the transaction state to what it was at the time of the savepoint.

Transaction SavePoints have been added for all supported RDBMS with the SavePoint() API, which lets you create a SavePoint and then either Rollback() to it or Release() its resources, e.g:

// Sync
using (var trans = db.OpenTransaction())
{
    try
    {
        db.Insert(new Person { Id = 2, Name = "John" });

        var firstSavePoint = trans.SavePoint("FirstSavePoint");
        db.UpdateOnly(() => new Person { Name = "Jane" }, where: x => x.Id == 1);
        // Undo the "Jane" update, keeping the earlier insert
        firstSavePoint.Rollback();

        var secondSavePoint = trans.SavePoint("SecondSavePoint");
        db.UpdateOnly(() => new Person { Name = "Jack" }, where: x => x.Id == 1);
        // Keep the "Jack" update and release the savepoint's resources
        secondSavePoint.Release();

        db.Insert(new Person { Id = 3, Name = "Diane" });

        trans.Commit();
    }
    catch (Exception)
    {
        trans.Rollback();
        throw;
    }
}

It also includes equivalent async versions, with SavePointAsync() to create a SavePoint and RollbackAsync() and ReleaseAsync() to roll back and release SavePoints, e.g:

// Async
using (var trans = db.OpenTransaction())
{
    try
    {
        await db.InsertAsync(new Person { Id = 2, Name = "John" });

        var firstSavePoint = await trans.SavePointAsync("FirstSavePoint");
        await db.UpdateOnlyAsync(() => new Person { Name = "Jane" }, where: x => x.Id == 1);
        // Undo the "Jane" update, keeping the earlier insert
        await firstSavePoint.RollbackAsync();

        var secondSavePoint = await trans.SavePointAsync("SecondSavePoint");
        await db.UpdateOnlyAsync(() => new Person { Name = "Jack" }, where: x => x.Id == 1);
        // Keep the "Jack" update and release the savepoint's resources
        await secondSavePoint.ReleaseAsync();

        await db.InsertAsync(new Person { Id = 3, Name = "Diane" });

        trans.Commit();
    }
    catch (Exception)
    {
        trans.Rollback();
        throw;
    }
}
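To make the savepoint semantics in these examples concrete, here's a toy in-memory JavaScript model that replays the same steps. It's purely illustrative: ToyTransaction is hypothetical and says nothing about how OrmLite or any RDBMS implements savepoints. It follows the standard SQL rules, where rolling back to a savepoint keeps it but discards later savepoints, while releasing one discards it and any later savepoints but keeps the changes. Person 1's original name, "Ann", is assumed for the example.

```javascript
// Toy model of a transaction over a table of people (id -> name) with a stack of savepoints
class ToyTransaction {
  constructor(committed) {
    this.state = new Map(committed); // working copy, only returned on commit()
    this.savepoints = [];            // stack of { name, snapshot }
  }
  insert(id, name) { this.state.set(id, name); }
  updateName(id, name) { if (this.state.has(id)) this.state.set(id, name); }
  savePoint(name) { this.savepoints.push({ name, snapshot: new Map(this.state) }); }
  // Most recent savepoint with this name, as SQL resolves duplicate names
  indexOf(name) {
    for (let i = this.savepoints.length - 1; i >= 0; i--) {
      if (this.savepoints[i].name === name) return i;
    }
    throw new Error(`Unknown savepoint: ${name}`);
  }
  // Restore the savepoint's snapshot; it stays usable, savepoints created after it are discarded
  rollbackTo(name) {
    const i = this.indexOf(name);
    this.state = new Map(this.savepoints[i].snapshot);
    this.savepoints.length = i + 1;
  }
  // Discard the savepoint and any created after it, keeping every change made so far
  release(name) { this.savepoints.length = this.indexOf(name); }
  commit() { return new Map(this.state); }
}

// Replay the steps from the C# examples, assuming person 1 was originally named "Ann"
const trans = new ToyTransaction([[1, "Ann"]]);
trans.insert(2, "John");
trans.savePoint("FirstSavePoint");
trans.updateName(1, "Jane");
trans.rollbackTo("FirstSavePoint"); // person 1 is "Ann" again, person 2 is still "John"
trans.savePoint("SecondSavePoint");
trans.updateName(1, "Jack");
trans.release("SecondSavePoint");   // keeps the "Jack" update
trans.insert(3, "Diane");
const committed = trans.commit();   // 1 -> "Jack", 2 -> "John", 3 -> "Diane"
```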

Multi Database Migrations

OrmLite's code-first DB Migrations now support running and reverting Migrations on multiple named connections, a feature used in the Install PostgreSQL, MySql and SQL Server on Apple Silicon Blog Post to populate test data in all configured RDBMS's:

[NamedConnection("mssql")]
[NamedConnection("mysql")]
[NamedConnection("postgres")]
public class Migration1001 : MigrationBase
{
    //...
}

This feature is even more useful for maintaining the same schema across multiple database shards, a popular scaling technique to increase system capacity and improve response times:

[NamedConnection("shard1")]
[NamedConnection("shard2")]
[NamedConnection("shard3")]
public class Migration1001 : MigrationBase
{
    //...
}

SqlServer and Sqlite.Data for .NET Framework

To enable creating cross-platform Apps that work across macOS, Linux and Windows on both x86 and ARM, we're switching our examples, Apps and Demos to use the ServiceStack.OrmLite.SqlServer.Data and ServiceStack.OrmLite.Sqlite.Data NuGet packages, which now include .NET Framework v4.7.2 builds to ensure they can run on all platforms.

We now recommend switching to these packages as they reference the more portable and actively maintained Microsoft.Data.SqlClient and Microsoft.Data.Sqlite ADO.NET NuGet packages.

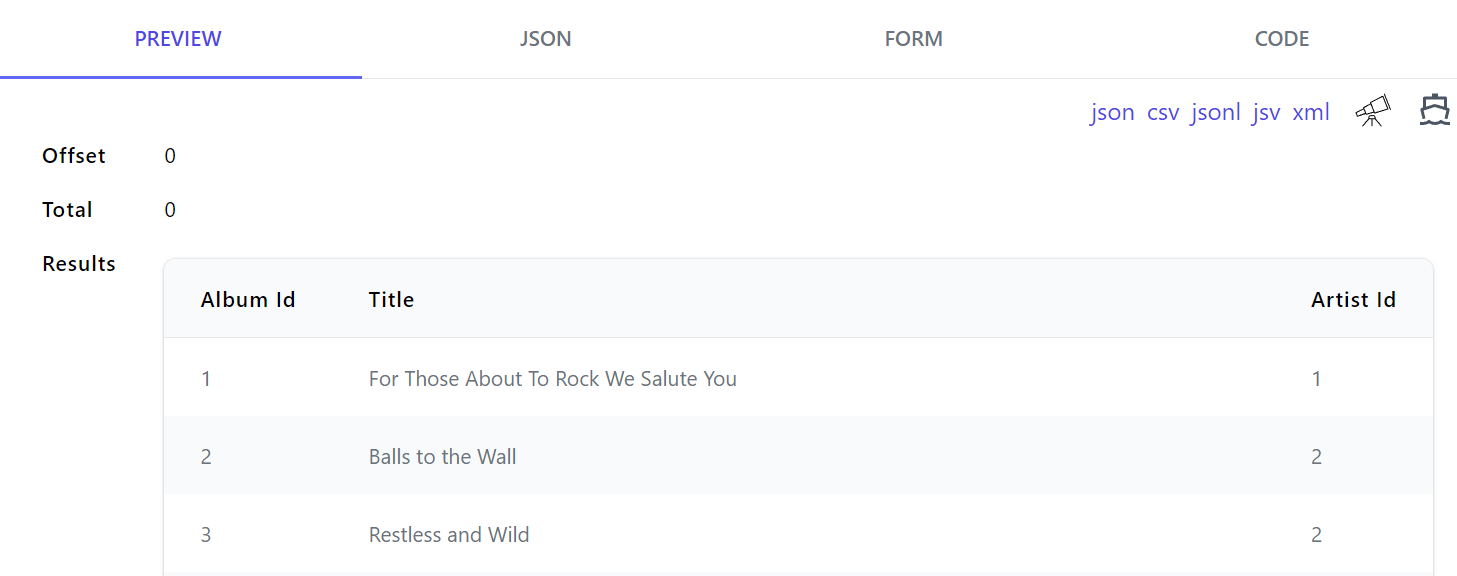

JSON Lines Data Format

JSON Lines is an efficient JSON data format parseable by streaming parsers and text processing tools like Unix shell pipelines. Its streamable properties are making it a popular format for maintaining large datasets, like the large AI datasets hosted on https://huggingface.co, and it's now available in Auto HTML API pages.

It's listed alongside the other data formats and is accessible from the .jsonl extension or the ?format=jsonl query string, e.g:

- https://blazor-gallery.servicestack.net/albums.jsonl

- https://blazor-gallery.servicestack.net/api/QueryAlbums?format=jsonl

CSV Enumerable Behavior

The JSON Lines data format behaves the same way as the CSV format: if your Request DTO is annotated with either [DataContract] or the more explicit [Csv(CsvBehavior.FirstEnumerable)], it will automatically serialize the first IEnumerable property, so all AutoQuery APIs and the Request DTOs below will return their IEnumerable datasets in the streamable JSON Lines format:

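// All AutoQuery APIs
public class QueryPocos : QueryDb<Poco> {}

// Alternative 1: a Request DTO that is itself a collection
[Route("/pocos")]
public class Pocos : List<Poco>, IReturn<Pocos>
{
    public Pocos() {}
    public Pocos(IEnumerable<Poco> collection) : base(collection) {}
}

// Alternative 2: [DataContract] serializes the first IEnumerable property
[Route("/pocos")]
[DataContract]
public class Pocos : IReturn<Pocos>
{
    [DataMember]
    public List<Poco> Items { get; set; }
}

// Alternative 3: the more explicit [Csv(CsvBehavior.FirstEnumerable)] attribute
[Route("/pocos")]
[Csv(CsvBehavior.FirstEnumerable)]
public class Pocos : IReturn<Pocos>
{
    public List<Poco> Items { get; set; }
}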
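The "first enumerable" rule can be sketched in a few lines of JavaScript: serialize the response itself if it's a collection, otherwise its first array-valued property, one JSON document per line. This is a hypothetical illustration (firstEnumerableToJsonl isn't a ServiceStack API), and "first" is approximated here by JavaScript's property order.

```javascript
// Hypothetical sketch of the "first enumerable" rule applied to JSON Lines output
function firstEnumerableToJsonl(response) {
  const rows = Array.isArray(response)
    ? response                                                  // the response is the collection
    : Object.values(response).find(value => Array.isArray(value)); // else its first array property
  if (!rows) throw new Error("Response has no enumerable property to serialize");
  return rows.map(row => JSON.stringify(row)).join("\n");
}
```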

Async Streaming Parsing Example

The HTTP Utils extension methods make it trivial to implement async streaming parsing, where you can process each row one at a time to avoid large allocations:

const string BaseUrl = "https://blazor-gallery.servicestack.net";

var url = BaseUrl.CombineWith("albums.jsonl");
await using var stream = await url.GetStreamFromUrlAsync();
await foreach (var line in stream.ReadLinesAsync())
{
    var row = line.FromJson<Album>();
    //...
}
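The key detail in streaming parsing is that a network chunk can end mid-line, so partial lines must be buffered until their newline arrives. Here's a JavaScript sketch of that technique using async generators; readLines and parseJsonl are hypothetical helpers rather than an existing ServiceStack client API.

```javascript
// Yield complete lines from an async iterable of text chunks, buffering partial lines across chunks
async function* readLines(chunks) {
  let buffer = "";
  for await (const chunk of chunks) {
    buffer += chunk;
    let newline;
    while ((newline = buffer.indexOf("\n")) >= 0) {
      const line = buffer.slice(0, newline).replace(/\r$/, ""); // tolerate \r\n endings
      buffer = buffer.slice(newline + 1);
      if (line.trim() !== "") yield line; // skip blank lines
    }
  }
  // Final line without a trailing newline
  if (buffer.trim() !== "") yield buffer.replace(/\r$/, "");
}

// Parse each JSON Lines row as soon as its line is complete
async function* parseJsonl(chunks) {
  for await (const line of readLines(chunks)) yield JSON.parse(line);
}
```

In runtimes where a fetch response body is async iterable (such as recent Node.js versions), the chunks could come from response.body.pipeThrough(new TextDecoderStream()), so only the current row and a partial line are held in memory.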

JsonlSerializer

Alternatively if streaming the results isn't important it can be deserialized like any other format using the new JsonlSerializer:

var jsonl = await url.GetStringFromUrlAsync();
var albums = JsonlSerializer.DeserializeFromString<List<Album>>(jsonl);

Which can also serialize to a string, Stream or TextWriter:

var jsonl = JsonlSerializer.SerializeToString(albums);
JsonlSerializer.SerializeToStream(albums, stream);
JsonlSerializer.SerializeToWriter(albums, textWriter);
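The JSON Lines format itself is simple enough to sketch in JavaScript: JSON.stringify escapes newlines inside strings, so each value always fits on one line. The toJsonl and fromJsonl functions below are hypothetical helpers for illustration, not a JavaScript port of JsonlSerializer.

```javascript
// Serialize an array to JSON Lines: one compact JSON document per line, each terminated by "\n"
function toJsonl(rows) {
  return rows.map(row => JSON.stringify(row) + "\n").join("");
}

// Deserialize JSON Lines text, skipping blank lines and tolerating "\r\n" line endings
function fromJsonl(text) {
  return text
    .split("\n")
    .map(line => line.replace(/\r$/, ""))
    .filter(line => line.trim() !== "")
    .map(line => JSON.parse(line));
}
```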

AddQueryParams HTTP Utils API

The new AddQueryParams and AddNameValueCollection extension methods make it easy to construct URLs from an Object Dictionary, e.g:

var url = BaseUrl.CombineWith("albums.jsonl")
    .AddQueryParams(new() { ["titleContains"] = "Soundtrack", ["take"] = 10 });
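For comparison, the same URL can be built in JavaScript with the standard URL and URLSearchParams APIs. This addQueryParams function is a hypothetical helper for illustration, skipping null or undefined values is an arbitrary choice, and its form-style encoding (spaces become +) may differ from the C# extension method's.

```javascript
// Hypothetical helper: append each entry of a plain object as a query string param
function addQueryParams(url, params) {
  const result = new URL(url);
  for (const [key, value] of Object.entries(params)) {
    if (value === null || value === undefined) continue; // skip unset values
    result.searchParams.append(key, String(value));
  }
  return result.toString();
}
```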

Pre Release NuGet Packages now published to Feedz.io

We started experiencing several reliability issues with myget.org earlier this year, which prompted us to start deploying our pre-release packages to GitHub Packages as a fallback option. But since GitHub Packages doesn't allow anonymous public NuGet feeds, we continued to publish to MyGet and direct customers to use it, as it offered the least friction.

Unfortunately MyGet.org's major outage on 26th July, in which their entire website and services were down for more than a day, prompted us to seek a permanent alternative. After evaluating several options we settled on Feedz.io as the most viable alternative, which like MyGet offers anonymous public NuGet feeds, with full control to delete/replace pre-release packages as needed.

The Pre-release NuGet Packages documentation has been updated with the new feed information, e.g. if you're currently using MyGet, your NuGet.Config should be updated to:

NuGet.Config

<?xml version="1.0" encoding="utf-8"?>
<configuration>
  <packageSources>
    <add key="ServiceStack Pre-Release" value="https://f.feedz.io/servicestack/pre-release/nuget/index.json" />
    <add key="nuget.org" value="https://api.nuget.org/v3/index.json" protocolVersion="3" />
  </packageSources>
</configuration>

Alternatively this NuGet.Config can be added to your solution with the x dotnet tool:

x mix feedz