New JsonHttpClient!

The new JsonHttpClient is an alternative to the existing generic typed JsonServiceClient for consuming ServiceStack Services which, instead of HttpWebRequest, is based on Microsoft's latest async HttpClient (from Microsoft.Net.Http on NuGet).

JsonHttpClient implements the full IServiceClient API, making it an easy drop-in replacement for your existing JsonServiceClient where in most cases it can simply be renamed to JsonHttpClient, e.g:

//IServiceClient client = new JsonServiceClient("https://techstacks.io");

IServiceClient client = new JsonHttpClient("https://techstacks.io");

Which can then be used as normal:

var response = await client.GetAsync(new GetTechnology { Slug = "servicestack" });

Install

JsonHttpClient can be installed from NuGet by adding the package reference:

<PackageReference Include="ServiceStack.HttpClient" Version="5.*" />

PCL Support

JsonHttpClient also comes in PCL flavour and can be used on the same platforms as the existing PCL Service Clients enabling the same clean and productive development experience on popular mobile platforms like Xamarin.iOS and Xamarin.Android.

ModernHttpClient

One of the primary benefits of being based on HttpClient is being able to make use of ModernHttpClient, which provides a thin wrapper around iOS's native NSURLSession or the OkHttp client on Android, offering improved stability for 3G mobile connectivity.

To enable, install ModernHttpClient then set the Global HttpMessageHandler Factory to configure all JsonHttpClient instances to use ModernHttpClient's NativeMessageHandler:

JsonHttpClient.GlobalHttpMessageHandlerFactory = () => new NativeMessageHandler();

Alternatively, you can configure a single client instance to use ModernHttpClient with:

client.HttpMessageHandler = new NativeMessageHandler();

Differences with JsonServiceClient

Whilst our goal is to retain the same behavior in both clients, there are some differences resulting from using HttpClient: the Global and Instance Request and Response Filters are instead passed HttpClient's HttpRequestMessage and HttpResponseMessage.

Also, all API's are Async under-the-hood, so any Sync API that doesn't return a Task<T> just blocks on the Async Task.Result response. As this can deadlock in certain environments, we recommend sticking with the Async API's unless it's safe to do otherwise.

Encrypted Messaging!

One of the benefits of adopting a message-based design is being able to easily layer functionality and generically add value to all Services. We've seen this recently with Auto Batched Requests, which automatically enables each Service to be batched and executed in a single HTTP Request. Similarly, the new Encrypted Messaging feature enables a secure channel for all Services (including Auto Batched Requests), offering protection to clients who can now easily send and receive encrypted messages over unsecured HTTP!

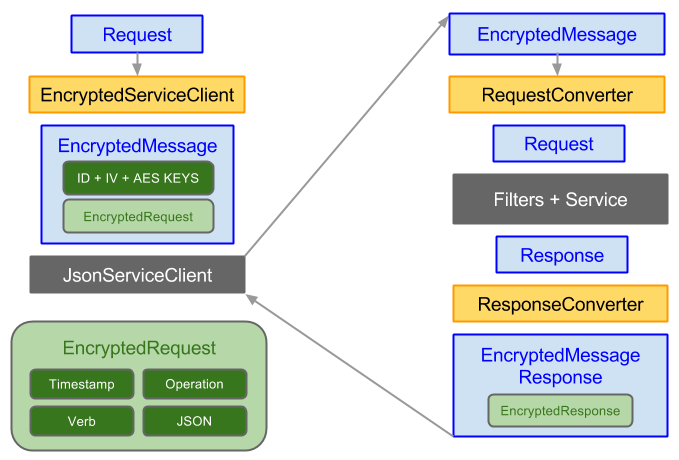

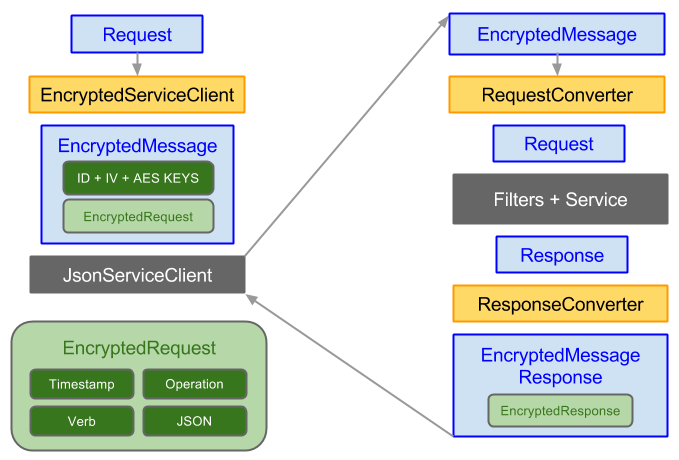

Encrypted Messaging Overview

Configuration

Encrypted Messaging support is enabled by registering the plugin:

Plugins.Add(new EncryptedMessagesFeature {

PrivateKeyXml = ServerRsaPrivateKeyXml

});

Where PrivateKeyXml is the Server's RSA Private Key serialized as XML. If you don't have an existing one, a new one can be generated with:

var rsaKeyPair = RsaUtils.CreatePublicAndPrivateKeyPair();

string ServerRsaPrivateKeyXml = rsaKeyPair.PrivateKey;

Once generated, it's important the Private Key is kept confidential as anyone with access will be able to decrypt the encrypted messages! Whilst most obfuscation efforts are ultimately futile the goal should be to contain the private key to your running Web Application, limiting access as much as possible.

Once registered, the EncryptedMessagesFeature enables the 2 Services below:

- GetPublicKey - Returns the Serialized XML of your Public Key (extracted from the configured Private Key)

- EncryptedMessage - The Request DTO which encapsulates all encrypted Requests (can't be called directly)

Giving Clients the Public Key

To communicate, clients need access to the Server's Public Key. It doesn't matter who has access to the Public Key, only that clients use the real Server's Public Key. It's therefore not advisable to download the Public Key over unsecure http://, where traffic can potentially be intercepted and the key spoofed, subjecting clients to a Man-in-the-middle attack.

It's safer instead to download the public key over a trusted https:// url where the server's origin is verified by a trusted CA. Sharing the Public Key over Dropbox, Google Drive, OneDrive or other encrypted channels is also a good option.

Since GetPublicKey is just a ServiceStack Service it's easily downloadable using a Service Client:

var client = new JsonServiceClient(BaseUrl);

string publicKeyXml = client.Get(new GetPublicKey());

If the registered EncryptedMessagesFeature.PublicKeyPath has been changed from its default /publickey, it can be downloaded with:

string publicKeyXml = client.Get<string>("/custom-publickey"); // or with HttpUtils:

string publicKeyXml = BaseUrl.CombineWith("/custom-publickey").GetStringFromUrl();

To help with verification, the SHA256 Hash of the PublicKey is returned in the X-PublicKey-Hash HTTP Header.
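As a rough illustration of how a client might use such a hash, the sketch below compares a downloaded key against an expected hash obtained over a trusted channel. It uses only the .NET base class library. The class and method names are hypothetical, and the encoding (Base64 of the SHA-256 digest over the UTF-8 bytes of the XML) is an assumption that should be checked against what your server actually returns in the header.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

// Hypothetical helper, not part of ServiceStack.
public static class PublicKeyHashSketch
{
    // ASSUMPTION: the hash is Base64(SHA-256(UTF-8 bytes of the XML)).
    public static string ComputeHash(string publicKeyXml)
    {
        using (var sha256 = SHA256.Create())
        {
            var digest = sha256.ComputeHash(Encoding.UTF8.GetBytes(publicKeyXml));
            return Convert.ToBase64String(digest);
        }
    }

    // True when the downloaded key hashes to the value we were told to expect.
    public static bool Matches(string publicKeyXml, string expectedHash)
    {
        return string.Equals(ComputeHash(publicKeyXml), expectedHash, StringComparison.Ordinal);
    }
}
```

The expected hash only adds protection if it reaches the client through a channel an attacker can't tamper with. A hash read from the same unsecured response as the key can be spoofed along with it.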

Encrypted Service Client

Once they have the Server's Public Key, clients can use it to get an EncryptedServiceClient via the GetEncryptedClient() extension method on JsonServiceClient or new JsonHttpClient, e.g:

var client = new JsonServiceClient(BaseUrl);

IEncryptedClient encryptedClient = client.GetEncryptedClient(publicKeyXml);

Once configured, clients have access to the familiar typed Service Client API's and productive workflow they're used to with the generic Service Clients, sending typed Request DTO's and returning the typed Response DTO's - rendering the underlying encrypted messages a transparent implementation detail:

HelloResponse response = encryptedClient.Send(new Hello { Name = "World" });

response.Result.Print(); //Hello, World!

REST Services Example:

HelloResponse response = encryptedClient.Get(new Hello { Name = "World" });

Auto-Batched Requests Example:

var requests = new[] { "Foo", "Bar", "Baz" }.Map(x => new HelloSecure { Name = x });

var responses = encryptedClient.SendAll(requests);

When using the IEncryptedClient, the entire Request and Response bodies are encrypted including Exceptions which continue to throw a populated WebServiceException:

try

{

var response = encryptedClient.Send(new Hello());

}

catch (WebServiceException ex)

{

ex.ResponseStatus.ErrorCode.Print(); //= ArgumentNullException

ex.ResponseStatus.Message.Print(); //= Value cannot be null. Parameter name: Name

}

Authentication with Encrypted Messaging

Many encrypted messaging solutions use Client Certificates which Servers can use to cryptographically verify a client's identity - providing an alternative to HTTP-based Authentication. We've decided against using this as it would've forced an opinionated implementation and increased burden of PKI certificate management and configuration onto Clients and Servers - reducing the applicability and instant utility of this feature.

We can instead leverage the existing Session-based Authentication Model in ServiceStack letting clients continue to use the existing Auth functionality and Auth Providers they're already used to, e.g:

var authResponse = encryptedClient.Send(new Authenticate {

provider = CredentialsAuthProvider.Name,

UserName = "test@gmail.com",

Password = "p@55w0rd",

});

Encrypted Messages have their cookies stripped so they're no longer visible in the clear which minimizes their exposure to Session hijacking. This does pose the problem of how we can call authenticated Services if the encrypted HTTP Client is no longer sending Session Cookies?

Without the use of clear-text Cookies or HTTP Headers there's no longer an established Authenticated Session for the encryptedClient to use to make subsequent Authenticated requests. What we can do instead is pass the Session Id in the encrypted body for Request DTO's that implement the new IHasSessionId interface, e.g:

[Authenticate]

public class HelloAuthenticated : IReturn<HelloAuthenticatedResponse>, IHasSessionId

{

public string SessionId { get; set; }

public string Name { get; set; }

}

var response = encryptedClient.Send(new HelloAuthenticated {

SessionId = authResponse.SessionId,

Name = "World"

});

Here we're injecting the returned Authenticated SessionId to access the [Authenticate] protected Request DTO. However, remembering to do this for every authenticated request can get tedious. A nicer alternative is to set it once on the encryptedClient, which will then use it to automatically populate any IHasSessionId Request DTO's:

encryptedClient.SessionId = authResponse.SessionId;

var response = encryptedClient.Send(new HelloAuthenticated {

Name = "World"

});

Incidentally this feature is now supported in all Service Clients

Combined Authentication Strategy

Another potential use-case is to only use Encrypted Messaging when sending any sensitive information and the normal Service Client for other requests. In which case we can Authenticate and send the user's password with the encryptedClient:

var authResponse = encryptedClient.Send(new Authenticate {

provider = CredentialsAuthProvider.Name,

UserName = "test@gmail.com",

Password = "p@55w0rd",

});

We can then fall back to using the normal IServiceClient for subsequent requests. As the encryptedClient doesn't receive cookies, we'd need to set the Session Cookie explicitly on the client ourselves with:

client.SetCookie("ss-id", authResponse.SessionId);

After which the ServiceClient "establishes an authenticated session" and can be used to make Authenticated requests, e.g:

var response = await client.GetAsync(new HelloAuthenticated { Name = "World" });

INFO

EncryptedServiceClient is unavailable in PCL Clients

Hybrid Encryption Scheme

The Encrypted Messaging Feature follows a Hybrid Cryptosystem which uses RSA Public Keys for Asymmetric Encryption combined with the performance of AES Symmetric Encryption making it suitable for encrypting large message payloads.

The key steps in the process are outlined below:

- The Client creates a new IEncryptedClient configured with the Server Public Key

- The Client uses the IEncryptedClient to send a Request DTO:

  - A new 256-bit Symmetric AES Key and IV is generated

  - The AES Key and IV bytes are merged, encrypted with the Server's Public Key and Base64 encoded

  - The Request DTO is serialized into JSON and packed with the current Timestamp, Verb and Operation and encrypted with the new AES Key

- The IEncryptedClient uses the underlying JSON Service Client to send the EncryptedMessage to the remote Server

- The EncryptedMessage is picked up and decrypted by the EncryptedMessagesFeature Request Converter:

  - The AES Key is decrypted with the Server's Private Key

  - The IV is checked against the nonce Cache, verified it's never been used before, then cached

  - The unencrypted AES Key is used to decrypt the EncryptedBody

  - The timestamp is verified it's not older than EncryptedMessagesFeature.MaxRequestAge

  - Any expired nonces are removed. (The timestamp and IV are used to prevent replay attacks)

  - The JSON body is deserialized and resulting Request DTO returned from the Request Converter

- The converted Request DTO is executed in ServiceStack's Request Pipeline as normal

- The Response DTO is picked up by the EncryptedMessagesFeature Response Converter:

  - Any Cookies set during the Request are removed

  - The Response DTO is serialized with the AES Key and returned in an EncryptedMessageResponse

- The IEncryptedClient decrypts the EncryptedMessageResponse with the AES Key

- The Response DTO is extracted and returned to the caller
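The core of the steps above (a fresh AES key and IV per request, wrapped with the Server's RSA Public Key) can be sketched with the .NET base class library alone. This is a minimal illustration under stated assumptions, not ServiceStack's implementation: the HybridCryptoSketch name, the OAEP padding choice and the key/IV layout are assumptions, and it omits the Timestamp, Verb and Operation packing as well as any message authentication.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

// Hypothetical sketch of a hybrid RSA + AES scheme.
public static class HybridCryptoSketch
{
    // Client side: encrypts the payload with a new AES key, then wraps key + IV with RSA.
    public static (string EncryptedSymmetricKey, string EncryptedBody) Encrypt(
        RSA serverPublicKey, string payload)
    {
        using (var aes = Aes.Create())
        {
            aes.KeySize = 256;   // 256-bit AES key, new for every message
            aes.GenerateKey();
            aes.GenerateIV();

            // Merge the key (32 bytes) and IV (16 bytes) into a single buffer.
            var key = aes.Key;
            var iv = aes.IV;
            var keyAndIv = new byte[key.Length + iv.Length];
            Buffer.BlockCopy(key, 0, keyAndIv, 0, key.Length);
            Buffer.BlockCopy(iv, 0, keyAndIv, key.Length, iv.Length);

            // ASSUMPTION: OAEP padding; the real feature may use a different padding.
            var wrappedKey = serverPublicKey.Encrypt(keyAndIv, RSAEncryptionPadding.OaepSHA1);

            using (var encryptor = aes.CreateEncryptor())
            {
                var plain = Encoding.UTF8.GetBytes(payload);
                var cipher = encryptor.TransformFinalBlock(plain, 0, plain.Length);
                return (Convert.ToBase64String(wrappedKey), Convert.ToBase64String(cipher));
            }
        }
    }

    // Server side: unwraps the AES key + IV with the private key, then decrypts the body.
    public static string Decrypt(
        RSA serverPrivateKey, string encryptedSymmetricKey, string encryptedBody)
    {
        var keyAndIv = serverPrivateKey.Decrypt(
            Convert.FromBase64String(encryptedSymmetricKey), RSAEncryptionPadding.OaepSHA1);

        var key = new byte[32];
        var iv = new byte[16];
        Buffer.BlockCopy(keyAndIv, 0, key, 0, key.Length);
        Buffer.BlockCopy(keyAndIv, key.Length, iv, 0, iv.Length);

        using (var aes = Aes.Create())
        using (var decryptor = aes.CreateDecryptor(key, iv))
        {
            var cipher = Convert.FromBase64String(encryptedBody);
            var plain = decryptor.TransformFinalBlock(cipher, 0, cipher.Length);
            return Encoding.UTF8.GetString(plain);
        }
    }
}
```

RSA is only used on the small key-and-IV buffer, whilst the arbitrarily large body goes through AES. This split is what makes the hybrid approach suitable for large payloads.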

A visual of how this all fits together is captured in the high-level diagram below:

- Components in Yellow show the encapsulated Encrypted Messaging functionality where all encryption and decryption is performed

- Components in Blue show Unencrypted DTO's

- Components in Green show Encrypted content:

  - The AES Key and IV in Dark Green are encrypted by the client using the Server's Public Key

  - The EncryptedRequest in Light Green is encrypted with a new AES Key generated by the client on each Request

- Components in Dark Grey depict existing ServiceStack functionality where Requests are executed as normal through the Service Client and Request Pipeline

All Request and Response DTO's get encrypted and embedded in the EncryptedMessage and EncryptedMessageResponse DTO's below:

public class EncryptedMessage : IReturn<EncryptedMessageResponse>

{

public string EncryptedSymmetricKey { get; set; }

public string EncryptedBody { get; set; }

}

public class EncryptedMessageResponse

{

public string EncryptedBody { get; set; }

}

The diagram also expands the EncryptedBody Content containing the EncryptedRequest consisting of the following parts:

- Timestamp - Unix Timestamp of the Request

- Verb - Target HTTP Method

- Operation - Request DTO Name

- JSON - Request DTO serialized as JSON
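The Timestamp above, together with the per-request IV, is what lets a server reject replayed messages. The sketch below shows one way such a check could work using only the base class library. ReplayGuardSketch and its members are hypothetical names, and the check order is simplified, so treat it as an illustration of the idea and not the feature's actual code.

```csharp
using System;
using System.Collections.Concurrent;
using System.Linq;

// Hypothetical replay guard: accepts each IV (nonce) at most once within the allowed age.
public class ReplayGuardSketch
{
    private readonly ConcurrentDictionary<string, DateTime> seenNonces =
        new ConcurrentDictionary<string, DateTime>();
    private readonly TimeSpan maxRequestAge;

    public ReplayGuardSketch(TimeSpan maxRequestAge)
    {
        this.maxRequestAge = maxRequestAge;
    }

    // Returns true if the request is fresh and its IV has not been seen before.
    public bool TryAccept(byte[] iv, DateTime requestTimeUtc, DateTime nowUtc)
    {
        // Drop nonces whose requests are too old to pass the timestamp check anyway.
        var expired = seenNonces.Where(e => nowUtc - e.Value > maxRequestAge).ToList();
        foreach (var entry in expired)
            seenNonces.TryRemove(entry.Key, out _);

        // Reject requests older than the allowed age.
        if (nowUtc - requestTimeUtc > maxRequestAge)
            return false;

        // TryAdd fails if this IV was already recorded, i.e. the message is a replay.
        return seenNonces.TryAdd(Convert.ToBase64String(iv), requestTimeUtc);
    }
}
```

Because stale requests are rejected by the timestamp alone, the nonce cache only has to remember IVs for the duration of the maximum request age, which keeps it bounded.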

Source Code

- The Client implementation is available in EncryptedServiceClient.cs

- The Server implementation is available in EncryptedMessagesFeature.cs

- The Crypto Utils used are available in RsaUtils.cs and AesUtils.cs

- Tests are available in EncryptedMessagesTests.cs

Request and Response Converters

The Encrypted Messaging Feature takes advantage of new Converters that let you change the Request DTO and Response DTO's that get used in ServiceStack's Request Pipeline where:

Request Converters are executed directly after any Custom Request Binders:

appHost.RequestConverters.Add((req, requestDto) => {

//Return alternative Request DTO or null to retain existing DTO

});

Response Converters are executed directly after the Service:

appHost.ResponseConverters.Add((req, response) => {

//Return alternative Response or null to retain existing Service response

});

In addition to the converters above, Plugins can now register new callbacks in IAppHost.OnEndRequestCallbacks which get fired at the end of a request.

Add ServiceStack Reference

Eclipse Integration!

We've further expanded our support for Java with our new ServiceStackEclipse plugin providing cross-platform Add ServiceStack Reference integration with Eclipse on Windows, OSX and Linux!

Install from Eclipse Marketplace

To install, search for ServiceStack in the Eclipse Marketplace at Help > Eclipse Marketplace:

Find the ServiceStackEclipse plugin, click Install and follow the wizard to the end, restarting to launch Eclipse with the plugin loaded!

ServiceStackEclipse is best used with Java Maven Projects, where it automatically adds the ServiceStack.Java client library to your Maven Dependencies. When your project is set to Build Automatically, the dependencies are then downloaded and registered, so you're ready to start consuming ServiceStack Services with the new JsonServiceClient!

Eclipse Add ServiceStack Reference

Just like Android Studio you can right-click on a Java Package to open the Add ServiceStack Reference... dialog from the Context Menu:

Complete the dialog to add the remote Server's generated Java DTO's to your selected Java package and the net.servicestack.client dependency to your Maven dependencies.

Eclipse Update ServiceStack Reference

Updating a ServiceStack Reference works as normal where you can change any of the available options in the header comments, save, then right-click on the file in the File Explorer and click on Update ServiceStack Reference in the Context Menu:

ServiceStack IDEA IntelliJ Plugin

The ServiceStackIDEA plugin has added support for IntelliJ Maven projects giving Java devs a productive and familiar development experience whether they're creating Android Apps or pure cross-platform Java clients.

Install ServiceStack IDEA from the Plugin repository

The ServiceStack IDEA plugin is now available to install directly from the IntelliJ or Android Studio IDE Plugins Repository. To install, go to:

- File -> Settings... Main Menu Item

- Select Plugins on left menu then click Browse repositories... at bottom

- Search for ServiceStack and click Install plugin

- Restart to load the installed ServiceStack IDEA plugin

ssutil.exe - Command line ServiceStack Reference tool

Add ServiceStack Reference is also moving beyond our growing list of supported IDEs and is now available in a single cross-platform .NET command-line .exe, making it easy for build servers, automated tasks and the command-line runners of your favorite text editors to Add and Update ServiceStack References!

To Get Started download ssutil.exe and open a command prompt to the containing directory:

Download ssutil.exe

ssutil.exe Usage:

Adding a new ServiceStack Reference

To create a new ServiceStack Reference, pass the remote ServiceStack BaseUrl then specify both which -file and -lang you want, e.g:

ssutil https://techstacks.io -file TechStacks -lang CSharp

Executing the above command fetches the C# DTOs and saves them in a local file named TechStacks.dtos.cs.

Available Languages

- CSharp

- FSharp

- VbNet

- Java

- Swift

- TypeScript.d

Update existing ServiceStack Reference

Updating a ServiceStack Reference is even easier: we just specify the path to the existing generated DTO's, e.g. update the TechStacks.dtos.cs we just created with:

ssutil TechStacks.dtos.cs

Using Xamarin.Auth with ServiceStack

Xamarin.Auth is an extensible Component and provides a good base for handling authentication with ServiceStack from Xamarin platforms. To show how to make use of it we've created the TechStacksAuth example repository containing a custom WebAuthenticator we use to call our remote ServiceStack Web Application and reuse its existing OAuth integration.

Here's an example using TwitterAuthProvider:

Check out the TechStacksAuth repo for the docs and source code.

Swift

Unfortunately the recent release of Xcode 6.4 and Swift 1.2 still hasn't fixed the earlier regression introduced in Xcode 6.3 and Swift 1.2 where the Swift compiler segfaults trying to compile Extensions to Types with a Generic Base Class. The swift-compiler-crashes repository is reporting this is now fixed in Swift 2.0 / Xcode 7 beta, but as that won't be due till later this year we've decided to improve the experience by excluding any types with the problematic Generic Base Types from the generated DTO's by default. This is configurable with:

//ExcludeGenericBaseTypes: True

Any types that were omitted from the generated DTO's will be emitted in comments, using the format:

//Excluded: {TypeName}

C#, F#, VB.NET Service Reference

The C#, F# and VB.NET Native Type providers can emit [GeneratedCode] attributes with:

AddGeneratedCodeAttributes: True

This is useful for skipping any internal StyleCop rules on generated code.

Service Clients

Custom Client Caching Strategy

New ResultsFilter and ResultsFilterResponse delegates have been added to all Service Clients allowing clients to employ a custom caching strategy.

Here's a basic example implementing a cache for all GET Requests:

var cache = new Dictionary<string, object>();

client.ResultsFilter = (type, method, uri, request) => {

if (method != HttpMethods.Get) return null;

object cachedResponse;

cache.TryGetValue(uri, out cachedResponse);

return cachedResponse;

};

client.ResultsFilterResponse = (webRes, response, method, uri, request) => {

if (method != HttpMethods.Get) return;

cache[uri] = response;

};

//Subsequent requests return the cached result

var response1 = client.Get(new GetCustomer { CustomerId = 5 });

var response2 = client.Get(new GetCustomer { CustomerId = 5 }); //cached response

The ResultsFilter delegate is executed with the context of the request before the request is made. Returning a value of type TResponse short-circuits the request and returns that response. Otherwise the request continues and its response is passed into the ResultsFilterResponse delegate where it can be cached.

New ServiceClient API's

The following new API's were added to all .NET Service Clients:

- SetCookie() - Sets a Cookie on the client's CookieContainer

- GetCookieValues() - Returns all site Cookies in a string Dictionary

- CustomMethodAsync() - Calls any Custom HTTP Method Asynchronously

Implicit Versioning

Similar to the behavior of IHasSessionId above, Service Clients that have specified a Version number, e.g:

client.Version = 2;

Will populate that version number in all Request DTO's implementing IHasVersion, e.g:

public class Hello : IReturn<HelloResponse>, IHasVersion {

public int Version { get; set; }

public string Name { get; set; }

}

client.Version = 2;

client.Get(new Hello { Name = "World" }); // Hello.Version=2

Version Abbreviation Convention

A popular convention for specifying versions in API requests is with the ?v=1 QueryString which ServiceStack now uses as a fallback for populating any Request DTO's that implement IHasVersion (as above).

INFO

As ServiceStack's message-based design promotes forward and backwards-compatible Service API designs, our recommendation is to only consider implementing versioning when necessary, at which point check out our recommended versioning strategy.

Cancellable Requests Feature

The new Cancellable Requests Feature makes it easy to design long-running Services that are cancellable with an external Web Service Request. To enable this feature, register the CancellableRequestsFeature plugin:

Plugins.Add(new CancellableRequestsFeature());

Designing a Cancellable Service

Then in your Service you can wrap your implementation within a disposable ICancellableRequest block which encapsulates a Cancellation Token that you can watch to determine if the Request has been cancelled, e.g:

public object Any(TestCancelRequest req)

{

using (var cancellableRequest = base.Request.CreateCancellableRequest())

{

//Simulate long-running request

while (true)

{

cancellableRequest.Token.ThrowIfCancellationRequested();

Thread.Sleep(100);

}

}

}

Cancelling a remote Service

To be able to cancel a Server request on the client, the client must first Tag the request which it does by assigning the X-Tag HTTP Header with a user-defined string in a Request Filter before calling a cancellable Service, e.g:

var tag = Guid.NewGuid().ToString();

var client = new JsonServiceClient(baseUri) {

RequestFilter = req => req.Headers[HttpHeaders.XTag] = tag

};

var responseTask = client.PostAsync(new TestCancelRequest());

Then at any time whilst the Service is still executing, the remote request can be cancelled by calling the CancelRequest Service with the specified Tag, e.g:

var cancelResponse = client.Post(new CancelRequest { Tag = tag });

If it was successfully cancelled it will return a CancelRequestResponse DTO with the elapsed time of how long the Service ran for. Otherwise if the remote Service had completed or never existed it will throw 404 Not Found in a WebServiceException.
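To make the tagging idea concrete, the sketch below shows how a server could map user-defined tags to cancellation tokens using only the base class library. It is not how CancellableRequestsFeature is implemented; CancellableRegistrySketch and its methods are hypothetical names used purely for illustration.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading;

// Hypothetical registry mapping request tags to their cancellation sources.
public static class CancellableRegistrySketch
{
    private static readonly ConcurrentDictionary<string, CancellationTokenSource> Running =
        new ConcurrentDictionary<string, CancellationTokenSource>();

    // Called when a tagged long-running request starts; the Service watches the returned token.
    public static CancellationToken Register(string tag)
    {
        var source = new CancellationTokenSource();
        Running[tag] = source;
        return source.Token;
    }

    // Called by the cancel endpoint. False means no such running request (a 404 in HTTP terms).
    public static bool Cancel(string tag)
    {
        if (!Running.TryRemove(tag, out var source))
            return false;

        source.Cancel();
        return true;
    }

    // Called when the request finishes normally so the tag can no longer be cancelled.
    public static void Complete(string tag)
    {
        if (Running.TryRemove(tag, out var source))
            source.Dispose();
    }
}
```

Removing the entry on both cancellation and completion is what makes a second cancel attempt, or a cancel for a finished request, report that nothing was found.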

Include Aggregates in AutoQuery

AutoQuery now supports running additional Aggregate queries on the queried result-set.

To include aggregates in your Query's response specify them in the Include property of your AutoQuery Request DTO, e.g:

var response = client.Get(new QueryRockstars { Include = "COUNT(*)" });

Or in the Include QueryString param if you're calling AutoQuery Services from a browser, e.g:

/rockstars?include=COUNT(*)

The result is then published in the QueryResponse<T>.Meta String Dictionary and is accessible with:

response.Meta["COUNT(*)"] //= 7

By default any of the functions in the SQL Aggregate whitelist can be referenced:

AVG, COUNT, FIRST, LAST, MAX, MIN, SUM

This whitelist can be added to or removed from by modifying the SqlAggregateFunctions collection, e.g. you can allow usage of a CustomAggregate SQL Function with:

Plugins.Add(new AutoQueryFeature {

SqlAggregateFunctions = { "CustomAggregate" }

})

Aggregate Query Usage

The syntax for aggregate functions is modelled after their usage in SQL so they should be instantly familiar.

At its most basic usage you can just specify the name of the aggregate function which will use * as a default argument so you can also query COUNT(*) with:

?include=COUNT

It also supports SQL aliases:

COUNT(*) Total

COUNT(*) as Total

Which is used to change what key the result is saved into:

response.Meta["Total"]

Columns can be referenced by name:

COUNT(LivingStatus)

If an argument matches a column in the primary table, the literal reference is used as-is. If it matches a column in a joined table, it's replaced with its fully-qualified reference. When it doesn't match any column, numbers are passed as-is; otherwise it's automatically escaped, quoted and passed in as a string literal.

The DISTINCT modifier can also be used, so a complex example looks like:

COUNT(DISTINCT LivingStatus) as UniqueStatus

Which saves the result of the above function in:

response.Meta["UniqueStatus"]

Any number of aggregate functions can be combined in a comma-delimited list:

Count(*) Total, Min(Age), AVG(Age) AverageAge

Which returns results in:

response.Meta["Total"]

response.Meta["Min(Age)"]

response.Meta["AverageAge"]

Aggregate Query Performance

Surprisingly, AutoQuery is able to execute any number of Aggregate functions without performing any additional queries. Previously, to support paging, a Total query needed to be executed for each AutoQuery; now the Total query is combined with all other aggregate functions and executed in a single query.

AutoQuery Response Filters

The Aggregate functions feature is built on the new ResponseFilters support in AutoQuery which provides a new extensibility option enabling customization and additional metadata to be attached to AutoQuery Responses. As the Aggregate Functions support is itself a Response Filter, it can be disabled by clearing them:

Plugins.Add(new AutoQueryFeature {

ResponseFilters = new List<Action<QueryFilterContext>>()

})

The Response Filters are executed after each AutoQuery and get passed the full context of the executed query, i.e:

class QueryFilterContext

{

IDbConnection Db // The ADO.NET DB Connection

List<Command> Commands // Tokenized list of commands

IQuery Request // The AutoQuery Request DTO

ISqlExpression SqlExpression // The AutoQuery SqlExpression

IQueryResponse Response // The AutoQuery Response DTO

}

Where the Commands property contains the parsed list of commands from the Include property, tokenized into the structure below:

class Command

{

string Name

List<string> Args

string Suffix

}

With this we could add basic calculator functionality to AutoQuery with the custom Response Filter below:

Plugins.Add(new AutoQueryFeature {

ResponseFilters = {

ctx => {

var supportedFns = new Dictionary<string, Func<int, int, int>>(StringComparer.OrdinalIgnoreCase)

{

{"ADD", (a,b) => a + b },

{"MULTIPLY", (a,b) => a * b },

{"DIVIDE", (a,b) => a / b },

{"SUBTRACT", (a,b) => a - b },

};

var executedCmds = new List<Command>();

foreach (var cmd in ctx.Commands)

{

Func<int, int, int> fn;

if (!supportedFns.TryGetValue(cmd.Name, out fn)) continue;

var label = !string.IsNullOrWhiteSpace(cmd.Suffix) ? cmd.Suffix.Trim() : cmd.ToString();

ctx.Response.Meta[label] = fn(int.Parse(cmd.Args[0]), int.Parse(cmd.Args[1])).ToString();

executedCmds.Add(cmd);

}

ctx.Commands.RemoveAll(executedCmds.Contains);

}

}

})

Which now lets users perform multiple basic arithmetic operations with any AutoQuery request!

var response = client.Get(new QueryRockstars {

Include = "ADD(6,2), Multiply(6,2) SixTimesTwo, Subtract(6,2), divide(6,2) TheDivide"

});

response.Meta["ADD(6,2)"] //= 8

response.Meta["SixTimesTwo"] //= 12

response.Meta["Subtract(6,2)"] //= 4

response.Meta["TheDivide"] //= 3
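The filter above relies on the Include string having already been tokenized into a name, arguments and suffix. As a rough picture of what that tokenization involves, here is a hypothetical, simplified parser written against the base class library only. CommandSketch and IncludeParserSketch are illustrative names, not AutoQuery's own types, and the real parser may treat whitespace, quoting and the "as" keyword differently.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for the Command structure described above.
public class CommandSketch
{
    public string Name = "";
    public List<string> Args = new List<string>();
    public string Suffix = "";
}

public static class IncludeParserSketch
{
    // Splits on commas that are outside parentheses, then parses each segment.
    public static List<CommandSketch> Parse(string include)
    {
        var commands = new List<CommandSketch>();
        var depth = 0;
        var start = 0;
        for (var i = 0; i <= include.Length; i++)
        {
            var atEnd = i == include.Length;
            if (!atEnd && include[i] == '(') depth++;
            if (!atEnd && include[i] == ')') depth--;
            if (atEnd || (include[i] == ',' && depth == 0))
            {
                var segment = include.Substring(start, i - start).Trim();
                if (segment.Length > 0) commands.Add(ParseOne(segment));
                start = i + 1;
            }
        }
        return commands;
    }

    private static CommandSketch ParseOne(string segment)
    {
        var cmd = new CommandSketch();
        var open = segment.IndexOf('(');
        var close = segment.LastIndexOf(')');
        if (open < 0 || close < open)
        {
            // No argument list, e.g. "COUNT" or "COUNT Total".
            var space = segment.IndexOf(' ');
            cmd.Name = space < 0 ? segment : segment.Substring(0, space);
            cmd.Suffix = space < 0 ? "" : segment.Substring(space + 1).Trim();
            return cmd;
        }

        cmd.Name = segment.Substring(0, open).Trim();
        foreach (var arg in segment.Substring(open + 1, close - open - 1).Split(','))
        {
            if (arg.Trim().Length > 0) cmd.Args.Add(arg.Trim());
        }
        cmd.Suffix = segment.Substring(close + 1).Trim();
        return cmd;
    }
}
```

Tracking parenthesis depth is the important detail: the commas inside ADD(6,2) separate arguments, whilst the commas between functions separate commands.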

Untyped SqlExpression

If you need to introspect or modify the executed ISqlExpression, it’s useful to access it as an IUntypedSqlExpression so its non-generic API's are still accessible without having to convert it back into its concrete generic SqlExpression<T> Type, e.g:

IUntypedSqlExpression q = ctx.SqlExpression.GetUntypedSqlExpression()

.Clone();

Cloning the SqlExpression allows you to modify a copy that won't affect any other Response Filter.

AutoQuery Property Mapping

AutoQuery can map [DataMember] property aliases on Request DTO's to the queried table, e.g:

public class QueryPerson : QueryBase<Person>

{

[DataMember(Name = "first_name")]

public string FirstName { get; set; }

}

public class Person

{

public int Id { get; set; }

public string FirstName { get; set; }

public string LastName { get; set; }

}

Which can be queried with:

?first_name=Jimi

or by setting the global JsConfig.EmitLowercaseUnderscoreNames=true convention:

public class QueryPerson : QueryBase<Person>

{

public string LastName { get; set; }

}

Where it's also queryable with:

?last_name=Hendrix

OrmLite

Dynamic Result Sets

There's new support for returning unstructured resultsets letting you Select List<object> instead of having results mapped to a concrete Poco class, e.g:

db.Select<List<object>>(db.From<Poco>()

.Select("COUNT(*), MIN(Id), MAX(Id)"))[0].PrintDump();

Output of objects in the returned List<object>:

[

10,

1,

10

]

You can also Select Dictionary<string,object> to return a dictionary of column names mapped with their values, e.g:

db.Select<Dictionary<string,object>>(db.From<Poco>()

.Select("COUNT(*) Total, MIN(Id) MinId, MAX(Id) MaxId"))[0].PrintDump();

Output of objects in the returned Dictionary<string,object>:

{

Total: 10,

MinId: 1,

MaxId: 10

}

These types can also be used with API's returning a Single row result:

db.Single<List<object>>(db.From<Poco>()

.Select("COUNT(*) Total, MIN(Id) MinId, MAX(Id) MaxId")).PrintDump();

or use object to fetch an unknown Scalar value:

object result = db.Scalar<object>(db.From<Poco>().Select(x => x.Id));

New DB Parameters API's

To enable even finer-grained control of parameterized queries we've added new overloads that take a collection of IDbDataParameter's:

List<T> Select<T>(string sql, IEnumerable<IDbDataParameter> sqlParams)

T Single<T>(string sql, IEnumerable<IDbDataParameter> sqlParams)

T Scalar<T>(string sql, IEnumerable<IDbDataParameter> sqlParams)

List<T> Column<T>(string sql, IEnumerable<IDbDataParameter> sqlParams)

IEnumerable<T> ColumnLazy<T>(string sql, IEnumerable<IDbDataParameter> sqlParams)

HashSet<T> ColumnDistinct<T>(string sql, IEnumerable<IDbDataParameter> sqlParams)

Dictionary<K, List<V>> Lookup<K, V>(string sql, IEnumerable<IDbDataParameter> sqlParams)

List<T> SqlList<T>(string sql, IEnumerable<IDbDataParameter> sqlParams)

List<T> SqlColumn<T>(string sql, IEnumerable<IDbDataParameter> sqlParams)

T SqlScalar<T>(string sql, IEnumerable<IDbDataParameter> sqlParams)

Including Async equivalents for each of the above Sync API's.

The new API's let you execute parameterized SQL with finer-grained control over the IDbDataParameter used, e.g:

IDbDataParameter pAge = db.CreateParam("age", 40, dbType:DbType.Int16);

db.Select<Person>("SELECT * FROM Person WHERE Age > @age", new[] { pAge });

The new CreateParam() extension method above is a useful helper for creating custom IDbDataParameter's.

Customize null values

The new OrmLiteConfig.OnDbNullFilter lets you replace DBNull values with a custom value, so you could convert all null strings to be populated with "NULL" using:

OrmLiteConfig.OnDbNullFilter = fieldDef =>

fieldDef.FieldType == typeof(string)

? "NULL"

: null;

Case Insensitive References

If you're using a case-insensitive database you can tell OrmLite to match on case-insensitive POCO references with:

OrmLiteConfig.IsCaseInsensitive = true;

Enhanced CaptureSqlFilter

CaptureSqlFilter now tracks the DB Parameters used in each query, which can be used to quickly find out what SQL your DB calls generate by surrounding DB access in a using scope like:

using (var captured = new CaptureSqlFilter())

using (var db = OpenDbConnection())

{

db.Where<Person>(new { Age = 27 });

captured.SqlCommandHistory[0].PrintDump();

}

Emits the Executed SQL along with any DB Parameters:

{

Sql: "SELECT ""Id"", ""FirstName"", ""LastName"", ""Age"" FROM ""Person"" WHERE ""Age"" = @Age",

Parameters:

{

Age: 27

}

}

Other OrmLite Features

- New IncludeFunctions = true T4 Template configuration for generating Table Valued Functions added by @mikepugh

- New OrmLiteConfig.SanitizeFieldNameForParamNameFn can be used to support sanitizing field names with non-ASCII values into legal DB Param names

Authentication

Enable Session Ids on QueryString

Setting Config.AllowSessionIdsInHttpParams=true will allow clients to specify the ss-id, ss-pid Session Cookies on the QueryString or FormData. This is useful for getting Authenticated SSE Sessions working in IE9, which needs to rely on SSE Polyfills that are unable to send Cookies or Custom HTTP Headers.

The SSE-polyfills Chat Demo has an example of adding the Current Session Id on the JavaScript SSE EventSource Url:

var source = new EventSource('/event-stream?channels=@channels&ss-id=@(base.GetSession().Id)');
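As a minimal sketch of where this setting would typically be enabled, the AppHost below sets it via HostConfig in Configure(). The AppHost name and service name are placeholders for illustration and are not taken from the Chat Demo:

```csharp
using Funq;
using ServiceStack;

// Hypothetical AppHost, shown only to illustrate where the setting goes
public class AppHost : AppHostBase
{
    public AppHost() : base("My App", typeof(AppHost).Assembly) {}

    public override void Configure(Container container)
    {
        SetConfig(new HostConfig {
            // Accept ss-id / ss-pid from the QueryString or FormData
            // in addition to Cookies
            AllowSessionIdsInHttpParams = true,
        });
    }
}
```

Since Session Ids sent on the QueryString can end up in server logs and browser history, it's worth enabling this only where Cookies or Headers can't be used.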

In Process Authenticated Requests

You can enable the CredentialsAuthProvider to allow In Process requests to Authenticate without a Password with:

new CredentialsAuthProvider {

SkipPasswordVerificationForInProcessRequests = true,

}

When enabled, this lets In Process Service Requests login as a specified user without needing to provide their password.

For example this could be used to create an Intranet Restricted Admin-Only Service that lets you login as another user so you can debug their account without knowing their password with:

[RequiredRole("Admin")]

[Restrict(InternalOnly=true)]

public class ImpersonateUser

{

public string UserName { get; set; }

}

public object Any(ImpersonateUser request)

{

using (var service = base.ResolveService<AuthenticateService>()) //In Process

{

return service.Post(new Authenticate {

provider = AuthenticateService.CredentialsProvider,

UserName = request.UserName,

});

}

}

Your Services can use the new Request.IsInProcessRequest() to identify Services that were executed in-process.
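For example, a Service could use this check to reject any call that wasn't made from another Service in the same AppHost. This is only a sketch: the RebuildCache DTOs and MaintenanceServices class are hypothetical names made up for illustration:

```csharp
using System.Net;
using ServiceStack;

// Hypothetical Request and Response DTOs, for illustration only
public class RebuildCache : IReturn<RebuildCacheResponse> {}

public class RebuildCacheResponse
{
    public string Result { get; set; }
}

public class MaintenanceServices : Service
{
    public object Any(RebuildCache request)
    {
        // Reject requests that did not originate in-process
        if (!Request.IsInProcessRequest())
            throw new HttpError(HttpStatusCode.Forbidden, "In Process requests only");

        return new RebuildCacheResponse { Result = "OK" };
    }
}
```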

New CustomValidationFilter Filter

The new CustomValidationFilter is available on each AuthProvider and can be used to add custom validation logic where returning any non-null response will short-circuit the Auth Process and return the response to the client.

The Validation Filter receives the full AuthContext captured about the Authentication Request.

So if you're under attack you could use this filter to Rick Roll North Korean hackers :)

Plugins.Add(new AuthFeature(...,

new IAuthProvider[] {

new CredentialsAuthProvider {

CustomValidationFilter = authCtx =>

authCtx.Request.UserHostAddress.StartsWith("175.45.17")

? HttpResult.Redirect("https://youtu.be/dQw4w9WgXcQ")

: null

}

}));

UserName Validation

The UserName validation for all Auth Repositories has been consolidated in a central location, configurable at:

Plugins.Add(new AuthFeature(...){

ValidUserNameRegEx = new Regex(@"^(?=.{3,20}$)([A-Za-z0-9][._-]?)*$", RegexOptions.Compiled),

})

INFO

The default UserName RegEx above increased the limit from 15 chars to 20 chars.

Instead of RegEx you can choose to validate using a Custom Predicate. The example below ensures UserNames don't include specific chars:

Plugins.Add(new AuthFeature(...){

IsValidUsernameFn = userName => userName.IndexOfAny(new[] { '@', '.', ' ' }) == -1

})
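To get a feel for what these two rules accept, the stand-alone sketch below runs the same RegEx and the same predicate against a few sample UserNames using only the .NET base class library. The UserNameRules class and the sample names are made up for illustration and are not part of ServiceStack:

```csharp
using System;
using System.Text.RegularExpressions;

// Hypothetical helper class that mirrors the two rules shown above
public static class UserNameRules
{
    // Same pattern as the ValidUserNameRegEx example
    public static readonly Regex ValidUserNameRegEx =
        new Regex(@"^(?=.{3,20}$)([A-Za-z0-9][._-]?)*$", RegexOptions.Compiled);

    // Same predicate as the IsValidUsernameFn example
    public static bool HasNoReservedChars(string userName) =>
        userName.IndexOfAny(new[] { '@', '.', ' ' }) == -1;

    public static void Main()
    {
        var samples = new[] { "mythz", "john.doe", "ab", "john..doe", ".john", "john@doe" };
        foreach (var name in samples)
        {
            Console.WriteLine("{0}: regex={1}, predicate={2}",
                name, ValidUserNameRegEx.IsMatch(name), HasNoReservedChars(name));
        }
    }
}
```

The RegEx accepts "mythz" and "john.doe" but rejects "ab" (shorter than 3 chars), "john..doe" (two separators in a row), ".john" (starts with a separator) and "john@doe". The predicate only rejects names containing '@', '.' or a space, so it accepts "mythz" and "ab" but rejects "john.doe".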

Overridable Hash Provider

The IHashProvider used to generate and verify password hashes and salts in each UserAuth Repository is now overridable from its default with:

container.Register<IHashProvider>(c =>

new SaltedHash(HashAlgorithm:new SHA256Managed(), theSaltLength:4));

Configurable Session Expiry

Permanent and Temporary Sessions can now be configured separately with different Session Expiries, configurable on either AuthFeature or SessionFeature plugins, e.g:

new AuthFeature(...) {

SessionExpiry = TimeSpan.FromDays(7 * 2),

PermanentSessionExpiry = TimeSpan.FromDays(7 * 4),

}

The above defaults configure Temporary Sessions to last a maximum of 2 weeks and Permanent Sessions to last 4 weeks.

Redis

- New PopItemsFromSet(setId, count) API added by @scottmcarthur

- The SetEntry() API's for setting string values in IRedisClient have been deprecated in favor of new SetValue() API's.

- Large performance improvement for saving large values (>8MB)

- Internal Buffer pool now configurable with RedisConfig.BufferLength and RedisConfig.BufferPoolMaxSize
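A short sketch of the SetValue() and PopItemsFromSet() API's mentioned above. It assumes a Redis server listening on localhost:6379, and the key names are made up for illustration:

```csharp
using System;
using ServiceStack.Redis;

public static class RedisSketch
{
    public static void Main()
    {
        // Assumes a local Redis server, adjust the host as needed
        using (var manager = new RedisManagerPool("localhost:6379"))
        using (var redis = manager.GetClient())
        {
            // SetValue() replaces the deprecated SetEntry() for string values
            redis.SetValue("greeting", "hello");
            Console.WriteLine(redis.GetValue("greeting"));

            redis.AddItemToSet("pending-jobs", "job-1");
            redis.AddItemToSet("pending-jobs", "job-2");
            redis.AddItemToSet("pending-jobs", "job-3");

            // Remove and return up to 2 members of the set in one call
            var popped = redis.PopItemsFromSet("pending-jobs", 2);
            Console.WriteLine(popped.Count);
        }
    }
}
```

As Redis sets are unordered, which members get popped is not predictable.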

ServiceStack.Text

- Use JsConfig.ExcludeDefaultValues=true to reduce payloads by omitting properties with default values

- Text serializers now serialize any property or field annotated with the [DataMember] attribute, inc. private fields.

- Updated RecyclableMemoryStream to the latest version, no longer throws an exception for disposing streams twice

- Added support for serializing collections with base types
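As a rough illustration of the first item, the sketch below serializes a POCO with ExcludeDefaultValues enabled. The Item class is a made-up example type:

```csharp
using System;
using ServiceStack;
using ServiceStack.Text;

// Hypothetical POCO, for illustration only
public class Item
{
    public int Id { get; set; }
    public string Name { get; set; }
    public int Quantity { get; set; }
    public bool IsActive { get; set; }
}

public static class ExcludeDefaultsSketch
{
    public static void Main()
    {
        // Global setting, affects all subsequent serialization
        JsConfig.ExcludeDefaultValues = true;

        // Quantity and IsActive are left at their default values
        var json = new Item { Id = 1, Name = "Widget" }.ToJson();
        Console.WriteLine(json);
    }
}
```

With the setting enabled, the properties left at their default values (Quantity and IsActive here) should be omitted from the JSON. Clients deserializing the payload then need to treat a missing property as its default value.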

T[].NewArray() Usage

The NewArray() extension method reduces the boilerplate required to modify and return an Array. It's useful when modifying configuration that uses fixed-size arrays, e.g:

JsConfig.IgnoreAttributesNamed = JsConfig.IgnoreAttributesNamed.NewArray(

with: typeof(ScriptIgnoreAttribute).Name,

without: typeof(JsonIgnoreAttribute).Name);

Which is equivalent to the imperative alternative:

var attrNames = new List<string>(JsConfig.IgnoreAttributesNamed) {

typeof(ScriptIgnoreAttribute).Name

};

attrNames.Remove(typeof(JsonIgnoreAttribute).Name);

JsConfig.IgnoreAttributesNamed = attrNames.ToArray();

byte[].Combine() Usage

The Combine(byte[]...) extension method combines multiple byte arrays into a single byte[], e.g:

byte[] bytes = "FOO".ToUtf8Bytes().Combine(" BAR".ToUtf8Bytes(), " BAZ".ToUtf8Bytes());

bytes.FromUtf8Bytes() //= FOO BAR BAZ
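For readers unfamiliar with the helper, the sketch below shows roughly what combining byte arrays involves, using only the .NET base class library. CombineAll is a hypothetical stand-in written for illustration and is not ServiceStack's implementation:

```csharp
using System;
using System.Text;

public static class ByteArraySketch
{
    // Hypothetical equivalent of Combine(), not ServiceStack's own code
    public static byte[] CombineAll(byte[] first, params byte[][] rest)
    {
        // Work out the total length up front so only one array is allocated
        var total = first.Length;
        foreach (var part in rest)
            total += part.Length;

        var combined = new byte[total];
        Buffer.BlockCopy(first, 0, combined, 0, first.Length);

        // Copy each remaining array directly after the previous one
        var offset = first.Length;
        foreach (var part in rest)
        {
            Buffer.BlockCopy(part, 0, combined, offset, part.Length);
            offset += part.Length;
        }
        return combined;
    }

    public static void Main()
    {
        var bytes = CombineAll(
            Encoding.UTF8.GetBytes("FOO"),
            Encoding.UTF8.GetBytes(" BAR"),
            Encoding.UTF8.GetBytes(" BAZ"));

        Console.WriteLine(Encoding.UTF8.GetString(bytes)); // FOO BAR BAZ
    }
}
```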

Swagger

Swagger support has received a number of fixes and enhancements and now generates default params for DTO properties that aren't annotated with an [ApiMember] attribute. Specifying a single [ApiMember] attribute reverts back to the existing behavior of only showing [ApiMember] DTO properties.

You can now Exclude properties from being listed in Swagger when using:

[IgnoreDataMember]

Exclude properties from being listed in Swagger Schema Body with:

[ApiMember(ExcludeInSchema=true)]

Or exclude entire Services from showing up in Swagger or any other Metadata Services (i.e. Metadata Pages, Postman, NativeTypes, etc) by annotating Request DTO's with:

[Exclude(Feature.Metadata)]

SOAP

There's finer-grained control available over which Operations and Types are exported in SOAP WSDL's and XSD's by overriding the new ExportSoapOperationTypes() and ExportSoapType() methods in your AppHost.

You can also exclude specific Request DTO's from being emitted in WSDL's and XSD's with:

[Exclude(Feature.Soap)]

public class HiddenFromSoap { .. }

You can also override and customize how the SOAP Message Responses are written, here's a basic example:

public override void WriteSoapMessage(Message message, Stream outputStream)

{

using (var writer = XmlWriter.Create(outputStream, Config.XmlWriterSettings))

{

message.WriteMessage(writer);

}

}

The default WriteSoapMessage implementation also raises a ServiceException and writes any returned response to a buffered Response Stream (if configured).

Minor Enhancements

- The Validation Feature's AbstractValidator<T> base class now implements IRequiresRequest and gets injected with the current Request

- Response DTO is available at IResponse.Dto

- Use IResponse.ClearCookies() to clear any cookies added during the Request pipeline before they're written to the response

- Use [Exclude(Feature.Metadata)] to hide Operations from being exposed in Metadata Services, inc. Metadata pages, Swagger, Postman, NativeTypes, etc

- Use new container.TryResolve(Type) to resolve dependencies by runtime Type

- New debugEnabled option added to InMemoryLog and EventLogger

Simple Customer REST Example

New stand-alone Customer REST Example added showing a complete example of creating a Typed Client / Server REST Service with ServiceStack. An overview of this example is available in an answer on StackOverflow.

Community

- Jacob Foshee released a custom short URLs ServiceStack Service hosted on Azure.

Breaking changes

- The provider id for InstagramOAuth2Provider was renamed to /auth/instagram and the provider id for MicrosoftLiveOAuth2Provider was renamed to /auth/microsoftlive.

- All API's that return HttpWebResponse have been removed from IServiceClient and replaced with source-compatible extension methods

- CryptUtils has been deprecated and replaced with the more specific RsaUtils

- System.IO.Compression functionality was removed from the PCL clients to work around an issue on Xamarin platforms

All external NuGet packages were upgraded to their most recent major version (as done before every release).