In this release we've started extending ServiceStack's value beyond its primary focus of simple, fast and productive libraries and started looking towards improving integration with outside environments for hosting ServiceStack Services. This release marks just the beginning: we'll continue enhancing the complete story around developing, deploying and hosting your ServiceStack solutions, ensuring it provides seamless integration with the best Single Page Apps, Mobile/Desktop technologies and Cloud/Hosting Services that share our simplicity, performance and value-focused goals, which we believe provide the best return on effort and have the most vibrant ecosystems.

.NET before Cloud Services

One drawback of being based on .NET has been its predisposition towards Windows-only technologies, which misses out on all the industrial-strength server solutions that are primarily developed for hosting on Linux. This puts .NET at a disadvantage to other platforms, which have first-class support for using the best technologies at their discretion; outside of .NET, these primarily run on Linux servers. We've historically ignored this bias in .NET and have always focused on the simple technologies we've evaluated to provide the best value. Often this means we've had to maintain rich .NET clients ourselves to get a great experience in .NET, which is what led us to develop a first-class Redis client and why OrmLite has first-class support for major RDBMS running on Linux, inc. PostgreSQL, MySql, Sqlite, Oracle and Firebird, all the while ensuring ServiceStack's libraries run cross-platform on Mono/Linux.

AWS's servicified platform and polyglot ecosystem

By building their managed platform behind platform-agnostic web services, Amazon have largely eroded this barrier. We can finally tap into the same ecosystem innovative Startups are using with nothing more than the complexity cost of a service call, with the required effort reduced even further by native clients. Designing its services behind message-based APIs made it much easier for Amazon to enable a new polyglot world with native clients for most popular platforms, putting .NET on a level playing field with other platforms thanks to the well-maintained typed native clients in the AWS SDK for .NET. Because its functionality is provided behind well-defined services, for the first time in a long while .NET developers are able to benefit from this new polyglot world, where solutions and app logic written in other languages can be easily translated into .NET languages, a trait which has been invaluable whilst developing ServiceStack's integration support for AWS.

This also means features and improvements to reliability, performance and scalability added to its back-end servers benefit every language and ecosystem using them. .NET developers are no longer at a disadvantage and can now leverage the same platform that Hacker Communities and the next wave of technology-leading Startups are built on, benefiting from the Tech Startup culture of sharing their knowledge and experiences and pushing the limits of what's possible today.

AWS offers unprecedented productivity for back-end developers: its servicified hardware and infrastructure encapsulate the complexity of managing servers behind a high-level programmatic abstraction that's effortless to consume and automate. These productivity gains are why we've been running our public servers on AWS for more than 2 years. The vast array of services on offer means we have everything our solutions need within the AWS Console: our RDS managed PostgreSQL databases take care of automated backups and software updates, and the ease of snapshots means we can encapsulate and back up the configuration of our servers and easily spawn new instances. AWS has made software developers more capable than ever, and with its first-class native client support leveling the playing field for .NET, there's no reason why the next Instagram couldn't be built by a small team of talented .NET developers.

ServiceStack + Amazon Web Services

We're excited to participate in AWS's vibrant ecosystem and provide first-class support and deep integration with AWS where ServiceStack's decoupled substitutable functionality now seamlessly integrates with popular AWS back-end technologies. It's now more productive than ever to develop and host ServiceStack solutions entirely on the managed AWS platform!

ServiceStack.Aws

All of ServiceStack's support for AWS is encapsulated within the single ServiceStack.Aws NuGet package, which references the latest modular AWSSDK v3.1x dependencies and can be installed in .NET 4.5+ projects from NuGet with:

PM> Install-Package ServiceStack.Aws

This ServiceStack.Aws NuGet package includes implementations for the following ServiceStack providers:

- PocoDynamo - Exciting new declarative, code-first POCO client for DynamoDB with LINQ support

- SqsMqServer - A new MQ Server for invoking ServiceStack Services via Amazon SQS MQ Service

- S3VirtualPathProvider - A read/write Virtual FileSystem around Amazon's S3 Simple Storage Service

- DynamoDbAuthRepository - A new UserAuth repository storing UserAuth info in DynamoDB

- DynamoDbAppSettings - An AppSettings provider storing App configuration in DynamoDB

- DynamoDbCacheClient - A new Caching Provider for DynamoDB

We'd like to give a big thanks to Chad Boyd from Spruce Media for contributing the SqsMqServer implementation.

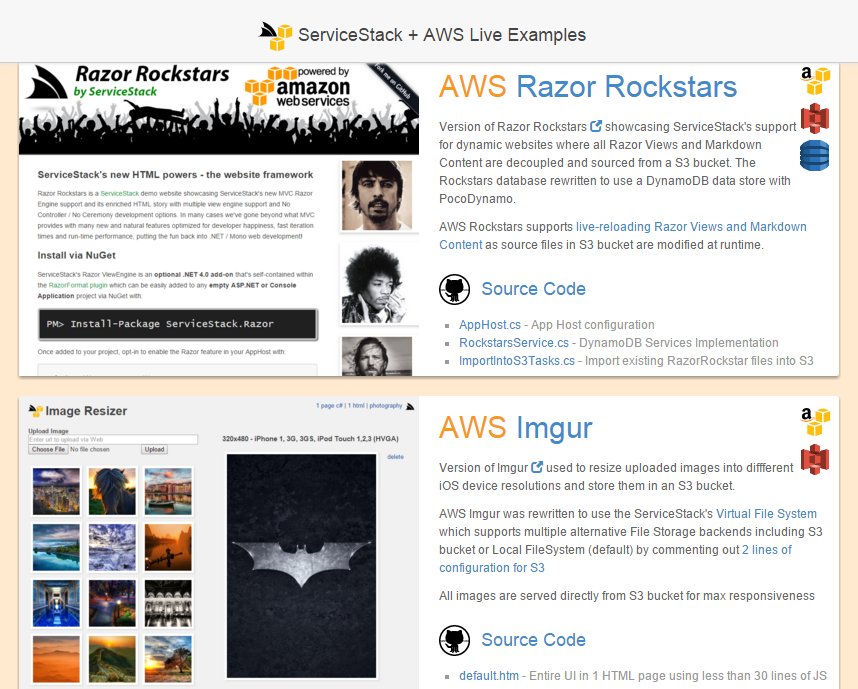

AWS Live Examples

To demonstrate the ease with which you can build AWS-powered solutions with ServiceStack, we've rewritten 6 of our existing Live Demos to use a pure AWS managed backend using:

- Amazon DynamoDB for data persistence

- Amazon S3 for file storage

- Amazon SQS for background processing of MQ requests

- Amazon SES for sending emails

Simple AppHost Configuration

A good indication of how simple it is to build ServiceStack + AWS solutions is the size of the AppHost, which contains all the configuration for the 5 different Apps below. They utilize all the AWS technologies listed above and are contained within a single ASP.NET Web Application, where each application's UI and back-end Service implementation are encapsulated under their respective sub directories.

AWS Razor Rockstars

Maintain Website Content in S3

The implementation for AWS Razor Rockstars is kept with all the other ports of Razor Rockstars in the RazorRockstars repository. The main difference that stands out with RazorRockstars.S3 is that none of the App's content is contained within the project: all its Razor Views, Markdown Content, imgs, js, css, etc. are instead served directly from an S3 Bucket :)

This is simply enabled by overriding GetVirtualFileSources() and adding the new S3VirtualPathProvider to the list of file sources:

    public class AppHost : AppHostBase
    {
        public override void Configure(Container container)
        {
            //All Razor Views, Markdown Content, imgs, js, css, etc are served from an S3 Bucket
            var s3 = new AmazonS3Client(AwsConfig.AwsAccessKey, AwsConfig.AwsSecretKey, RegionEndpoint.USEast1);
            VirtualFiles = new S3VirtualPathProvider(s3, AwsConfig.S3BucketName, this);
        }

        public override List<IVirtualPathProvider> GetVirtualFileSources()
        {
            //Add S3 Bucket as lowest priority Virtual Path Provider
            var pathProviders = base.GetVirtualFileSources();
            pathProviders.Add(VirtualFiles);
            return pathProviders;
        }
    }
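The following minimal, self-contained C# sketch shows what "lowest priority" means in practice. `IFileSource`, `DictionaryFileSource` and `CascadingSources` are hypothetical stand-ins, not ServiceStack types. The sketch assumes, as the comment above implies, that file sources are consulted in list order, so the S3 provider appended last only acts as a fallback.

```csharp
using System.Collections.Generic;

// Hypothetical stand-in for a file source; not ServiceStack's IVirtualPathProvider.
public interface IFileSource
{
    string Name { get; }
    string GetFile(string virtualPath); // null when this source doesn't have the file
}

public sealed class DictionaryFileSource : IFileSource
{
    private readonly Dictionary<string, string> files;

    public DictionaryFileSource(string name, Dictionary<string, string> files)
    {
        Name = name;
        this.files = files;
    }

    public string Name { get; }

    public string GetFile(string virtualPath) =>
        files.TryGetValue(virtualPath, out var contents) ? contents : null;
}

public static class CascadingSources
{
    // Sources are consulted in list order: the first match wins, so a source
    // appended to the end of the list (like the S3 bucket above) is only a fallback.
    public static (string Source, string Contents)? Resolve(IEnumerable<IFileSource> sources, string virtualPath)
    {
        foreach (var source in sources)
        {
            var contents = source.GetFile(virtualPath);
            if (contents != null)
                return (source.Name, contents);
        }
        return null;
    }
}
```

With this ordering, a file that exists both locally and in the bucket is served from the local source. Files that exist only in the bucket still resolve.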

The code to import RazorRockstars content into an S3 bucket is trivial. We use a local FileSystem provider to get all the files we're interested in from the main ASP.NET RazorRockstars project's folder, then write them to the configured S3 VirtualFiles Provider:

    var s3Client = new AmazonS3Client(AwsConfig.AwsAccessKey, AwsConfig.AwsSecretKey, RegionEndpoint.USEast1);
    var s3 = new S3VirtualPathProvider(s3Client, AwsConfig.S3BucketName, appHost);
    var fs = new FileSystemVirtualPathProvider(appHost, "~/../RazorRockstars.WebHost".MapHostAbsolutePath());

    var skipDirs = new[] { "bin", "obj" };
    var matchingFileTypes = new[] { "cshtml", "md", "css", "js", "png", "jpg" };

    //Update links to reference the new S3 AppHost.cs + RockstarsService.cs source code
    var replaceHtmlTokens = new Dictionary<string, string> {
        { "title-bg.png", "title-bg-aws.png" }, //S3 Title Background
        { "https://gist.github.com/3617557.js", "https://gist.github.com/mythz/396dbf54ce6079cc8b2d.js" },
        { "https://gist.github.com/3616766.js", "https://gist.github.com/mythz/ca524426715191b8059d.js" },
        { "RazorRockstars.WebHost/RockstarsService.cs", "RazorRockstars.S3/RockstarsService.cs" },
    };

    foreach (var file in fs.GetAllFiles())
    {
        if (skipDirs.Any(x => file.VirtualPath.StartsWith(x))) continue;
        if (!matchingFileTypes.Contains(file.Extension)) continue;

        if (file.Extension == "cshtml")
        {
            var html = file.ReadAllText();
            replaceHtmlTokens.Each(x => html = html.Replace(x.Key, x.Value));
            s3.WriteFile(file.VirtualPath, html);
        }
        else
        {
            s3.WriteFile(file);
        }
    }

During the import we also update the links in the Razor *.cshtml pages to reference the new RazorRockstars.S3 content.

Update S3 Bucket to enable LiveReload of Razor Views and Markdown

Another nice feature of having all content maintained in an S3 Bucket is that you can just change files in the S3 Bucket directly and have all App Servers immediately reload the Razor Views, Markdown content and static resources without redeploying.

CheckLastModifiedForChanges

To enable this feature we just tell the Razor and Markdown plugins to check the source file for changes before displaying each page:

    GetPlugin<MarkdownFormat>().CheckLastModifiedForChanges = true;
    Plugins.Add(new RazorFormat { CheckLastModifiedForChanges = true });

When this is enabled, the View Engines check the ETag of the source file to find out whether it has changed. If it has, they rebuild the view and replace it with the new one before rendering it. Given S3 supports object versioning, this feature should enable a new class of use-cases for developing Content Heavy management sites with ServiceStack.
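The ETag check described above can be sketched as a small cache. On every render it asks for the source's current ETag, and it recompiles only when that ETag differs from the cached one. This is a hypothetical illustration, not RazorFormat's implementation. `getETag` stands in for a remote call such as an S3 HEAD request, and `compileView` stands in for view compilation.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical sketch of "check the source's ETag before rendering";
// not ServiceStack's RazorFormat/MarkdownFormat implementation.
public sealed class EtagCheckedViewCache
{
    private readonly Func<string, string> getETag;     // stands in for a remote call, e.g. an S3 HEAD request
    private readonly Func<string, string> compileView; // stands in for (expensive) view compilation
    private readonly Dictionary<string, (string ETag, string Compiled)> cache =
        new Dictionary<string, (string ETag, string Compiled)>();

    public EtagCheckedViewCache(Func<string, string> getETag, Func<string, string> compileView)
    {
        this.getETag = getETag;
        this.compileView = compileView;
    }

    public int RemoteChecks { get; private set; }
    public int Compilations { get; private set; }

    public string Render(string path)
    {
        RemoteChecks++; // every render pays for one remote ETag lookup
        var currentETag = getETag(path);
        if (cache.TryGetValue(path, out var entry) && entry.ETag == currentETag)
            return entry.Compiled; // unchanged: reuse the compiled view

        Compilations++; // first use or changed source: rebuild and replace
        var compiled = compileView(path);
        cache[path] = (currentETag, compiled);
        return compiled;
    }
}
```

The `RemoteChecks` counter makes the cost visible: it grows with every render, even when nothing has changed.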

Explicit RefreshPage

One drawback of enabling CheckLastModifiedForChanges is that it forces a remote S3 call for each view before rendering it.

A more efficient approach is to instead notify the App Servers which files have changed so they can reload them once, alleviating the need for multiple ETag checks at runtime, which is the approach we've taken with the UpdateS3 Service:

    if (request.Razor)
    {
        var kurtRazor = VirtualFiles.GetFile("stars/dead/cobain/default.cshtml");
        VirtualFiles.WriteFile(kurtRazor.VirtualPath,
            UpdateContent("UPDATED RAZOR", kurtRazor.ReadAllText(), request.Clear));
        HostContext.GetPlugin<RazorFormat>().RefreshPage(kurtRazor.VirtualPath); //Force reload of Razor View
    }

    var kurtMarkdown = VirtualFiles.GetFile("stars/dead/cobain/Content.md");
    VirtualFiles.WriteFile(kurtMarkdown.VirtualPath,
        UpdateContent("UPDATED MARKDOWN", kurtMarkdown.ReadAllText(), request.Clear));
    HostContext.GetPlugin<MarkdownFormat>().RefreshPage(kurtMarkdown.VirtualPath); //Force reload of Markdown
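By contrast, explicit invalidation compiles a view once and rebuilds it only after the writer signals a change, so rendering needs no remote calls. The hypothetical `RefreshableViewCache` below sketches that trade-off. Its `Refresh(path)` plays a role similar in spirit to `RefreshPage(virtualPath)`, but it is not ServiceStack's code.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical sketch of explicit invalidation: a view is compiled once and only
// rebuilt after Refresh(path) is called, so Render() never makes a remote call.
public sealed class RefreshableViewCache
{
    private readonly Func<string, string> compileView;
    private readonly Dictionary<string, string> compiled = new Dictionary<string, string>();

    public RefreshableViewCache(Func<string, string> compileView) => this.compileView = compileView;

    public int Compilations { get; private set; }

    public string Render(string path)
    {
        if (!compiled.TryGetValue(path, out var view))
        {
            Compilations++;
            view = compileView(path);
            compiled[path] = view;
        }
        return view;
    }

    // Called by whoever wrote the new content, similar in spirit to RefreshPage(virtualPath).
    public void Refresh(string path) => compiled.Remove(path);
}
```

The cost is that every writer must remember to call `Refresh`. A file changed directly in the bucket stays stale until that call is made.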

Live Reload Demo

You can test live reloading of the above Service with the routes below which modify Markdown and Razor views with the current time:

- /updateS3 - Update Markdown Content

- /updateS3?razor=true - Update Razor View

- /updateS3?razor=true&clear=true - Revert changes

This forces a recompile of the modified views, which greatly benefits from a fast CPU. It is a bit slow on our Live Demos server, which runs on an m1.small instance shared with 25 other ASP.NET Web Applications.

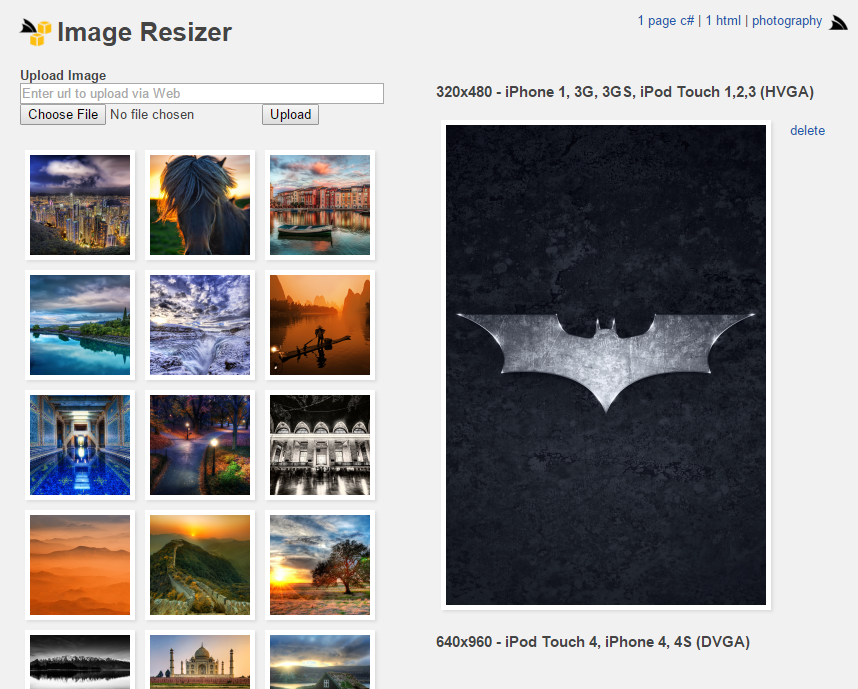

AWS Imgur

S3VirtualPathProvider

The backend ImageService.cs implementation for AWS Imgur has been rewritten to use the Virtual FileSystem instead of accessing the FileSystem directly. The benefit of this approach is that with 2 lines of configuration we can have files written to an S3 Bucket instead:

    var s3Client = new AmazonS3Client(AwsConfig.AwsAccessKey, AwsConfig.AwsSecretKey, RegionEndpoint.USEast1);
    VirtualFiles = new S3VirtualPathProvider(s3Client, AwsConfig.S3BucketName, this);

If we comment out the above configuration any saved files are instead written to the local FileSystem (default).

Using managed S3 File Storage brings two benefits. It improves scalability, as your App Servers can remain stateless. It also improves performance, as the overhead of serving static assets can be offloaded by referencing the S3 Bucket directly. For even better responsiveness you can connect the S3 bucket to a CDN.
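Offloading static assets usually means emitting URLs that point at the bucket, or at a CDN in front of it, rather than at the App Server. The helper below is a hypothetical sketch that builds a virtual-hosted-style S3 URL for a virtual path. It assumes the objects are publicly readable and the bucket name is DNS-compatible. The optional `cdnBaseUrl` parameter (also hypothetical) swaps in a CDN host.

```csharp
using System;
using System.Linq;

public static class S3Urls
{
    // Hypothetical helper: maps a virtual path to a public URL for the object.
    // Assumes publicly readable objects and a DNS-compatible bucket name.
    public static string ToPublicUrl(string bucketName, string virtualPath, string cdnBaseUrl = null)
    {
        // Escape each path segment individually so the "/" separators are preserved.
        var key = string.Join("/", virtualPath.TrimStart('/')
            .Split('/')
            .Select(segment => Uri.EscapeDataString(segment)));

        var baseUrl = cdnBaseUrl != null
            ? cdnBaseUrl.TrimEnd('/')
            : $"https://{bucketName}.s3.amazonaws.com";
        return $"{baseUrl}/{key}";
    }
}
```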

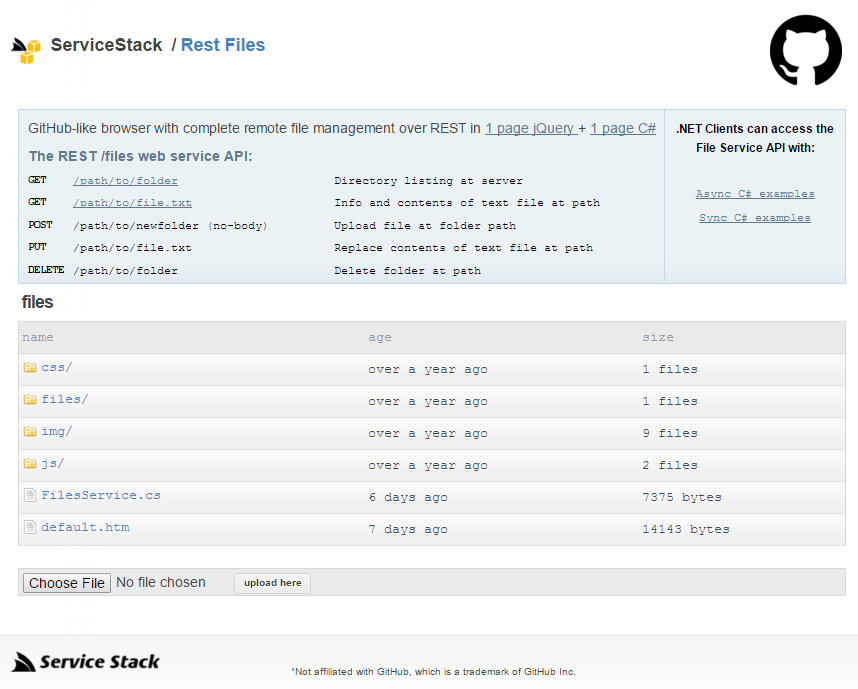

REST Files

The GitHub-like REST Files explorer is another example that was rewritten to use ServiceStack's Virtual File System. It now provides remote file management of an S3 Bucket behind a REST-ful API.

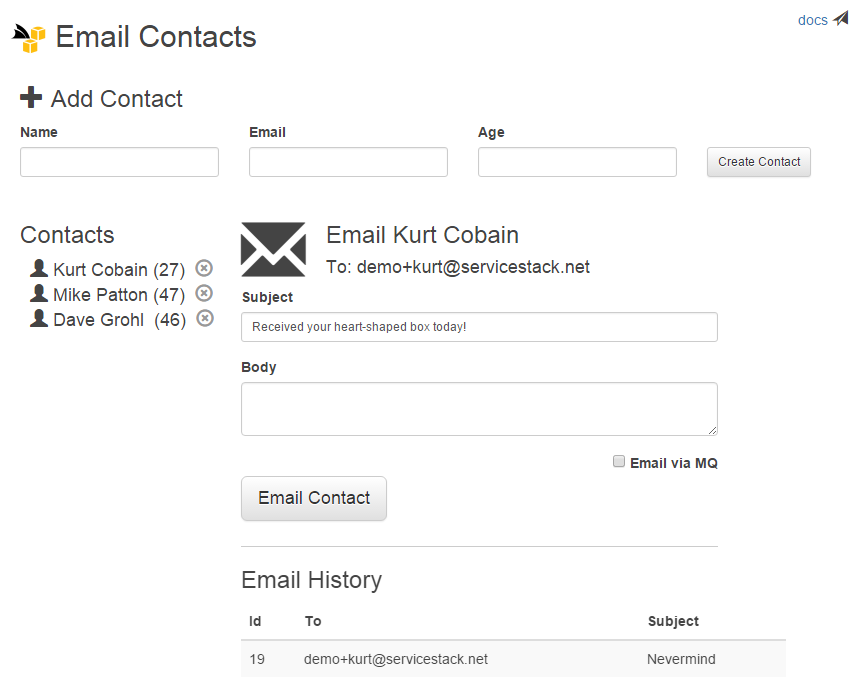

AWS Email Contacts

SqsMqServer

The AWS Email Contacts example shows the same long-running EmailContact Service being executed from both HTTP and the MQ Server, just by changing which url the HTML Form is posted to:

    <form id="form-emailcontact" method="POST"
        action="@(new EmailContact().ToPostUrl())"
        data-action-alt="@(new EmailContact().ToOneWayUrl())">
        ...
        <div>
            <input type="checkbox" id="chkAction" data-click="toggleAction" />
            <label for="chkAction">Email via MQ</label>
        </div>
        ...
    </form>

The urls are populated from a typed Request DTO using the Reverse Routing Extension methods.

Checking the Email via MQ checkbox fires the JavaScript handler below, which is registered as a declarative event in ss-utils.js:

    $(document).bindHandlers({
        toggleAction: function() {
            var $form = $(this).closest("form"), action = $form.attr("action");
            $form.attr("action", $form.data("action-alt"))
                 .data("action-alt", action);
        }
    });

The code to configure and start an SQS MQ Server is similar to other MQ Servers:

    container.Register<IMessageService>(c => new SqsMqServer(
        AwsConfig.AwsAccessKey, AwsConfig.AwsSecretKey, RegionEndpoint.USEast1) {
        DisableBuffering = true, // Trade-off latency vs efficiency
    });

    var mqServer = container.Resolve<IMessageService>();
    mqServer.RegisterHandler<EmailContacts.EmailContact>(ExecuteMessage);
    AfterInitCallbacks.Add(appHost => mqServer.Start());

When an MQ Server is registered, ServiceStack automatically publishes Requests accepted on the "One Way" pre-defined route to the registered MQ broker. The message is later picked up and executed by a Message Handler on a background Thread.
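The "publish now, execute later" flow can be pictured with an in-memory queue. The `InMemoryOneWayQueue<T>` below is a hypothetical stand-in for an MQ broker built on `BlockingCollection<T>`; it is not SqsMqServer's implementation. `Publish` returns immediately, like posting to the one-way route, while a single background task runs the handler for each message in order.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading.Tasks;

// Hypothetical in-memory stand-in for an MQ broker; not SqsMqServer's implementation.
public sealed class InMemoryOneWayQueue<T> : IDisposable
{
    private readonly BlockingCollection<T> queue = new BlockingCollection<T>();
    private readonly Task worker;

    public InMemoryOneWayQueue(Action<T> handler)
    {
        // A single background worker executes messages in the order they were published.
        worker = Task.Run(() =>
        {
            foreach (var message in queue.GetConsumingEnumerable())
                handler(message);
        });
    }

    // Returns as soon as the message is queued, like posting to the one-way route.
    public void Publish(T message) => queue.Add(message);

    // Stop accepting messages, then wait until every queued message has been handled.
    public void Dispose()
    {
        queue.CompleteAdding();
        worker.Wait();
        queue.Dispose();
    }
}
```

Unlike SQS, nothing in this sketch survives a process restart. A handler that throws would also stop the worker. A managed broker takes care of both concerns.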

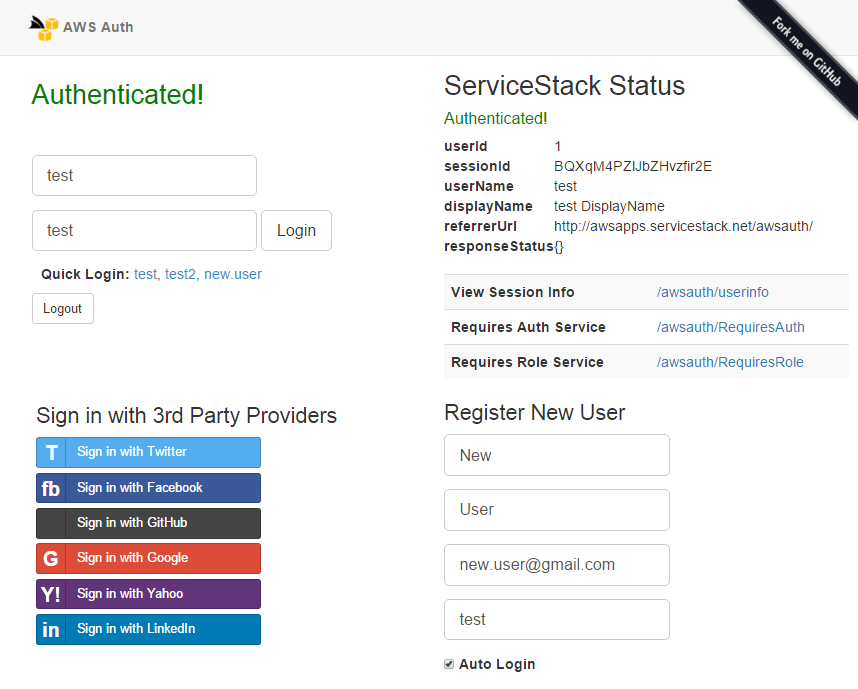

AWS Auth

DynamoDbAuthRepository

AWS Auth is an example showing how easy it is to enable multiple Auth Providers within the same App. It allows Sign-Ins from Twitter, Facebook, GitHub, Google, Yahoo and LinkedIn OAuth providers, as well as HTTP Basic and Digest Auth, normal Registered User logins and Custom User Roles validation. All of these are managed in DynamoDB Tables using the registered DynamoDbAuthRepository below:

    container.Register<IAuthRepository>(new DynamoDbAuthRepository(db, initSchema:true));

Standard registration code is used to configure the AuthFeature with all the different Auth Providers AWS Auth wants to support:

    return new AuthFeature(() => new AuthUserSession(),
        new IAuthProvider[]
        {
            new CredentialsAuthProvider(),              //HTML Form post of UserName/Password credentials
            new BasicAuthProvider(),                    //Sign-in with HTTP Basic Auth
            new DigestAuthProvider(AppSettings),        //Sign-in with HTTP Digest Auth
            new TwitterAuthProvider(AppSettings),       //Sign-in with Twitter
            new FacebookAuthProvider(AppSettings),      //Sign-in with Facebook
            new YahooOpenIdOAuthProvider(AppSettings),  //Sign-in with Yahoo OpenId
            new OpenIdOAuthProvider(AppSettings),       //Sign-in with Custom OpenId
            new GoogleOAuth2Provider(AppSettings),      //Sign-in with Google OAuth2 Provider
            new LinkedInOAuth2Provider(AppSettings),    //Sign-in with LinkedIn OAuth2 Provider
            new GithubAuthProvider(AppSettings),        //Sign-in with GitHub OAuth Provider
        })
    {
        HtmlRedirect = "/awsauth/",             //Redirect back to AWS Auth app after OAuth sign in
        IncludeRegistrationService = true,      //Include ServiceStack's built-in RegisterService
    };

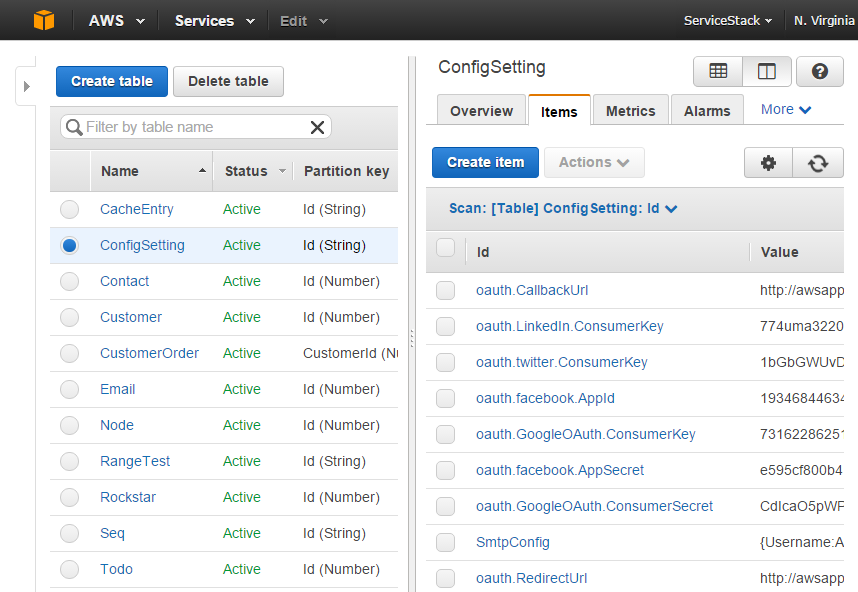

DynamoDbAppSettings

The AuthFeature looks for the OAuth settings for each AuthProvider in the registered AppSettings, which for deployed Release builds gets them from multiple sources. Since DynamoDbAppSettings is registered first in a MultiAppSettings collection, it checks entries in the DynamoDB ConfigSetting Table first before falling back to local Web.config appSettings:

    #if !DEBUG
    AppSettings = new MultiAppSettings(
        new DynamoDbAppSettings(new PocoDynamo(AwsConfig.CreateAmazonDynamoDb()), initSchema:true),
        new AppSettings()); // fallback to Web.config
    #endif
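Conceptually, the cascading lookup works like the hypothetical `CascadingSettings` sketch below. Each source is checked in registration order and the first one that defines a key wins, so a value stored in DynamoDB overrides the same key in Web.config. This is an illustration only and does not reproduce MultiAppSettings' API.

```csharp
using System.Collections.Generic;

// Hypothetical sketch of cascading settings lookup in the spirit of MultiAppSettings;
// it does not reproduce ServiceStack's IAppSettings API.
public sealed class CascadingSettings
{
    private readonly IReadOnlyList<IReadOnlyDictionary<string, string>> sources;

    // Sources are given in priority order, e.g. (DynamoDB values, Web.config values).
    public CascadingSettings(params IReadOnlyDictionary<string, string>[] sources) => this.sources = sources;

    // Returns the value from the first source that defines the key, or null if none do.
    public string GetString(string key)
    {
        foreach (var source in sources)
        {
            if (source.TryGetValue(key, out var value))
                return value;
        }
        return null;
    }
}
```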

Storing production config in DynamoDB reduces the effort for maintaining production settings decoupled from source code. The App Settings were populated in DynamoDB using this simple script which imports its settings from a local appsettings.txt file:

    var fileSettings = new TextFileSettings("~/../../deploy/appsettings.txt".MapHostAbsolutePath());
    var dynamoSettings = new DynamoDbAppSettings(AwsConfig.CreatePocoDynamo());
    dynamoSettings.InitSchema();

    //dynamoSettings.Set("SmtpConfig", "{Username:REPLACE_USER,Password:REPLACE_PASS,Host:AWS_HOST,Port:587}");
    foreach (var config in fileSettings.GetAll())
    {
        dynamoSettings.Set(config.Key, config.Value);
    }

ConfigSettings Table in DynamoDB

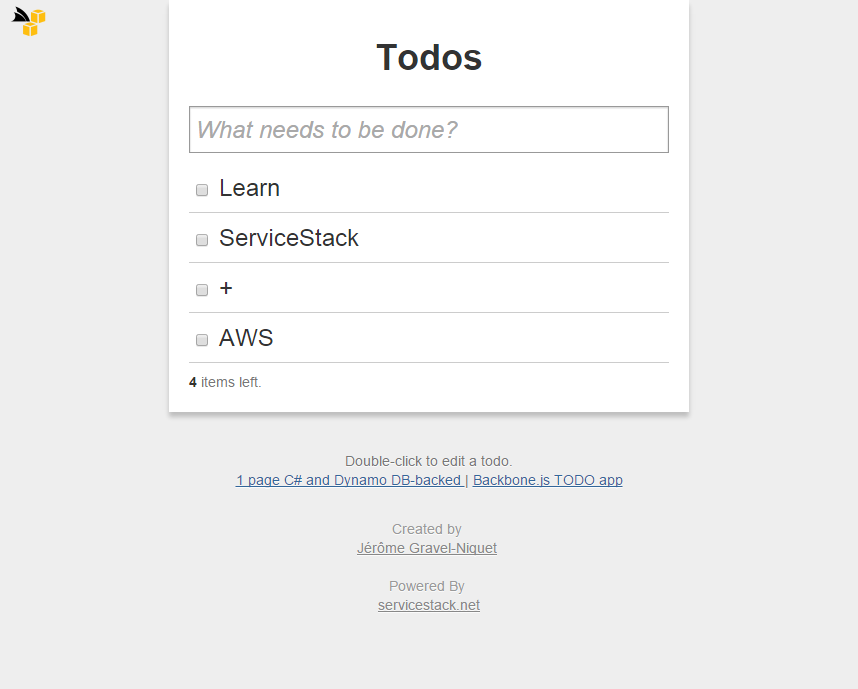

AWS Todos

The Backbone TODO App is a well-known minimal "Hello, World" app used to showcase and compare JavaScript client frameworks. It also serves as a good illustration of the clean and minimal code it takes to build a simple CRUD Service utilizing a DynamoDB back-end with the new PocoDynamo client:

    public class TodoService : Service
    {
        public IPocoDynamo Dynamo { get; set; }

        public object Get(Todo todo)
        {
            if (todo.Id != default(long))
                return Dynamo.GetItem<Todo>(todo.Id);

            return Dynamo.GetAll<Todo>();
        }

        public Todo Post(Todo todo)
        {
            Dynamo.PutItem(todo);
            return todo;
        }

        public Todo Put(Todo todo)
        {
            return Post(todo);
        }

        public void Delete(Todo todo)
        {
            Dynamo.DeleteItem<Todo>(todo.Id);
        }
    }

As it's a clean POCO, the Todo model can also be reused as-is throughout ServiceStack in Redis, OrmLite, Caching, Config, DTO's, etc:

    public class Todo
    {
        [AutoIncrement]
        public long Id { get; set; }
        public string Content { get; set; }
        public int Order { get; set; }
        public bool Done { get; set; }
    }

PocoDynamo

PocoDynamo is a highly productive, feature-rich, typed .NET client which extends ServiceStack's Simple POCO life by enabling re-use of your code-first data models with Amazon's industrial strength and highly-scalable NoSQL DynamoDB.

First class support for reusable, code-first POCOs

It works conceptually similar to ServiceStack's other code-first OrmLite and Redis clients by providing a high-fidelity, managed client that enhances AWSSDK's low-level IAmazonDynamoDB client, with rich, native support for intuitively mapping your re-usable code-first POCO Data models into DynamoDB Data Types.
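To illustrate the kind of mapping involved, here is a hypothetical sketch that picks a DynamoDB attribute type descriptor (`S`, `N`, `B`, `BOOL`, `SS`, `NS`, `L`, `M`) for a .NET type. The descriptors are DynamoDB's own. The specific choices for enums, `DateTime` and `Guid` are assumptions and may not match how PocoDynamo actually stores them.

```csharp
using System;
using System.Collections;
using System.Collections.Generic;

public static class DynamoTypeMapping
{
    private static readonly HashSet<Type> NumericTypes = new HashSet<Type>
    {
        typeof(byte), typeof(sbyte), typeof(short), typeof(ushort), typeof(int), typeof(uint),
        typeof(long), typeof(ulong), typeof(float), typeof(double), typeof(decimal),
    };

    // Hypothetical sketch: picks a DynamoDB attribute type descriptor for a .NET type.
    public static string ToAttributeType(Type type)
    {
        var t = Nullable.GetUnderlyingType(type) ?? type;             // int? maps like int
        if (t == typeof(string)) return "S";
        if (t == typeof(bool)) return "BOOL";
        if (t == typeof(byte[])) return "B";
        if (NumericTypes.Contains(t) || t.IsEnum) return "N";         // assumption: enums stored as numbers
        if (t == typeof(DateTime) || t == typeof(Guid)) return "S";   // assumption: stored as strings
        if (t.IsGenericType && t.GetGenericTypeDefinition() == typeof(HashSet<>))
        {
            var element = t.GetGenericArguments()[0];
            if (element == typeof(string)) return "SS";                // string set
            if (NumericTypes.Contains(element)) return "NS";           // number set
        }
        if (typeof(IDictionary).IsAssignableFrom(t)) return "M";
        if (typeof(IEnumerable).IsAssignableFrom(t)) return "L";
        return "M"; // other POCOs become nested maps
    }
}
```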

PocoDynamo Features

Advanced idiomatic .NET client

PocoDynamo provides an idiomatic API that leverages .NET's advanced language features. Its streaming API's return lazily evaluated IEnumerable<T> responses that transparently perform multi-paged requests behind-the-scenes as the result set is iterated. Its high-level API's provide a clean lightweight adapter to transparently map between .NET built-in data types and DynamoDB's low-level attribute values. Its efficient batched API's take advantage of DynamoDB's BatchWriteItem and BatchGetItem batch operations to perform the minimum number of requests required to implement each API.
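The "minimum number of requests" follows from DynamoDB's per-call limits: BatchWriteItem accepts at most 25 put or delete requests, and BatchGetItem at most 100 keys. The sketch below uses hypothetical names and splits a sequence into the fewest batches that respect a given limit. A complete client would also need to resend any `UnprocessedItems`/`UnprocessedKeys` that DynamoDB returns.

```csharp
using System;
using System.Collections.Generic;

public static class DynamoBatching
{
    // DynamoDB per-request limits for its batch operations.
    public const int MaxBatchWriteItems = 25;  // BatchWriteItem: put/delete requests per call
    public const int MaxBatchGetKeys = 100;    // BatchGetItem: keys per call

    // Splits items into the fewest consecutive batches that respect batchSize.
    public static List<List<T>> ToBatches<T>(IEnumerable<T> items, int batchSize)
    {
        if (batchSize <= 0) throw new ArgumentOutOfRangeException(nameof(batchSize));

        var batches = new List<List<T>>();
        foreach (var item in items)
        {
            if (batches.Count == 0 || batches[batches.Count - 1].Count == batchSize)
                batches.Add(new List<T>(batchSize));
            batches[batches.Count - 1].Add(item);
        }
        return batches;
    }
}
```

For example, writing 60 items needs 3 BatchWriteItem calls (25 + 25 + 10), and fetching 250 keys needs 3 BatchGetItem calls.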

Typed, LINQ provider for Query and Scan Operations

PocoDynamo also provides rich, typed LINQ-like querying support for constructing DynamoDB Query and Scan operations, dramatically reducing the effort to query DynamoDB, enhancing readability whilst benefiting from Type safety in .NET.

Declarative Tables and Indexes

Behind the scenes DynamoDB is built on a dynamic schema which, whilst open and flexible, can be cumbersome to work with directly in typed languages like C#. PocoDynamo bridges the gap and lets your app bind to impl-free, declarative POCO data models that provide an ideal high-level abstraction for your business logic. This hides a lot of the complexity of working with DynamoDB, dramatically reducing the code and effort required whilst increasing the readability and maintainability of your App's business logic.

It includes optimal support for defining simple local indexes which only require declaratively annotating properties to index with an [Index] attribute.

Typed POCO Data Models can be used to define more complex Local and Global DynamoDB Indexes by implementing IGlobalIndex<Poco> or ILocalIndex<Poco> interfaces. PocoDynamo uses these, along with the POCO's class structure, to construct Table indexes at the same time it creates the tables.

In this way the Type is used as a DSL to define DynamoDB indexes where the definition of the index is decoupled from the imperative code required to create and query it, reducing the effort to create them whilst improving the visualization and understanding of your DynamoDB architecture which can be inferred at a glance from the POCO's Type definition. PocoDynamo also includes first-class support for constructing and querying Global and Local Indexes using a familiar, typed LINQ provider.
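As a rough sketch of the "Type as DSL" idea, the code below uses reflection to derive local index definitions from attribute-annotated properties. `IndexedAttribute`, `LocalIndexDef`, the `{Type}{Property}Index` naming convention and the sample `Order` POCO are all hypothetical; they are not PocoDynamo's `[Index]` attribute or naming rules. Each annotated property becomes the range key of a local secondary index that shares the table's hash key. In DynamoDB, local secondary indexes also require the table itself to have a composite (hash + range) primary key, which the sample models with `CustomerId` and `OrderId`.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;

// Hypothetical marker attribute standing in for PocoDynamo's [Index].
[AttributeUsage(AttributeTargets.Property)]
public sealed class IndexedAttribute : Attribute { }

public sealed record LocalIndexDef(string IndexName, string HashKey, string RangeKey);

public static class IndexConventions
{
    // Every [Indexed] property becomes the range key of a local secondary index
    // that shares the table's hash key (hypothetical naming convention).
    public static List<LocalIndexDef> GetLocalIndexes(Type pocoType, string hashKey) =>
        pocoType.GetProperties(BindingFlags.Public | BindingFlags.Instance)
            .Where(p => p.GetCustomAttribute<IndexedAttribute>() != null)
            .Select(p => new LocalIndexDef($"{pocoType.Name}{p.Name}Index", hashKey, p.Name))
            .ToList();
}

// Sample POCO: table keyed by CustomerId (hash) + OrderId (range).
public class Order
{
    public long CustomerId { get; set; }
    public long OrderId { get; set; }
    [Indexed] public decimal Total { get; set; }
    [Indexed] public DateTime CreatedDate { get; set; }
    public string Notes { get; set; }
}
```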

Resilient

Each operation is called within a managed execution which transparently absorbs the variance in cloud services reliability with automatic retries of temporary errors, using an exponential backoff as recommended by Amazon.
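A managed execution of this kind can be sketched as a generic retry helper. The `Retry.Execute` below is hypothetical, not PocoDynamo's code. The caller decides which exceptions are temporary, and the upper bound on each delay doubles from `baseDelayMs` up to `maxDelayMs`. Each wait is randomized ("full jitter", a variant AWS also describes) so that many clients don't retry in lockstep. The `sleep` parameter exists only so the delays can be observed or skipped in tests.

```csharp
using System;
using System.Threading;

public static class Retry
{
    // Hypothetical sketch: retries operations that fail with errors the caller deems
    // temporary, waiting longer (exponentially, with random jitter) after each failure.
    public static T Execute<T>(Func<T> operation, Func<Exception, bool> isTransient,
        int maxAttempts = 5, int baseDelayMs = 50, int maxDelayMs = 2000, Action<int> sleep = null)
    {
        if (sleep == null) sleep = ms => Thread.Sleep(ms);
        var random = new Random();

        for (var attempt = 1; ; attempt++)
        {
            try
            {
                return operation();
            }
            catch (Exception ex) when (attempt < maxAttempts && isTransient(ex))
            {
                // Upper bound doubles each attempt (50, 100, 200, ... ms) but never exceeds maxDelayMs.
                var exponent = Math.Min(attempt - 1, 20);
                var cap = (int)Math.Min(maxDelayMs, (long)baseDelayMs << exponent);
                // "Full jitter": wait a random time between 0 and the current upper bound.
                sleep(random.Next(0, cap + 1));
            }
        }
    }
}
```

Errors that aren't classified as temporary, and the last failed attempt, propagate to the caller unchanged.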

Enhances existing APIs

PocoDynamo API's are a lightweight layer modeled after DynamoDB API's. This makes it predictable which DynamoDB operations each API calls under the hood, retaining your existing knowledge investment in DynamoDB.

When more flexibility is needed you can access the low-level AmazonDynamoDBClient from the IPocoDynamo.DynamoDb property and talk with it directly.

Whilst PocoDynamo doesn't save you from needing to learn DynamoDB, its deep integration with .NET and rich support for POCO's smooth out the impedance mismatches to enable a type-safe, idiomatic, productive development experience.

High-level features

PocoDynamo includes its own high-level features to improve the re-usability of your POCO models and the development experience of working with DynamoDB with support for Auto Incrementing sequences, Query expression builders, auto escaping and converting of Reserved Words to placeholder values, configurable converters, scoped client configurations, related items, conventions, aliases, dep-free data annotation attributes and more.
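Reserved-word handling is one example of these features. DynamoDB expressions can't use reserved words such as `Order` (a property on the Todo model) as attribute names directly, so those names are replaced with `#`-prefixed placeholders declared in `ExpressionAttributeNames`. The sketch below is hypothetical and only includes a tiny subset of DynamoDB's reserved word list.

```csharp
using System;
using System.Collections.Generic;

public static class ReservedWords
{
    // Tiny illustrative subset; DynamoDB's actual reserved word list has several hundred entries.
    private static readonly HashSet<string> Reserved =
        new HashSet<string>(StringComparer.OrdinalIgnoreCase) { "Order", "Date", "Name", "Status", "Count" };

    // Returns the token to use inside an expression. Reserved names are replaced by a
    // "#"-prefixed placeholder, which is registered in expressionAttributeNames.
    public static string Escape(string attributeName, IDictionary<string, string> expressionAttributeNames)
    {
        if (!Reserved.Contains(attributeName))
            return attributeName;

        var placeholder = "#" + attributeName;
        expressionAttributeNames[placeholder] = attributeName;
        return placeholder;
    }
}
```

For example, a filter on the Todo's Order property would become `#Order > :minOrder`, with `#Order` mapped back to `Order` in the expression attribute names.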

Download

PocoDynamo is contained in ServiceStack's AWS NuGet package:

PM> Install-Package ServiceStack.Aws

PocoDynamo has a 10 Tables free-quota usage limit which is unlocked with a license key.

To get started we'll need to create an instance of AmazonDynamoDBClient with your AWS credentials and Region info:

    var awsDb = new AmazonDynamoDBClient(AWS_ACCESS_KEY, AWS_SECRET_KEY, RegionEndpoint.USEast1);

Then to create a PocoDynamo client pass the configured AmazonDynamoDBClient instance above:

    var db = new PocoDynamo(awsDb);

Clients are Thread-Safe, so you can register them as a singleton and share the same instance throughout your App.

Creating a Table with PocoDynamo

PocoDynamo enables a declarative code-first approach where it's able to create DynamoDB Table schemas from just your POCO class definition. Whilst you could call the db.CreateTable<Todo>() API and create the Table directly, the recommended approach is instead to register all the tables your App uses with PocoDynamo on Startup, then just call InitSchema(), which will go through and create all missing tables:

    //PocoDynamo
    var db = new PocoDynamo(awsDb)
        .RegisterTable<Todo>();

    db.InitSchema();

    db.GetTableNames().PrintDump();

In this way your App ends up in the same state, with all tables created, whether it was started with no tables, all tables or only a partial list of tables. After the tables are created we query DynamoDB to dump its entire list of Tables. If you started with an empty DynamoDB instance, this would print the single Todo table name to the Console:

    [
        Todo
    ]
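The idempotent behavior of InitSchema() can be summarized as "create only what's missing". Below is a hypothetical sketch of that logic over table names; it is not PocoDynamo's implementation. A real client must also wait for newly created DynamoDB tables to become ACTIVE before using them.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class SchemaInit
{
    // Hypothetical sketch of an idempotent InitSchema(): only registered tables that
    // don't exist yet are created, so it's safe to run on every App startup.
    public static List<string> CreateMissingTables(IEnumerable<string> registeredTables,
        IEnumerable<string> existingTables, Action<string> createTable)
    {
        var existing = new HashSet<string>(existingTables);
        var missing = registeredTables.Distinct().Where(t => !existing.Contains(t)).ToList();
        foreach (var table in missing)
            createTable(table); // a real client would also wait until the table is ACTIVE
        return missing;
    }
}
```

Calling it a second time with the same registrations creates nothing, whatever state the database started in.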

Managed DynamoDB Client

Every request in PocoDynamo is invoked inside a managed execution where any temporary errors are retried using AWS's recommended exponential backoff.

All PocoDynamo API's returning IEnumerable<T> return a lazily evaluated stream which, behind-the-scenes, sends multiple paged requests as needed whilst the sequence is being iterated. As LINQ APIs are also lazily evaluated, you can use Take() to download only the exact number of results you need. So you can query the first 100 table names with:

    //PocoDynamo
    var first100TableNames = db.GetTableNames().Take(100).ToList();

and PocoDynamo will only make the minimum number of requests required to fetch the first 100 results.
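The lazily paged stream can be sketched with a C# iterator. In this hypothetical `Paging.Stream`, `fetchPage` returns one page of results plus a continuation key (null when there are no more pages), mirroring DynamoDB's ExclusiveStartKey/LastEvaluatedKey pattern. Because of `yield return`, the next page is only requested once the consumer iterates past the current one.

```csharp
using System;
using System.Collections.Generic;

public static class Paging
{
    // Hypothetical sketch of a lazily paged stream. fetchPage(startKey) returns one page of
    // results plus the key to continue from (null when there are no more pages), mirroring
    // DynamoDB's ExclusiveStartKey / LastEvaluatedKey pattern.
    public static IEnumerable<T> Stream<T>(Func<string, (List<T> Items, string NextKey)> fetchPage)
    {
        string startKey = null;
        do
        {
            var (items, nextKey) = fetchPage(startKey);
            foreach (var item in items)
                yield return item; // the next page is only fetched once this one is exhausted
            startKey = nextKey;
        } while (startKey != null);
    }
}
```

With pages of 100 items, taking the first 150 results from the stream triggers exactly two page requests.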

PocoDynamo Examples

DynamoDbCacheClient

We've been quick to benefit from the productivity advantages of PocoDynamo ourselves, where we've used it to rewrite DynamoDbCacheClient. It is now just 2/3 the size and much easier to maintain than the existing Community-contributed version, whilst at the same time we've extended it with even more functionality so that it now implements the ICacheClientExtended API.

DynamoDbAuthRepository

PocoDynamo's code-first Typed API made it much easier to implement value-added DynamoDB functionality like the new DynamoDbAuthRepository. Because it shares a similar code-first POCO approach with OrmLite, it ended up being a straight-forward port of the existing OrmLiteAuthRepository, where it was able to reuse the existing UserAuth and UserAuthDetails POCO data models.

DynamoDbTests

Despite its young age we've added a comprehensive test suite behind PocoDynamo which has become our exclusive client for developing DynamoDB-powered Apps.

PocoDynamo Docs

This only scratches the surface of what PocoDynamo can do. Comprehensive documentation is available in the PocoDynamo project explaining how it compares to DynamoDB's AWSSDK client, how to use it to store related data, how to query indexes and how to use its rich LINQ querying functionality to query DynamoDB.

Getting started with AWS + ServiceStack Guides

Amazon offers managed hosting for a number of RDBMS and Caching servers which ServiceStack provides first-class clients for. We've provided a number of guides to walk through setting up these services from your AWS account and connect to them with ServiceStack's typed .NET clients.

AWS RDS PostgreSQL and OrmLite

AWS RDS Aurora and OrmLite

AWS RDS MySQL and OrmLite

AWS RDS MariaDB and OrmLite

AWS RDS SQL Server and OrmLite

AWS ElastiCache Redis and ServiceStack

The source code used in each guide is also available in the AwsGettingStarted repo.

Community

We're excited to learn that Andreas Niedermair's new ServiceStack book is now available!

Mastering ServiceStack by Andreas Niedermair

Mastering ServiceStack covers real-life problems that occur over the lifetime of a distributed system and how to solve them by deeply understanding the tools of ServiceStack. Distributed systems are the enterprise solution that provides flexibility, reliability, scaling, and performance. ServiceStack is an outstanding tool belt for creating such a system in a frictionless manner, and is especially well designed and fun to use.

The book starts with an introduction covering the essentials, but assumes you are just refreshing, are a very fast learner, or are an expert in building web services. Then, the book explains ServiceStack's data transfer object patterns and teaches you how it differs from other methods of building web services with different protocols, such as SOAP and SOA. It also introduces more low-level details such as how to extend the User Auth, message queues and concepts on how the technology works.

By the end of this book, you will understand the concepts, framework, issues, and resolutions related to ServiceStack.

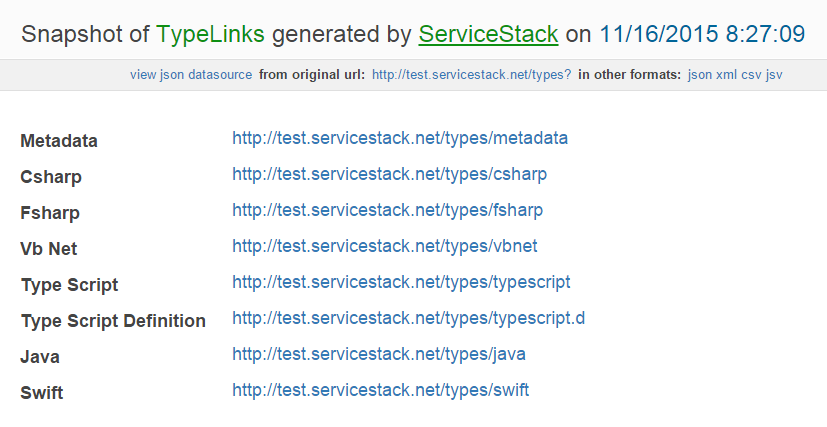

TypeScript

We've extended our existing TypeScript support for generating interface definitions for your DTO's with the much requested ExportAsTypes=true option, which instead generates non-ambient concrete TypeScript Types. This option is enabled at the new /types/typescript route, so you can get both concrete types and interface definitions at:

- /types/typescript - for generating concrete module and classes

- /types/typescript.d - for generating ambient interface definitions

Auto hyper-linked default HTML5 Pages

Any url strings contained in default HTML5 Report Pages are automatically converted to hyperlinks.

We can see this in the new /types route, which returns links to all the different Add ServiceStack Reference supported languages:

    public object Any(TypeLinks request)
    {
        var response = new TypeLinksResponse
        {
            Metadata = new TypesMetadata().ToAbsoluteUri(),
            Csharp = new TypesCSharp().ToAbsoluteUri(),
            Fsharp = new TypesFSharp().ToAbsoluteUri(),
            VbNet = new TypesVbNet().ToAbsoluteUri(),
            TypeScript = new TypesTypeScript().ToAbsoluteUri(),
            TypeScriptDefinition = new TypesTypeScriptDefinition().ToAbsoluteUri(),
            Swift = new TypesSwift().ToAbsoluteUri(),
            Java = new TypesJava().ToAbsoluteUri(),
        };
        return response;
    }

Any urls returned are now converted into navigable hyperlinks, e.g: https://test.servicestack.net/types
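Here is a minimal sketch of the url-to-hyperlink conversion, assuming a simple `https?://` pattern. Text outside the matches and the urls themselves are HTML-encoded, and each url is wrapped in an anchor. `AutoLinker` is hypothetical and is not the code behind ServiceStack's HTML5 Report Pages.

```csharp
using System.Net;
using System.Text;
using System.Text.RegularExpressions;

public static class AutoLinker
{
    // Hypothetical sketch; a simple pattern for absolute http(s) urls.
    private static readonly Regex UrlPattern = new Regex(@"https?://[^\s<>""']+");

    // HTML-encodes the text and wraps every url in an anchor tag.
    public static string ToHtml(string text)
    {
        var html = new StringBuilder();
        var last = 0;
        foreach (Match match in UrlPattern.Matches(text))
        {
            html.Append(WebUtility.HtmlEncode(text.Substring(last, match.Index - last)));
            var url = WebUtility.HtmlEncode(match.Value);
            html.Append("<a href=\"").Append(url).Append("\">").Append(url).Append("</a>");
            last = match.Index + match.Length;
        }
        html.Append(WebUtility.HtmlEncode(text.Substring(last)));
        return html.ToString();
    }
}
```

Encoding the plain-text segments separately keeps values such as `a < b` safe to embed in the page.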

New License Key Registration Option

To simplify license key registration when developing and maintaining multiple ServiceStack solutions, we've added a new option where you can now just register your License Key once in the SERVICESTACK_LICENSE Environment Variable.

This lets you set it in one location on your local dev workstations and any CI and deployment Servers, which then lets you freely create multiple ServiceStack solutions without having to register the license key with each project.

Note: you'll need to restart IIS or VS.NET to have them pickup the new Environment Variable.

Virtual File System

ServiceStack's Virtual File System provides a clean abstraction over file-systems enabling the flexibility to elegantly support a wide range of cascading file sources. We've extended this functionality even further in this release with a new read/write API that's now implemented in supported providers:

    public interface IVirtualFiles : IVirtualPathProvider
    {
        void WriteFile(string filePath, string textContents);
        void WriteFile(string filePath, Stream stream);
        void WriteFiles(IEnumerable<IVirtualFile> files, Func<IVirtualFile, string> toPath = null);
        void DeleteFile(string filePath);
        void DeleteFiles(IEnumerable<string> filePaths);
        void DeleteFolder(string dirPath);
    }

Folders are implicitly created when writing a file to folders that don't exist.
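The following hypothetical in-memory store illustrates the implicit-folder semantics; it is not ServiceStack's InMemoryVirtualPathProvider. Folders have no separate existence: a folder "exists" whenever some file lives under it. So writing `uploads/2015/cat.txt` implicitly creates `uploads` and `uploads/2015`, and deleting a folder removes everything beneath it. Paths are normalized without a leading `/`.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical in-memory sketch; not ServiceStack's InMemoryVirtualPathProvider.
public sealed class InMemoryFiles
{
    private readonly Dictionary<string, string> files = new Dictionary<string, string>(StringComparer.Ordinal);

    // Normalize to forward slashes with no leading "/".
    private static string Normalize(string path) => path.Replace('\\', '/').TrimStart('/');

    private static string FolderPrefix(string dirPath) => Normalize(dirPath).TrimEnd('/') + "/";

    // No folder bookkeeping: writing "a/b/c.txt" implicitly creates folders "a" and "a/b".
    public void WriteFile(string filePath, string textContents) => files[Normalize(filePath)] = textContents;

    public string ReadAllText(string filePath) =>
        files.TryGetValue(Normalize(filePath), out var text) ? text : null;

    public void DeleteFile(string filePath) => files.Remove(Normalize(filePath));

    public void DeleteFiles(IEnumerable<string> filePaths)
    {
        foreach (var filePath in filePaths)
            DeleteFile(filePath);
    }

    // A folder exists while at least one file lives somewhere beneath it.
    public bool DirectoryExists(string dirPath) =>
        files.Keys.Any(key => key.StartsWith(FolderPrefix(dirPath), StringComparison.Ordinal));

    // Deleting a folder deletes every file (and so every sub-folder) beneath it.
    public void DeleteFolder(string dirPath)
    {
        var prefix = FolderPrefix(dirPath);
        foreach (var key in files.Keys.Where(k => k.StartsWith(prefix, StringComparison.Ordinal)).ToList())
            files.Remove(key);
    }
}
```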

The new IVirtualFiles API is available in local FileSystem, In Memory and S3 Virtual path providers:

- FileSystemVirtualPathProvider

- InMemoryVirtualPathProvider

- S3VirtualPathProvider

Other read-only IVirtualPathProvider implementations include:

- ResourceVirtualPathProvider - .NET Embedded resources

- MultiVirtualPathProvider - Combination of multiple cascading Virtual Path Providers

All IVirtualFiles providers share the same VirtualPathProviderTests, which ensures consistent behavior. This makes it possible to swap between different file storage backends with simple configuration, as seen in the Imgur and REST Files examples.

VirtualFiles vs VirtualFileSources

As you'd typically only want uploaded files written to a single explicit File Storage provider, ServiceStack distinguishes between two kinds of file access. The existing read-only Virtual File Sources are used internally whenever a static file is requested. The new IVirtualFiles is maintained in a separate VirtualFiles property on IAppHost and the Service base class for easy accessibility:

    public interface IAppHost
    {
        // Read/Write Virtual FileSystem. Defaults to Local FileSystem.
        IVirtualFiles VirtualFiles { get; set; }

        // Cascading number of file sources, inc. Embedded Resources, File System, In Memory, S3.
        IVirtualPathProvider VirtualFileSources { get; set; }
    }

    public class Service : IService //ServiceStack's convenient concrete base class
    {
        //...
        public IVirtualFiles VirtualFiles { get; }
        public IVirtualPathProvider VirtualFileSources { get; }
    }

Internally ServiceStack only uses VirtualFileSources itself to serve static file requests.

The new IVirtualFiles is a clean abstraction your Services can bind to when saving uploaded files, and it can easily be substituted when you want to change file storage backends. If not specified, VirtualFiles defaults to your local filesystem at your host project's root directory.
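The read/write split can be sketched with plain dictionaries. Reads cascade through an ordered list of sources and return the first match, while uploads are saved to one explicit store. `FileHostSketch` and its members are hypothetical names. In particular, consulting the writable store first is an assumption made for this sketch, not a statement about ServiceStack's default ordering.

```csharp
using System.Collections.Generic;

// Hypothetical illustration: cascading read-only lookups vs a single write
// target. These names mimic the properties above but are not ServiceStack APIs.
public class FileHostSketch
{
    // Single read/write store, playing the role of VirtualFiles
    public Dictionary<string, string> VirtualFiles { get; } = new Dictionary<string, string>();

    // Ordered read sources, playing the role of VirtualFileSources
    public List<IReadOnlyDictionary<string, string>> VirtualFileSources { get; }
        = new List<IReadOnlyDictionary<string, string>>();

    public FileHostSketch(params IReadOnlyDictionary<string, string>[] extraSources)
    {
        // Assumption for this sketch: the writable store is consulted first,
        // followed by any extra sources (e.g. embedded resources) in order
        VirtualFileSources.Add(VirtualFiles);
        VirtualFileSources.AddRange(extraSources);
    }

    // Static file request: the first source containing the path wins
    public string GetStaticFile(string path)
    {
        foreach (var source in VirtualFileSources)
            if (source.TryGetValue(path, out var contents))
                return contents;
        return null;
    }

    // Saving an upload only ever touches the single write target
    public void SaveUpload(string path, string contents) => VirtualFiles[path] = contents;
}
```

Keeping writes on one explicit store means a save never lands in an embedded-resource or other read-only source by accident.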

Changes

To provide clear and predictable naming, some of the existing APIs were deprecated in favor of the new nomenclature:

- IAppHost.VirtualPathProvider deprecated, renamed to IAppHost.VirtualFileSources

- IAppHost.GetVirtualPathProviders() deprecated, renamed to IAppHost.GetVirtualFileSources()

- IWriteableVirtualPathProvider deprecated, renamed to IVirtualFiles

- IWriteableVirtualPathProvider.AddFile() deprecated, renamed to WriteFile()

- VirtualPath no longer returns paths prefixed with / and the VirtualPath of a Root directory is null. This affects Config.ScanSkipPaths, whose entries should no longer start with /, e.g: "node_modules/", "bin/", "obj/"

These old APIs have been marked [Obsolete] and will be removed in a future version. If you're using them, please upgrade to the newer APIs.

HttpResult

Custom Serialized Responses

The new IHttpResult.ResultScope API provides an opportunity to execute serialization within a custom scope. For example, it can be used to make the serialized response of ad hoc Services differ from the default global configuration with:

return new HttpResult(dto) {
    ResultScope = () => JsConfig.With(includeNullValues:true)
};

Which enables custom serialization behavior by performing the serialization within the custom scope, equivalent to:

using (JsConfig.With(includeNullValues:true))
{
    var customSerializedResponse = Serialize(dto);
}
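Conceptually, a ResultScope is a `Func<IDisposable>` that's opened just before serialization and disposed right after, which restores the previous setting. The sketch below models that with a hypothetical thread-static `ConfigSketch.With()` and a toy serializer. None of these types are ServiceStack APIs.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for a global serializer setting that can be
// overridden for the current thread; not ServiceStack's JsConfig.
public static class ConfigSketch
{
    [ThreadStatic] private static bool? includeNullValues;

    // Global default is false unless a scope overrides it
    public static bool IncludeNullValues => includeNullValues ?? false;

    // Opens a scope; disposing it restores whatever was set before
    public static IDisposable With(bool includeNulls)
    {
        var previous = includeNullValues;
        includeNullValues = includeNulls;
        return new Restore(() => includeNullValues = previous);
    }

    private sealed class Restore : IDisposable
    {
        private readonly Action onDispose;
        public Restore(Action onDispose) => this.onDispose = onDispose;
        public void Dispose() => onDispose();
    }
}

public static class SerializerSketch
{
    // Toy serializer: writes string properties, skipping nulls unless enabled
    public static string Serialize(IDictionary<string, string> dto) =>
        "{" + string.Join(",", dto
            .Where(kv => kv.Value != null || ConfigSketch.IncludeNullValues)
            .Select(kv => $"\"{kv.Key}\":" + (kv.Value == null ? "null" : $"\"{kv.Value}\""))) + "}";

    // Roughly what a result with a ResultScope does: serialize inside the scope
    public static string SerializeWithin(Func<IDisposable> resultScope, IDictionary<string, string> dto)
    {
        if (resultScope == null) return Serialize(dto);
        using (resultScope())
            return Serialize(dto);
    }
}
```

Because the scope restores the previous value on dispose, the override only affects the one response being serialized.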

Cookies

New cookies can be added to HttpResult’s new IHttpResult.Cookies collection.

VirtualFile downloads

A new constructor overload lets you return an IVirtualFile download with:

return new HttpResult(VirtualFiles.GetFile(targetPath), asAttachment: true);

HttpError changes

The HttpError constructor that accepts a HttpStatusCode and a custom string description, e.g:

return new HttpError(HttpStatusCode.NotFound, "Custom Description");

return HttpError.NotFound("Custom Description");

Now returns the HttpStatusCode name as the ErrorCode instead of duplicating the error message, so the populated ResponseStatus for the above custom HttpError now contains:

ResponseStatus {
    ErrorCode = "NotFound", // previous: Custom Description
    Message = "Custom Description"
}
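The new rule amounts to deriving ErrorCode from the `HttpStatusCode` enum name. Here is a minimal sketch that uses a hypothetical `ResponseStatusSketch` type in place of ServiceStack's ResponseStatus:

```csharp
using System.Net;

// Hypothetical type illustrating the new ErrorCode rule; not ServiceStack's ResponseStatus
public class ResponseStatusSketch
{
    public string ErrorCode { get; set; }
    public string Message { get; set; }

    public static ResponseStatusSketch From(HttpStatusCode status, string description) =>
        new ResponseStatusSketch
        {
            ErrorCode = status.ToString(), // enum name, e.g. "NotFound"
            Message = description,         // custom description is kept as the Message
        };
}
```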

AutoQuery

We've added new %! and %NotEqualTo implicit query conventions, which are now available on all AutoQuery Services:

/rockstars?age!=27

/rockstars?AgeNotEqualTo=27
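A query string like `age!=27` is split on `=`, so the field arrives as `age!`. A hypothetical matcher for the two conventions might look like the following. It is an illustration, not AutoQuery's implementation, and it inlines the value only to make the output readable.

```csharp
using System;

// Hypothetical matcher for the "%!" and "%NotEqualTo" conventions;
// not AutoQuery's actual implementation.
public static class NotEqualConventionSketch
{
    public static string ToSqlFilter(string key, string value)
    {
        string field = null;
        if (key.EndsWith("!", StringComparison.Ordinal))            // "age!" from "age!=27"
            field = key.Substring(0, key.Length - 1);
        else if (key.EndsWith("NotEqualTo", StringComparison.OrdinalIgnoreCase))
            field = key.Substring(0, key.Length - "NotEqualTo".Length);

        // Not one of the new conventions
        if (string.IsNullOrEmpty(field)) return null;

        // Real code would bind value as a parameter; inlined here for readability
        return $"{field} <> {value}";
    }
}
```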

Razor

New APIs for explicitly refreshing a page:

HostContext.GetPlugin<RazorFormat>().RefreshPage(file.VirtualPath);

Also available in Markdown Format:

HostContext.GetPlugin<MarkdownFormat>().RefreshPage(file.VirtualPath);

New Error APIs in base Razor Views for inspecting error responses:

- GetErrorStatus() - get the populated error ResponseStatus

- GetErrorHtml() - get error info marked up in a structured HTML fragment

Both APIs return null if there were no errors.

Improved support for Xamarin.Mac

The ServiceStack.Client PCL provider for Xamarin.Mac now has an explicit reference to Xamarin.Mac.dll, which allows it to work in projects using the Link SDK Assemblies Only setting.

Redis

The StoreAsHash behavior of converting a POCO into a string Dictionary can now be overridden to control how POCOs are stored in a Redis Hash type, e.g:

RedisClient.ConvertToHashFn = o =>
{
    var map = new Dictionary<string, string>();
    o.ToObjectDictionary().Each(x => map[x.Key] = (x.Value ?? "").ToJsv());
    return map;
};

Redis.StoreAsHash(dto); //Uses above implementation
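To show what such a converter does without ServiceStack.Text, here's a BCL-only analogue that swaps `ToObjectDictionary()` and `ToJsv()` for reflection and invariant-culture `Convert.ToString()`. `HashConverterSketch.ToHash` is a hypothetical helper, and its string encoding differs from JSV for complex values.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Reflection;

// Hypothetical BCL-only analogue of a custom ConvertToHashFn: flattens a
// POCO's public instance properties into the string map stored in a Redis Hash.
public static class HashConverterSketch
{
    public static Dictionary<string, string> ToHash(object o)
    {
        var map = new Dictionary<string, string>();
        foreach (var prop in o.GetType().GetProperties(BindingFlags.Public | BindingFlags.Instance))
        {
            // Skip indexers and write-only properties
            if (!prop.CanRead || prop.GetIndexParameters().Length > 0) continue;

            var value = prop.GetValue(o);
            // Nulls become "" (like `x.Value ?? ""` above); other values use
            // invariant-culture text instead of ServiceStack's JSV format
            map[prop.Name] = value == null ? "" : Convert.ToString(value, CultureInfo.InvariantCulture);
        }
        return map;
    }
}
```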

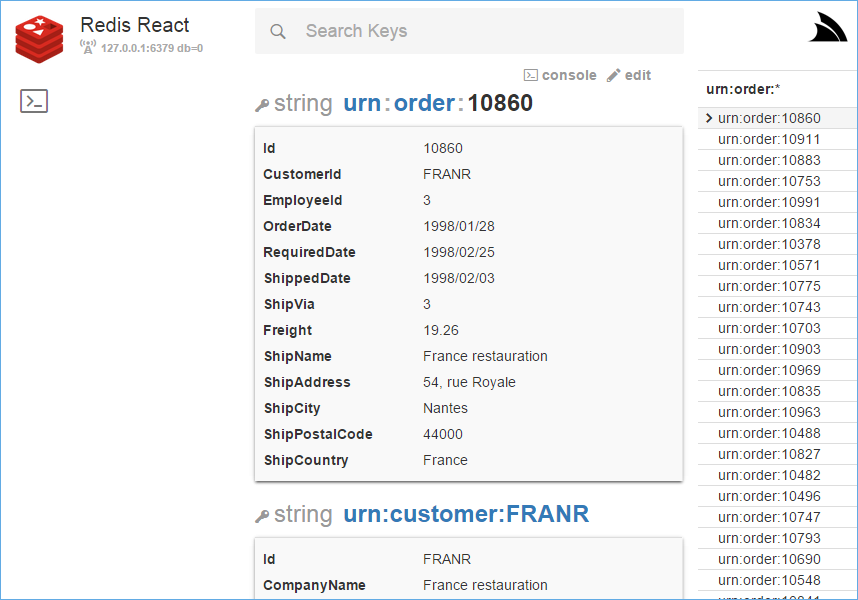

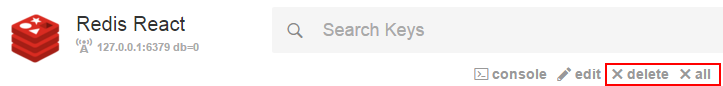

RedisReact Browser Updates

We've continued to add enhancements to Redis React Browser based on your feedback:

Delete Actions

Delete links have been added to each key. Use the delete link to delete a single key, or the all link to delete all related keys currently being displayed.

Expanded Prompt

Keys can now be edited in a larger text area which uses the full height of the screen real-estate available - this is now the default view for editing a key. Click the collapse icon when finished to return to the console for execution.

All Redis Console commands can now be edited in the expanded text area by clicking the Expand icon on the right of the console.

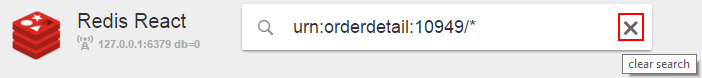

Clear Search

Use the X icon in the search box to quickly clear the current search.

OrmLite

System Variables and Default Values

To provide richer support for non-standard default values, each RDBMS Dialect Provider now contains an OrmLiteDialectProvider.Variables placeholder dictionary for storing common, but non-standard, RDBMS functionality. We can use this to declaratively define non-standard default values that work across all supported RDBMSs, like automatically populating a column with the RDBMS UTC Date when it's inserted with a default(T) value:

public class Poco
{
    [Default(OrmLiteVariables.SystemUtc)]  //= {SYSTEM_UTC}
    public DateTime CreatedTimeUtc { get; set; }
}

OrmLite variables need to be surrounded with {} braces to identify them as placeholder variables, e.g. {SYSTEM_UTC}.
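Resolving a placeholder is essentially a per-dialect dictionary lookup. The sketch below uses a hypothetical `DialectSketch` class. A SQL Server dialect might map `SYSTEM_UTC` to `getutcdate()` and a SQLite dialect to `CURRENT_TIMESTAMP`, so the same attribute resolves differently per RDBMS. Those mappings are illustrative assumptions, not necessarily what OrmLite emits.

```csharp
using System.Collections.Generic;
using System.Text.RegularExpressions;

// Hypothetical per-dialect placeholder resolution; not OrmLite's implementation.
public class DialectSketch
{
    // e.g. Variables["SYSTEM_UTC"] = "getutcdate()" for a SQL Server-like dialect
    public Dictionary<string, string> Variables { get; } = new Dictionary<string, string>();

    // Replace a "{NAME}" default with this dialect's SQL; leave other defaults as-is
    public string ResolveDefault(string defaultValue)
    {
        var match = Regex.Match(defaultValue, @"^\{(\w+)\}$");
        return match.Success && Variables.TryGetValue(match.Groups[1].Value, out var sql)
            ? sql
            : defaultValue;
    }
}
```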

Parameterized SqlExpressions

A preview of a new parameterized SqlExpression provider is now available for SQL Server and Oracle. Opt in with:

OrmLiteConfig.UseParameterizeSqlExpressions = true;

When enabled, OrmLite uses Typed SQL Expressions inheriting from ParameterizedSqlExpression<T>, which convert most LINQ expression arguments into parameterized variables instead of inline SQL Literals for improved RDBMS query profiling and performance. Note: this is a beta feature that's subject to change.
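The difference is easiest to see in the generated SQL. In this hypothetical sketch (not OrmLite's ParameterizedSqlExpression<T>), the parameterized form produces the same SQL text for every value. That lets the RDBMS reuse a cached query plan and lets profilers group identical queries. The `@0` naming follows SQL Server conventions; Oracle uses a `:` prefix instead.

```csharp
using System.Collections.Generic;

// Hypothetical contrast between an inline literal and a parameterized filter
// for the same predicate; not OrmLite's ParameterizedSqlExpression<T>.
public static class ParamSketch
{
    // SQL text changes with every value
    public static string InlineWhere(string column, int value) =>
        $"WHERE {column} > {value}";

    // SQL text stays constant; the value travels separately as a parameter
    public static string ParameterizedWhere(string column, object value, List<object> parameters)
    {
        parameters.Add(value);
        // "@0", "@1", ... name each argument by its position
        return $"WHERE {column} > @{parameters.Count - 1}";
    }
}
```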

Custom Load References

Johann Klemmack added support for selectively specifying which references you want to load, e.g:

var customerWithAddress = db.LoadSingleById<Customer>(customer.Id, include: new[] { "PrimaryAddress" });

//Alternative

var customerWithAddress = db.LoadSingleById<Customer>(customer.Id, include: x => new { x.PrimaryAddress });
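The typed `include: x => new { x.PrimaryAddress }` form can be reduced to the same string names as the array form by reading the anonymous type's members from the expression tree. This hypothetical sketch uses `System.Linq.Expressions` and is not OrmLite's implementation. The `Customer` and `Address` POCOs are illustrative.

```csharp
using System;
using System.Linq;
using System.Linq.Expressions;

// Hypothetical sketch: reduce a typed include expression to property names.
public static class IncludeSketch
{
    public static string[] GetFieldNames<T>(Expression<Func<T, object>> include)
    {
        // Value-type members are boxed to object via a Convert node; unwrap it
        var body = include.Body is UnaryExpression unary && unary.NodeType == ExpressionType.Convert
            ? unary.Operand
            : include.Body;

        // x => new { x.A, x.B }: the anonymous type's constructor call lists
        // the projected members
        if (body is NewExpression newExpr && newExpr.Members != null)
            return newExpr.Members.Select(m => m.Name).ToArray();

        // x => x.A: a single member access
        if (body is MemberExpression member)
            return new[] { member.Member.Name };

        throw new ArgumentException("Unsupported include expression", nameof(include));
    }
}

// Illustrative POCOs; only their property names matter here
public class Address { public string Street { get; set; } }

public class Customer
{
    public int Id { get; set; }
    public Address PrimaryAddress { get; set; }
    public Address WorkAddress { get; set; }
}
```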

T4 Templates

OrmLite's T4 templates for generating POCO types from an existing database schema now have improved support for generating stored procedures, thanks to Richard Safier.

ServiceStack.Text

The new ConvertTo<T> on JsonObject helps dynamically parse JSON into typed objects, e.g. you can dynamically parse the following OData JSON response:

{
    "odata.metadata":"...",
    "value":[
        {
            "odata.id":"...",
            "QuotaPolicy@odata.navigationLinkUrl":"...",
            "#SetQuotaPolicyFromLevel": { "target":"..." },
            "Id":"1111",
            "UserName":"testuser",
            "DisplayName":"testuser Large",
            "Email":"testuser@testuser.ca"
        }
    ]
}

You do this by navigating down the JSON object graph with JsonObject, then using ConvertTo<T> to convert each unstructured JSON object into a concrete POCO Type, e.g:

var users = JsonObject.Parse(json)
    .ArrayObjects("value")
    .Map(x => x.ConvertTo<User>());
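For comparison, here's the same navigate-then-bind approach using `System.Text.Json` (available from .NET Core 3.0) rather than ServiceStack.Text. It's a swapped-in technique shown only to illustrate the idea. `ODataSketch.ParseUsers` is a hypothetical helper, and the `User` properties are assumed to match the JSON names shown above.

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Text.Json;

// Assumed target POCO; extra JSON properties such as "odata.id" are ignored
public class User
{
    public string Id { get; set; }
    public string UserName { get; set; }
    public string DisplayName { get; set; }
    public string Email { get; set; }
}

public static class ODataSketch
{
    // Navigate to the "value" array, then bind each element to a User
    public static List<User> ParseUsers(string json)
    {
        using (var doc = JsonDocument.Parse(json))
        {
            return doc.RootElement.GetProperty("value")
                .EnumerateArray()
                .Select(e => JsonSerializer.Deserialize<User>(e.GetRawText()))
                .ToList(); // materialize before the document is disposed
        }
    }
}
```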

Minor Features

- Server Events /event-subscribers route now returns all channel subscribers

- PATCH method is allowed in CORS Feature by default

- Swagger Summary for all services under a particular route can be specified in the SwaggerFeature.RouteSummary Dictionary

- The requested FacebookAuthProvider.Fields can now be customized with oauth.facebook.Fields

- Added Swift support for TreatTypesAsStrings for returning specific .NET Types as Strings

- New IAppSettings.GetAll() API on all AppSetting sources fetches all App config in a single call

- ServiceStackVS updated with ATS exception for React Desktop OSX Apps

- External NuGet packages updated to their latest stable version

New Signed Packages

- ServiceStack.Mvc.Signed

- ServiceStack.Authentication.OAuth2.Signed

v4.0.48 Issues

TypeScript missing BaseUrl

The TypeScript Add ServiceStack Reference feature was missing the BaseUrl in its header comments, preventing updates. This has been resolved in this commit, which is now available in our pre-release v4.0.49 NuGet packages.

ServiceStack.Mvc incorrectly references ServiceStack.Signed

The ServiceStack.Mvc project had an invalid dependency on ServiceStack.Signed, which has been resolved in this commit, now available in our pre-release v4.0.49 NuGet packages.

You can workaround this by manually removing the ServiceStack.Signed packages and adding the ServiceStack packages instead.

Config.ScanSkipPaths not ignoring folders

The change removing / prefixes from Virtual Paths meant folders listed in Config.ScanSkipPaths were no longer being ignored. It's important that node.js-based Single Page App templates ignore node_modules/, since scanning it throws an error on StartUp when it reaches paths longer than the Windows 260 character path limit. This is fixed in the pre-release v4.0.49 NuGet packages.

You can also work around this issue in v4.0.48 by manually removing the prefix from Config.ScanSkipPaths folders in AppHost.Configure() with:

SetConfig(new HostConfig { ... });

for (int i = 0; i < Config.ScanSkipPaths.Count; i++)
{
    Config.ScanSkipPaths[i] = Config.ScanSkipPaths[i].TrimStart('/');
}