This release includes support for .NET Core 2.1, a number of performance improvements with internals rewritten to use .NET's new Span<T> and Memory<T> Types, all SPA Templates upgraded to utilize the latest CLI projects, new simpler zero-configuration Parcel SPA Templates, exciting enhanced capabilities added to #Script providing a fun and productive alternative to Razor, more capable and versatile Web Apps, a minimal multi-user .NET Core Blog Web App developed in a real-time live development workflow, enhancements to Swift, Dart and TypeScript end-to-end Typed integrations, support for the latest 3rd Party dependencies, and lots more.

Table of Contents

- Performance Improvements

- .NET Core Memory Provider Abstraction

- MemoryStream Pooling

- Support for .NET Core 2.1

- JavaScript Expressions

- Template Blocks

- Page Based Routing

- Dynamic Page Routes

- Init Pages

- Buffered View Pages

- Dynamic Sharp APIs

- Order Report Example

- SQL Studio Example

- Customizable Auth Providers

- Customizable Markdown Providers

- Rich Template Config Arguments

- New Parcel Web App Template

- Support for Npgsql 4.0

- Support for MySql.Data 8.0.12

- Bitwise operators

- Extended Select SqlExpression APIs

- OrmLite variables in CustomField

- Update from Object Dictionary

- Custom Index Name

- Array parameters in ExecuteSql

- Async overloads in Service Clients

- BearerToken in Request DTOs

- New FallbackHandlers filters

- Multitenancy

- Authentication

- Serilog

Spanified ServiceStack

Major rework was performed across the ServiceStack.Text and ServiceStack.Common foundational libraries to replace their internal usage of StringSegment with .NET's new high-performance Span and Memory Type primitives, which are now used for all JSON/JSV deserialization and many other String utilities.

The new Span<T> and ReadOnlyMemory<char> Types are the successors to StringSegment. Like StringSegment they're allocation-free, but Span<T> also enjoys additional runtime support as a JIT intrinsic for improved performance.

This change was primarily an internal refactor so there shouldn't be any user visible differences except for the addition of the System.Memory dependency which contains the new Memory types. As a general rule we're averse to adopting new dependencies but the added performance of these new primitives makes it a required dependency for maintaining high-performance libraries.

StringSegment extensions replaced with Span

The additional System.Memory dependency replaces Microsoft.Extensions.Primitives, which contained the StringSegment Type. Its removal would be a breaking change if you were using our StringSegment extension methods, but it's easily resolved as all existing extension methods are also available on ReadOnlySpan<char>, which you can access by calling AsSpan() to return a string as a ReadOnlySpan<char>, e.g:

var name = filename.AsSpan().LeftPart('.');

As ReadOnlySpan<char> is now the preferred Type for allocation-free string utils in .NET, we recommend updating your code to use the newer ReadOnlySpan<char> and ReadOnlyMemory<char> Types as well. But if needed, the existing StringSegment polyfill and extension methods can be dropped into your project to retain source code compatibility.
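TypeScript has no Span<T>, but a rough analogy helps show why calls like AsSpan().LeftPart('.') avoid allocating new strings. In the sketch below, StringView is a hypothetical class (not part of ServiceStack or any library): a view is just the original string plus a start index and length, so narrowing it never copies characters until toString() is called. Unlike a .NET Span<T>, which is a stack-only struct, each view here is still a tiny heap object. The leftPart() behavior is assumed to match ServiceStack's LeftPart(): the text before the first occurrence of the needle, or the whole input when the needle is absent.

```typescript
// Hypothetical analogy for ReadOnlySpan<char>: a read-only window over an existing string.
class StringView {
  constructor(
    readonly source: string,
    readonly start = 0,
    readonly length = source.length - start,
  ) {}

  // Index of ch relative to the start of this view, or -1 if absent.
  indexOf(ch: string): number {
    for (let i = 0; i < this.length; i++) {
      if (this.source[this.start + i] === ch) return i;
    }
    return -1;
  }

  // Narrow the window; the underlying string is shared, not copied.
  slice(start: number, length = this.length - start): StringView {
    return new StringView(this.source, this.start + start, length);
  }

  // Text before the first ch, or the whole view when ch isn't found.
  leftPart(ch: string): StringView {
    const pos = this.indexOf(ch);
    return pos === -1 ? this : this.slice(0, pos);
  }

  // Materializing the view is the only step that copies characters.
  toString(): string {
    return this.source.substring(this.start, this.start + this.length);
  }
}

const file = new StringView("report.final.pdf");
console.log(file.leftPart(".").toString()); // report
```

Chained calls such as file.slice(7).leftPart(".") keep pointing at the same source string, which is the property that makes span-based string utilities cheap.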

New Span APIs

Additional public APIs are available to deserialize directly from a ReadOnlySpan<char> source:

```csharp
//JSON
JsonSerializer.DeserializeFromSpan<T>(ReadOnlySpan<char> json)
JsonSerializer.DeserializeFromSpan(Type type, ReadOnlySpan<char> json)

//JSV
TypeSerializer.DeserializeFromSpan<T>(ReadOnlySpan<char> jsv)
TypeSerializer.DeserializeFromSpan(Type type, ReadOnlySpan<char> jsv)

//Extension methods
T FromJsonSpan<T>(this ReadOnlySpan<char> json)
T FromJsvSpan<T>(this ReadOnlySpan<char> jsv)
```

One place where the conversion resulted in a user-visible change is in AutoQuery Response Filters, where the parsed Commands now have ReadOnlyMemory<char> properties instead of StringSegment.

Performance Improvements

To provide some insight into the performance that can be expected, we've taken the most recently published .NET Serializer benchmarks created by Alois Kraus and upgraded the ServiceStack dependency to the latest version.

These are the results of BookShelf JSON Serialization performance for ServiceStack.Text vs JSON.NET (run on an iMac 5K):

.NET Core Benchmarks

| Serializer | Objects | Serialize time (s) | Deserialize time (s) | Size (bytes) | File Version |
|---|---|---|---|---|---|
| ServiceStack | 1 | 0.000 | 0.000 | 69 | 5.0.0.0 |
| ServiceStack | 1 | 0.000 | 0.000 | 69 | 5.0.0.0 |
| ServiceStack | 10 | 0.000 | 0.000 | 305 | 5.0.0.0 |
| ServiceStack | 100 | 0.000 | 0.000 | 2827 | 5.0.0.0 |
| ServiceStack | 500 | 0.000 | 0.000 | 14827 | 5.0.0.0 |
| ServiceStack | 1000 | 0.000 | 0.001 | 29829 | 5.0.0.0 |
| ServiceStack | 10000 | 0.004 | 0.006 | 317831 | 5.0.0.0 |
| ServiceStack | 50000 | 0.018 | 0.031 | 1677831 | 5.0.0.0 |
| ServiceStack | 100000 | 0.036 | 0.068 | 3377833 | 5.0.0.0 |
| ServiceStack | 200000 | 0.073 | 0.146 | 6977833 | 5.0.0.0 |
| ServiceStack | 500000 | 0.181 | 0.374 | 17777833 | 5.0.0.0 |
| ServiceStack | 800000 | 0.290 | 0.606 | 28577833 | 5.0.0.0 |
| ServiceStack | 1000000 | 0.363 | 0.756 | 35777835 | 5.0.0.0 |
| JsonNet | 1 | 0.000 | 0.000 | 69 | 11.0.2.21924 |
| JsonNet | 1 | 0.000 | 0.000 | 69 | 11.0.2.21924 |
| JsonNet | 10 | 0.000 | 0.000 | 305 | 11.0.2.21924 |
| JsonNet | 100 | 0.000 | 0.000 | 2827 | 11.0.2.21924 |
| JsonNet | 500 | 0.000 | 0.000 | 14827 | 11.0.2.21924 |
| JsonNet | 1000 | 0.001 | 0.001 | 29829 | 11.0.2.21924 |
| JsonNet | 10000 | 0.005 | 0.006 | 317831 | 11.0.2.21924 |
| JsonNet | 50000 | 0.025 | 0.035 | 1677831 | 11.0.2.21924 |
| JsonNet | 100000 | 0.051 | 0.073 | 3377833 | 11.0.2.21924 |
| JsonNet | 200000 | 0.105 | 0.153 | 6977833 | 11.0.2.21924 |
| JsonNet | 500000 | 0.253 | 0.404 | 17777833 | 11.0.2.21924 |
| JsonNet | 800000 | 0.400 | 0.726 | 28577833 | 11.0.2.21924 |
| JsonNet | 1000000 | 0.520 | 0.920 | 35777835 | 11.0.2.21924 |

This shows ServiceStack.Text being ~30% faster for serialization and ~17.8% faster for deserialization with 1M objects.

.NET v4.7.1 Benchmarks

| Serializer | Objects | Serialize time (s) | Deserialize time (s) | Size (bytes) | File Version |
|---|---|---|---|---|---|
| ServiceStack | 1 | 0.000 | 0.000 | 69 | 5.0.0.0 |
| ServiceStack | 1 | 0.000 | 0.000 | 69 | 5.0.0.0 |
| ServiceStack | 10 | 0.000 | 0.000 | 305 | 5.0.0.0 |
| ServiceStack | 100 | 0.000 | 0.000 | 2827 | 5.0.0.0 |
| ServiceStack | 500 | 0.000 | 0.000 | 14827 | 5.0.0.0 |
| ServiceStack | 1000 | 0.000 | 0.001 | 29829 | 5.0.0.0 |
| ServiceStack | 10000 | 0.004 | 0.007 | 317831 | 5.0.0.0 |
| ServiceStack | 50000 | 0.021 | 0.035 | 1677831 | 5.0.0.0 |
| ServiceStack | 100000 | 0.043 | 0.072 | 3377833 | 5.0.0.0 |
| ServiceStack | 200000 | 0.087 | 0.161 | 6977833 | 5.0.0.0 |
| ServiceStack | 500000 | 0.208 | 0.413 | 17777833 | 5.0.0.0 |
| ServiceStack | 800000 | 0.340 | 0.669 | 28577833 | 5.0.0.0 |
| ServiceStack | 1000000 | 0.417 | 0.836 | 35777835 | 5.0.0.0 |
| JsonNet | 1 | 0.000 | 0.000 | 69 | 11.0.2.21924 |
| JsonNet | 1 | 0.000 | 0.000 | 69 | 11.0.2.21924 |
| JsonNet | 10 | 0.000 | 0.000 | 305 | 11.0.2.21924 |
| JsonNet | 100 | 0.000 | 0.000 | 2827 | 11.0.2.21924 |
| JsonNet | 500 | 0.000 | 0.000 | 14827 | 11.0.2.21924 |
| JsonNet | 1000 | 0.001 | 0.001 | 29829 | 11.0.2.21924 |
| JsonNet | 10000 | 0.005 | 0.007 | 317831 | 11.0.2.21924 |
| JsonNet | 50000 | 0.025 | 0.038 | 1677831 | 11.0.2.21924 |
| JsonNet | 100000 | 0.050 | 0.078 | 3377833 | 11.0.2.21924 |
| JsonNet | 200000 | 0.100 | 0.170 | 6977833 | 11.0.2.21924 |
| JsonNet | 500000 | 0.250 | 0.439 | 17777833 | 11.0.2.21924 |
| JsonNet | 800000 | 0.402 | 0.777 | 28577833 | 11.0.2.21924 |
| JsonNet | 1000000 | 0.503 | 0.992 | 35777835 | 11.0.2.21924 |

Under .NET Framework, ServiceStack.Text is ~17% faster for serialization and ~15.7% faster for deserialization with 1M objects.

The benchmarks also show .NET Core (v2.0.7) is ~12.9% faster for serialization and ~9.5% faster for deserialization than .NET Framework (v4.7.3110.0).
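The quoted percentages can be reproduced from the 1,000,000-object rows of the two benchmark tables. Here "X% faster" is read as X% less elapsed time than the comparison, i.e. (baseline - time) / baseline. That interpretation is our assumption, but it matches every quoted figure; the last one comes out at 9.6% when rounded to one decimal place, in line with the ~9.5% quoted.

```typescript
// Seconds taken for 1,000,000 objects, copied from the two benchmark tables.
const results = {
  netcore: {
    ServiceStack: { serialize: 0.363, deserialize: 0.756 },
    JsonNet: { serialize: 0.52, deserialize: 0.92 },
  },
  netfx: {
    ServiceStack: { serialize: 0.417, deserialize: 0.836 },
    JsonNet: { serialize: 0.503, deserialize: 0.992 },
  },
};

// "X% faster" read as: X% less elapsed time than the baseline.
function percentFaster(time: number, baseline: number): number {
  return ((baseline - time) / baseline) * 100;
}

function speedups(): Record<string, number> {
  const { netcore: core, netfx: fx } = results;
  return {
    coreSerialize: percentFaster(core.ServiceStack.serialize, core.JsonNet.serialize),
    coreDeserialize: percentFaster(core.ServiceStack.deserialize, core.JsonNet.deserialize),
    fxSerialize: percentFaster(fx.ServiceStack.serialize, fx.JsonNet.serialize),
    fxDeserialize: percentFaster(fx.ServiceStack.deserialize, fx.JsonNet.deserialize),
    // ServiceStack on .NET Core compared with ServiceStack on .NET Framework
    coreVsFxSerialize: percentFaster(core.ServiceStack.serialize, fx.ServiceStack.serialize),
    coreVsFxDeserialize: percentFaster(core.ServiceStack.deserialize, fx.ServiceStack.deserialize),
  };
}

for (const [name, pct] of Object.entries(speedups())) {
  console.log(`${name}: ${pct.toFixed(1)}%`);
}
// coreSerialize: 30.2%, coreDeserialize: 17.8%, fxSerialize: 17.1%,
// fxDeserialize: 15.7%, coreVsFxSerialize: 12.9%, coreVsFxDeserialize: 9.6%
```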

.NET Core Memory Provider Abstraction

Whilst the Span<T> Type itself is available to .NET Standard libraries, the base-class library APIs which accept Span<T> types directly are not, making them unavailable to .NET Standard 2.0 or .NET Framework builds. To make use of the allocation-free alternatives in .NET Core 2.1, a MemoryProvider abstraction was added to allow using the most efficient APIs available on each platform. This also required creating .NET Core 2.1 platform-specific builds of ServiceStack.Text containing bindings to the native APIs, which will continue to be published going forward.

Our micro benchmarks are still showing that many of the new Span<T> overloads in the base class library, like int.Parse(ReadOnlySpan<char>), are not yet as performant as our own non-allocating implementations, which will continue to be used until more performant APIs are available. But other APIs, like writing to Streams or UTF-8 conversions, now use .NET Core's native implementations.
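The MemoryProvider idea can be sketched in TypeScript as one interface with platform-specific implementations chosen once at startup. Every name below (MemoryProvider, PortableMemoryProvider, NativeMemoryProvider) is hypothetical and doesn't reflect ServiceStack.Text's actual API. TextEncoder plays the role of a native platform API, while the hand-written integer parser is kept by both providers, mirroring the point that some native overloads weren't yet faster than custom code.

```typescript
// Hypothetical provider abstraction; names are illustrative, not ServiceStack.Text's API.
interface MemoryProvider {
  // Parse an integer from s[start, end) without first copying it into a substring.
  parseInt(s: string, start: number, end: number): number;
  // Encode a string as UTF-8 bytes.
  toUtf8(s: string): Uint8Array;
}

// Hand-written parser used on every platform.
function parseIntInPlace(s: string, start: number, end: number): number {
  let i = start;
  const negative = s[i] === "-";
  if (negative) i++;
  if (i >= end) throw new Error("expected digits");
  let value = 0;
  for (; i < end; i++) {
    const digit = s.charCodeAt(i) - 48; // '0' is char code 48
    if (digit < 0 || digit > 9) throw new Error(`invalid digit at index ${i}`);
    value = value * 10 + digit;
  }
  return negative ? -value : value;
}

// Portable fallback: manual UTF-8 encoding for platforms without a native encoder.
function utf8EncodeManual(s: string): Uint8Array {
  const bytes: number[] = [];
  for (const ch of s) { // iterates by code point, so surrogate pairs arrive combined
    const cp = ch.codePointAt(0)!;
    if (cp < 0x80) bytes.push(cp);
    else if (cp < 0x800) bytes.push(0xc0 | (cp >> 6), 0x80 | (cp & 0x3f));
    else if (cp < 0x10000)
      bytes.push(0xe0 | (cp >> 12), 0x80 | ((cp >> 6) & 0x3f), 0x80 | (cp & 0x3f));
    else
      bytes.push(0xf0 | (cp >> 18), 0x80 | ((cp >> 12) & 0x3f),
        0x80 | ((cp >> 6) & 0x3f), 0x80 | (cp & 0x3f));
  }
  return Uint8Array.from(bytes);
}

class PortableMemoryProvider implements MemoryProvider {
  parseInt(s: string, start: number, end: number): number { return parseIntInPlace(s, start, end); }
  toUtf8(s: string): Uint8Array { return utf8EncodeManual(s); }
}

// "Native" provider: delegates UTF-8 encoding to the platform's built-in encoder.
class NativeMemoryProvider extends PortableMemoryProvider {
  private readonly encoder = new TextEncoder();
  toUtf8(s: string): Uint8Array { return this.encoder.encode(s); }
}

// Chosen once at startup, analogous to shipping a platform-specific build.
const memoryProvider: MemoryProvider =
  typeof TextEncoder !== "undefined" ? new NativeMemoryProvider() : new PortableMemoryProvider();

console.log(memoryProvider.parseInt("id=-42;", 3, 6)); // -42
```

Callers only ever see the MemoryProvider interface, so swapping an implementation in when a faster native API becomes available doesn't touch calling code. For well-formed strings both encoders produce identical bytes.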

MemoryStream Pooling

Bing's RecyclableMemoryStream for pooling Memory Streams, previously only enabled in .NET Core, is now also enabled by default in .NET Framework.

It's used everywhere a MemoryStream is needed in ServiceStack, except for a few identified places where it was found to be incompatible, like in .NET's Compression APIs.

We haven't been notified of any, but if this change results in any issues, MemoryStream pooling can be disabled with:

SetConfig(new HostConfig { EnableOptimizations = false })

If this does resolve any issues, please let us know so we can resolve them permanently without disabling any other optimizations.
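Pooling works by handing out previously used buffers instead of allocating new ones. The TypeScript sketch below models only that rent/release idea plus an on/off switch analogous to disabling pooling. BufferPool is a hypothetical name and the sizes are illustrative; real implementations such as RecyclableMemoryStream manage several pools and far more bookkeeping than shown here.

```typescript
// Hypothetical fixed-size buffer pool illustrating the rent/release idea behind pooled streams.
class BufferPool {
  private readonly free: Uint8Array[] = [];

  constructor(
    private readonly blockSize = 64 * 1024, // illustrative size, not a library default
    private readonly maxPooled = 16,        // cap so an idle pool can't grow without bound
    public enabled = true,                  // false = always allocate, never reuse
  ) {}

  rent(): Uint8Array {
    if (this.enabled) {
      const pooled = this.free.pop();
      if (pooled) return pooled;
    }
    return new Uint8Array(this.blockSize);
  }

  release(buffer: Uint8Array): void {
    // Drop buffers we can't or shouldn't reuse and let the GC reclaim them.
    if (!this.enabled || buffer.length !== this.blockSize || this.free.length >= this.maxPooled) return;
    buffer.fill(0); // clear old contents so data doesn't leak to the next renter
    this.free.push(buffer);
  }

  get pooledCount(): number {
    return this.free.length;
  }
}

const pool = new BufferPool(16);
const first = pool.rent();
pool.release(first);
console.log(pool.rent() === first); // true: the released buffer was reused
```

The enabled flag illustrates why a pooling bug can be worked around with a single switch: with it off, every rent() allocates and release() becomes a no-op.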

Support for UWP .NET Native

AOT Environments like UWP's .NET Native will fail at both build and runtime if Reflection.Emit symbols are referenced, even if they're not used. As we've started publishing .NET Core builds of ServiceStack.Text, we've decided to remove dependencies on Reflection.Emit from the .NET Standard 2.0 builds so they remain as portable as possible on .NET Standard 2.0 supported platforms like UWP/iOS/Android, which now fall back to using Compiled Expressions.

To retain maximum performance Reflection.Emit is still enabled in .NET Core and .NET Framework builds.

Xamarin.iOS requires VS.NET 15.8

In order to be able to use the latest ServiceStack Service Clients in Xamarin platforms you'll need to upgrade to the latest Visual Studio 15.8 which resolves issues from using System.Memory in Xamarin.

The HelloMobile project has also upgraded its UWP, iOS, Android and Xamarin.Forms projects to use VS.NET 15.8 project templates.

Support for .NET Core 2.1

Following Microsoft's LTS policy for .NET Core, we're also moving to .NET Core 2.1 for running ServiceStack. Most packages publish .NET Standard 2.0 builds so they remain unaffected, except for ServiceStack.Kestrel which has been upgraded to reference .NET Core 2.1 packages.

In addition, all our Test projects, .NET Core Templates, .NET Core Web Apps, etc. have been upgraded to .NET Core 2.1.

As .NET Core 2.1 is the first LTS release of .NET Core 2 and soon to be the only supported version, we recommend everyone upgrade when possible.

.NET Core Templates

Previously all of ServiceStack's Webpack SPA Templates used a similar Webpack v3 configuration and npm-based solution for enabling their client development workflow and packaging. Unfortunately Webpack 4 was a major breaking change, incompatible with the existing configuration, and not all Webpack modules were available for v4 yet. This means all projects created using the existing Webpack v3 Templates would need to either manually upgrade to Webpack 4 or stay on Webpack v3 indefinitely.

In the meantime all major JavaScript frameworks have developed their own CLI tools to simplify development by taking over the configuration and development workflow of projects using their frameworks. One of the ways they simplify development is by hiding Webpack configuration as an internal implementation detail, whilst ensuring new projects start with the same well-known compatible configuration defaults that have been battle-tested across a larger user base. By insulating projects from Webpack we also expect upgrades to be a lot smoother, as in theory only the JS Framework packages and tools would need to be upgraded to support newer major Webpack versions, instead of every project using Webpack directly.

Given the simpler development workflow and smoother future upgrades possible with CLI tools, we've replaced all our SPA Templates with the latest templates generated by the CLI tools for each respective framework.

All templates can be installed using our dotnet-new tool, which, if not already installed, can be installed with:

$ npm install -g @servicestack/cli

The available SPA Project Templates, now bootstrapped with the latest CLI tools and project templates, include:

vue-spa

Bootstrapped with Vue CLI 3.

Create new Vue 2.5 Project for .NET Core 2.1:

$ dotnet-new vue-spa ProjectName

Create new Vue 2.5 Project for .NET Framework:

$ dotnet-new vue-spa-netfx ProjectName

react-spa

Bootstrapped with create-react-app.

Create new React 16 Project for .NET Core 2.1:

$ dotnet-new react-spa ProjectName

Create new React 16 Project for .NET Framework:

$ dotnet-new react-spa-netfx ProjectName

angular-spa

Bootstrapped with Angular CLI.

Create new Angular 7 Project for .NET Core 2.1:

$ dotnet-new angular-spa ProjectName

Create new Angular 7 Project for .NET Framework:

$ dotnet-new angular-spa-netfx ProjectName

vuetify-spa

Bootstrapped with Vue CLI 3 and the vuetify cli plugin.

Create new Vuetify Project for .NET Core 2.1:

$ dotnet-new vuetify-spa ProjectName

Create new Vuetify Project for .NET Framework:

$ dotnet-new vuetify-spa-netfx ProjectName

vue-nuxt

Bootstrapped with Nuxt.js starter template.

Create new Nuxt.js v1.4.2 Project for .NET Core 2.1:

$ dotnet-new vue-nuxt ProjectName

Create new Nuxt.js v1.4.2 Project for .NET Framework:

$ dotnet-new vue-nuxt-netfx ProjectName

vuetify-nuxt

Bootstrapped with Nuxt.js + Vuetify.js starter template.

Create new Nuxt Vuetify Project for .NET Core 2.1:

$ dotnet-new vuetify-nuxt ProjectName

Create new Nuxt Vuetify Project for .NET Framework:

$ dotnet-new vuetify-nuxt-netfx ProjectName

SPA Project Templates Dev Workflow

Whilst the client Application has been generated by each framework's official CLI tool, all templates continue to enjoy seamless integration with ServiceStack and follow its recommended Physical Project Structure. As the npm scripts vary slightly between projects, you'll need to refer to the documentation in the GitHub project of each template for the functionality available, but they all typically share the functionality below to manage your project's development lifecycle:

Start a watched client build which will recompile and reload web assets on save:

$ npm run dev

Start a watched .NET Core build which will recompile C# .cs source files on save and restart the ServiceStack .NET Core App:

$ dotnet watch run

Leaving the above 2 commands running takes care of most of the development workflow which handles recompilation of both modified client and server source code.

Regenerate your client TypeScript DTOs after making a change to any Services:

$ npm run dtos

Create an optimized client and package a Release build of your App:

$ npm run publish

Which will publish your App to bin/Release/netcoreapp2.1/publish ready for deployment.

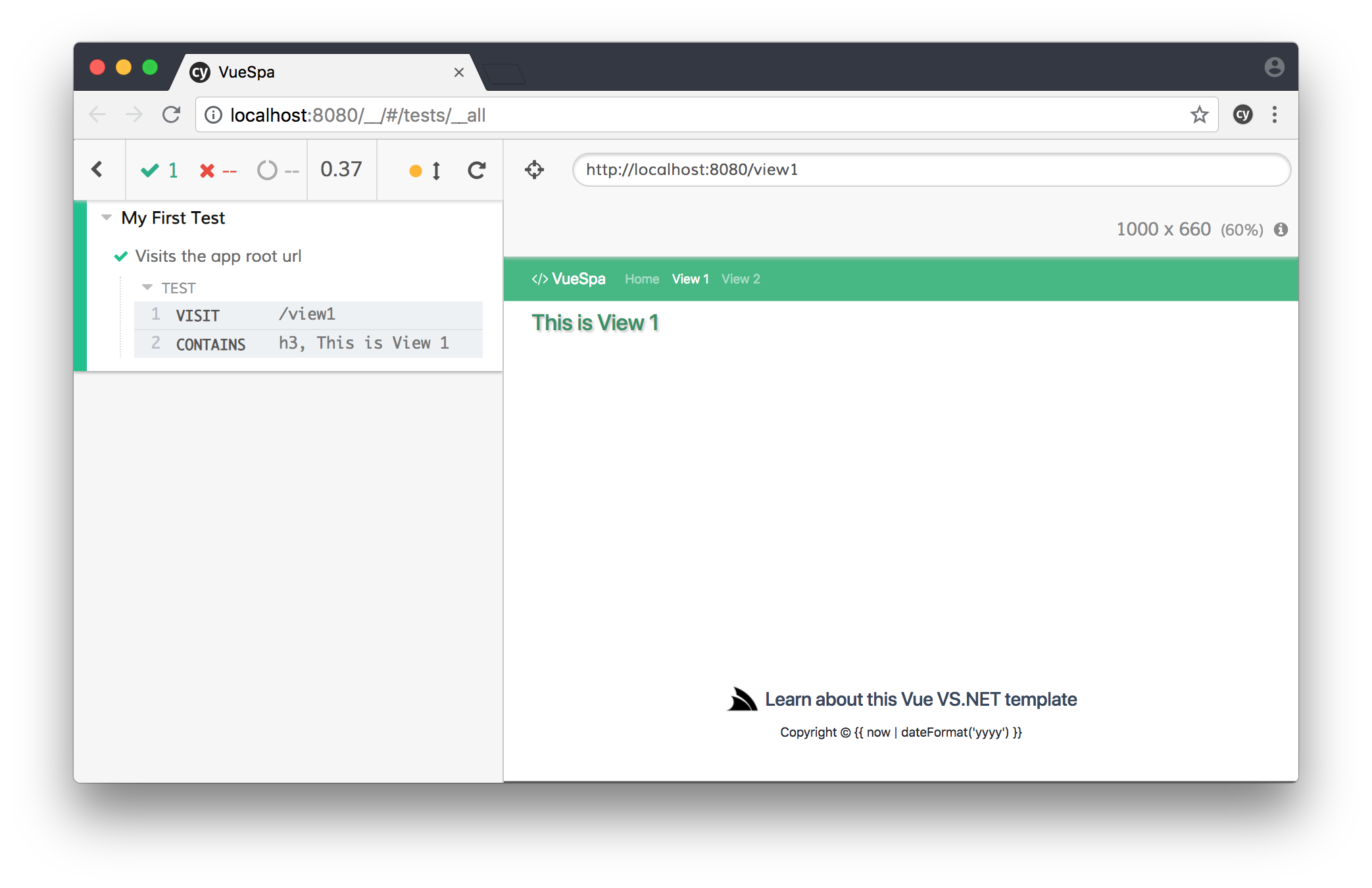

Testing

The major JS Framework Templates are also pre-configured with their preferred unit testing solutions, which are run with npm's test command:

$ npm test

Whilst Vue and Angular also include support for running end-to-end integration tests in a browser:

$ npm run e2e

This also highlights one of the benefits of npm's vibrant ecosystem, which benefits from significant investments like cypress.io, a complete solution for running integration tests.

New Parcel SPA Template

Create new Parcel Template:

$ dotnet-new parcel ProjectName

Parcel aims to provide the simplest out-of-the-box development experience for creating modern npm-powered Web Apps by getting out of your way and letting you develop Websites without regard for a bundling solution or JS Framework.

To use it, just point parcel at your home page:

$ parcel index.html

This starts a Live Hot Reload Server which inspects all linked *.html, script and stylesheet resources to find all dependencies, which it monitors for changes so it can automatically rebuild and reload your webpage. Then when it's time for deployment you can perform a production build of your website with the build command:

$ parcel build index.html

Where it creates an optimized bundle using advanced minification, compilation and bundling techniques. Despite its instant utility and zero configuration, it comes pre-configured with popular auto transforms for developing modern Web Apps, letting you utilize PostCSS transforms and advanced transpilers like TypeScript. The new Parcel Template takes advantage of these to provide a pleasant development experience with access to the latest ES7/TypeScript language features.

This template starts from a clean slate and does not use any of the popular JavaScript frameworks making it ideal when wanting to use any other micro JS libraries that can be referenced using a simple script include - reminiscent of simpler times.

Or develop without a JS framework, e.g. index.ts below uses TypeScript and the native HTML DOM APIs for its functionality:

```ts
import { client } from "./shared";
import { Hello } from "./dtos";

const result = document.querySelector("#result")!;

document.querySelector("#Name")!.addEventListener("input", async e => {
    const value = (e.target as HTMLInputElement).value;
    if (value != "") {
        const request = new Hello();
        request.name = value;
        const response = await client.get(request);
        result.innerHTML = response.result;
    } else {
        result.innerHTML = "";
    }
});
```

The Parcel Template also includes customizations to integrate it with .NET Core Project conventions and #Script Websites, enabling additional flexibility like dynamic Web Pages and server-side rendering when needed. See the Parcel Template docs for information on the available dev, build, dtos and publish npm scripts used to manage the development workflow.

Seamless Parcel integration is another example of the benefits of #Script's layered approach and non-intrusive handlebars syntax, which can be cleanly embedded in existing .html pages without interfering with static HTML analyzers like parcel and Webpack HTML plugins, or with their HTML minification in optimized production builds. This enables simplified development workflows and integrations that aren't possible with Razor.

#Script Remastered

We're excited to announce the next version of #Script, which has gained significant new capabilities and implemented the entire wishlist from the previous version, adding every feature we felt was missing from the previous release. This transforms it into a full-featured dynamic templating language that provides a much simpler and more flexible alternative to Razor for developing server-generated Web Pages, and one suitable for more use-cases than Razor.

Natural familiar syntax for HTML Templating

An elegant characteristic of #Script is its familiar and natural syntax: it introduces very little new syntax as it's able to adopt popular language syntax prevalently used in Web Apps today, namely JavaScript Expressions, Vue Filters and Handlebars.js block helpers. Combined, these adequately describe #Script syntax.

Spanified Templates

Amidst the internal rewrite needed to add its new capabilities, all parsing was rewritten to use the new Span<T> Type for added performance, minimal allocations and future-proofing, ensuring the supporting Data Structures won't need to change in future.

Minimal Breaking Changes

Despite the significant refactor, the only known breaking change was renaming the and() and or() conditional test methods to upper case AND() and OR(). This was a side-effect of implementing proper JavaScript Expressions, where and and or became keywords to support SQL-like Expressions. These methods are no longer needed anyway, as they were workarounds for the previous restriction of having all functionality provided by C# methods. Now you can use the more natural JS/C# or SQL boolean expression syntax, e.g:

```
AND(OR(eq(it,2),eq(it,3)), isOdd(it))  // Filter methods
(it == 2 || it == 3) && isOdd(it)      // JavaScript/C#
(it = 2 or it = 3) and isOdd(it)       // SQL-like
```

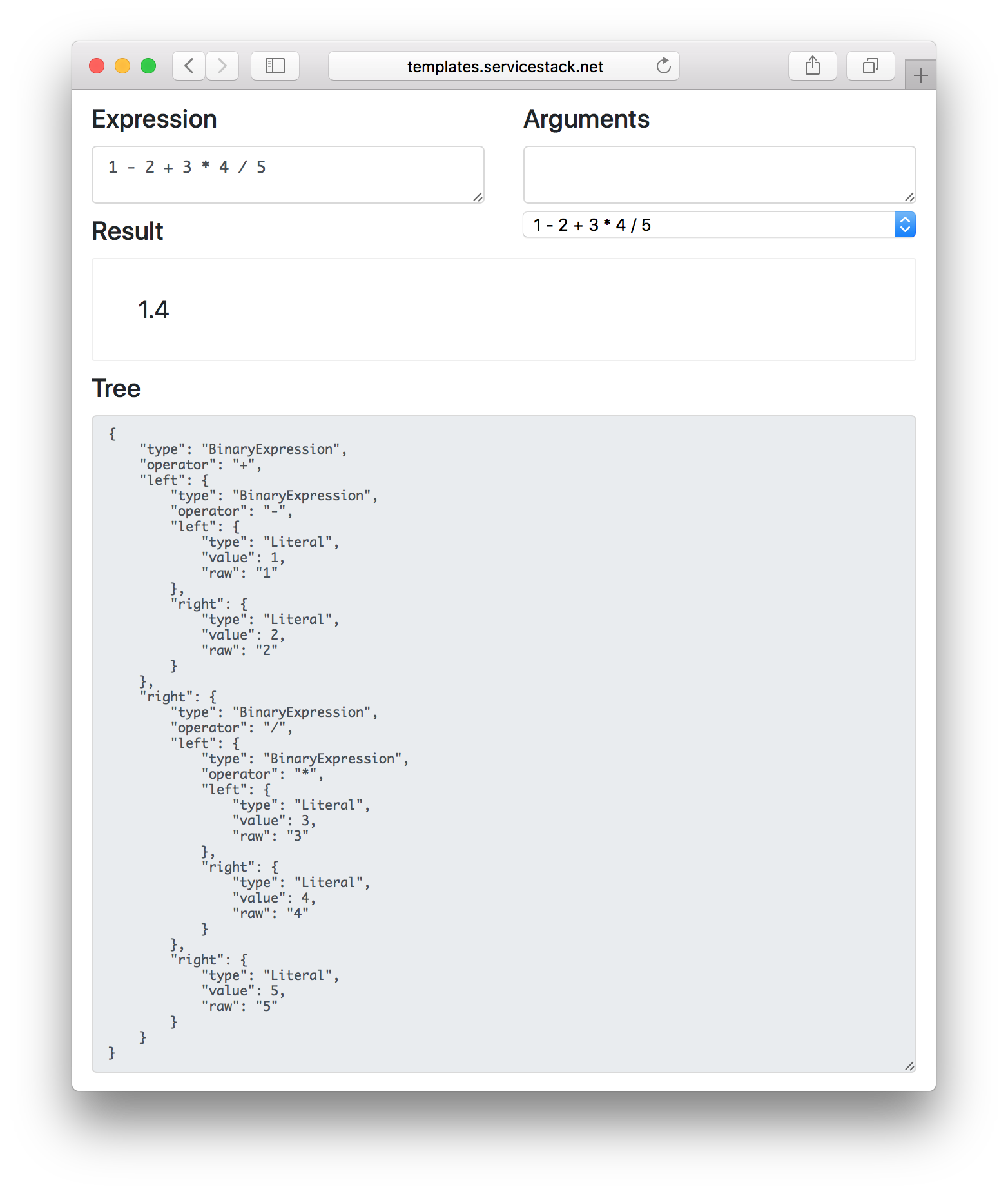

JavaScript Expressions

As Templates quickly became our preferred method for developing server-generated Websites, one feature high on our wishlist was being able to use JavaScript Expressions in filters. Templates was first and foremost designed for simplicity, inspired by languages like LISP and Smalltalk which achieve great power with minimal language features. But as the examples above show, this restriction often compromises readability and expressiveness, which are also design goals for Templates, so the first major feature added to Templates was support for JavaScript Expressions.

As the previous abstract syntax tree (AST) was a naive implementation that only supported its limited feature set, a new AST was needed to support the expanded JavaScript expression syntax. Instead of creating a new one we've followed the syntax tree used by Esprima, JavaScript's leading lexical language parser, adapted to suit C# conventions with PascalCase properties and each AST Type prefixed with Js* to avoid naming collisions with C#'s LINQ Expression Types, which often have the same name.

So Esprima's MemberExpression maps to JsMemberExpression in Templates.

In addition to adopting Esprima's AST data structures, Templates can also emit the same serialized Syntax Tree that Esprima generates from any AST Expression, e.g:

```csharp
// Create AST from JS Expression
JsToken expr = JS.expression("1 - 2 + 3 * 4 / 5");

// Convert to Object Dictionary in Esprima's Syntax Tree Format
Dictionary<string, object> esprimaAst = expr.ToJsAst();

// Serialize as Indented JSON
esprimaAst.ToJson().IndentJson().Print();
```

Which will display the same output as seen in the new JS Expression Viewer:

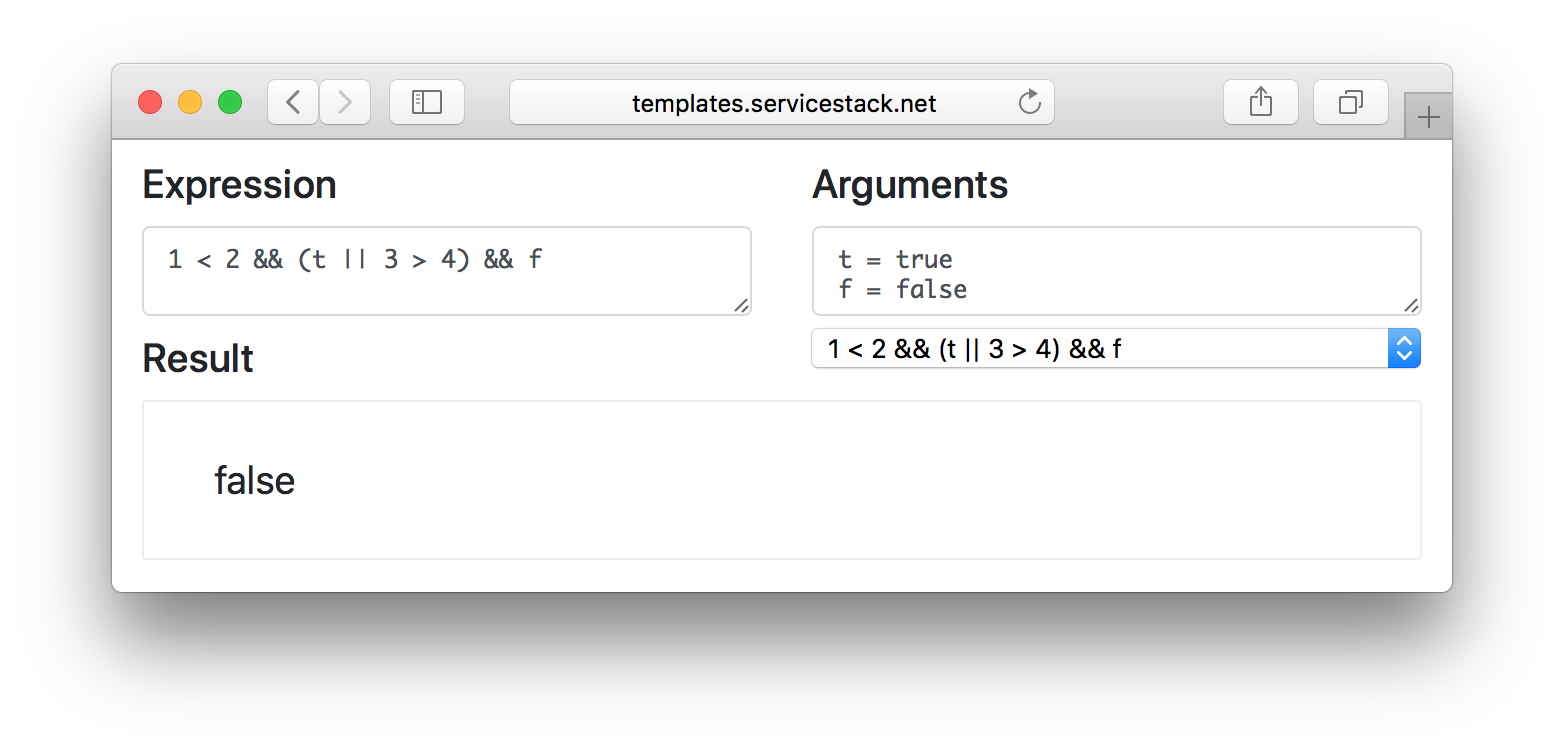

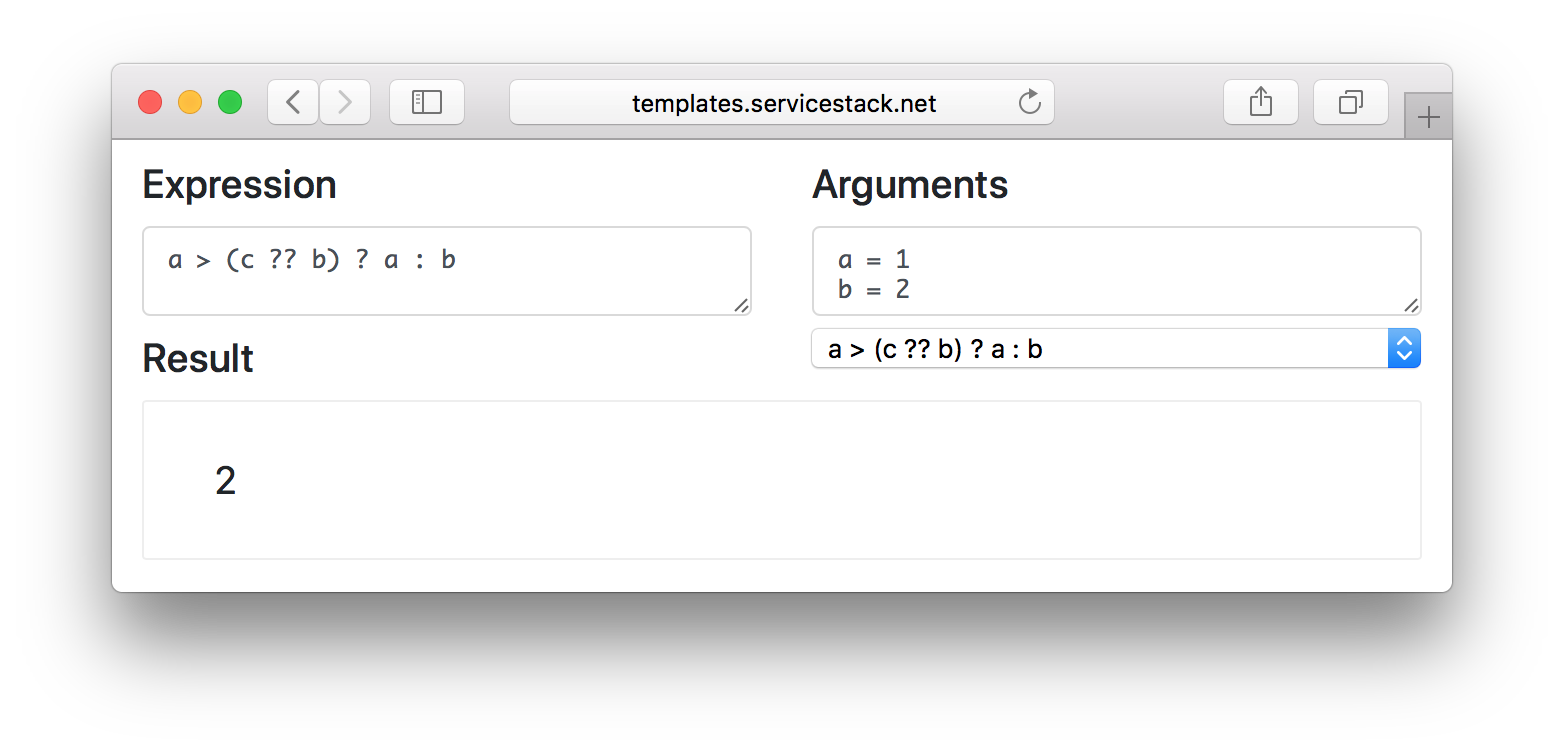

From the AST output we can visualize how operator precedence is applied to an Expression. The Expression Viewer also lets us explore and evaluate different JavaScript Expressions with custom arguments.
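To see how precedence shapes the tree, here's a toy precedence-climbing parser in TypeScript. It isn't #Script's parser, which handles far more syntax: it only supports numbers, + - * / and parentheses (no unary minus, identifiers or calls), and emits nodes modeled on Esprima's BinaryExpression and Literal nodes (type, operator, left, right, value), omitting fields like raw.

```typescript
// Toy parser: numbers, + - * / and parentheses only. Node shapes follow Esprima's convention.
type Expr =
  | { type: "Literal"; value: number }
  | { type: "BinaryExpression"; operator: string; left: Expr; right: Expr };

// Higher number = binds tighter, as in JavaScript.
const PRECEDENCE: Record<string, number> = { "+": 1, "-": 1, "*": 2, "/": 2 };

function parse(src: string): Expr {
  const tokens = src.match(/\d+(?:\.\d+)?|[-+*\/()]/g) ?? [];
  let pos = 0;

  const primary = (): Expr => {
    const tok = tokens[pos++];
    if (tok === "(") {
      const inner = binary(1);
      if (tokens[pos++] !== ")") throw new Error("expected )");
      return inner;
    }
    const value = Number(tok);
    if (Number.isNaN(value)) throw new Error(`unexpected token ${tok}`);
    return { type: "Literal", value };
  };

  // Precedence climbing: consume operators at least as tight as minPrec. Parsing the
  // right operand at prec + 1 makes equal-precedence operators left-associative.
  const binary = (minPrec: number): Expr => {
    let left = primary();
    while (pos < tokens.length && (PRECEDENCE[tokens[pos]] ?? 0) >= minPrec) {
      const operator = tokens[pos++];
      const right = binary(PRECEDENCE[operator] + 1);
      left = { type: "BinaryExpression", operator, left, right };
    }
    return left;
  };

  const ast = binary(1);
  if (pos !== tokens.length) throw new Error(`unexpected token ${tokens[pos]}`);
  return ast;
}

function evaluate(e: Expr): number {
  if (e.type === "Literal") return e.value;
  const l = evaluate(e.left);
  const r = evaluate(e.right);
  switch (e.operator) {
    case "+": return l + r;
    case "-": return l - r;
    case "*": return l * r;
    case "/": return l / r;
    default: throw new Error(`unknown operator ${e.operator}`);
  }
}

const ast = parse("1 - 2 + 3 * 4 / 5");
console.log(JSON.stringify(ast, null, 2));
console.log(evaluate(ast)); // approximately 1.4
```

For "1 - 2 + 3 * 4 / 5" the root is the + node, with (1 - 2) on its left and ((3 * 4) / 5) on its right, which is the same nesting the Expression Viewer displays.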

One JavaScript abusage Brendan Eich regrets is using || and && on non-boolean values, so we've chosen to limit these binary operators to boolean expressions, which always evaluate to a boolean value.

Instead, to replicate ||'s coalescing behavior on falsy values, you can use C#'s ?? null coalescing operator, as seen in:

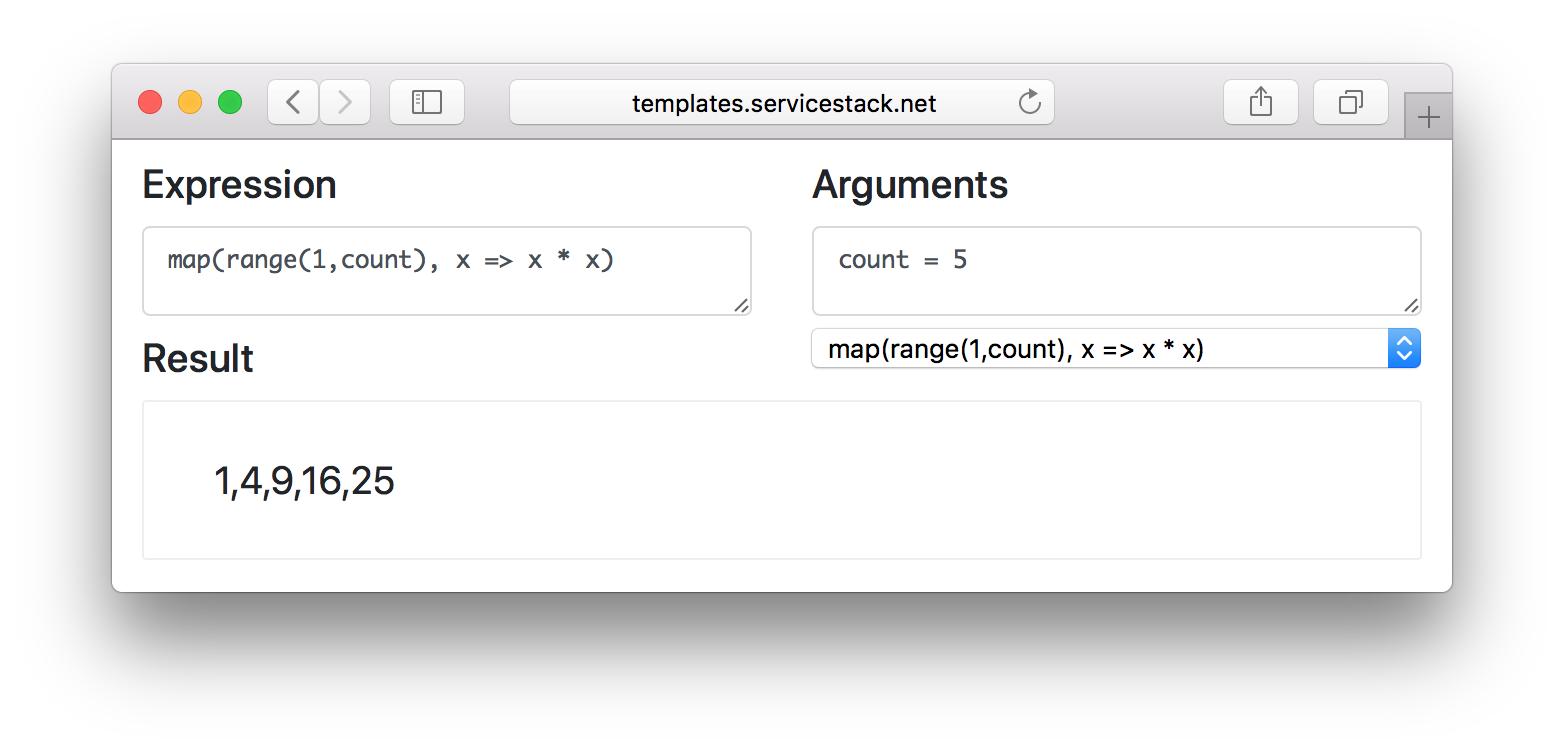

Lambda Expressions

Lambda expressions are now supported in all functional filters.

Previously, to capture expressions without evaluating them, they needed to be passed as a string, where they would be re-evaluated with an injected it binding. To make this more pleasant, a special string argument syntax was also added:

```
{{ customers | zip: it.Orders
             | let({ c: 'it[0]', o: 'it[1]' })
             | where: o.Total < 500
             | map: o
             | htmlDump }}
```

Whilst you can still use string expressions, their usage should be considered deprecated in all places that expect an expression, given their unfamiliarity: they were only used to work around the lack of lambda expressions, which are now supported.

Instead you can now use lambda expression syntax:

```
{{ customers | zip(x => x.Orders)
             | let(x => { c: x[0], o: x[1] })
             | where(_ => o.Total < 500)
             | map(_ => o)
             | htmlDump }}
```

Similar to the special string argument syntax, there's also shorthand support for single-argument lambda expressions, which can use => without brackets or named arguments, in which case the argument is implicitly assigned to the it binding:

```
{{ customers | zip => it.Orders
             | let => { c: it[0], o: it[1] }
             | where => o.Total < 500
             | map => o
             | htmlDump }}
```

As it results in more wrist-friendly and readable code, most LINQ Examples have been changed to use the shorthand lambda expression syntax above.
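For comparison, here's roughly what the customers pipeline computes, written with plain TypeScript array methods. This assumes zip pairs each item with every element its expression returns (a cross join producing [item, element] tuples) and models let as naming the tuple parts; the Customer and Order shapes are made up for the example.

```typescript
// Shapes are made up for illustration.
interface Order { Id: number; Total: number }
interface Customer { Name: string; Orders: Order[] }

function smallOrders(customers: Customer[]): Order[] {
  return customers
    // zip => it.Orders: pair each customer with each of its orders
    .flatMap(c => c.Orders.map(o => [c, o] as const))
    // let => { c: it[0], o: it[1] }: give the tuple parts names
    .map(([c, o]) => ({ c, o }))
    // where => o.Total < 500
    .filter(({ o }) => o.Total < 500)
    // map => o
    .map(({ o }) => o);
}

const sample: Customer[] = [
  { Name: "Ann", Orders: [{ Id: 1, Total: 200 }, { Id: 2, Total: 900 }] },
  { Name: "Bob", Orders: [{ Id: 3, Total: 450 }] },
];
console.log(smallOrders(sample).map(o => o.Id).join(",")); // 1,3
```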

Shorthand properties

Other language enhancements include support for JavaScript's shorthand property names:

{{ {name,age} }}

But like C#, it also lets you use member property names:

{{ people | let => { it.Name, it.Age } | select: {Name},{Age} }}

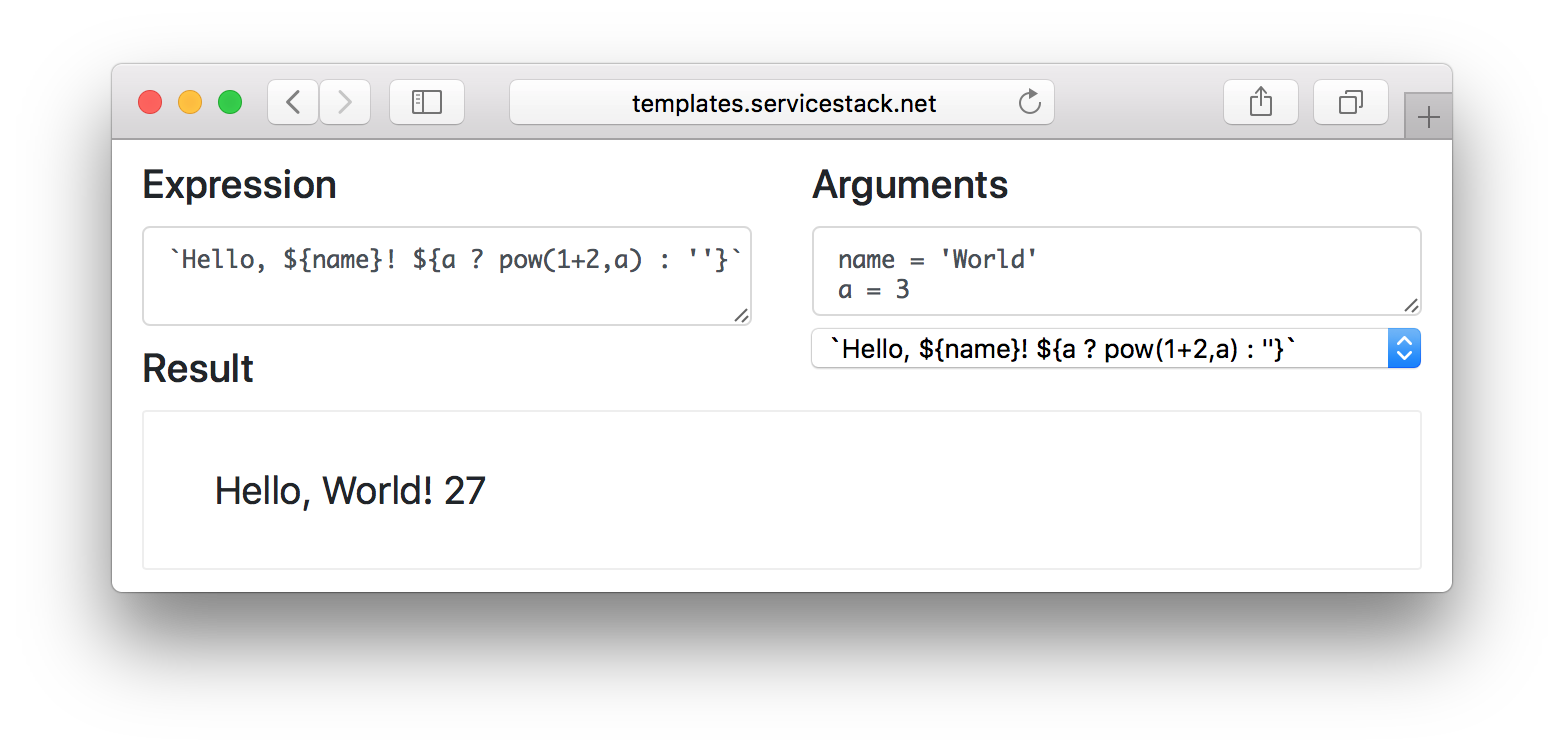

Template Literals

Many ES6/7 features are also implemented, like Template Literals, which are very nice to have in a Template Language.

Backtick quoted strings also adopt the same escaping behavior of JavaScript strings whilst all other quoted strings preserve unescaped string values.

Spread Operators

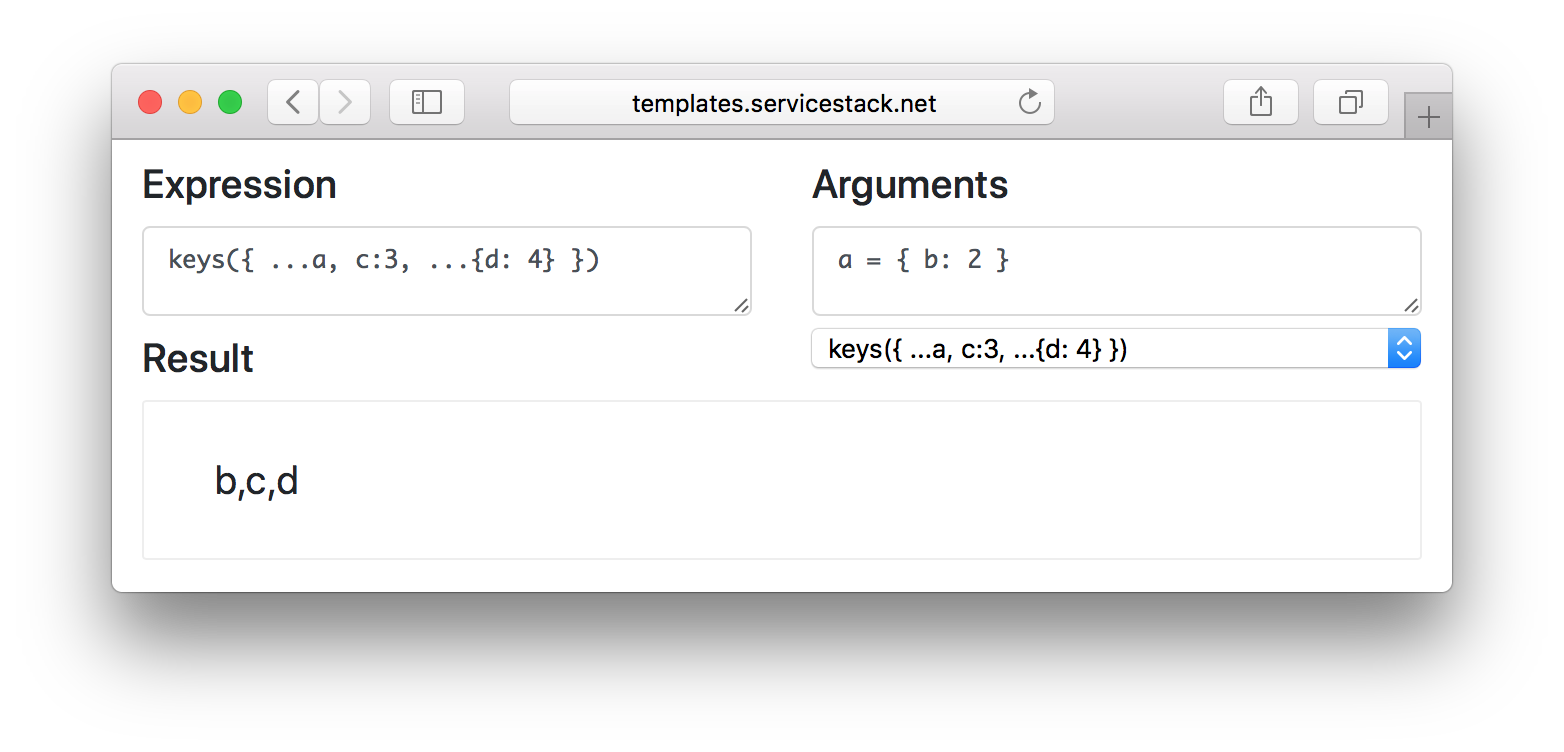

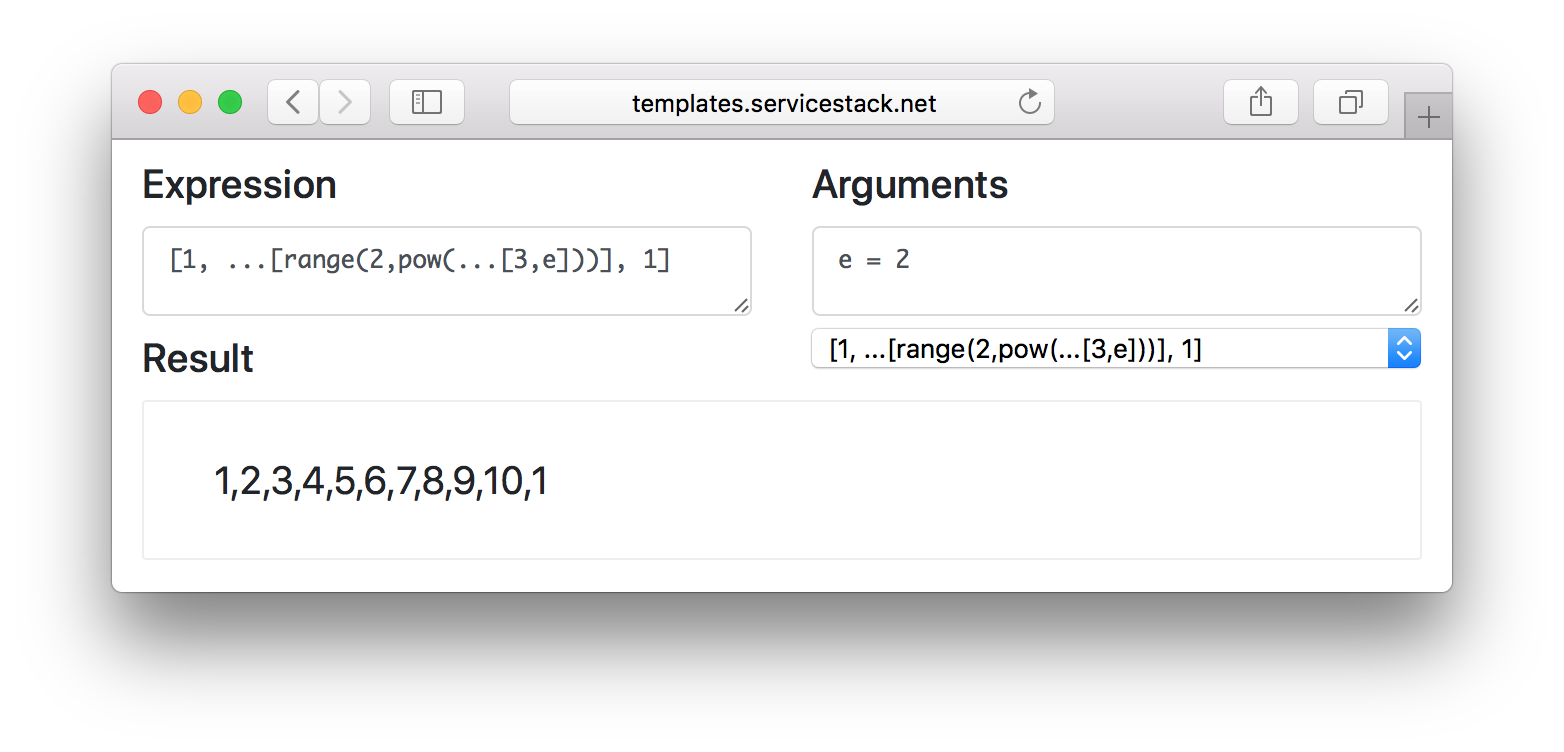

Other advanced ES6/7 features supported include the object spread, array spread and argument spread operators:

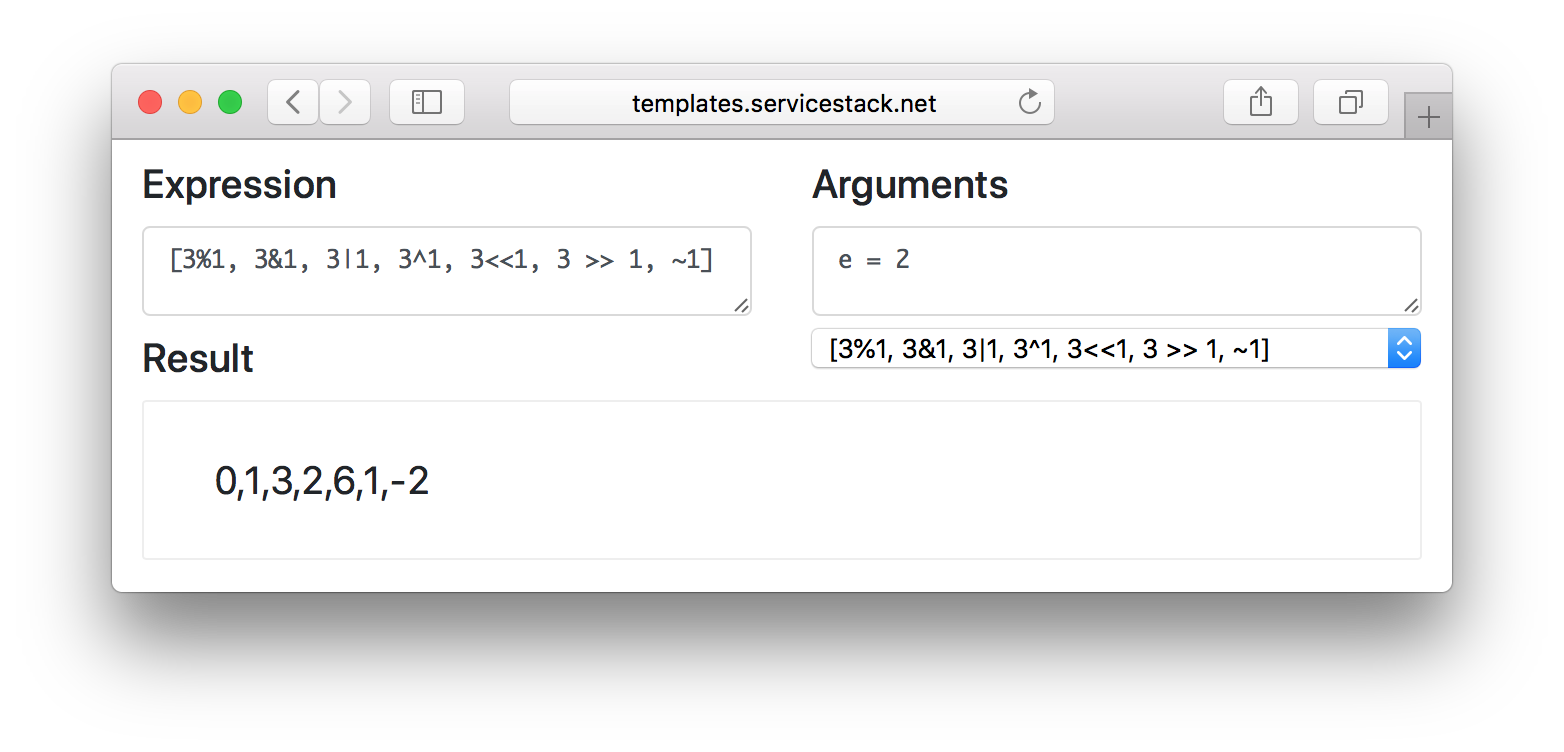

Bitwise Operators

All JavaScript Bitwise operators are also supported.

Essentially, Templates now supports most JavaScript Expressions, but not statements (which are covered by the new Blocks support) or mutations using Assignment Expressions and Operators. All assignments still need to be explicitly performed through an Assignment Filter.

Evaluating JavaScript Expressions

The built-in JavaScript expressions support is also useful outside of Templates where they can be evaluated with JS.eval():

JS.eval("pow(2,2) + pow(4,2)") //= 20

The difference from JavaScript's eval is that method calls invoke C# filter methods in a sandboxed context.

By default expressions are executed in an empty scope, but can also be executed within a custom scope which can be used to define the arguments expressions are evaluated with:

```csharp
var scope = JS.CreateScope(args: new Dictionary<string, object> {
    ["a"] = 2,
    ["b"] = 4,
});

JS.eval("pow(a,2) + pow(b,2)", scope); //= 20
```

Custom methods can also be introduced into the scope which can override existing filters by using the same name and args count, e.g:

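```csharp
class MyFilters : TemplateFilter {
    // Same name and arg count as the built-in pow filter, so it overrides it (with division)
    public double pow(double arg1, double arg2) => arg1 / arg2;
}

var scope = JS.CreateScope(functions: new MyFilters());

JS.eval("pow(2,2) + pow(4,2)", scope); //= 3
```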
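One way to picture these scope rules is a lookup that checks user-supplied functions first, keyed by name and argument count, before falling back to the default filters. This TypeScript model is an assumption about the behavior described above, not #Script's implementation: createScope, call and arg are hypothetical helpers, and "pow(a,2) + pow(b,2)" is expanded by hand rather than parsed.

```typescript
// Hypothetical model of scope-based calls; not #Script's actual implementation.
type Fn = (...args: number[]) => number;

// Built-in filters keyed by "name/argCount", so overloads by arity stay distinct.
const defaultFilters: Record<string, Fn> = {
  "pow/2": (x, y) => Math.pow(x, y),
};

interface Scope {
  args: Record<string, number>;
  functions: Record<string, Fn>;
}

function createScope(args: Record<string, number> = {}, functions: Record<string, Fn> = {}): Scope {
  return { args, functions };
}

// Custom functions win over defaults when both name and argument count match.
function call(scope: Scope, name: string, ...argv: number[]): number {
  const key = `${name}/${argv.length}`;
  const fn = scope.functions[key] ?? defaultFilters[key];
  if (!fn) throw new Error(`unknown filter ${key}`);
  return fn(...argv);
}

function arg(scope: Scope, name: string): number {
  if (!(name in scope.args)) throw new Error(`undefined argument ${name}`);
  return scope.args[name];
}

// Hand-expanded equivalent of "pow(a,2) + pow(b,2)"
const sumOfSquares = (s: Scope) => call(s, "pow", arg(s, "a"), 2) + call(s, "pow", arg(s, "b"), 2);

console.log(sumOfSquares(createScope({ a: 2, b: 4 }))); // 20
console.log(sumOfSquares(createScope({ a: 2, b: 4 }, { "pow/2": (x, y) => x / y }))); // 3
```

Keying by arity means a custom pow taking one argument would not shadow the two-argument built-in, which matches the "same name and args count" rule.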

An alternative to injecting arguments by scope is to wrap the expression in a lambda expression, e.g:

var expr = (JsArrowFunctionExpression)JS.expression("(a,b) => pow(a,2) + pow(b,2)");

Which can then be invoked with positional arguments by calling Invoke(), e.g:

```csharp
expr.Invoke(2,4);        //= 20
expr.Invoke(scope, 2,4); //= 3 (using the MyFilters scope)
```

Parsing JS Expressions

JS.eval() is a wrapper that parses the JS expression into an AST and then evaluates it:

```csharp
var expr = JS.expression("pow(2,2) + pow(4,2)");
expr.Evaluate(); //= 20
```

When needing to evaluate the same expression multiple times you can cache and execute the AST to save the cost of parsing the expression again.
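Caching parsed ASTs can be as simple as a map keyed by the expression source. The TypeScript sketch below is a hypothetical illustration: ExpressionCache isn't a ServiceStack type, and the parse function passed to it is a stand-in for something like JS.expression(). It counts how often parsing actually happens.

```typescript
// Parse cache: each distinct source string is parsed at most once and the tree is reused.
// Sharing one tree between callers is only safe because parsed nodes are immutable.
class ExpressionCache<T> {
  private readonly cache = new Map<string, T>();

  constructor(private readonly parseFn: (src: string) => T) {}

  get(src: string): T {
    if (this.cache.has(src)) return this.cache.get(src)!;
    const ast = this.parseFn(src); // only reached on a cache miss
    this.cache.set(src, ast);
    return ast;
  }

  get size(): number {
    return this.cache.size;
  }
}

let parses = 0;
const exprCache = new ExpressionCache((src: string) => {
  parses++;
  return Object.freeze({ src }); // stand-in for a real parsed tree
});
const first = exprCache.get("pow(2,2) + pow(4,2)");
const second = exprCache.get("pow(2,2) + pow(4,2)");
console.log(parses, first === second); // 1 true
```

An unbounded map suits a fixed set of expressions from templates; caching arbitrary user input would call for an eviction policy.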

DSL example

If implementing a DSL containing multiple expressions, as done in many of the Block argument expressions, you can instead use the ParseJsExpression() extension method, which returns the literal Span advanced past the end of the expression, with the parsed AST token returned in an out parameter.

This is what the Each block implementation uses to parse its argument expression which can contain a number of LINQ-like expressions:

```csharp
JsToken where = null, take = null;
var literal = "where c.Age == 27 take 1 + 2".AsSpan();

if (literal.StartsWith("where "))
{
    literal = literal.Advance("where ".Length);     // 'c.Age == 27 take 1 + 2'
    literal = literal.ParseJsExpression(out where); // ' take 1 + 2'
}

literal = literal.AdvancePastWhitespace();          // 'take 1 + 2'

if (literal.StartsWith("take "))
{
    literal = literal.Advance("take ".Length);      // '1 + 2'
    literal = literal.ParseJsExpression(out take);  // ''
}
```

This results in where being populated with the c.Age == 27 BinaryExpression and take with the 1 + 2 BinaryExpression.
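The cursor-advancing style can be sketched in TypeScript. Unlike ParseJsExpression(), which knows where an expression ends because it actually parses it, this simplified and hypothetical version assumes an expression runs until the next DSL keyword or the end of input. It tracks positions in the original string and only copies text when recording each clause.

```typescript
// Hypothetical DSL clause parser for "where <expr> take <expr>", each clause optional.
const KEYWORDS = ["where", "take"] as const;
type Keyword = (typeof KEYWORDS)[number];

function skipWhitespace(src: string, pos: number): number {
  while (pos < src.length && /\s/.test(src[pos])) pos++;
  return pos;
}

// Index where the next keyword (as a whole word followed by a space) starts, or src.length.
function endOfExpression(src: string, pos: number): number {
  for (let i = pos; i < src.length; i++) {
    const atWordStart = i === 0 || /\s/.test(src[i - 1]);
    if (atWordStart && KEYWORDS.some(k => src.startsWith(k + " ", i))) return i;
  }
  return src.length;
}

function parseClauses(src: string): Partial<Record<Keyword, string>> {
  const clauses: Partial<Record<Keyword, string>> = {};
  let pos = skipWhitespace(src, 0);
  for (const keyword of KEYWORDS) {
    if (!src.startsWith(keyword + " ", pos)) continue;
    pos += keyword.length + 1;                   // advance past "where " or "take "
    const end = endOfExpression(src, pos);
    clauses[keyword] = src.slice(pos, end).trim();
    pos = skipWhitespace(src, end);              // like AdvancePastWhitespace()
  }
  if (pos < src.length) throw new Error(`unexpected input at ${pos}: ${src.slice(pos)}`);
  return clauses;
}

console.log(JSON.stringify(parseClauses("where c.Age == 27 take 1 + 2")));
// {"where":"c.Age == 27","take":"1 + 2"}
```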

Immutable and Comparable

Unlike C#'s LINQ Expressions, which can't be compared for equality, Template Expressions are both Immutable and Comparable, so they can be used in caches and compared to determine if 2 Expressions are equivalent, e.g:

```csharp
var expr = new JsLogicalExpression(
    new JsBinaryExpression(new JsIdentifier("a"), JsGreaterThan.Operator, new JsLiteral(1)),
    JsAnd.Operator,
    new JsBinaryExpression(new JsIdentifier("b"), JsLessThan.Operator, new JsLiteral(2))
);

expr.Equals(JS.expression("a > 1 && b < 2")); //= true

expr.Equals(new JsLogicalExpression(
    JS.expression("a > 1"), JsAnd.Operator, JS.expression("b < 2")
)); //= true
```

This shows that Expressions, whether created programmatically, entirely from strings or any combination of both, can be compared for equality and evaluated in the same way:

```csharp
var scope = JS.CreateScope(args: new Dictionary<string, object> {
    ["a"] = 2,
    ["b"] = 1
});

expr.Evaluate(scope); //= true
```
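Structural equality over immutable nodes is what makes this comparison possible. The TypeScript sketch below is a hypothetical analogy, loosely modeled on the Js* node types rather than taken from them: every node is frozen at construction, and equals() compares node type, operator, name or value, and children, so separately built trees with the same shape compare equal even though they're different objects.

```typescript
// Hypothetical immutable AST with structural equality, loosely modeled on the Js* node types.
type AstNode =
  | { readonly type: "Identifier"; readonly name: string }
  | { readonly type: "Literal"; readonly value: number }
  | {
      readonly type: "BinaryExpression" | "LogicalExpression";
      readonly operator: string;
      readonly left: AstNode;
      readonly right: AstNode;
    };

// Object.freeze is shallow, but children are frozen too because they're built by these helpers.
const freeze = (node: AstNode): AstNode => Object.freeze(node);
const id = (name: string) => freeze({ type: "Identifier", name });
const lit = (value: number) => freeze({ type: "Literal", value });
const bin = (left: AstNode, operator: string, right: AstNode) =>
  freeze({ type: "BinaryExpression", operator, left, right });
const logical = (left: AstNode, operator: string, right: AstNode) =>
  freeze({ type: "LogicalExpression", operator, left, right });

// Structural equality: same node type, same operator/name/value, and equal children.
function equals(a: AstNode, b: AstNode): boolean {
  if (a === b) return true;
  if (a.type !== b.type) return false;
  switch (a.type) {
    case "Identifier":
      return a.name === (b as typeof a).name;
    case "Literal":
      return a.value === (b as typeof a).value;
    default: {
      const other = b as typeof a;
      return a.operator === other.operator && equals(a.left, other.left) && equals(a.right, other.right);
    }
  }
}

const expr1 = logical(bin(id("a"), ">", lit(1)), "&&", bin(id("b"), "<", lit(2)));
const expr2 = logical(bin(id("a"), ">", lit(1)), "&&", bin(id("b"), "<", lit(2)));
console.log(expr1 === expr2, equals(expr1, expr2)); // false true
```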

Template Blocks

Another limitation causing friction was having to compose all logic within filter expressions, which made it difficult to do things like selectively evaluate entire fragments. The existing solution best suited to do this, whilst maintaining high fidelity with existing Filter Expressions, was handlebars block helpers.

Blocks let you define reusable statements that can be invoked with a new context, allowing the creation of custom iterators and helpers and making it easy to encapsulate reusable functionality and reduce boilerplate for common functionality.

In addition to adopting its syntax, Templates also includes most of handlebars.js' block helpers, which are useful in an HTML template language whilst minimizing any porting efforts if you need to reuse existing JavaScript handlebars templates. Just like Filters, Blocks support is completely customizable: all built-in blocks can be removed and new ones added.

We'll walk through creating a few of the built-in Template blocks to demonstrate how to create them from scratch.

noop

We'll start with creating the noop block (short for "no operation"), which functions like a block comment by removing its inner contents from the rendered page:

```html
<div class="entry">
  <h1>{{title}}</h1>
  <div class="body">
    {{#noop}}
    <h2>Removed Content</h2>
    {{page}}
    {{/noop}}
  </div>
</div>
```

The noop block is also the smallest possible implementation: it inherits the TemplateBlock class, overrides the Name getter with the name of the block and implements the WriteAsync() method, which for the noop block just returns a completed Task, thereby not writing anything to the Output Stream and resulting in its inner contents being ignored:

```csharp
public class TemplateNoopBlock : TemplateBlock
{
    public override string Name => "noop";

    // Writes nothing to the OutputStream, so the block's inner contents are discarded
    public override Task WriteAsync(TemplateScopeContext scope, PageBlockFragment block, CancellationToken t)
        => Task.CompletedTask;
}
```

All Blocks are executed with 3 parameters:

- TemplateScopeContext - The current Execution and Rendering context
- PageBlockFragment - The parsed Block contents
- CancellationToken - Allows the async render operation to be cancelled

Registering Blocks

The same flexible registration options available for Registering Filters are also available for registering blocks, so if it weren't already built-in, TemplateNoopBlock could be registered by adding it to the TemplateBlocks collection:

```csharp
var context = new TemplateContext {
    TemplateBlocks = { new TemplateNoopBlock() },
}.Init();
```

Autowired using TemplateContext IOC

Autowired instances of blocks and filters can also be created using TemplateContext's configured IOC, where they're also injected with any registered IOC dependencies, by registering them in the ScanTypes collection:

```csharp
var context = new TemplateContext
{
    ScanTypes = { typeof(TemplateNoopBlock) }
};
context.Container.AddSingleton<ICacheClient>(() => new MemoryCacheClient());
context.Init();
```

When the TemplateContext is initialized, it goes through each Type, creates an autowired instance of each Block or Filter Type it finds, and registers the blocks in the TemplateBlocks collection. An alternative to registering individual Types is to register an entire Assembly, e.g:

```csharp
var context = new TemplateContext
{
    ScanAssemblies = { typeof(MyBlock).Assembly }
};
```

Which automatically registers any Blocks or Filters contained in the Assembly where MyBlock is defined.

bold

A step up from noop is the bold Template Block, which marks up its contents within the <b/> tag:

```html
{{#bold}}This text will be bold{{/bold}}
```

Its implementation calls the base.WriteBodyAsync() method to evaluate and write the Block's contents to the OutputStream using the current TemplateScopeContext:

```csharp
public class TemplateBoldBlock : TemplateBlock
{
    public override string Name => "bold";

    public override async Task WriteAsync(
        TemplateScopeContext scope, PageBlockFragment block, CancellationToken token)
    {
        await scope.OutputStream.WriteAsync("<b>", token);
        await WriteBodyAsync(scope, block, token);
        await scope.OutputStream.WriteAsync("</b>", token);
    }
}
```
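To make the WriteAsync()/WriteBodyAsync() contract concrete, here is a hypothetical, synchronous JavaScript model of the noop and bold blocks. It is a sketch, not ServiceStack's implementation. `renderBlock`, the `blocks` Map, and the string array `out` standing in for the OutputStream are all illustrative assumptions, and the real C# methods are async.

```javascript
// Hypothetical, synchronous model of Template blocks (the real C# API is async).
// `out` is an array of strings standing in for the OutputStream.
function writeBody(scope, block, out) {
  // In this model a block's body is a function that renders itself from a scope
  out.push(block.body(scope));
}

const noopBlock = {
  name: "noop",
  // Writes nothing, so the body is never evaluated or emitted
  write(scope, block, out) {},
};

const boldBlock = {
  name: "bold",
  // Wraps the evaluated body in <b>...</b>
  write(scope, block, out) {
    out.push("<b>");
    writeBody(scope, block, out);
    out.push("</b>");
  },
};

// Blocks are looked up by name, loosely mirroring the TemplateBlocks collection
const blocks = new Map([noopBlock, boldBlock].map(b => [b.name, b]));

function renderBlock(name, scope, body) {
  const out = [];
  blocks.get(name).write(scope, { body }, out);
  return out.join("");
}

console.log(renderBlock("bold", { title: "Hi" }, s => s.title)); // prints "<b>Hi</b>"
```

The noop block simply never calls `writeBody`, which is the model's equivalent of returning a completed Task.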

with

The with Block shows an example of utilizing arguments. To maximize flexibility, arguments passed into your block are captured in a free-form string (specifically a ReadOnlyMemory<char>). This gives Blocks the freedom to accept anything from simple arguments to complex LINQ-like expressions, a feature some built-in Blocks take advantage of.

The with block works similarly to the handlebars with helper or JavaScript's with statement. It extracts the properties (or Keys) of an object and adds them to the current scope, which avoids needing to prefix each property reference. For example, you can use {{Name}} instead of {{person.Name}}:

```html
{{#with person}}
  Hi {{Name}}, your Age is {{Age}}.
{{/with}}
```

The with Block's contents are also only evaluated if the argument expression is not null.

The implementation below shows the optimal way to implement with. It calls GetJsExpressionAndEvaluate() to resolve a cached AST token, which is then evaluated to return the result of the Argument expression.

If the argument evaluates to an object, it calls the ToObjectDictionary() extension method to convert it into a Dictionary<string,object>. It then creates a new scope with each property added as an argument, and evaluates the block's Body contents with that new scope:

```csharp
public class TemplateWithBlock : TemplateBlock
{
    public override string Name => "with";

    public override async Task WriteAsync(
        TemplateScopeContext scope, PageBlockFragment block, CancellationToken token)
    {
        // Resolve the cached AST for the argument and evaluate it in the current scope
        var result = block.Argument.GetJsExpressionAndEvaluate(scope,
            ifNone: () => throw new NotSupportedException("'with' block does not have a valid expression"));

        if (result != null)
        {
            // Add each of the object's properties as arguments in a new child scope
            var resultAsMap = result.ToObjectDictionary();
            var withScope = scope.ScopeWithParams(resultAsMap);
            await WriteBodyAsync(withScope, block, token);
        }
    }
}
```
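The scoping behavior of with can be modeled in a few lines of JavaScript. In this hypothetical sketch, `scopeWithParams` layers a shallow copy of the object's properties over the parent scope using the prototype chain. That is roughly what ToObjectDictionary() followed by ScopeWithParams() achieves. The function names and the prototype-based approach are assumptions for illustration, not ServiceStack internals.

```javascript
// Hypothetical model of the `with` block's scoping (not ServiceStack's code).
// Creates a child scope whose lookups fall back to the parent scope.
function scopeWithParams(parent, params) {
  return Object.assign(Object.create(parent), params);
}

// Renders body(scope) with the argument's properties in scope, or "" when the
// argument is null/undefined (the real block would evaluate {{else}} instead).
function withBlock(scope, arg, body) {
  if (arg == null) return "";
  // Shallow copy of own enumerable properties, akin to ToObjectDictionary()
  const asMap = { ...arg };
  return body(scopeWithParams(scope, asMap));
}

const scope = { person: { Name: "Ada", Age: 36 }, site: "blog" };
const html = withBlock(scope, scope.person,
  s => `Hi ${s.Name}, your Age is ${s.Age} (${s.site}).`);
console.log(html); // Hi Ada, your Age is 36 (blog).
```

Outer arguments like `site` stay visible because the child scope only shadows the keys it adds.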

To better highlight what's happening, a non-cached version of GetJsExpressionAndEvaluate() involves parsing the Argument string into an AST Token and then evaluating it with the current scope:

```csharp
block.Argument.ParseJsExpression(out var token);
var result = token.Evaluate(scope);
```

The ParseJsExpression() extension method is able to parse virtually any JavaScript Expression into an AST tree which can then be evaluated by calling its token.Evaluate(scope) method.

Final implementation

The actual TemplateWithBlock.cs used in Templates includes extended functionality: it uses GetJsExpressionAndEvaluateAsync() to evaluate both sync and async results.

else if/else statements

It also evaluates any block.ElseBlocks statements. This functionality is available to all blocks, which can evaluate alternative else/else if statements when the main template isn't rendered. Here, that happens when the with block is called with a null argument:

```csharp
public class TemplateWithBlock : TemplateBlock
{
    public override string Name => "with";

    public override async Task WriteAsync(
        TemplateScopeContext scope, PageBlockFragment block, CancellationToken token)
    {
        var result = await block.Argument.GetJsExpressionAndEvaluateAsync(scope,
            ifNone: () => throw new NotSupportedException("'with' block does not have a valid expression"));

        if (result != null)
        {
            var resultAsMap = result.ToObjectDictionary();
            var withScope = scope.ScopeWithParams(resultAsMap);
            await WriteBodyAsync(withScope, block, token);
        }
        else
        {
            // Fall back to any {{else}} / {{else if}} statements
            await WriteElseAsync(scope, block.ElseBlocks, token);
        }
    }
}
```
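To illustrate what WriteElseAsync() has to do, here is a hypothetical JavaScript sketch. Each else block either has a condition (`else if`) or none (`else`), and the first one whose condition passes is rendered. The `{ test, body }` data shape is an assumption made for illustration, not how PageBlockFragment stores ElseBlocks.

```javascript
// Hypothetical model of else/else-if evaluation (not ServiceStack's code).
// elseBlocks: [{ test?: scope => boolean, body: scope => string }]
function writeElse(scope, elseBlocks) {
  for (const elseBlock of elseBlocks) {
    // A plain {{else}} has no test and always matches
    if (!elseBlock.test || elseBlock.test(scope)) {
      return elseBlock.body(scope);
    }
  }
  return ""; // no branch matched
}

// Mirrors {{#with person}}...{{else if id == 0}}...{{else}}...{{/with}}
function renderPerson(scope, person) {
  if (person != null) return `Hi ${person.Name}, your Age is ${person.Age}.`;
  return writeElse(scope, [
    { test: s => s.id == 0, body: () => "id is required." },
    { body: s => `No person with id ${s.id} exists.` },
  ]);
}

console.log(renderPerson({ id: 0 }, null)); // id is required.
console.log(renderPerson({ id: 7 }, null)); // No person with id 7 exists.
```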

This enables the with block to also evaluate async responses, like the results returned by async Database filters. It can also evaluate custom else statements to render different results based on alternate conditions, e.g:

```html
{{#with dbSingle("select * from Person where id = @id", { id }) }}
  Hi {{Name}}, your Age is {{Age}}.
{{else if id == 0}}
  id is required.
{{else}}
  No person with id {{id}} exists.
{{/with}}
```

if

Since all blocks can call base.WriteElseAsync() to execute any number of {{else}} statements, the implementation of the {{if}} block ends up even simpler. It just needs to evaluate the argument to a bool.

If true, it writes the body with WriteBodyAsync(); otherwise it evaluates any else statements with WriteElseAsync():

```csharp
/// <summary>
/// Handlebars.js like if block
/// Usages: {{#if a > b}} max {{a}} {{/if}}
///         {{#if a > b}} max {{a}} {{else}} max {{b}} {{/if}}
///         {{#if a > b}} max {{a}} {{else if b > c}} max {{b}} {{else}} max {{c}} {{/if}}
/// </summary>
public class TemplateIfBlock : TemplateBlock
{
    public override string Name => "if";

    public override async Task WriteAsync(
        TemplateScopeContext scope, PageBlockFragment block, CancellationToken token)
    {
        var result = await block.Argument.GetJsExpressionAndEvaluateToBoolAsync(scope,
            ifNone: () => throw new NotSupportedException("'if' block does not have a valid expression"));

        if (result)
        {
            await WriteBodyAsync(scope, block, token);
        }
        else
        {
            await WriteElseAsync(scope, block.ElseBlocks, token);
        }
    }
}
```

each

From what we've seen so far, the handlebars.js each block is also straightforward to implement. It iterates over a collection argument and evaluates its body with a new scope containing:

- the element's properties
- a conventional it binding for the element
- an index argument holding the element's position in the collection

```csharp
using System;
using System.Collections;
using System.Threading;
using System.Threading.Tasks;

/// <summary>
/// Handlebars.js like each block
/// Usages: {{#each customers}} {{Name}} {{/each}}
///         {{#each customers}} {{it.Name}} {{/each}}
///         {{#each customers}} Customer {{index + 1}}: {{Name}} {{/each}}
///         {{#each numbers}} {{it}} {{else}} no numbers {{/each}}
///         {{#each numbers}} {{it}} {{else if letters != null}} has letters {{else}} no numbers {{/each}}
/// </summary>
public class TemplateSimpleEachBlock : TemplateBlock
{
    public override string Name => "each";

    public override async Task WriteAsync(
        TemplateScopeContext scope, PageBlockFragment block, CancellationToken token)
    {
        var collection = (IEnumerable) block.Argument.GetJsExpressionAndEvaluate(scope,
            ifNone: () => throw new NotSupportedException("'each' block does not have a valid expression"));

        var index = 0;
        if (collection != null)
        {
            foreach (var element in collection)
            {
                // Expose the element's properties, an `it` binding and its `index`
                var scopeArgs = element.ToObjectDictionary();
                scopeArgs["it"] = element;
                scopeArgs[nameof(index)] = index++;

                var itemScope = scope.ScopeWithParams(scopeArgs);
                await WriteBodyAsync(itemScope, block, token);
            }
        }

        // Nothing was iterated (null or empty collection): render any else blocks
        if (index == 0)
        {
            await WriteElseAsync(scope, block.ElseBlocks, token);
        }
    }
}
```
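The same iteration logic translates directly to JavaScript. This hypothetical sketch mirrors TemplateSimpleEachBlock. Each element's own properties are spread into the item scope alongside `it` and `index`; primitives like numbers contribute no properties. The else branch renders when nothing was iterated. The `eachBlock` name and its function-based body/else parameters are assumptions for illustration.

```javascript
// Hypothetical model of a handlebars-like each block (not ServiceStack's code).
function eachBlock(scope, collection, body, elseBody = () => "") {
  let index = 0;
  let out = "";
  for (const element of collection ?? []) {
    // Spread object properties (if any) into the item scope, then add it/index
    const props = element !== null && typeof element === "object" ? element : {};
    const itemScope = Object.assign(Object.create(scope), props, { it: element, index: index++ });
    out += body(itemScope);
  }
  // Nothing iterated (null or empty collection): render the else branch
  return index === 0 ? elseBody(scope) : out;
}

const customers = [{ Name: "Alfreds" }, { Name: "Bon app'" }];
console.log(eachBlock({}, customers, s => `Customer ${s.index + 1}: ${s.Name}\n`));
console.log(eachBlock({}, [], s => `${s.it} `, () => "no numbers")); // no numbers
```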

Despite its terse implementation, the above Template Block can iterate over any expression that evaluates to a collection. That includes collections of objects, POCOs and strings, as well as Value Type collections like ints.

Built-in each

However, the built-in TemplateEachBlock.cs has a larger implementation to support its richer feature set. That includes async results, custom element bindings and LINQ-like syntax for maximum expressiveness. It also uses expression caching to ensure any complex argument expressions are only parsed once.

```csharp
/// <summary>
/// Handlebars.js like each block
/// Usages: {{#each customers}} {{Name}} {{/each}}
///         {{#each customers}} {{it.Name}} {{/each}}
///         {{#each num in numbers}} {{num}} {{/each}}
///         {{#each num in [1,2,3]}} {{num}} {{/each}}
///         {{#each numbers}} {{it}} {{else}} no numbers {{/each}}
///         {{#each numbers}} {{it}} {{else if letters != null}} has letters {{else}} no numbers {{/each}}
///         {{#each n in numbers where n > 5}} {{n}} {{else}} no numbers > 5 {{/each}}
///         {{#each n in numbers where n > 5 orderby n skip 1 take 2}} {{n}}{{else}}no numbers > 5{{/each}}
/// </summary>
public class TemplateEachBlock : TemplateBlock { ... }
```

By using ParseJsExpression() to parse the expression after each "LINQ modifier", each supports evaluating complex JavaScript expressions in each of its LINQ querying modifiers, e.g:

```html
{{#each c in customers
    where c.City == 'London' and c.Country == 'UK' orderby c.CompanyName descending
    skip 3 - (2 - 1)
    take 1 + 2 }}
  {{index + 1}}. {{c.CustomerId}} from <b>{{c.CompanyName}}</b> - {{c.City}}, {{c.Country}}.
{{/each}}
```
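Conceptually, the modifiers in that expression compose like a small query pipeline. The hypothetical JavaScript sketch below evaluates the same chain over an in-memory array: `where`, then `orderby ... descending`, then `skip 3 - (2 - 1)` (i.e. skip 2), then `take 1 + 2` (i.e. take 3). The sample customers are made up for illustration. The sketch says nothing about how TemplateEachBlock parses or caches its expressions.

```javascript
// Hypothetical evaluation of:
//   each c in customers where c.City == 'London' and c.Country == 'UK'
//   orderby c.CompanyName descending skip 3 - (2 - 1) take 1 + 2
function londonCustomers(customers) {
  return customers
    .filter(c => c.City === "London" && c.Country === "UK")     // where (returns a new array)
    .sort((a, b) => b.CompanyName.localeCompare(a.CompanyName))  // orderby ... descending
    .slice(3 - (2 - 1))                                          // skip 2
    .slice(0, 1 + 2);                                            // take 3
}

// Made-up sample data: six London companies plus one that the where clause filters out
const customers = ["Alpha", "Bravo", "Charlie", "Delta", "Echo", "Foxtrot"]
  .map((name, i) => ({ CustomerId: `C${i}`, CompanyName: name, City: "London", Country: "UK" }))
  .concat([{ CustomerId: "P1", CompanyName: "Zulu", City: "Paris", Country: "France" }]);

londonCustomers(customers).forEach((c, index) =>
  console.log(`${index + 1}. ${c.CustomerId} from ${c.CompanyName} - ${c.City}, ${c.Country}.`));
```

Filtering first means the sort only reorders the filtered copy, leaving the source array untouched.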

Custom bindings

When using a custom binding like {{#each c in customers}} above, the element is only accessible through the custom c binding. This is more efficient when you only need to reference a subset of the element's properties, as it avoids adding each of the element's properties to the item's execution scope.

For more live previews showcasing advanced usages of {{#each}}, check out the LINQ Examples.

raw

The {{#raw}} block is similar to handlebars.js's raw-helper. It captures the template's raw text content instead of evaluating it. That makes it ideal for emitting content containing template expressions that shouldn't be evaluated on the server, such as client-side JavaScript or Vue, Angular or Ember templates:

```html
{{#raw}}
<div id="app">
  {{ message }}
</div>
{{/raw}}
```

When called with no arguments, it renders its unprocessed raw text contents. When called with a single argument, e.g. {{#raw varname}}, it instead saves the raw text contents to the specified global (PageResult) variable. Lastly, when called with the appendTo modifier, it appends its contents to the existing variable, or initializes the variable if it doesn't exist.

This is now the preferred approach used in all .NET Core and .NET Framework Web Templates for pages and partials to append any custom JavaScript script blocks they need to include in the page, e.g:

```html
{{#raw appendTo scripts}}
<script>
  //...
</script>
{{/raw}}
```

Any captured custom scripts are then rendered at the bottom of _layout.html with:

```html
  <script src="/assets/js/default.js"></script>

  {{ scripts | raw }}
</body>
</html>
```

The implementation supporting each of these usages is contained within TemplateRawBlock.cs. It inspects the block.Argument to decide whether to capture the contents into the specified variable or write its raw string contents directly to the OutputStream.

```csharp
/// <summary>
/// Special block which captures the raw body as a string fragment
///
/// Usages: {{#raw}}emit {{ verbatim }} body{{/raw}}
///         {{#raw varname}}assigned to varname{{/raw}}
///         {{#raw appendTo varname}}appended to varname{{/raw}}
/// </summary>
public class TemplateRawBlock : TemplateBlock { ... }
```
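The three usages of raw boil down to a small decision based on the block argument. Here is a hypothetical JavaScript sketch of that decision, with a plain object standing in for the PageResult args. The argument parsing is deliberately simplified to a whitespace split. That parsing is an assumption, not how TemplateRawBlock parses its argument.

```javascript
// Hypothetical model of {{#raw}}, {{#raw varname}} and {{#raw appendTo varname}}.
// `pageArgs` stands in for the global PageResult arguments.
function rawBlock(argument, rawBody, pageArgs) {
  const parts = argument.trim().split(/\s+/).filter(Boolean);
  if (parts.length === 0) {
    return rawBody; // no argument: emit the unevaluated text as-is
  }
  if (parts[0] === "appendTo" && parts.length === 2) {
    const name = parts[1];
    // Append to an existing variable, or initialize it if missing
    pageArgs[name] = (pageArgs[name] ?? "") + rawBody;
  } else {
    pageArgs[parts[0]] = rawBody; // assign to the named variable
  }
  return ""; // captured content is not written to the output
}

const args = {};
rawBlock("appendTo scripts", "<script>a()</script>", args);
rawBlock("appendTo scripts", "<script>b()</script>", args);
console.log(args.scripts);                         // <script>a()</script><script>b()</script>
console.log(rawBlock("", "{{ message }}", args));  // {{ message }}
```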

capture

The {{#capture}} block in TemplateCaptureBlock.cs is similar to the raw block. The difference is that instead of using its raw text contents, it evaluates its contents and captures the output.

It also supports evaluating the contents with scoped arguments: each property in the object dictionary is added to the scoped arguments the template is executed with:

```csharp
/// <summary>
/// Captures the output and assigns it to the specified variable.
/// Accepts an optional Object Dictionary as scope arguments when evaluating body.
///
/// Usages: {{#capture output}} {{#each args}} - [{{it}}](/path?arg={{it}}) {{/each}} {{/capture}}
///         {{#capture output {nums:[1,2,3]} }} {{#each nums}} {{it}} {{/each}} {{/capture}}
///         {{#capture appendTo output {nums:[1,2,3]} }} {{#each nums}} {{it}} {{/each}} {{/capture}}
/// </summary>
public class TemplateCaptureBlock : TemplateBlock { ... }
```

With this, we can dynamically generate some markdown, capture it, and convert the resulting markdown to HTML using the markdown Filter transformer:

```html
{{#capture todoMarkdown { items:[1,2,3] } }}
## TODO List
{{#each items}}
- Item {{it}}
{{/each}}
{{/capture}}

{{todoMarkdown | markdown}}
```

markdown

The {{#markdown}} block in MarkdownTemplatePlugin.cs makes it even easier to embed markdown content directly in web pages. It works as you'd expect, converting the content in a markdown block into HTML:

```html
{{#markdown}}
## TODO List

- Item 1
- Item 2
- Item 3
{{/markdown}}
```

This is now the easiest and preferred way to embed Markdown in content-rich hybrid web pages, like the Razor Rockstars content pages or even the blocks.html WebPage itself, which makes extensive use of markdown.

As the markdown block only supports 2 usages, its implementation is much simpler than the capture block above:

```csharp
/// <summary>
/// Converts markdown contents to HTML using the configured MarkdownConfig.Transformer.
/// If a variable name is specified the HTML output is captured and saved instead.
///
/// Usages: {{#markdown}} ## The Heading {{/markdown}}
///         {{#markdown content}} ## The Heading {{/markdown}} HTML: {{content}}
/// </summary>
public class TemplateMarkdownBlock : TemplateBlock
{
    public override string Name => "markdown";

    public override async Task WriteAsync(
        TemplateScopeContext scope, PageBlockFragment block, CancellationToken token)
    {
        // The body of a markdown block is treated as a single string fragment
        var strFragment = (PageStringFragment)block.Body[0];

        if (!block.Argument.IsNullOrWhiteSpace())
        {
            // {{#markdown varname}}: save the HTML into a PageResult argument
            Capture(scope, block, strFragment);
        }
        else
        {
            // {{#markdown}}: write the HTML straight to the OutputStream
            await scope.OutputStream.WriteAsync(MarkdownConfig.Transform(strFragment.ValueString), token);
        }
    }

    private static void Capture(
        TemplateScopeContext scope, PageBlockFragment block, PageStringFragment strFragment)
    {
        var literal = block.Argument.AdvancePastWhitespace();
        literal = literal.ParseVarName(out var name);
        var nameString = name.ToString();

        // Stored as a raw string so the HTML isn't encoded again when rendered
        scope.PageResult.Args[nameString] = MarkdownConfig.Transform(strFragment.ValueString).ToRawString();
    }
}
```

Use Alternative Markdown Implementation

By default, ServiceStack uses an interned implementation of MarkdownDeep for rendering markdown. You can get ServiceStack to use an alternate Markdown implementation by overriding MarkdownConfig.Transformer.

E.g. to use the richer Markdig implementation, install the Markdig NuGet package:

```
PM> Install-Package Markdig
```

Then assign a custom IMarkdownTransformer:

```csharp
public class MarkdigTransformer : IMarkdownTransformer
{
    private Markdig.MarkdownPipeline Pipeline { get; } =
        Markdig.MarkdownExtensions.UseAdvancedExtensions(new Markdig.MarkdownPipelineBuilder()).Build();

    public string Transform(string markdown) => Markdig.Markdown.ToHtml(markdown, Pipeline);
}

// e.g. on App Startup:
MarkdownConfig.Transformer = new MarkdigTransformer();
```

partial

The {{#partial}} block in TemplatePartialBlock.cs lets you create In Memory partials. This is useful when working with partial filters like selectPartial, as it lets you declare multiple partials within the same page instead of defining each one in its own file, e.g:

```html
{{#partial order}}
  Order {{ it.OrderId }}: {{ it.OrderDate | dateFormat }}
{{/partial}}

{{#partial customer}}
  Customer {{ it.CustomerId }} {{ it.CompanyName | raw }}
  {{ it.Orders | selectPartial: order }}{{/partial}}

{{ customers
   | where => it.Region == 'WA'
   | assignTo: waCustomers
}}

Customers from Washington and their orders:
{{ waCustomers | selectPartial: customer }}
```

See the docs on Inline partials for a live comparison of using in-memory partials.

html

The html blocks pack a suite of generally useful functionality commonly needed when generating HTML. All html blocks inherit the same functionality. Blocks are registered for the most popular HTML elements, currently:

ul, ol, li, div, p, form, input, select, option, textarea, button, table, tr, td, thead, tbody, tfoot, dl, dt, dd, span, a, img, em, b, i, strong.

Ultimately they reduce boilerplate, e.g. you can generate a menu list with a single block:

```html
{{#ul {each:items, id:'menu', class:'nav'} }}
  <li>{{it}}</li>
{{/ul}}
```

A more advanced example showcasing many of its different features is below:

```html
{{#ul {if:hasAccess, each:items, where:'Age > 27',
       class:['nav', !disclaimerAccepted ? 'blur' : ''], id:`menu-${id}`, selected:true} }}
  {{#li {class: {alt:isOdd(index), active:Name==highlight} }} {{Name}} {{/li}}
{{else}}
  <div>no items</div>
{{/ul}}
```

This example utilizes many of the features in html blocks, namely:

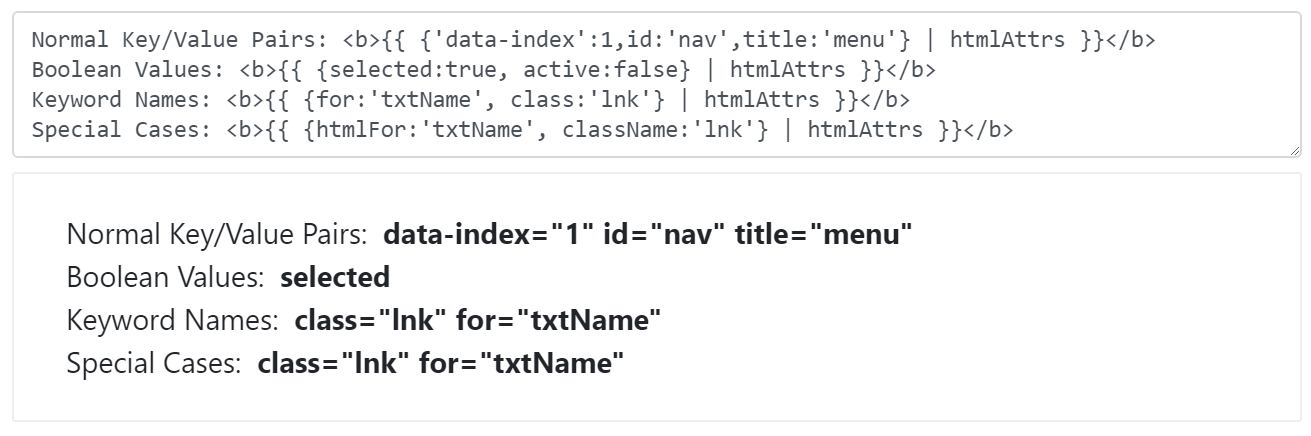

- if - only render the template if truthy
- each - render the template for each item in the collection
- where - filter the collection
- it - change the name of each element's it binding
- class - special property implementing Vue's special class bindings, where an object literal can be used to emit a list of class names for all truthy properties, an array can be used to emit a list of class names, or you can instead use a string of class names.

All other properties like id and selected are treated as HTML attributes. If the property is a boolean like selected, it's only emitted when true; all other HTML attribute names and values are emitted as normal.
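The class binding rules and the boolean-attribute rule described above can be sketched in JavaScript. The class rules are: an object literal emits its truthy keys, an array emits its non-empty entries, and a string is used as-is. These are hypothetical helpers modeled on the described semantics, not the implementation behind the html blocks or the htmlClass filter. Attribute-value escaping is omitted for brevity.

```javascript
// Hypothetical helpers modeled on Vue-style class bindings (not ServiceStack's code).
function classNames(value) {
  if (value == null) return "";
  if (typeof value === "string") return value;                       // string: as-is
  if (Array.isArray(value)) return value.filter(Boolean).join(" ");  // array: non-empty entries
  return Object.keys(value).filter(k => value[k]).join(" ");         // object: truthy keys
}

// Renders attributes: booleans are emitted only when true, others as name="value".
// NOTE: values are not HTML-escaped in this sketch.
function htmlAttrs(attrs) {
  return Object.entries(attrs)
    .map(([name, value]) => {
      if (name === "class") {
        const cls = classNames(value);
        return cls ? `class="${cls}"` : "";
      }
      if (typeof value === "boolean") return value ? name : "";
      return `${name}="${value}"`;
    })
    .filter(Boolean)
    .join(" ");
}

const disclaimerAccepted = true;
console.log(htmlAttrs({ class: ["nav", !disclaimerAccepted ? "blur" : ""], id: "menu-1", selected: true }));
// class="nav" id="menu-1" selected
```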

For a better illustration we can implement the same functionality above without using any html blocks:

```html
{{#if hasAccess}}
  {{ items | where => it.Age > 27 | assignTo: items }}
  {{#if !isEmpty(items) }}
    <ul {{ ['nav', !disclaimerAccepted ? 'blur' : ''] | htmlClass }} id="menu-{{id}}">
      {{#each items}}
        <li {{ {alt:isOdd(index), active:Name==highlight} | htmlClass }}>{{Name}}</li>
      {{/each}}
    </ul>
  {{else}}
    <div>no items</div>
  {{/if}}
{{/if}}
```

The same functionality using C# Razor with the latest C# language features enabled can be implemented with:

```cshtml
@{
    var persons = (items as IEnumerable<Person>)?.Where(x => x.Age > 27);
}
@if (hasAccess)
{
    if (persons?.Any() == true)
    {
        <ul id="menu-@id" class="nav @(!disclaimerAccepted ? "blur" : "")">
            @{
                var index = 0;
            }
            @foreach (var person in persons)
            {
                <li class="@(index++ % 2 == 1 ? "alt " : "")@(person.Name == highlight ? "active" : "")">
                    @person.Name
                </li>
            }
        </ul>
    }
    else
    {
        <div>no items</div>
    }
}
```

Removing Blocks

Like everything else in Templates, all built-in Blocks can be removed. To make it easy to remove groups of related blocks, you can just remove the plugin that registered them using the RemovePlugins() API, e.g:

```csharp
var context = new TemplateContext()
    .RemovePlugins(x => x is TemplateDefaultBlocks) // Remove default blocks
    .RemovePlugins(x => x is TemplateHtmlBlocks)    // Remove all html blocks
    .Init();
```

Or use the OnAfterPlugins callback to remove any individual blocks or filters that were added by any plugin.

E.g. the capture block can be removed with:

```csharp
var context = new TemplateContext {
    OnAfterPlugins = ctx => ctx.RemoveBlocks(x => x.Name == "capture")
}
.Init();
```

Page Based Routing

Another convenient feature that makes developing websites with Templates more enjoyable is its new support for conventional page-based routing, which enables pretty URLs inferred from the page's file and directory names.

To recap, each page can be requested with or without its .html extension:

| path | page |
|---|---|
| /db | /db.html |
| /db.html | /db.html |
| /posts/new | /posts/new.html |
| /posts/new.html | /posts/new.html |

The default route / maps to the index.html in the directory if it exists, e.g:

| path | page |
|---|---|
| / | /index.html |
| /index.html | /index.html |

Dynamic Page Routes

In addition to these static conventions, Template Pages now support Nuxt-like Dynamic Routes. Any file or directory name prefixed with an _underscore enables a wildcard path, and the matching path component is assigned to an argument with that name minus the underscore:

| path | page | arguments |
|---|---|---|
| /ServiceStack | /_user/index.html | user=ServiceStack |
| /posts/markdown-example | /posts/_slug/index.html | slug=markdown-example |
| /posts/markdown-example/edit | /posts/_slug/edit.html | slug=markdown-example |
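Here is a rough model of how these conventions could resolve a request path to a page file. In this hypothetical JavaScript sketch, `pages` is a flat list of file paths. Exact matches (with or without .html, or a directory's index.html) win over _underscore wildcards, and files with layout or partial in their names are skipped. The precedence order, tie-breaking (first match in list order) and data shape are all assumptions for illustration, not ServiceStack's actual resolution algorithm.

```javascript
// Hypothetical page-based routing model (not ServiceStack's resolver).
function routeSegments(page) {
  const segs = page.replace(/\.html$/, "").split("/").filter(Boolean);
  if (segs[segs.length - 1] === "index") segs.pop(); // /dir/index.html serves /dir
  return segs;
}

// Layouts and partials stay hidden and never take part in wildcard matching
function isHidden(page) {
  const file = page.split("/").pop();
  return file.includes("layout") || file.includes("partial");
}

function resolvePage(pages, path) {
  if (pages.includes(path)) return { page: path, args: {} }; // exact file, e.g. /db.html
  const reqSegs = path.replace(/\.html$/, "").split("/").filter(Boolean);
  let best = null;
  for (const page of pages) {
    if (isHidden(page)) continue;
    const segs = routeSegments(page);
    if (segs.length !== reqSegs.length) continue;
    const args = {};
    let wildcards = 0;
    const ok = segs.every((seg, i) => {
      if (seg.startsWith("_")) { args[seg.slice(1)] = reqSegs[i]; wildcards++; return true; }
      return seg === reqSegs[i];
    });
    // Prefer the match with the fewest wildcards (static pages beat dynamic ones)
    if (ok && (best === null || wildcards < best.wildcards)) best = { page, args, wildcards };
  }
  return best && { page: best.page, args: best.args };
}

const pages = ["/index.html", "/db.html", "/posts/new.html", "/_user/index.html",
  "/posts/_slug/index.html", "/posts/_slug/edit.html", "/_layout.html", "/_menu-partial.html"];
console.log(resolvePage(pages, "/posts/markdown-example/edit"));
```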

Layout and partial recommended naming conventions

The _underscore prefix used to declare wildcard pages is also used to declare "hidden" pages. To distinguish hidden partials and layouts from wildcard pages, the recommendation is to include layout or partial in their names, e.g:

- _layout.html

- _alt-layout.html

- _menu-partial.html

Pages with layout or partial in their name remain hidden and are ignored in wildcard path resolution.

If following the recommended _{name}-partial.html naming convention, you'll be able to reference them using just their name:

```html
{{ 'menu' | partial }}            // Equivalent to:
{{ '_menu-partial' | partial }}
```
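The lookup implied by that convention can be sketched as trying the name as given, then the `_{name}-partial` form. This hypothetical JavaScript helper and its lookup order are assumptions for illustration. The document only states that both references resolve to the same partial.

```javascript
// Hypothetical partial lookup following the _{name}-partial.html convention.
function resolvePartial(files, name) {
  const candidates = [`${name}.html`, `_${name}-partial.html`];
  return candidates.find(file => files.includes(file)) ?? null;
}

const files = ["_layout.html", "_menu-partial.html", "index.html"];
console.log(resolvePartial(files, "menu"));          // _menu-partial.html
console.log(resolvePartial(files, "_menu-partial")); // _menu-partial.html
```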

Init Pages

As Template Pages continue growing in functionality, there are fewer places where you need to reach for C#, and support for Init pages reduces that need even further.

Just as Global.asax.cs can be used to run Startup initialization logic in ASP.NET Web Applications, and Startup.cs in .NET Core Apps, you can now add a /_init.html page for Templates logic that's only executed once on Startup.

This is used in the Blog Web App's _init.html. It creates a new blog.sqlite database if one doesn't exist, seeded with the UserInfo and Post Tables and initial data, e.g:

```html
{{ `CREATE TABLE IF NOT EXISTS "UserInfo"
(
  "UserName" VARCHAR(8000) PRIMARY KEY,
  "DisplayName" VARCHAR(8000) NULL,
  "AvatarUrl" VARCHAR(8000) NULL,
  "AvatarUrlLarge" VARCHAR(8000) NULL,
  "Created" VARCHAR(8000) NOT NULL,
  "Modified" VARCHAR(8000) NOT NULL
);`
| dbExec
}}

{{ dbScalar(`SELECT COUNT(*) FROM Post`) | assignTo: postsCount }}

{{#if postsCount == 0 }}
========================================
Seed with initial UserInfo and Post data
========================================
...
{{/if}}

{{ htmlError }}
```

The output of the _init page is captured in the initout argument. That argument can later be inspected like any normal template argument, as seen in log.html:

```html
<div>
  Output from init.html:
  <pre>{{initout | raw}}</pre>
</div>
```

If any of the SQL Statements throws an Exception, the {{ htmlError }} filter will display it. The error can later be viewed in the /log page above.

Buffered View Pages

After we noticed performance regressions from non-buffered Templates output when hosted behind a reverse proxy, Template Pages served by SharpPageFeature are now buffered. Having Templates write directly to the OutputStream showed marginal gains when accessed directly from Kestrel. However, it hurt page download speed when running behind a reverse proxy, which is the current recommendation for hosting .NET Core Web Apps.

As unbuffered writes don't yield performance improvements universally, Template Pages served by ServiceStack's SharpPageFeature are now buffered (using a pooled MemoryStream) before being written to the HTTP OutputStream. This change also makes Template Pages more flexible, enabling features like Dynamic Sharp APIs that weren't possible before.

Note: only *.html Pages evaluated by ServiceStack in response to HTTP Requests are buffered. Rendering Templates directly using PageResult or context.EvaluateTemplate() continues to write to the specified OutputStream without buffering.

Dynamic Sharp APIs

In addition to being a productive dynamic language for generating HTML pages, Template Pages can also be used to rapidly develop Web APIs. These APIs can take advantage of the new Dynamic Page Based Routes to expose data-driven JSON APIs under ideal pretty URLs. They also use the same Live Development workflow, with no C# Types to define and no builds to run, as all development happens in real-time while the App is running.

The only difference between a Template Page that generates HTML and one that returns an API Response is that Sharp APIs return a value using the return filter.

For comparison, to create a Hello World C# ServiceStack Service you would typically create a Request DTO, Response DTO and a Service implementation:

```csharp
using ServiceStack;

[Route("/hello/{Name}")]
public class Hello : IReturn<HelloResponse>
{
    public string Name { get; set; }
}

public class HelloResponse
{
    public string Result { get; set; }
}

public class HelloService : Service
{
    public object Any(Hello request) => new HelloResponse { Result = $"Hello, {request.Name}!" };
}
```

/hello API Page

Usage: /hello/

An API which returns the same wire response as above can be implemented in Sharp APIs by creating a page at /hello/_name/index.html that includes the 1-liner:

```html
{{ { result: `Hello, ${name}!` } | return }}
```

This supports the same content negotiation as a ServiceStack Service: calling it in a browser will generate a human-friendly HTML Page.

Calling it with a JSON HTTP client that sends an Accept: application/json HTTP Header, or with a ?format=json query string, will instead render the API response in the JSON Format.

Alternatively, you can force a JSON Response by specifying the Content Type in the return arguments:

```html
{{ { result: `Hello, ${name}!` } | return({ format: 'json' }) }}
// Equivalent to:
{{ { result: `Hello, ${name}!` } | return({ contentType: 'application/json' }) }}
```

More API examples showing the versatility of this feature are contained in the new blog.web-app.io, which uses only Templates and Dynamic Sharp APIs to implement all of its functionality.

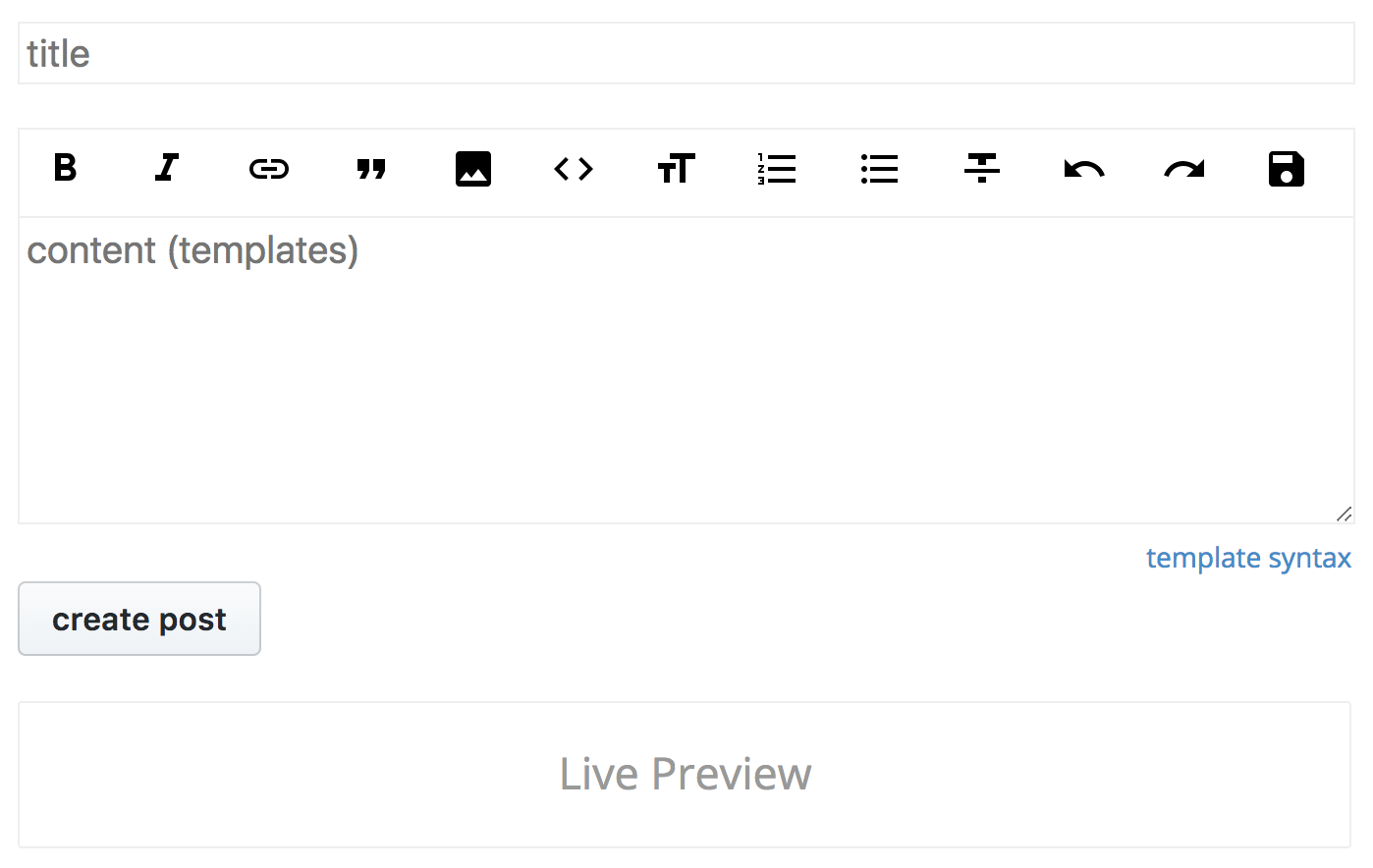

/preview API Page

Usage: /preview?content=

The /preview.html API page uses this to force a plain-text response with:

```html
{{ content | evalTemplate({use:{plugins:'MarkdownTemplatePlugin'}}) | assignTo:response }}
{{ response | return({ contentType:'text/plain' }) }}
```

The preview API above provides the new Blog Web App's Live Preview feature. It renders any #Script provided in the content Query String or HTTP Post Form Data, e.g:

```html
<ul>
  <li>
    <a href="http://blog.web-app.io/preview?content={{10|times|select:{pow(index,2)},}}">
      /preview?content={{10|times|select:{pow(index,2)},}}
    </a>
  </li>
</ul>
```

This renders the plain text response:

```
0,1,4,9,16,25,36,49,64,81,
```
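Read as plain JavaScript, the expression `10|times|select:{pow(index,2)},` generates the sequence 0..9 and, for each item, emits its index squared followed by a comma. Here is a rough JavaScript equivalent. It is an interpretation of the filter chain for readers, not how #Script evaluates it.

```javascript
// Rough JavaScript reading of the #Script expression: 10 | times | select: {pow(index,2)},
const output = Array.from({ length: 10 }, (_, index) => `${Math.pow(index, 2)},`).join("");
console.log(output); // 0,1,4,9,16,25,36,49,64,81,
```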

/_user/api Page

Usage: //api

The /_user/api.html API page shows how easy it is to create data-driven APIs. You can literally return the results of a parameterized SQL query using the dbSelect filter:

```html
{{ `SELECT *
    FROM Post p INNER JOIN UserInfo u on p.CreatedBy = u.UserName
    WHERE UserName = @user
    ORDER BY p.Created DESC`
   | dbSelect({ user })
   | return }}
```

The user argument is populated by the dynamic route from the _user directory name, which will let you view all @ServiceStack posts with:

The API also benefits from ServiceStack's multiple built-in formats, where the same API can be returned in:

- /ServiceStack/api?format=json

- /ServiceStack/api?format=csv

- /ServiceStack/api?format=xml

- /ServiceStack/api?format=jsv

/posts/_slug/api Page

Usage: /posts//api

The /posts/_slug/api.html page shows an example of using the httpResult filter to return a custom HTTP Response. If no post with the specified slug exists, it returns a 404 Post was not found HTTP Response:

```html
{{ `SELECT *
    FROM Post p INNER JOIN UserInfo u on p.CreatedBy = u.UserName
    WHERE Slug = @slug
    ORDER BY p.Created DESC`
   | dbSingle({ slug })
   | assignTo: post
}}

{{ post ?? httpResult({ status:404, statusDescription:'Post was not found' })
   | return }}
```

The httpResult filter returns a ServiceStack HttpResult which allows for the following customizations:

```html
httpResult({
  status: 404,            // can also use a .NET HttpStatusCode enum name, e.g. 'NotFound'
  statusDescription: 'Post was not found',
  response: post,
  format: 'json',
  contentType: 'application/json',
  'X-Powered-By': '#Script',
})
```

Any other unknown arguments like 'X-Powered-By' are returned as HTTP Response Headers.
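The rule that unknown arguments become headers can be modeled as splitting the argument object into known options and everything else. In this hypothetical JavaScript sketch, the set of known option names is taken from the example above; the real filter may recognize others. The status-name table is an abbreviated assumption.

```javascript
// Hypothetical model of how httpResult() arguments could be split
// (known option names taken from the example above; not ServiceStack's code).
const KNOWN_OPTIONS = new Set(["status", "statusDescription", "response", "format", "contentType"]);
const STATUS_NAMES = { OK: 200, MovedPermanently: 301, NotFound: 404 }; // abbreviated table

function toHttpResult(args) {
  const result = { headers: {} };
  for (const [key, value] of Object.entries(args)) {
    if (KNOWN_OPTIONS.has(key)) result[key] = value;
    else result.headers[key] = value; // unknown args become HTTP Response Headers
  }
  // status may be given as a number or as an HttpStatusCode enum name
  if (typeof result.status === "string") result.status = STATUS_NAMES[result.status];
  return result;
}

const notFound = toHttpResult({ status: "NotFound", statusDescription: "Post was not found", "X-Powered-By": "#Script" });
console.log(notFound.status, notFound.headers); // 404 { 'X-Powered-By': '#Script' }
```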

Returning the httpResult above behaves similarly to customizing an HTTP response using return arguments:

```html
{{ post | return({ format:'json', 'X-Powered-By':'#Script' }) }}
```

The explicit httpResult filter is useful for returning a custom HTTP Response without a Response Body. For example, after a user successfully creates a new Post, the New Post page uses httpResult to redirect back to that user's posts page:

```html
{{#if success}}
  {{ httpResult({ status:301, Location:`/${userName}` }) | return }}
{{/if}}
```

For more examples and info on Sharp APIs, check out the Sharp APIs docs.

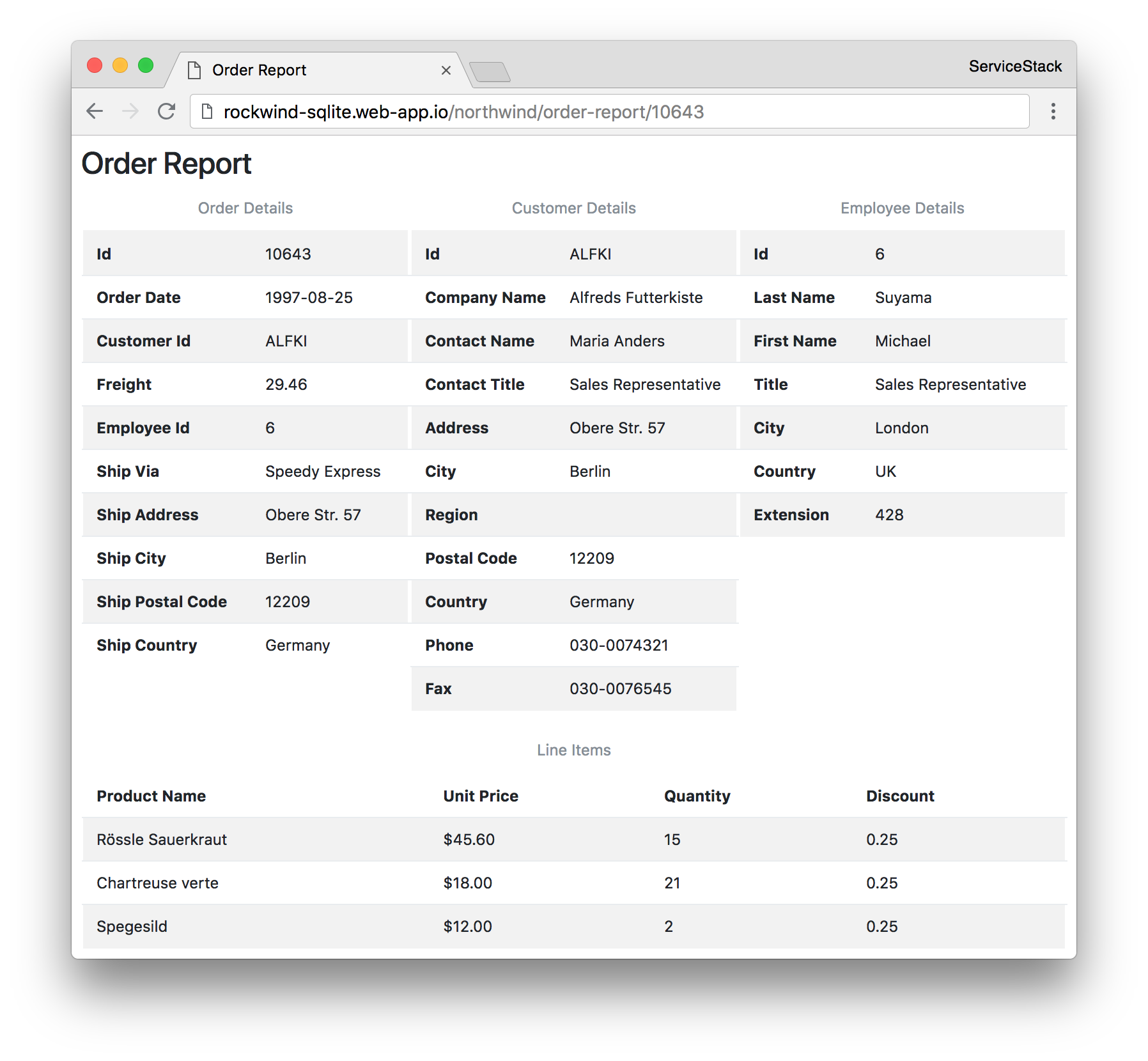

Order Report Example

The combination of features in the new Templates makes easy work of typically tedious tasks. E.g. if you were tasked with creating a report containing all information about a Northwind Order displayed on a single page, you could create a new page at:

packed with all the Queries you need, executed with the DB Scripts and displayed with the HTML Scripts:

```html
<!--
title: Order Report
-->

{{ `SELECT o.Id, OrderDate, CustomerId, Freight, e.Id as EmployeeId, s.CompanyName as ShipVia,
           ShipAddress, ShipCity, ShipPostalCode, ShipCountry
      FROM ${sqlQuote("Order")} o
           INNER JOIN
           Employee e ON o.EmployeeId = e.Id
           INNER JOIN
           Shipper s ON o.ShipVia = s.Id
     WHERE o.Id = @id`
  | dbSingle({ id }) | assignTo: order }}

{{#with order}}
  {{ "table table-striped" | assignTo: className }}
  <style>table {border: 5px solid transparent} th {white-space: nowrap}</style>

  <div style="display:flex">
    {{ order | htmlDump({ caption: 'Order Details', className }) }}
    {{ `SELECT * FROM Customer WHERE Id = @CustomerId`
       | dbSingle({ CustomerId }) | htmlDump({ caption: `Customer Details`, className }) }}
    {{ `SELECT Id,LastName,FirstName,Title,City,Country,Extension FROM Employee WHERE Id = @EmployeeId`
       | dbSingle({ EmployeeId }) | htmlDump({ caption: `Employee Details`, className }) }}
  </div>

  {{ `SELECT p.ProductName, ${sqlCurrency("od.UnitPrice")} UnitPrice, Quantity, Discount
        FROM OrderDetail od
             INNER JOIN
             Product p ON od.ProductId = p.Id
       WHERE OrderId = @id`
     | dbSelect({ id })
     | htmlDump({ caption: "Line Items", className }) }}
{{else}}
  {{ `There is no Order with id: ${id}` }}
{{/with}}
```

This will let you view the complete details of any order at the following URL:

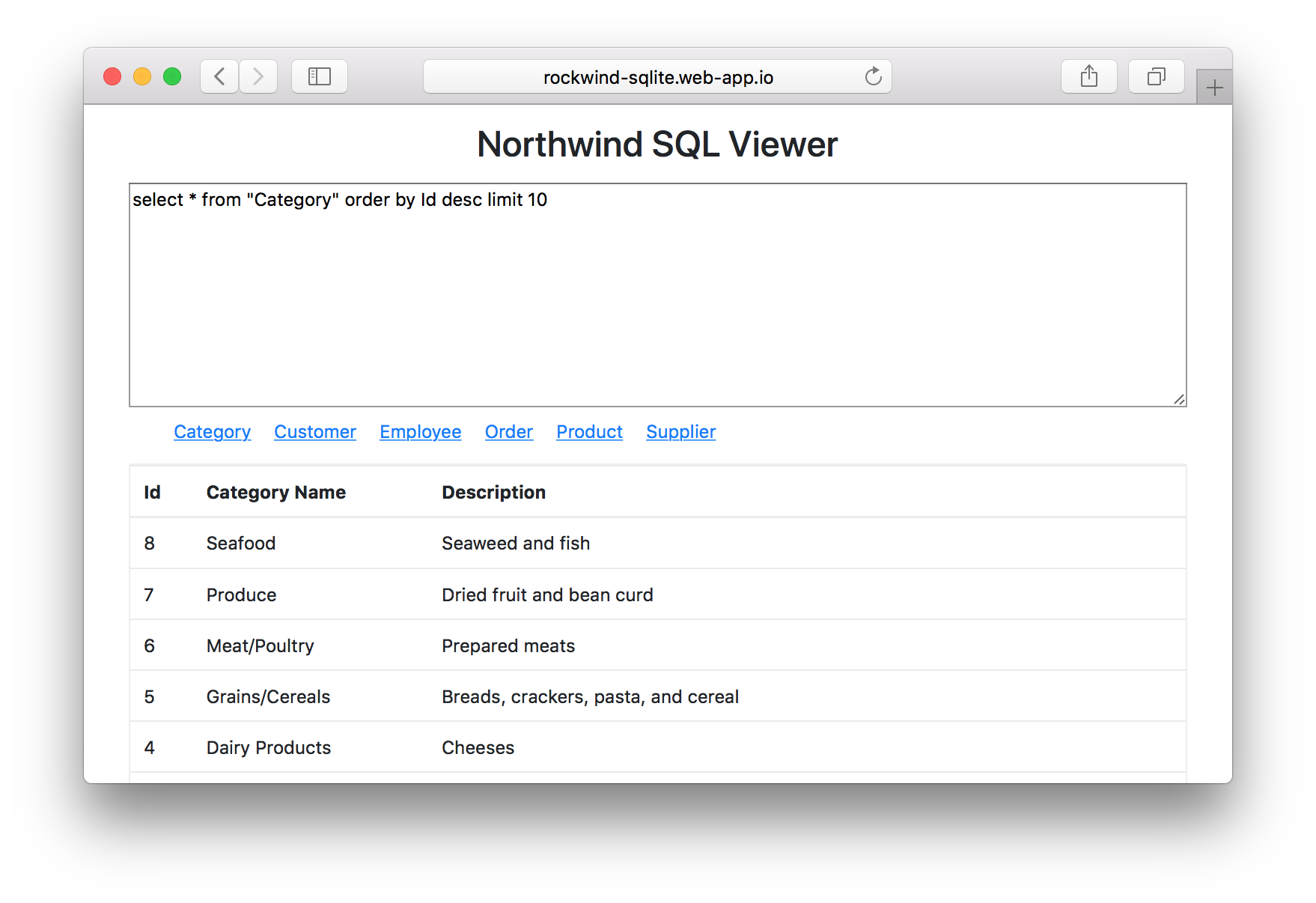

SQL Studio Example

To take the ad hoc SQL Query example even further, it also becomes trivial to implement a SQL Viewer to run ad hoc queries on your App's configured database.

The Northwind SQL Viewer above was developed using the 2 Template Pages below:

/northwind/sql/index.html

A Template Page that renders the UI. It includes shortcut links to quickly see the last 10 rows of each table, and a <textarea/> to capture the SQL Query. The query is sent to an API on every keystroke, and the results are displayed instantly:

```html
<h2>Northwind SQL Viewer</h2>

<textarea name="sql">select * from "Customer" order by Id desc limit 10</textarea>

<ul class="tables">
  <li>Category</li>
  <li>Customer</li>
  <li>Employee</li>
  <li>Order</li>
  <li>Product</li>
  <li>Supplier</li>
</ul>

<div class="preview"></div>

<style>/*...*/</style>

<script>
let textarea = document.querySelector("textarea");

let listItems = document.querySelectorAll('.tables li');
for (let i=0; i<listItems.length; i++) {
  listItems[i].addEventListener('click', function(e){
    var table = e.target.innerHTML;
    textarea.value = 'select * from "' + table + '" order by Id desc limit 10';
    textarea.dispatchEvent(new Event("input", { bubbles: true, cancelable: true }));
  });
}

// Enable Live Preview of SQL
textarea.addEventListener("input", livepreview, false);
livepreview({ target: textarea });

function livepreview(e) {
  let el = e.target;
  let sel = '.preview';
  if (el.value.trim() == "") {
    document.querySelector(sel).innerHTML = "";
    return;
  }
  let formData = new FormData();
  formData.append("sql", el.value);
  fetch("api", {
    method: "post",
    body: formData
  }).then(function(r) { return r.text(); })
    .then(function(r) { document.querySelector(sel).innerHTML = r; });
}
</script>
```

/northwind/sql/api.html

All that's left is to implement the API. It first uses the isUnsafeSql DB Script to check that the SQL does not contain any destructive operations. If the SQL is safe, the API executes it with the dbSelect DB Script, generates an HTML Table with htmlDump, and returns the partial HTML fragment with return:

```html
{{#if isUnsafeSql(sql) }}
  {{ `<div class="alert alert-danger">Potentially unsafe SQL detected</div>` | return }}
{{/if}}

{{ sql | dbSelect | htmlDump | return }}
```
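The following TypeScript sketch illustrates the guard-then-execute flow of api.html, using a keyword-denylist check in the spirit of isUnsafeSql. The keyword list, the names isUnsafeSqlSketch and handleSqlRequest, and the tokenizing approach are all hypothetical. ServiceStack's actual isUnsafeSql implementation may work differently.

```typescript
// Hypothetical denylist of destructive statement keywords (an assumption,
// not ServiceStack's actual list).
const UNSAFE_KEYWORDS = new Set<string>([
  "insert", "update", "delete", "drop", "alter", "create",
  "truncate", "merge", "grant", "revoke", "exec", "execute",
]);

// Returns true if any word token in the SQL matches a denylisted keyword.
// Because whole tokens are compared, identifiers such as "updated_at" are
// not flagged. Keywords inside string literals ARE flagged, which is a
// deliberately conservative false positive.
function isUnsafeSqlSketch(sql: string): boolean {
  const tokens = sql.toLowerCase().match(/[a-z_][a-z0-9_]*/g) ?? [];
  return tokens.some(t => UNSAFE_KEYWORDS.has(t));
}

// Mirrors api.html: reject unsafe SQL, otherwise hand it to a query runner.
// `runQuery` is a hypothetical stand-in for `dbSelect | htmlDump`.
function handleSqlRequest(sql: string, runQuery: (sql: string) => string): string {
  if (isUnsafeSqlSketch(sql)) {
    return '<div class="alert alert-danger">Potentially unsafe SQL detected</div>';
  }
  return runQuery(sql);
}
```

A denylist like this errs on the side of rejecting queries, so it may refuse harmless SQL that mentions a keyword inside a string literal.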

Live Development Workflow

Thanks to the live development workflow of Template Pages, this is the quickest way we've ever been able to implement this kind of functionality. All development can happen at runtime with no compilation or builds. The result is a highly productive iterative workflow for implementing common functionality, such as viewing ad hoc SQL Queries in Excel. It is also well suited to rapidly prototyping APIs that Client Applications can consume immediately, before formalizing them into Typed ServiceStack Services that take advantage of ServiceStack's rich typed metadata and ecosystem.

New in Sharp Apps

Any functionality added to #Script is inherited by Sharp Apps, ServiceStack's solution for developing entire .NET Core Web Apps without compilation, using just #Script.

This release also includes a number of new features that make Web Apps even more capable:

Customizable Auth Providers

Authentication can now be configured using plain-text config in your app.settings. First, register the AuthFeature plugin as normal by specifying it in the features list:

features AuthFeature

Then use AuthFeature.AuthProviders to specify which Auth Providers you want registered, e.g.:

AuthFeature.AuthProviders TwitterAuthProvider, GithubAuthProvider

Each Auth Provider checks the Web App's app.settings for the provider-specific configuration it needs. For example, to configure both the Twitter and GitHub Auth Providers you would populate it with your OAuth Apps' details:

```
oauth.RedirectUrl http://127.0.0.1:5000/
oauth.CallbackUrl http://127.0.0.1:5000/auth/{0}
oauth.twitter.ConsumerKey {Twitter App Consumer Key}
oauth.twitter.ConsumerSecret {Twitter App Consumer Secret Key}
oauth.github.ClientId {GitHub Client Id}
oauth.github.ClientSecret {GitHub Client Secret}
oauth.github.Scopes {GitHub Auth Scopes}
```
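Each app.settings line is a key followed by its value. The hypothetical TypeScript sketch below shows how those lines could be parsed and grouped per provider. It is not ServiceStack's actual code, and it makes two assumptions: each provider name is derived by lowercasing the class name minus its AuthProvider suffix, and the {0} in oauth.CallbackUrl is replaced with that provider name.

```typescript
interface OAuthProviderConfig {
  provider: string;                  // e.g. "twitter"
  redirectUrl: string | undefined;   // shared oauth.RedirectUrl
  callbackUrl: string | undefined;   // oauth.CallbackUrl with {0} filled in
  settings: Record<string, string>;  // keys found under oauth.{provider}.
}

// Parses app.settings-style text: each line is a key, whitespace, then a value.
// Blank lines and lines without a value are skipped.
function parseAppSettings(text: string): Map<string, string> {
  const settings = new Map<string, string>();
  for (const raw of text.split(/\r?\n/)) {
    const match = /^(\S+)\s+(.+)$/.exec(raw.trim());
    if (match) settings.set(match[1], match[2].trim());
  }
  return settings;
}

// Resolves each provider listed in AuthFeature.AuthProviders to its config.
function resolveAuthProviders(settings: Map<string, string>): OAuthProviderConfig[] {
  const list = settings.get("AuthFeature.AuthProviders") ?? "";
  const callbackTemplate = settings.get("oauth.CallbackUrl");
  return list
    .split(",")
    .map(s => s.trim())
    .filter(s => s !== "")
    .map(typeName => {
      // Assumption: "TwitterAuthProvider" -> "twitter", "GithubAuthProvider" -> "github"
      const provider = typeName.replace(/AuthProvider$/, "").toLowerCase();
      const prefix = `oauth.${provider}.`;
      const providerSettings: Record<string, string> = {};
      settings.forEach((value, key) => {
        if (key.startsWith(prefix)) providerSettings[key.slice(prefix.length)] = value;
      });
      return {
        provider,
        redirectUrl: settings.get("oauth.RedirectUrl"),
        callbackUrl: callbackTemplate?.replace("{0}", provider),
        settings: providerSettings,
      };
    });
}
```

With the settings shown above, resolveAuthProviders would yield a twitter entry whose callbackUrl is http://127.0.0.1:5000/auth/twitter.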

Customizable Markdown Providers

By default, Web Apps now use the Markdig implementation to render Markdown. You can switch back to MarkdownDeep, the built-in Markdown provider that ServiceStack uses, with:

markdownProvider MarkdownDeep

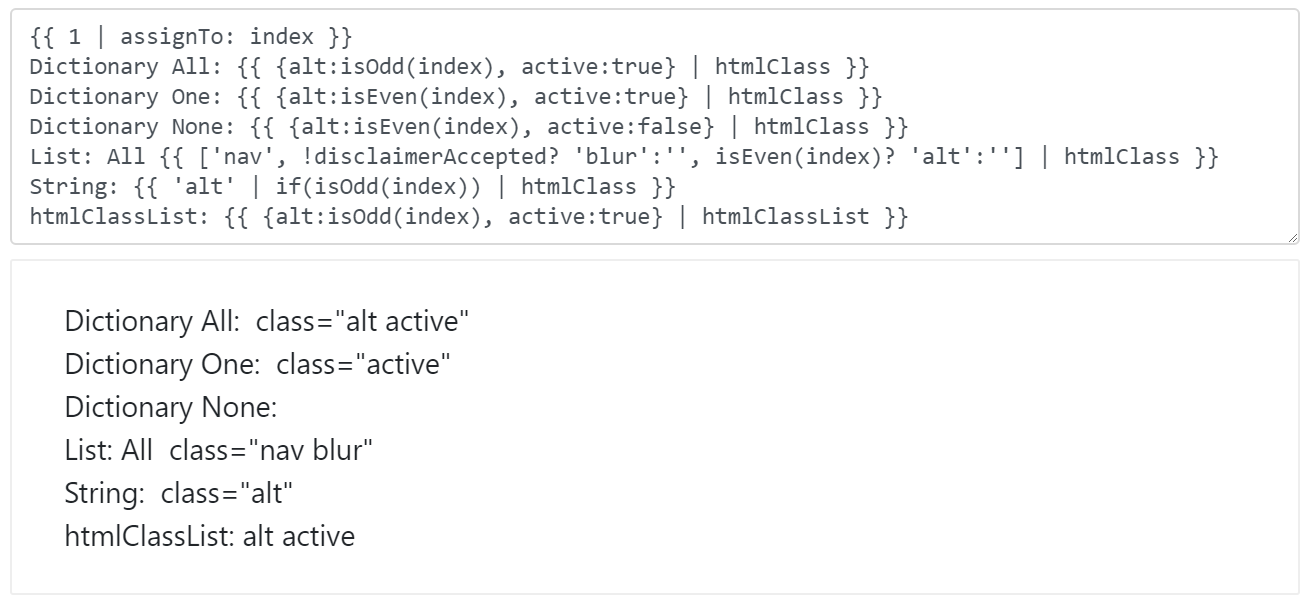

Rich Template Config Arguments

Any app.settings configs prefixed with args. are made available to Template Pages. Any argument values starting with { or [ are automatically converted into a JS object or array:

```
args.blog { name:'blog.web-app.io', href:'/' }
args.tags ['technology','marketing']
```

They can then be referenced directly as an object or an array:

```html
<a href="{{blog.href}}">{{blog.name}}</a>

{{#each tags}} <em>{{it}}</em> {{/each}}
```

The alternative approach is to give each argument value a different name:

```
args.blogName blog.web-app.io
args.blogHref /
```
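The args. conversion rule can be sketched in TypeScript as follows. This is an illustrative assumption based on the behavior described above, not the Web App's actual parser. parseJsLiteral and parseTemplateArgs are hypothetical names, and the literal conversion handles only simple single-quoted strings and unquoted identifier keys.

```typescript
// Converts a simple JS-style literal such as { name:'x', href:'/' } into JSON
// and parses it. Limitations: no escaped quotes inside single-quoted strings,
// and string values must not themselves contain "key:"-like text.
function parseJsLiteral(value: string): unknown {
  const json = value
    .replace(/'([^']*)'/g, (_m, s: string) => JSON.stringify(s)) // 'a'  -> "a"
    .replace(/([{,]\s*)([A-Za-z_$][\w$]*)\s*:/g, '$1"$2":');      // key: -> "key":
  return JSON.parse(json);
}

// Collects "args."-prefixed settings into template arguments. Values starting
// with '{' or '[' become objects/arrays; everything else stays a string.
function parseTemplateArgs(appSettings: string): Record<string, unknown> {
  const args: Record<string, unknown> = {};
  for (const raw of appSettings.split(/\r?\n/)) {
    const match = /^args\.(\S+)\s+(.+)$/.exec(raw.trim());
    if (!match) continue; // not an args. setting
    const [, name, value] = match;
    const v = value.trim();
    args[name] = v.startsWith("{") || v.startsWith("[") ? parseJsLiteral(v) : v;
  }
  return args;
}
```

Given the four args. lines above, blog becomes an object with name and href, tags becomes a two-element array, and blogName and blogHref remain plain strings.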

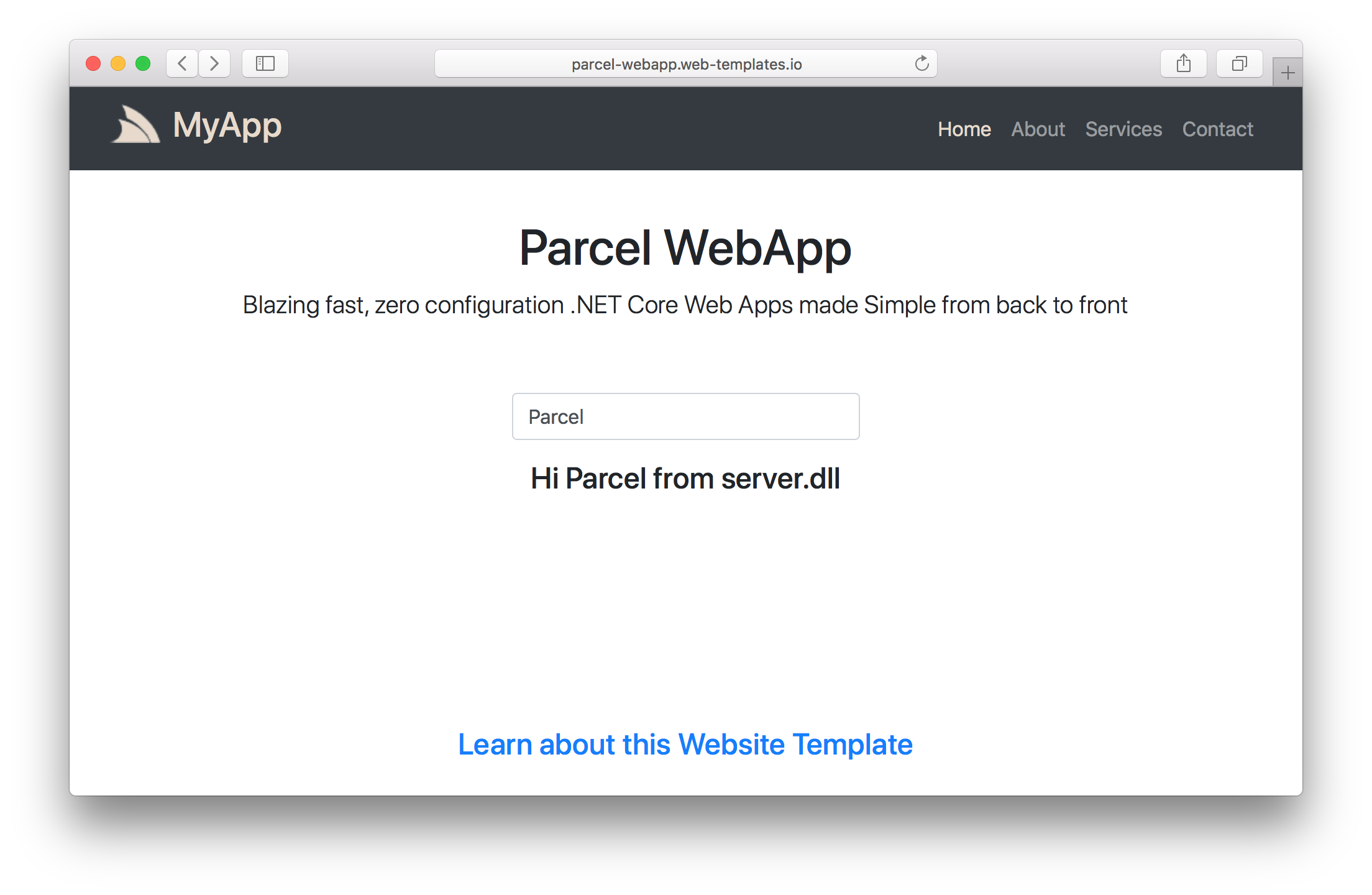

New Parcel Web App Template

We're especially excited about this new template. It combines the simplicity of developing modern JavaScript Apps with the parcel template with the simplicity of developing .NET Core Apps with #Script Sharp Apps. Together they provide a unified solution for creating rich Apps in a live, rapid development workflow without compilation, enabling pure Cloud Apps and simplified rsync deployments.

Layout

This template uses the following directory structure:

- /app - Your Web App's published source code and any plugins
- /client - The Parcel-managed Client App where client source code is maintained
- /server - Extend your Web App with an optional server.dll plugin containing additional Server functionality
- /web - The Web App binaries

Most development happens within /client, which is automatically published to /app using Parcel's CLI, invoked from the included npm scripts.

client

The difference from bare-app is that the client source code is maintained in the /client folder and uses Parcel JS to automatically bundle and publish your App to /app/wwwroot, which is updated with live changes during development.

The client folder also contains the npm package.json, which defines all the npm scripts required during development.

If this is your first time using Parcel, you will need to install its global CLI:

$ npm install -g parcel-bundler

Then you can run a watched parcel build of your Web App with:

$ npm run dev

Parcel is a zero-configuration bundler. It inspects your .html files to automatically transpile and bundle all your .js and .css assets, along with other web resources such as TypeScript .ts source files, into a static .html website synced at /app/wwwroot.

Then, to start the ServiceStack Server hosting your Web App, run:

$ npm run server

This hosts your App at http://localhost:5000. In debug mode, hot reloading is enabled, so web pages are reloaded automatically whenever file changes are detected.

server

For even greater functionality, this Web App template is also pre-configured with a custom Server project where you can extend your Web App with Plugins. All Plugins, Services, Filters, etc. are automatically wired up and made available to your Web App.

This template includes a simple ServerPlugin.cs, which contains an empty ServerPlugin and a Hello Service:

```csharp
using ServiceStack;

// Empty plugin: register any additional ServiceStack features in Register()
public class ServerPlugin : IPlugin
{
    public void Register(IAppHost appHost)
    {
    }
}

//[Route("/hello/{Name}")] // Handled by /hello/_name.html API page, uncomment to take over
public class Hello : IReturn<HelloResponse>
{
    public string Name { get; set; }
}

public class HelloResponse
{
    public string Result { get; set; }
}

public class MyServices : Service
{
    public object Any(Hello request)
    {
        return new HelloResponse { Result = $"Hi {request.Name} from server.dll" };
    }
}
```

Build the server.csproj project, copy the resulting server.dll to /app/plugins/server.dll, then start the server with the latest plugin:

$ npm run server

This automatically loads any Plugins, Services, Filters, etc. and makes them available to your Web App.

One benefit of creating your APIs with C# ServiceStack Services instead of Sharp APIs is that you can generate TypeScript DTOs with:

$ npm run dtos

This generates DTOs for all your ServiceStack Services and saves them in dtos.ts, where they can be imported into your TypeScript source code.
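The following TypeScript sketch shows what generated DTOs provide to a client. It approximates the shape of a generated request DTO and builds a URL for ServiceStack's predefined /json/reply/{RequestDto} route. The local IReturn interface, the toReplyUrl helper and the exact class layout are simplified assumptions. The real dtos.ts output and the @servicestack/client internals may differ.

```typescript
// Simplified stand-in for the IReturn<T> marker that ties a request to its response type.
interface IReturn<T> {
  createResponse(): T;
  getTypeName(): string;
}

// Approximate shape of generated DTOs (camelCase properties, as used in index.ts).
class HelloResponse {
  public result: string = "";
}

class Hello implements IReturn<HelloResponse> {
  public name: string = "";
  public createResponse() { return new HelloResponse(); }
  public getTypeName() { return "Hello"; }
}

// Builds a GET URL for the predefined /json/reply/{RequestDto} route, serializing
// the DTO's own data properties (methods live on the prototype and are skipped).
function toReplyUrl<T>(baseUrl: string, dto: IReturn<T>): string {
  const data = dto as unknown as Record<string, unknown>;
  const query = Object.keys(data)
    .map(k => `${encodeURIComponent(k)}=${encodeURIComponent(String(data[k]))}`)
    .join("&");
  const url = `${baseUrl.replace(/\/$/, "")}/json/reply/${dto.getTypeName()}`;
  return query ? `${url}?${query}` : url;
}
```

Because the request DTO carries its response type, a typed client call such as client.get(request) can infer HelloResponse without an explicit type argument.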

If preferred, you can instead develop Server APIs with Sharp APIs. An example is included in /client/hello/_name.html:

{{ { result: `Hi ${name} from /hello/_name.html` } | return }}

As it returns the same data structure as the Hello Service above, it can be called with ServiceStack's JsonServiceClient and the generated TypeScript DTOs.

/client/index.ts shows an example of this, where the App initially calls the C# Hello ServiceStack Service:

```ts
import { client } from "./shared";
import { Hello, HelloResponse } from "./dtos";

const result = document.querySelector("#result")!;

document.querySelector("#Name")!.addEventListener("input", async e => {
    const value = (e.target as HTMLInputElement).value;
    if (value != "") {
        const request = new Hello();
        request.name = value;
        const response = await client.get(request);
        // const response = await client.get<HelloResponse>(`/hello/${request.name}`); //call /hello/_name.html
        result.innerHTML = response.result;
    } else {
        result.innerHTML = "";
    }
});
```

While your App is running, you can swap which of the two response lines is commented out and hit Ctrl+S to save index.ts. Parcel will automatically transpile it and publish it to /app/wwwroot, where the change is detected and the page reloads automatically. Typing in the text field will now display the response from calling the /hello/_name.html API Page instead.

Deployments

During development, Parcel maintains a debug-friendly, source-code-friendly version of your App. Before deploying, you can build an optimized production version of your App with:

$ npm run build

This bundles and minifies all .css, .js and .html assets and publishes them to /app/wwwroot.

Then, to deploy Web Apps, you just need to copy the /app and /web folders to any server with the .NET Core 2.1 runtime installed.

See the Deploying Web Apps docs for more info.

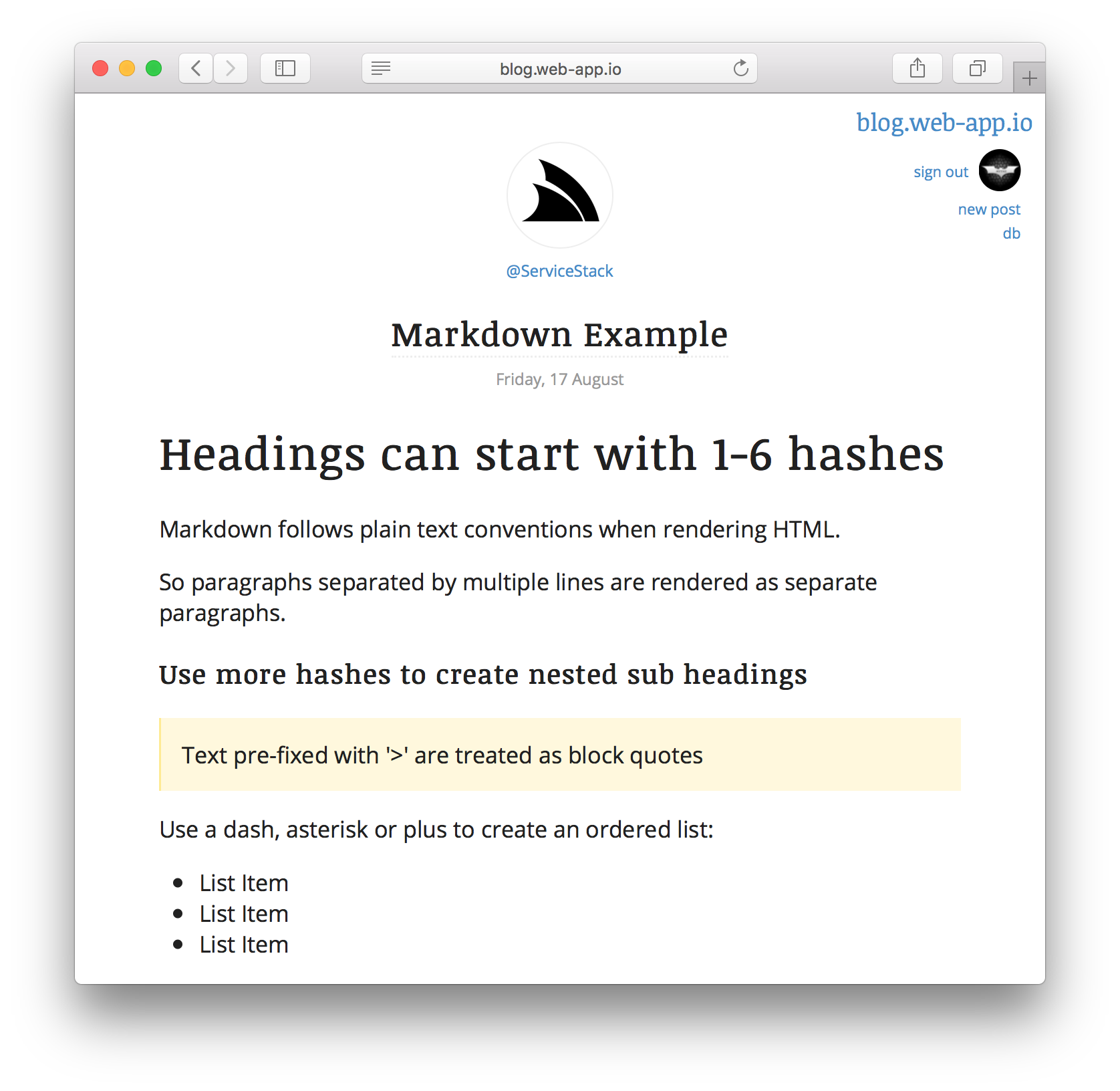

New Blog Web App

As we like to do when introducing major new features, we've created a new Live Demo to showcase many of the new features added to Templates. Exploring the source code of Live Demos is a good way to learn how to use new features in the context of a working example.

Ultimate Simplicity

The new Blog App is a ServiceStack Web App developed entirely in #Script, which eliminates much of the complexity inherent in developing .NET Web Applications. By their nature, Web Apps are highly customizable: their entire functionality can be modified in real-time whilst the App is running. Their approachable Handlebars-like and familiar JavaScript syntax is simple enough to be enhanced by non-developers like Designers and Content Creators.