We've got another jam-packed release with improvements and polish across the board, starting with exciting new API Key and JWT Auth Providers enabling fast, stateless and centralized Auth Services, a modernized API surface for OrmLite, new GEO capabilities in Redis, Logging for Slack, and performance and memory improvements across all ServiceStack libraries, including useful utilities you can reuse to improve performance in your own Apps.

Authentication

ServiceStack's Authentication is built around the simple HTTP Session model where, after successful authentication, a Typed User Session is persisted in the registered ICacheClient. A Cookie is then set on the HTTP Response in order to establish an Authenticated Session for subsequent requests. Prior to this release the User's Session was always persisted, including for IAuthWithRequest Auth Providers which send Authentication Info with each Request. These include:

- BasicAuthProvider - Allow users to authenticate with HTTP Basic Auth
- DigestAuthProvider - Allow users to authenticate with HTTP Digest Authentication
- AspNetWindowsAuthProvider - Allow users to authenticate with integrated Windows Auth

Sessionless "Auth With Request" Providers

By default, all IAuthWithRequest Auth Providers no longer persist the User's Session in the Cache. As a result, each of the above Auth Providers needs to resend its Credentials / Auth Info on every request, which was already the expected behavior in all Service Clients that supported these Authentication options.

Previously only the First Request required sending Auth Info, as any subsequent requests could access the previously established Authenticated Session. Whilst it shouldn't be required, should you need to restore the previous behavior, you can persist Sessions with:

Plugins.Add(new AuthFeature(..., new IAuthProvider[] {
    new BasicAuthProvider { PersistSession = true },
}));

No longer persisting User Sessions doesn't impact existing Auth functionality, as the Authenticated User's Session is simply attached to the current Request inside the IRequest.Items dictionary, conceptually similar to:

req.Items[Keywords.Session] = new AuthUserSession { IsAuthenticated = true, ... };

Which is what gets returned whenever the Session is accessed from inside your Services:

var session = base.GetSession();

var session = base.SessionAs<AuthUserSession>();

Or outside the Service, anywhere you have access to the current IRequest:

var session = req.GetSession();

var session = req.SessionAs<AuthUserSession>();

All above APIs return the Typed User Session attached to the request.

This refresher on ServiceStack Sessions, where IAuthWithRequest sessions now only last for the scope of the request, will be useful in understanding the new Auth Providers, as both of them also implement IAuthWithRequest and authenticate with every request.

API Key Auth Provider

The new API Key Auth Provider offers an alternative way to give external 3rd Parties access to your protected Services without needing to specify a password. API Keys are the preferred approach for many well-known public API providers used in system-to-system scenarios for several reasons:

- Simple - It integrates easily with existing HTTP Auth functionality

- Independent from Password - Limits exposure of the much more sensitive master user passwords, which should ideally never be stored in plain-text. Resetting a User's Password or changing password reset strategies won't invalidate existing systems configured to use API Keys

- Entropy - API Keys are typically much more secure than most normal User Passwords. The configurable default has 24 bytes of entropy (Guids have 16 bytes) generated from a secure random number generator that encodes to 32 chars using URL-safe Base64 (Same as Stripe)

- Performance - Thanks to their much greater entropy and independence from user-chosen passwords, API Keys can be validated as fast as possible using a datastore Index. This is in contrast to validating hashed user passwords, which by design requires slower, more computationally expensive algorithms to make brute force attacks infeasible
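To make the entropy figures above concrete, here's a minimal C# sketch that creates a key the way the Entropy bullet describes: 24 bytes from a cryptographically secure random number generator, encoded with URL-safe Base64. It only uses .NET's built-in RandomNumberGenerator; the CreateApiKey helper is hypothetical and is not ServiceStack's own key generator.

```csharp
using System;
using System.Security.Cryptography;

string apiKey = CreateApiKey();
Console.WriteLine($"{apiKey} ({apiKey.Length} chars)");

// Hypothetical helper illustrating the approach; not ServiceStack's implementation.
static string CreateApiKey(int keySizeBytes = 24)
{
    var bytes = new byte[keySizeBytes];
    RandomNumberGenerator.Fill(bytes); // cryptographically secure random bytes

    // URL-safe Base64: '+' -> '-', '/' -> '_', and drop any '=' padding.
    // 24 bytes is a multiple of 3, so it always encodes to exactly 32 chars.
    return Convert.ToBase64String(bytes)
        .TrimEnd('=')
        .Replace('+', '-')
        .Replace('/', '_');
}
```

By comparison, a Guid's 16 bytes would encode to just 22 chars in the same format.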

Like most ServiceStack providers, the new API Key Auth Provider is simple to use, integrates seamlessly with ServiceStack's existing Auth model and includes Typed end-to-end client/server support.

For familiarity and utility we've modelled our implementation around Stripe's API Key functionality whilst sharing many of the benefits of ServiceStack's Auth Providers:

Simple and Integrated

Like all of ServiceStack's Auth Providers, registration is as easy as adding ApiKeyAuthProvider to the AuthFeature list of Auth Providers:

Plugins.Add(new AuthFeature(...,
    new IAuthProvider[] {
        new ApiKeyAuthProvider(AppSettings),
        new CredentialsAuthProvider(AppSettings),
        //...
    }));

The ApiKeyAuthProvider works similarly to the other ServiceStack IAuthWithRequest providers, where a successful API Key initializes the current IRequest with the user's Authenticated Session. It also adds the ApiKey POCO Model to the request, which can be accessed with:

ApiKey apiKey = req.GetApiKey();

The ApiKey can later be inspected throughout the request pipeline to determine which API Key, Type and Environment was used.

Interoperable

Using existing HTTP Functionality makes it simple and interoperable to use with any HTTP Client even command-line clients like curl where API Keys can be specified in the Username of HTTP Basic Auth:

curl https://api.stripe.com/v1/charges -u yDOr26HsxyhpuRB3qbG07qfCmDhqutnA:

Or as an HTTP Bearer Token in the Authorization HTTP Request Header:

curl https://api.stripe.com/v1/charges -H "Authorization: Bearer yDOr26HsxyhpuRB3qbG07qfCmDhqutnA"

Both of these methods are built into most HTTP Clients. Here are a few different ways which you can send them using ServiceStack's .NET Service Clients:

var client = new JsonServiceClient(baseUrl) {
    Credentials = new NetworkCredential(apiKey, "")
};

var client = new JsonHttpClient(baseUrl) {
    BearerToken = apiKey
};

Or using the HTTP Utils extension methods:

var response = baseUrl.CombineWith("/secured").GetStringFromUrl(
    requestFilter: req => req.AddBasicAuth(apiKey, ""));

var response = await "https://example.org/secured".GetJsonFromUrlAsync(
    requestFilter: req => req.AddBearerToken(apiKey));
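Whichever client you use, each option comes down to a single Authorization header. As an illustration that relies only on .NET's System.Net.Http types (no ServiceStack APIs), the sketch below builds the same two headers the curl examples send: HTTP Basic with the API Key as the username and an empty password, and a Bearer Token. The key is the sample key from the curl commands, and the requests are only constructed, never sent.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Text;

string apiKey = "yDOr26HsxyhpuRB3qbG07qfCmDhqutnA"; // sample key from the curl examples
string url = "https://api.stripe.com/v1/charges";

// curl -u {apiKey}:  =>  "Authorization: Basic base64({apiKey}:)" (empty password)
var basicRequest = new HttpRequestMessage(HttpMethod.Get, url);
basicRequest.Headers.Authorization = new AuthenticationHeaderValue(
    "Basic", Convert.ToBase64String(Encoding.UTF8.GetBytes(apiKey + ":")));

// curl -H "Authorization: Bearer {apiKey}"
var bearerRequest = new HttpRequestMessage(HttpMethod.Get, url);
bearerRequest.Headers.Authorization = new AuthenticationHeaderValue("Bearer", apiKey);

Console.WriteLine(basicRequest.Headers.Authorization);
Console.WriteLine(bearerRequest.Headers.Authorization);
```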

Multiple Auth Repositories

The necessary functionality to support API Keys has been implemented in the following supported Auth Repositories:

- OrmLiteAuthRepository - Supporting most major RDBMS
- RedisAuthRepository - Uses Redis back-end data store
- DynamoDbAuthRepository - Uses AWS DynamoDB data store
- InMemoryAuthRepository - Uses InMemory Auth Repository

And requires no additional configuration as it just utilizes the existing registered IAuthRepository.

Multiple API Key Types and Environments

You can specify any number of different Key Types for use in multiple environments for each user. Keys are generated upon User Registration, which by default creates both a live and a test key for the secret Key Type. To create both "secret" and "publishable" API Keys, configure it with:

Plugins.Add(new AuthFeature(...,
    new IAuthProvider[] {
        new ApiKeyAuthProvider(AppSettings) {
            KeyTypes = new[] { "secret", "publishable" },
        }
    }));
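Because a key is created for every combination of environment and key type, the configuration above gives each user 4 keys (live and test, each with a secret and a publishable key). The sketch below enumerates those combinations with LINQ; the tuple shape and the NewKey helper are hypothetical stand-ins rather than ServiceStack's ApiKey type or generator.

```csharp
using System;
using System.Linq;
using System.Security.Cryptography;

string[] environments = { "live", "test" };       // default Environments
string[] keyTypes = { "secret", "publishable" };  // KeyTypes configured above

// One key per (environment, key type) pair; hypothetical shape, not ServiceStack's ApiKey
var userKeys = (
    from env in environments
    from type in keyTypes
    select (Environment: env, KeyType: type, Key: NewKey())
).ToList();

foreach (var k in userKeys)
    Console.WriteLine($"{k.Environment,-5} {k.KeyType,-12} {k.Key}");

// Hypothetical helper: 24 secure random bytes as URL-safe Base64 (no padding needed)
static string NewKey()
{
    var bytes = new byte[24];
    RandomNumberGenerator.Fill(bytes);
    return Convert.ToBase64String(bytes).Replace('+', '-').Replace('/', '_');
}
```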

If preferred, any of the API Key Provider options can instead be specified in App Settings following the apikey.{PropertyName} format, e.g:

<add key="apikey.KeyTypes" value="secret,publishable" />

Multitenancy

Thanks to ServiceStack's trivial support for enabling Multitenancy, the minimal configuration required to register an API Key Auth Provider that persists to a LiveDb SQL Server database, and also allows Services called with a Test API Key to query the alternative TestDb database instead, is just:

class AppHost : AppSelfHostBase
{
    public AppHost() : base("API Key Multitenancy Example", typeof(AppHost).Assembly) { }

    public override void Configure(Container container)
    {
        //Create and register an OrmLite DB Factory configured to use Live DB by default
        var dbFactory = new OrmLiteConnectionFactory(
            AppSettings.GetString("LiveDb"), SqlServerDialect.Provider);
        container.Register<IDbConnectionFactory>(dbFactory);

        // Register a "TestDb" Named Connection
        dbFactory.RegisterConnection("TestDb",
            AppSettings.GetString("TestDb"), SqlServerDialect.Provider);

        //Tell ServiceStack you want to persist User Auth Info in SQL Server
        container.Register<IAuthRepository>(c => new OrmLiteAuthRepository(dbFactory));

        //Register the AuthFeature with the API Key Auth Provider
        Plugins.Add(new AuthFeature(() => new AuthUserSession(),
            new IAuthProvider[] {
                new ApiKeyAuthProvider(AppSettings)
            }));
    }

    public override IDbConnection GetDbConnection(IRequest req = null)
    {
        //If an API Test Key was used return DB connection to TestDb instead:
        return req?.GetApiKey()?.Environment == "test"
            ? TryResolve<IDbConnectionFactory>().OpenDbConnection("TestDb")
            : base.GetDbConnection(req);
    }
}

Now whenever a Test API Key is used to call an Authenticated Service, all base.Db Queries or AutoQuery Services will query TestDb instead.

API Key Defaults

The API Key Auth Provider has several options to customize its behavior, all of which, except the delegate Filters, can also be specified in AppSettings:

class ApiKeyAuthProvider
{
    // Whether to only permit access via API Key from a secure connection. (default true)
    public bool RequireSecureConnection { get; set; }

    // Generate different keys for different environments. (default live,test)
    public string[] Environments { get; set; }

    // Different types of Keys each user can have. (default secret)
    public string[] KeyTypes { get; set; }

    // How much entropy should the generated keys have. (default 24)
    public int KeySizeBytes { get; set; }

    // Whether to automatically expire keys. (default no expiry)
    public TimeSpan? ExpireKeysAfter { get; set; }

    // Automatically create the ApiKey Table for Auth Repositories which need it. (default true)
    public bool InitSchema { get; set; }

    // Change how API Key is generated
    public CreateApiKeyDelegate GenerateApiKey { get; set; }

    // Run custom filter after API Key is created
    public Action<ApiKey> CreateApiKeyFilter { get; set; }
}

Should you need to, you can access API Keys from the Auth Repository directly through the following interface:

public interface IManageApiKeys
{
    void InitApiKeySchema();
    bool ApiKeyExists(string apiKey);
    ApiKey GetApiKey(string apiKey);
    List<ApiKey> GetUserApiKeys(string userId);
    void StoreAll(IEnumerable<ApiKey> apiKeys);
}

This interface also defines what's required in order to implement API Keys support on a Custom AuthRepository.

For Auth Repositories which implement it, you can access the interface by resolving IAuthRepository from the IOC and casting it to the above interface, e.g:

var apiRepo = (IManageApiKeys)HostContext.TryResolve<IAuthRepository>();

var apiKeys = apiRepo.GetUserApiKeys(session.UserAuthId);

Built-in API Key Services

To give end-users access to their keys, the API Key Auth Provider enables 2 Services: the GetApiKeys Service, which returns all valid User API Keys for the specified environment:

//GET /apikeys/live

var response = client.Get(new GetApiKeys { Environment = "live" });

response.Results.PrintDump(); //User's "live" API Keys

And the RegenrateApiKeys Service, which invalidates all current API Keys and generates new ones for the specified environment:

//POST /apikeys/regenerate/live

var response = client.Post(new RegenrateApiKeys { Environment = "live" });

response.Results.PrintDump(); //User's new "live" API Keys

You can change which built-in Services are registered, or the custom routes where they're available, by modifying the ServiceRoutes collection. E.g. you can prevent it from registering any Services by setting ServiceRoutes to an empty collection:

new ApiKeyAuthProvider { ServiceRoutes = new Dictionary<Type, string[]>() }

JWT Auth Provider

Even more exciting than the new API Key Provider is the new integrated Auth solution for the popular JSON Web Tokens (JWT) industry standard, which is easily enabled by registering the JwtAuthProvider with the AuthFeature plugin:

Plugins.Add(new AuthFeature(...,
    new IAuthProvider[] {
        new JwtAuthProvider(AppSettings) { AuthKey = AesUtils.CreateKey() },
        new CredentialsAuthProvider(AppSettings),
        //...
    }));

At a minimum you'll need to specify the AuthKey that will be used to Sign and Verify JWT tokens.

Whilst creating a new one in memory as above will work, a new Auth Key will be created every time the AppDomain recycles, which will invalidate all existing JWT Tokens created with the previous key. So you'll typically want to generate the AuthKey out-of-band and configure it with the JwtAuthProvider at registration, which you can do in code using any of the AppSettings providers:

new JwtAuthProvider(AppSettings) { AuthKeyBase64 = AppSettings.GetString("AuthKeyBase64") }

Or alternatively you can configure most JwtAuthProvider properties in your Web.config <appSettings/> (the default AppSettings Provider) following the jwt.{PropertyName} format:

<add key="jwt.AuthKeyBase64" value="{Base64AuthKey}" />

As with all crypto keys, you'll want to keep them confidential: anyone who gets hold of your AuthKey will be able to forge and sign their own JWT Tokens, letting them impersonate any user, role or permission!
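One way to generate the AuthKey out-of-band is a throwaway console snippet that prints random key bytes as Base64, ready to paste into jwt.AuthKeyBase64. The sketch assumes a 32-byte (256-bit) key, which is the minimum size RFC 7518 requires for HS256; it does not call AesUtils.CreateKey(), whose exact key size isn't covered here.

```csharp
using System;
using System.Security.Cryptography;

// Assumption: 32 random bytes (256 bits), the minimum HS256 key size in RFC 7518
var authKey = new byte[32];
RandomNumberGenerator.Fill(authKey);

string authKeyBase64 = Convert.ToBase64String(authKey);

// Paste into Web.config: <add key="jwt.AuthKeyBase64" value="..." />
Console.WriteLine(authKeyBase64);
```

Run it once, store the output with your other secrets, and reuse the same value across AppDomain recycles so existing tokens stay valid.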

RequireSecureConnection

Both the API Key and JWT Auth Providers default to RequireSecureConnection=true, which requires Authentication via either Provider to happen over a secure (HTTPS) connection, as both send bearer tokens that should be kept highly confidential. You can specify RequireSecureConnection=false to disable this requirement for testing or within controlled internal environments.

Sending JWT with Service Clients

Just like API Keys, JWT Tokens can be sent using the Bearer Token support in all HTTP and Service Clients:

var client = new JsonServiceClient(baseUrl) {
    BearerToken = jwtToken
};

var response = await "https://example.org/secured".GetJsonFromUrlAsync(
    requestFilter: req => req.AddBearerToken(jwtToken));

The Service Clients offer additional high-level functionality: they can transparently request a new JWT Token after the configured one expires, by handling the OnAuthenticationRequired callback that's invoked when the JWT Token becomes invalidated. In this callback we can use a different Service Client to retrieve a new JWT Token from a centralized and independent Auth Microservice configured with both the API Key and JWT Auth Providers, by calling ServiceStack's built-in Authenticate Service with our secret API Key (which by default never invalidates unless revoked). If authenticated, sending an empty Authenticate() DTO will return the currently Authenticated User Info, which also generates a new JWT Token from the User's Authenticated Session and returns it in the BearerToken Response DTO property, which we can use to replace our invalidated JWT Token.

All together we can configure our Service Client to transparently refresh expired JWT Tokens with just:

var authClient = new JsonServiceClient(centralAuthBaseUrl) {
    Credentials = new NetworkCredential(apiKey, "")
};

var client = new JsonServiceClient(baseUrl);
client.OnAuthenticationRequired = () => {
    client.BearerToken = authClient.Send(new Authenticate()).BearerToken;
};
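ServiceStack's Service Clients handle this for you, but the retry-once logic behind OnAuthenticationRequired can be illustrated without any networking. In this sketch CallService and FetchNewToken are hypothetical in-memory stand-ins for a protected Service and the central Auth Service; it shows the pattern only and is not how the Service Clients are implemented internally.

```csharp
using System;
using System.Net;

// Hypothetical stand-ins: CallService plays a protected Service that rejects stale tokens,
// FetchNewToken plays authClient.Send(new Authenticate()).BearerToken
string latestToken = "token-v2";
string bearerToken = "token-v1"; // stale token the client starts with
int refreshCount = 0;

HttpStatusCode CallService(string token) =>
    token == latestToken ? HttpStatusCode.OK : HttpStatusCode.Unauthorized;

string FetchNewToken() => latestToken;

// Same role as OnAuthenticationRequired: on a 401, refresh the token and retry once
HttpStatusCode SendWithRefresh()
{
    HttpStatusCode status = CallService(bearerToken);
    if (status == HttpStatusCode.Unauthorized)
    {
        bearerToken = FetchNewToken();
        refreshCount++;
        status = CallService(bearerToken);
    }
    return status;
}

Console.WriteLine(SendWithRefresh()); // OK (after one refresh)
Console.WriteLine(SendWithRefresh()); // OK (token already fresh)
```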

Sending JWT using Cookies

To improve accessibility with Ajax clients, JWT Tokens can also be sent using the ss-tok Cookie, e.g:

var client = new JsonServiceClient(baseUrl);

client.SetCookie("ss-tok", jwtToken);
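Outside of ServiceStack's clients, sending the ss-tok Cookie is just standard cookie handling. A sketch using .NET's CookieContainer, where the base URL and the token value are placeholders rather than a real signed token:

```csharp
using System;
using System.Net;
using System.Net.Http;

var baseUri = new Uri("https://example.org/");                     // placeholder base URL
string jwtToken = "eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.e30.c2ln";  // placeholder, not a valid signed token

var cookies = new CookieContainer();
cookies.Add(baseUri, new Cookie("ss-tok", jwtToken));

// Requests through this HttpClient to example.org will include the ss-tok Cookie
using var httpClient = new HttpClient(new HttpClientHandler { CookieContainer = cookies })
{
    BaseAddress = baseUri
};

// The Cookie header value that will be sent
Console.WriteLine(cookies.GetCookieHeader(baseUri));
```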

Later on we'll walk through an example of how you can access JWT Tokens, as well as how to convert Authenticated Sessions into JWT Tokens returned in a Secure and HttpOnly Cookie.

JWT Overview

A nice property of JWT tokens is that they allow for truly stateless authentication, where API Keys and user credentials can be maintained in a separate Auth Service that's kept isolated from the rest of your System, making them optimal for use in Microservice architectures.

Being self-contained lends JWT tokens to more scalable, performant and flexible architectures, as App Servers don't need any I/O or access to any state to validate them. This is unlike all other Auth Providers, which require at least a DB, Cache or Network hit to authenticate the user.

A good introduction to JWT is available from the JWT website: https://jwt.io/introduction/

Essentially, JWTs consist of 3 parts separated by ".", with each part encoded using Base64url Encoding, which can encode both text and binary data using only URL-safe chars (i.e. no escaping required), in the following format:

Base64UrlHeader.Base64UrlPayload.Base64UrlSignature

Just like the API Key, JWTs can be sent as a Bearer Token in the Authorization HTTP Request Header.
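Since every part is plain Base64url, a JWT can be assembled and taken apart with nothing more than string concatenation, splitting on "." and Base64 decoding, which is also why anyone holding a token can read its claims. The sketch below uses placeholder bytes instead of a real signature; the helper names are illustrative, not ServiceStack APIs.

```csharp
using System;
using System.Text;

string headerJson = "{\"alg\":\"HS256\",\"typ\":\"JWT\"}";
string payloadJson = "{\"sub\":\"1\",\"name\":\"Test User\"}";
byte[] placeholderSignature = { 1, 2, 3, 4 }; // NOT a real signature

// Base64UrlHeader.Base64UrlPayload.Base64UrlSignature
string token = string.Join(".",
    Base64UrlEncode(Encoding.UTF8.GetBytes(headerJson)),
    Base64UrlEncode(Encoding.UTF8.GetBytes(payloadJson)),
    Base64UrlEncode(placeholderSignature));

// Reading it back needs no key: split on '.' and Base64url-decode
string[] parts = token.Split('.');
string decodedHeader = Encoding.UTF8.GetString(Base64UrlDecode(parts[0]));
string decodedPayload = Encoding.UTF8.GetString(Base64UrlDecode(parts[1]));

Console.WriteLine(token);
Console.WriteLine(decodedHeader);  // {"alg":"HS256","typ":"JWT"}
Console.WriteLine(decodedPayload); // {"sub":"1","name":"Test User"}

// Base64url = Base64 with '-' and '_' instead of '+' and '/', and no '=' padding
static string Base64UrlEncode(byte[] bytes) =>
    Convert.ToBase64String(bytes).TrimEnd('=').Replace('+', '-').Replace('/', '_');

static byte[] Base64UrlDecode(string text)
{
    string base64 = text.Replace('-', '+').Replace('_', '/');
    // Restore the padding so the length is a multiple of 4
    base64 = base64.PadRight(base64.Length + (4 - base64.Length % 4) % 4, '=');
    return Convert.FromBase64String(base64);
}
```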

JWT Header

The header typically consists of two parts: the type of the token and the hashing algorithm being used which is typically just:

{
    "alg": "HS256",
    "typ": "JWT"
}

We also send the "kid" Key Id used to identify which key should be used to validate the signature to help with seamless key rotations in future. If not specified the KeyId defaults to the first 3 chars of the Base64 HMAC or RSA Public Key Modulus.
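A sketch of the default KeyId rule described above: take the first 3 chars of the Base64-encoded key material, i.e. the AuthKey for HMAC or the Public Key's Modulus for RSA, and add it to the header as kid. It's based only on this description, so treat it as an approximation of JwtAuthProvider's behavior rather than its code.

```csharp
using System;
using System.Collections.Generic;
using System.Security.Cryptography;
using System.Text.Json;

// Default KeyId as described: first 3 chars of the Base64-encoded key material
static string DefaultKeyId(byte[] keyMaterial) => Convert.ToBase64String(keyMaterial).Substring(0, 3);

// HMAC: the key material is the AuthKey itself
var authKey = new byte[32];
RandomNumberGenerator.Fill(authKey);
string hmacKid = DefaultKeyId(authKey);

// RSA: the key material is the Public Key's Modulus
using var rsa = RSA.Create(2048);
string rsaKid = DefaultKeyId(rsa.ExportParameters(false).Modulus);

string headerJson = JsonSerializer.Serialize(new Dictionary<string, string>
{
    ["alg"] = "HS256",
    ["typ"] = "JWT",
    ["kid"] = hmacKid,
});

Console.WriteLine(headerJson);
Console.WriteLine($"RSA kid: {rsaKid}");
```

Note that System.Text.Json escapes some Base64 chars such as "+" by default; the JSON value is still equivalent once parsed.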

JWT Payload

The Payload contains the essential information of a JWT Token which is made up of "claims", i.e. statements and metadata about a user which are categorized into 3 groups:

- Registered Claim Names - containing a known set of reserved names predefined in the JWT Standard

- Public Claim Names - additional common names that are registered in the IANA "JSON Web Token Claims" registry or otherwise adopt a Collision-Resistant name, e.g. prefixed by a namespace

- Private Claim Names - any other metadata you wish to include about an entity

We use the Payload to store essential information about the user, which we use to validate the token and populate the session. It typically contains:

- iss (Issuer) - the principal that issued the JWT. Can be set with JwtAuthProvider.Issuer, defaults to ssjwt
- sub (Subject) - identifies the subject of the JWT, used to store the User's UserAuthId
- iat (Issued At) - when the JWT Token was issued. Can use InvalidateTokensIssuedBefore to invalidate tokens issued before a specific date
- exp (Expiration Time) - when the JWT expires. Initialized with JwtAuthProvider.ExpireTokensIn from date of issue (default 14 days)
- aud (Audience) - identifies the recipient of the JWT. Can be set with JwtAuthProvider.Audience, defaults to null (Optional)
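The registered claims above can be sketched as follows, using the listed defaults (issuer ssjwt, tokens expiring 14 days after issue, no audience). iat and exp are JWT NumericDates, i.e. seconds since the Unix epoch. The userAuthId value and the IssuedBeforeCutoff helper are hypothetical; the helper only illustrates the kind of check InvalidateTokensIssuedBefore implies, not its actual implementation.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Defaults from the list above; userAuthId is a hypothetical value
string issuer = "ssjwt";
string audience = null;                          // optional: omitted when null
TimeSpan expireTokensIn = TimeSpan.FromDays(14);
string userAuthId = "1";

DateTimeOffset issuedAt = DateTimeOffset.UtcNow;
var payload = new Dictionary<string, object>
{
    ["iss"] = issuer,
    ["sub"] = userAuthId,
    ["iat"] = issuedAt.ToUnixTimeSeconds(),                     // NumericDate: seconds since epoch
    ["exp"] = issuedAt.Add(expireTokensIn).ToUnixTimeSeconds(),
};
if (audience != null)
    payload["aud"] = audience;

Console.WriteLine(JsonSerializer.Serialize(payload));

// Hypothetical check in the spirit of InvalidateTokensIssuedBefore
static bool IssuedBeforeCutoff(long iat, DateTimeOffset? cutoff) =>
    cutoff.HasValue && iat < cutoff.Value.ToUnixTimeSeconds();
```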

The remaining information in the JWT Payload is used to populate the User's Session. To maximize interoperability we've used the most appropriate Public Claim Names where possible:

- email <- session.Email
- given_name <- session.FirstName
- family_name <- session.LastName
- name <- session.DisplayName
- preferred_username <- session.UserName
- picture <- session.ProfileUrl

We also need to capture the User's Roles and Permissions, but as there's no Public Claim Name for this yet, we're using Azure Active Directory's conventions, where User Roles are stored in roles as a JSON Array and, similarly, Permissions are stored in perms.
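Putting the mapping above together, the sketch below builds the profile part of a payload from a hypothetical anonymous object standing in for AuthUserSession, with Roles and Permissions written as JSON arrays under roles and perms. Skipping null values is an assumption of this sketch, made to keep the token small, and not necessarily what JwtAuthProvider does.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Hypothetical values standing in for AuthUserSession properties
var session = new
{
    Email = "jane@example.org",
    FirstName = "Jane",
    LastName = "Doe",
    DisplayName = "Jane Doe",
    UserName = "jane",
    ProfileUrl = (string)null,
    Roles = new[] { "Admin" },
    Permissions = new[] { "ViewReports" },
};

var payload = new Dictionary<string, object>();

// Assumption of this sketch: null values are skipped to keep the token small
void AddClaim(string name, object value)
{
    if (value != null) payload[name] = value;
}

AddClaim("email", session.Email);
AddClaim("given_name", session.FirstName);
AddClaim("family_name", session.LastName);
AddClaim("name", session.DisplayName);
AddClaim("preferred_username", session.UserName);
AddClaim("picture", session.ProfileUrl);
AddClaim("roles", session.Roles);       // JSON array, following Azure AD's convention
AddClaim("perms", session.Permissions); // JSON array

string json = JsonSerializer.Serialize(payload);
Console.WriteLine(json);
```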

To keep the JWT Token small we only store the essential User Info above in the Token, which means that when the Token is restored the Session will only be partially populated. You can detect when a Session was partially populated from a JWT Token with the new FromToken boolean property.

Modifying the Payload

Whilst only limited info is embedded in the payload by default, all matching AuthUserSession properties embedded in the token will also be populated on the Session, which you can add to the payload using the CreatePayloadFilter delegate. So if you also want to have access to when the user was registered, you can add it to the payload with:

new JwtAuthProvider(AppSettings)
{
    CreatePayloadFilter = (payload,session) =>
        payload["CreatedAt"] = session.CreatedAt.ToUnixTime().ToString()
}

You can also use the filter to modify any existing property which you can use to change the behavior of the JWT Token, e.g. we can add a special exception extending the JWT Expiration to all Users from Acme Inc with:

new JwtAuthProvider(AppSettings)
{
    CreatePayloadFilter = (payload,session) => {
        //Guard against Sessions without an Email
        if (session.Email?.EndsWith("@acme.com") == true)
            payload["exp"] = DateTime.UtcNow.AddYears(1).ToUnixTime().ToString();
    }
}

Likewise you can modify JWT Headers with the CreateHeaderFilter delegate, and modify how the User's Session is populated with the PopulateSessionFilter.

JWT Signature

JWT Tokens are possible courtesy of the cryptographic signature added to the end of the message, which is used to Authenticate and Verify that a Message hasn't been tampered with. As long as the message signature validates with our AuthKey, we can be certain the contents of the message haven't changed since it was created by either ourselves or someone else with access to our AuthKey.

The JWT standard allows for a number of different Hashing Algorithms, although it requires at least HS256 (HMAC SHA-256) to be supported, which is the default. The full list of Symmetric HMAC and Asymmetric RSA Algorithms JwtAuthProvider supports includes:

- HS256 - Symmetric HMAC SHA-256 algorithm

- HS384 - Symmetric HMAC SHA-384 algorithm

- HS512 - Symmetric HMAC SHA-512 algorithm

- RS256 - Asymmetric RSA with PKCS#1 padding with SHA-256

- RS384 - Asymmetric RSA with PKCS#1 padding with SHA-384

- RS512 - Asymmetric RSA with PKCS#1 padding with SHA-512

HMAC is the simplest to use as it lets you use the same AuthKey to Sign and Verify the message.
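To show why the same AuthKey can both sign and verify, here's a minimal HS256 sketch using .NET's HMACSHA256: the signature is the HMAC-SHA256 of base64url(header) + "." + base64url(payload), and verification recomputes it and compares in constant time. It illustrates the algorithm from the JWT spec and is not JwtAuthProvider's code.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

static string B64Url(byte[] b) =>
    Convert.ToBase64String(b).TrimEnd('=').Replace('+', '-').Replace('/', '_');

// HS256: signature = HMAC-SHA256(key, base64url(header) + "." + base64url(payload))
static string SignHs256(string headerJson, string payloadJson, byte[] key)
{
    string signingInput = B64Url(Encoding.UTF8.GetBytes(headerJson)) + "." +
                          B64Url(Encoding.UTF8.GetBytes(payloadJson));
    using var hmac = new HMACSHA256(key);
    return signingInput + "." + B64Url(hmac.ComputeHash(Encoding.ASCII.GetBytes(signingInput)));
}

static bool VerifyHs256(string jwt, byte[] key)
{
    string[] parts = jwt.Split('.');
    if (parts.Length != 3) return false;
    using var hmac = new HMACSHA256(key);
    string expected = B64Url(hmac.ComputeHash(Encoding.ASCII.GetBytes(parts[0] + "." + parts[1])));
    // Constant-time comparison so timing doesn't reveal how much of the signature matched
    return CryptographicOperations.FixedTimeEquals(
        Encoding.ASCII.GetBytes(expected), Encoding.ASCII.GetBytes(parts[2]));
}

var authKey = new byte[32];
RandomNumberGenerator.Fill(authKey);

string token = SignHs256("{\"alg\":\"HS256\",\"typ\":\"JWT\"}", "{\"sub\":\"1\"}", authKey);
Console.WriteLine(VerifyHs256(token, authKey)); // True

// Swapping in a different payload without re-signing breaks verification
string[] p = token.Split('.');
string tampered = p[0] + "." + B64Url(Encoding.UTF8.GetBytes("{\"sub\":\"2\"}")) + "." + p[2];
Console.WriteLine(VerifyHs256(tampered, authKey)); // False
```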

But if preferred, you can use RSA Keys to sign and verify tokens by changing the HashAlgorithm and specifying an RSA Private Key:

new JwtAuthProvider(AppSettings) {
    HashAlgorithm = "RS256",
    PrivateKeyXml = AppSettings.GetString("PrivateKeyXml")
}

If you don't have an RSA Private Key, one can be created with:

var privateKey = RsaUtils.CreatePrivateKeyParams(RsaKeyLengths.Bit2048);

And its public key can be extracted using ToPublicRsaParameters() extension method, e.g:

var publicKey = privateKey.ToPublicRsaParameters();

Then to serialize RSA Keys, you can export them to XML with:

var privateKeyXml = privateKey.ToPrivateKeyXml();

var publicKeyXml = privateKey.ToPublicKeyXml();

The behavior of using RSA to sign the JWT Tokens is mostly transparent but instead of using the AuthKey to both Sign and Verify the JWT Payload, it's signed with the Private Key and verified using the Public Key. New tokens will also have the alg JWT Header set to RS256 to reflect the new HashAlgorithm used.
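The split between signing and verifying keys can be seen in a small sketch using .NET's RSA class, where RS256 is RSASSA-PKCS1-v1_5 over SHA-256. The verifier is given only the exported public parameters, standing in for a Service configured with just the Public Key; the header and payload are placeholders.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

static string B64Url(byte[] b) =>
    Convert.ToBase64String(b).TrimEnd('=').Replace('+', '-').Replace('/', '_');

// Issuer: holds the Private Key
using var issuerRsa = RSA.Create(2048);
RSAParameters publicKey = issuerRsa.ExportParameters(includePrivateParameters: false);

string signingInput =
    B64Url(Encoding.UTF8.GetBytes("{\"alg\":\"RS256\",\"typ\":\"JWT\"}")) + "." +
    B64Url(Encoding.UTF8.GetBytes("{\"sub\":\"1\"}"));

// RS256 = RSASSA-PKCS1-v1_5 signature over SHA-256
byte[] signature = issuerRsa.SignData(
    Encoding.ASCII.GetBytes(signingInput), HashAlgorithmName.SHA256, RSASignaturePadding.Pkcs1);
string token = signingInput + "." + B64Url(signature);

// Verifier: only ever receives the Public Key parameters
using var verifierRsa = RSA.Create();
verifierRsa.ImportParameters(publicKey);

string[] parts = token.Split('.');
bool valid = verifierRsa.VerifyData(
    Encoding.ASCII.GetBytes(parts[0] + "." + parts[1]), signature,
    HashAlgorithmName.SHA256, RSASignaturePadding.Pkcs1);

Console.WriteLine(valid); // True
```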

Encrypted JWE Tokens

Something that's not immediately obvious is that while JWT Tokens are signed to prevent tampering and verify authenticity, they're not encrypted and can easily be read by decoding the URL-safe Base64 string. This is a feature of JWT: it allows Client Apps to inspect the User's claims and hide functionality they don't have access to, and it also means JWT Tokens are debuggable and can be inspected whenever you need to track down unexpected behavior.

But there may be times when you want to embed sensitive information in your JWT Tokens in which case you'll want to enable Encryption, which can be done with:

new JwtAuthProvider(AppSettings) {
    PrivateKeyXml = AppSettings.GetString("PrivateKeyXml"),
    EncryptPayload = true
}

When turning on encryption, tokens are instead created following the JSON Web Encryption (JWE) standard where they'll be encoded in the 5-part JWE Compact Serialization format:

BASE64URL(UTF8(JWE Protected Header)) || '.' ||
BASE64URL(JWE Encrypted Key) || '.' ||
BASE64URL(JWE Initialization Vector) || '.' ||
BASE64URL(JWE Ciphertext) || '.' ||
BASE64URL(JWE Authentication Tag)

JwtAuthProvider's JWE implementation uses RSAES OAEP for Key Encryption and AES/128/CBC HMAC SHA256 for Content Encryption, closely following JWE's AES_128_CBC_HMAC_SHA_256 Example where a new MAC Auth and AES Crypt Key and IV are created for each Token. The Content Encryption Key (CEK) used to Encrypt and Authenticate the payload is encrypted using the Public Key and decrypted with the Private Key so only Systems with access to the Private Key will be able to Decrypt, Validate and Read the Token's payload.
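For readers curious about what happens under the hood, the sketch below follows the AES_128_CBC_HMAC_SHA_256 scheme from RFC 7518 (section 5.2.2) combined with RSA-OAEP key encryption, producing the 5-part compact format shown above. It's written from the spec using .NET's Aes, HMACSHA256 and RSA classes rather than taken from JwtAuthProvider, so byte-level details such as the exact protected header may differ from ServiceStack's tokens.

```csharp
using System;
using System.Buffers.Binary;
using System.Linq;
using System.Security.Cryptography;
using System.Text;

using var rsa = RSA.Create(2048);
string token = EncryptJwe("{\"sub\":\"1\",\"secret\":\"for the Auth Service only\"}", rsa);
Console.WriteLine(token.Split('.').Length); // 5
Console.WriteLine(DecryptJwe(token, rsa));

static string B64Url(byte[] b) =>
    Convert.ToBase64String(b).TrimEnd('=').Replace('+', '-').Replace('/', '_');

static byte[] FromB64Url(string s)
{
    s = s.Replace('-', '+').Replace('_', '/');
    return Convert.FromBase64String(s.PadRight(s.Length + (4 - s.Length % 4) % 4, '='));
}

// Authentication Tag: first 16 bytes of HMAC-SHA256(MAC_KEY, AAD || IV || Ciphertext || AL)
static byte[] ComputeTag(byte[] macKey, byte[] aad, byte[] iv, byte[] cipherText)
{
    var al = new byte[8];
    BinaryPrimitives.WriteUInt64BigEndian(al, (ulong)aad.Length * 8); // AAD length in bits
    using var hmac = new HMACSHA256(macKey);
    return hmac.ComputeHash(aad.Concat(iv).Concat(cipherText).Concat(al).ToArray()).Take(16).ToArray();
}

static byte[] AesCbc(byte[] key, byte[] iv, byte[] input, bool encrypt)
{
    using var aes = Aes.Create();
    aes.Mode = CipherMode.CBC;
    aes.Padding = PaddingMode.PKCS7;
    aes.Key = key; // 16-byte key => AES-128
    aes.IV = iv;
    using ICryptoTransform transform = encrypt ? aes.CreateEncryptor() : aes.CreateDecryptor();
    return transform.TransformFinalBlock(input, 0, input.Length);
}

static string EncryptJwe(string payloadJson, RSA recipientKey)
{
    string header = B64Url(Encoding.UTF8.GetBytes("{\"alg\":\"RSA-OAEP\",\"enc\":\"A128CBC-HS256\"}"));
    var cek = new byte[32]; // new Content Encryption Key per token: MAC_KEY || ENC_KEY
    var iv = new byte[16];  // new IV per token
    RandomNumberGenerator.Fill(cek);
    RandomNumberGenerator.Fill(iv);

    byte[] cipherText = AesCbc(cek.Skip(16).ToArray(), iv, Encoding.UTF8.GetBytes(payloadJson), encrypt: true);
    byte[] tag = ComputeTag(cek.Take(16).ToArray(), Encoding.ASCII.GetBytes(header), iv, cipherText);
    byte[] encryptedKey = recipientKey.Encrypt(cek, RSAEncryptionPadding.OaepSHA1); // RSA-OAEP

    return string.Join(".", header, B64Url(encryptedKey), B64Url(iv), B64Url(cipherText), B64Url(tag));
}

static string DecryptJwe(string jwe, RSA recipientKey)
{
    string[] parts = jwe.Split('.');
    byte[] cek = recipientKey.Decrypt(FromB64Url(parts[1]), RSAEncryptionPadding.OaepSHA1);
    byte[] iv = FromB64Url(parts[2]);
    byte[] cipherText = FromB64Url(parts[3]);

    // Verify the tag before decrypting anything
    byte[] expectedTag = ComputeTag(cek.Take(16).ToArray(), Encoding.ASCII.GetBytes(parts[0]), iv, cipherText);
    if (!CryptographicOperations.FixedTimeEquals(expectedTag, FromB64Url(parts[4])))
        throw new CryptographicException("Invalid JWE Authentication Tag");

    return Encoding.UTF8.GetString(AesCbc(cek.Skip(16).ToArray(), iv, cipherText, encrypt: false));
}
```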

Stateless Auth Microservices

One of JWT's most appealing features is its ability to decouple the System that provides User Authentication Services and issues tokens from all other Systems. Those Systems can still provide protected Services without needing access to a User database or Session data store, as sessions can now be embedded in Tokens whose state is maintained and sent by clients instead of being accessed from each App Server. This is ideal for Microservice architectures, where Auth Services can be isolated into a single externalized System.

With this use-case in mind we've decoupled JwtAuthProvider into 2 classes:

- JwtAuthProviderReader - Responsible for validating and creating Authenticated User Sessions from tokens
- JwtAuthProvider - Inherits JwtAuthProviderReader to also be able to Issue, Encrypt and provide access to tokens

Services only Validating Tokens

This lets us configure our Microservices that we want to enable Authentication via JWT Tokens down to just:

public override void Configure(Container container)
{
    Plugins.Add(new AuthFeature(() => new AuthUserSession(),
        new IAuthProvider[] {
            new JwtAuthProviderReader(AppSettings) {
                HashAlgorithm = "RS256",
                PublicKeyXml = AppSettings.GetString("PublicKeyXml")
            },
        }));
}

This no longer needs access to an IUserAuthRepository or Sessions, since they're populated entirely from JWT Tokens. Whilst you can use the default HS256 HashAlgorithm, RSA is ideal for this use-case as you can limit access to the PrivateKey to only the central Auth Service issuing the tokens, and then distribute only the PublicKey to each Service which needs to validate them.
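The reader's job can be sketched in a few steps: split the token, verify the RS256 signature using only the Public Key, then check the iss and exp claims. IssueToken and Validate are hypothetical helpers for illustration; JwtAuthProviderReader performs its own checks (e.g. against the configured HashAlgorithm and Audience) which this sketch doesn't attempt to replicate.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;
using System.Text.Json;

using var issuerRsa = RSA.Create(2048);                       // only the central Auth Service has this
RSAParameters publicKey = issuerRsa.ExportParameters(false);  // distributed to each Microservice
DateTimeOffset now = DateTimeOffset.UtcNow;

string good = IssueToken(issuerRsa, "ssjwt", now.AddDays(14));
string expired = IssueToken(issuerRsa, "ssjwt", now.AddMinutes(-5));

Console.WriteLine(Validate(good, publicKey, "ssjwt", now));    // valid
Console.WriteLine(Validate(expired, publicKey, "ssjwt", now)); // expired

static string B64Url(byte[] b) =>
    Convert.ToBase64String(b).TrimEnd('=').Replace('+', '-').Replace('/', '_');

static byte[] FromB64Url(string s)
{
    s = s.Replace('-', '+').Replace('_', '/');
    return Convert.FromBase64String(s.PadRight(s.Length + (4 - s.Length % 4) % 4, '='));
}

// Hypothetical issuer-side helper, only here to produce test tokens
static string IssueToken(RSA privateKey, string issuer, DateTimeOffset expires)
{
    string header = B64Url(Encoding.UTF8.GetBytes("{\"alg\":\"RS256\",\"typ\":\"JWT\"}"));
    string payload = B64Url(JsonSerializer.SerializeToUtf8Bytes(
        new { iss = issuer, sub = "1", exp = expires.ToUnixTimeSeconds() }));
    byte[] sig = privateKey.SignData(Encoding.ASCII.GetBytes(header + "." + payload),
        HashAlgorithmName.SHA256, RSASignaturePadding.Pkcs1);
    return header + "." + payload + "." + B64Url(sig);
}

// Hypothetical reader-side checks: signature with the Public Key only, then iss and exp
static string Validate(string jwt, RSAParameters verifyKey, string expectedIssuer, DateTimeOffset atTime)
{
    string[] parts = jwt.Split('.');
    if (parts.Length != 3) return "malformed";

    using var rsa = RSA.Create();
    rsa.ImportParameters(verifyKey);
    if (!rsa.VerifyData(Encoding.ASCII.GetBytes(parts[0] + "." + parts[1]), FromB64Url(parts[2]),
            HashAlgorithmName.SHA256, RSASignaturePadding.Pkcs1))
        return "invalid signature";

    using JsonDocument doc = JsonDocument.Parse(Encoding.UTF8.GetString(FromB64Url(parts[1])));
    JsonElement claims = doc.RootElement;
    if (!claims.TryGetProperty("iss", out JsonElement iss) || iss.GetString() != expectedIssuer)
        return "wrong issuer";
    if (!claims.TryGetProperty("exp", out JsonElement exp) || exp.GetInt64() <= atTime.ToUnixTimeSeconds())
        return "expired";
    return "valid";
}
```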

Service Issuing Tokens

As we can now contain all our Systems' Auth Functionality within a single System, we can open it up to support multiple Auth Providers, as they only need to be maintained in a central location whilst still benefiting all our Microservices that are only configured to validate JWT Tokens.

Here's a popular Auth Server configuration example which stores all User Auth information as well as User Sessions in SQL Server and is configured to support many of ServiceStack's Auth and OAuth providers:

public override void Configure(Container container)
{
    //Store UserAuth in SQL Server
    var dbFactory = new OrmLiteConnectionFactory(
        AppSettings.GetString("LiveDb"), SqlServerDialect.Provider);
    container.Register<IDbConnectionFactory>(dbFactory);
    container.Register<IAuthRepository>(c =>
        new OrmLiteAuthRepository(dbFactory) { UseDistinctRoleTables = true });

    //Create UserAuth RDBMS Tables
    container.Resolve<IAuthRepository>().InitSchema();

    //Also store User Sessions in SQL Server
    container.RegisterAs<OrmLiteCacheClient, ICacheClient>();
    container.Resolve<ICacheClient>().InitSchema();

    //Add Support for issuing JWT Tokens after signing in with any of these Auth Providers
    Plugins.Add(new AuthFeature(() => new AuthUserSession(),
        new IAuthProvider[] {
            new JwtAuthProvider(AppSettings) {
                HashAlgorithm = "RS256",
                PrivateKeyXml = AppSettings.GetString("PrivateKeyXml")
            },
            new ApiKeyAuthProvider(AppSettings),       //Sign-in with API Key
            new CredentialsAuthProvider(),             //Sign-in with UserName/Password credentials
            new BasicAuthProvider(),                   //Sign-in with HTTP Basic Auth
            new DigestAuthProvider(AppSettings),       //Sign-in with HTTP Digest Auth
            new TwitterAuthProvider(AppSettings),      //Sign-in with Twitter
            new FacebookAuthProvider(AppSettings),     //Sign-in with Facebook
            new YahooOpenIdOAuthProvider(AppSettings), //Sign-in with Yahoo OpenId
            new OpenIdOAuthProvider(AppSettings),      //Sign-in with Custom OpenId
            new GoogleOAuth2Provider(AppSettings),     //Sign-in with Google OAuth2 Provider
            new LinkedInOAuth2Provider(AppSettings),   //Sign-in with LinkedIn OAuth2 Provider
            new GithubAuthProvider(AppSettings),       //Sign-in with GitHub OAuth Provider
            new YandexAuthProvider(AppSettings),       //Sign-in with Yandex OAuth Provider
            new VkAuthProvider(AppSettings),           //Sign-in with VK.com OAuth Provider
        }));
}

With this setup we can Authenticate using any of the supported Auth Providers with our central Auth Server, retrieve the generated Token and use it to communicate with any of our Microservices configured to validate tokens:

Retrieve Token with API Key

var authClient = new JsonServiceClient(centralAuthBaseUrl) {
    Credentials = new NetworkCredential(apiKey, "")
};

var jwtToken = authClient.Send(new Authenticate()).BearerToken;

var client = new JsonServiceClient(service1BaseUrl) { BearerToken = jwtToken };
var response = client.Get(new Secured { ... });

Retrieve Token with HTTP Basic Auth

var authClient = new JsonServiceClient(centralAuthBaseUrl) {
    Credentials = new NetworkCredential(username, password)
};

var jwtToken = authClient.Send(new Authenticate()).BearerToken;

Retrieve Token with Credentials Auth

var authClient = new JsonServiceClient(centralAuthBaseUrl);

var jwtToken = authClient.Send(new Authenticate {
    provider = "credentials",
    UserName = username,
    Password = password
}).BearerToken;

Convert Sessions to Tokens

Another useful Service that JwtAuthProvider provides is the ability to Convert your current Authenticated Session into a Token. Authenticating via Credentials Auth establishes an Authenticated Session with the server, which is captured in the Session Cookies that get populated on the HTTP Client. This lets us access protected Services immediately after we've successfully Authenticated, e.g:

var authResponse = client.Send(new Authenticate {
    provider = "credentials",
    UserName = username,
    Password = password
});

var response = client.Get(new Secured { ... });

However this only establishes an Authenticated Session with a single Server. Another way we can access our Token is to call the ConvertSessionToToken Service, which will convert our currently Authenticated Session into a JWT Token that we can use instead to communicate with all our independent Services, e.g:

var tokenResponse = client.Send(new ConvertSessionToToken());
var jwtToken = client.GetTokenCookie(); //From ss-tok Cookie

var client2 = new JsonServiceClient(service2BaseUrl) { BearerToken = jwtToken };
var response2 = client2.Get(new Secured2 { ... });

var client3 = new JsonServiceClient(service3BaseUrl) { BearerToken = jwtToken };
var response3 = client3.Get(new Secured3 { ... });

Tokens are returned in the Secure HttpOnly ss-tok Cookie, accessible from the GetTokenCookie() extension method as seen above.

The default behavior of ConvertSessionToToken is to remove the Current Session from the Auth Server, which will prevent access to protected Services using our previously Authenticated Session.

If you still want to preserve your existing Session you can indicate this with:

var tokenResponse = client.Send(new ConvertSessionToToken { PreserveSession = true });

Ajax Clients

Using Cookies is the recommended way for using JWT Tokens in Web Applications, since the HttpOnly Cookie flag prevents the token from being accessible from JavaScript, so it can't be stolen by XSS attacks, whilst the Secure flag ensures that the JWT Token is only ever transmitted over HTTPS. You can convert your Session into a Token and set the ss-tok Cookie in your web page by sending an Ajax request to /session-to-token, e.g:

$.post("/session-to-token");

Likewise this API lets you convert Sessions created by any of the OAuth providers into a stateless JWT Token.

JWT Configuration

The JWT Auth Provider provides the following options to customize its behavior:

class JwtAuthProviderReader

{

// The RSA Bit Key Length to use

static RsaKeyLengths UseRsaKeyLength = RsaKeyLengths.Bit2048

// Different HMAC Algorithms supported

Dictionary<string, Func<byte[], byte[], byte[]>> HmacAlgorithms

// Different RSA Signing Algorithms supported

Dictionary<string, Func<RSAParameters, byte[], byte[]>> RsaSignAlgorithms

Dictionary<string, Func<RSAParameters, byte[], byte[], bool>> RsaVerifyAlgorithms

// Whether to only allow access via JWT Token from a secure connection. (default true)

bool RequireSecureConnection

// Run custom filter after JWT Header is created

Action<JsonObject, IAuthSession> CreateHeaderFilter

// Run custom filter after JWT Payload is created

Action<JsonObject, IAuthSession> CreatePayloadFilter

// Run custom filter after session is restored from a JWT Token

Action<IAuthSession, JsonObject, IRequest> PopulateSessionFilter

// Whether to encrypt JWE Payload (default false).

// Uses RSA-OAEP for Key Encryption and AES/128/CBC HMAC SHA256 for Content Encryption

bool EncryptPayload

// Which Hash Algorithm should be used to sign the JWT Token. (default HS256)

string HashAlgorithm

// Whether to only allow processing of JWT Tokens using the configured HashAlgorithm. (default true)

bool RequireHashAlgorithm

// The Issuer to embed in the token. (default ssjwt)

string Issuer

// The Audience to embed in the token. (default null)

string Audience

// What Id to use to identify the Key used to sign the token. (default First 3 chars of Base64 Key)

string KeyId

// The AuthKey used to sign the JWT Token

byte[] AuthKey

// Convenient overload to initialize AuthKey with Base64 string

string AuthKeyBase64

// The RSA Private Key used to Sign the JWT Token when RSA is used

RSAParameters? PrivateKey

// Convenient overload to initialize the Private Key via exported XML

string PrivateKeyXml

// The RSA Public Key used to Verify the JWT Token when RSA is used

RSAParameters? PublicKey

// Convenient overload to initialize the Public Key via exported XML

string PublicKeyXml

// How long should JWT Tokens be valid for. (default 14 days)

TimeSpan ExpireTokensIn

// Whether to invalidate all JWT Tokens issued before a specified date.

DateTime? InvalidateTokensIssuedBefore

// Modify the registration of ConvertSessionToToken Service

Dictionary<Type, string[]> ServiceRoutes

}
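To make the HashAlgorithm and AuthKey options above more concrete, here's a minimal sketch of what HS256 signing involves, written against the .NET BCL only. It's not the JwtAuthProviderReader implementation: the JwtSketch class, its method names and the hard-coded header are assumptions for illustration, and CryptographicOperations.FixedTimeEquals requires .NET Core 2.1 or later.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

// Minimal HS256 JWT sketch using only the .NET BCL (not ServiceStack's implementation)
public static class JwtSketch
{
    // Base64Url (RFC 7515): standard Base64 with '+' -> '-', '/' -> '_' and no '=' padding
    public static string Base64UrlEncode(byte[] bytes) =>
        Convert.ToBase64String(bytes).TrimEnd('=').Replace('+', '-').Replace('/', '_');

    // HMAC-SHA256 over the signing input, keyed with the shared AuthKey
    static byte[] Sign(string signingInput, byte[] authKey)
    {
        using (var hmac = new HMACSHA256(authKey))
            return hmac.ComputeHash(Encoding.UTF8.GetBytes(signingInput));
    }

    // Returns "header.payload.signature", where the signature covers "header.payload"
    public static string CreateHs256Token(string payloadJson, byte[] authKey)
    {
        const string headerJson = "{\"alg\":\"HS256\",\"typ\":\"JWT\"}";
        var signingInput = Base64UrlEncode(Encoding.UTF8.GetBytes(headerJson)) + "."
                         + Base64UrlEncode(Encoding.UTF8.GetBytes(payloadJson));
        return signingInput + "." + Base64UrlEncode(Sign(signingInput, authKey));
    }

    // Any Service holding the same AuthKey can recompute and compare the signature
    public static bool VerifyHs256Token(string token, byte[] authKey)
    {
        var parts = token.Split('.');
        if (parts.Length != 3) return false;
        var expected = Base64UrlEncode(Sign(parts[0] + "." + parts[1], authKey));
        // Constant-time comparison avoids leaking how many characters matched
        return CryptographicOperations.FixedTimeEquals(
            Encoding.ASCII.GetBytes(expected), Encoding.ASCII.GetBytes(parts[2]));
    }
}
```

Because verification only needs the token and the shared key, each independent Service can validate a token without calling back to the Auth Server, which is what makes the approach stateless.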

Further Examples

More examples of both the new API Key and JWT Auth Providers are available in StatelessAuthTests.

OrmLite

Cleaner, Modernized API Surface

As mentioned in the last release, we've moved OrmLite's deprecated APIs into the ServiceStack.OrmLite.Legacy namespace, leaving a clean, modern API surface in OrmLite's default namespace.

This primarily affects the original OrmLite APIs ending with *Fmt, which were used to provide a familiar API for C# developers based on C#'s string.Format(), e.g:

var tracks = db.SelectFmt<Track>("Artist = {0} AND Album = {1}", "Nirvana", "Nevermind");

Whilst you can continue using the legacy API by adding the ServiceStack.OrmLite.Legacy namespace, it's also a good time to consider switching to any of the recommended parameterized APIs below:

var tracks = db.Select<Track>(x => x.Artist == "Nirvana" && x.Album == "Nevermind");

var q = db.From<Track>()

.Where(x => x.Artist == "Nirvana" && x.Album == "Nevermind");

var tracks = db.Select(q);

var tracks = db.Select<Track>("Artist = @artist AND Album = @album",

new { artist = "Nirvana", album = "Nevermind" });

var tracks = db.SqlList<Track>(

"SELECT * FROM Track WHERE Artist = @artist AND Album = @album",

new { artist = "Nirvana", album = "Nevermind" });
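The parameterized APIs above take an anonymous object whose property names match the @-prefixed placeholders. As a rough sketch of that mapping (the ParamSketch helper is hypothetical, not OrmLite's code), reflection over the anonymous type yields one named parameter per property, which an ADO.NET provider would then add to the command's Parameters collection:

```csharp
using System;
using System.Collections.Generic;
using System.Reflection;

// Hypothetical helper: maps new { artist = "...", album = "..." } to @artist, @album params
public static class ParamSketch
{
    public static Dictionary<string, object> ToDbParams(object anonType, string prefix = "@")
    {
        var dbParams = new Dictionary<string, object>();
        if (anonType == null) return dbParams;

        foreach (PropertyInfo pi in anonType.GetType().GetProperties(BindingFlags.Public | BindingFlags.Instance))
        {
            // Values travel separately from the SQL text, so they're never spliced into the query;
            // nulls are sent as DBNull.Value, which is what ADO.NET expects
            dbParams[prefix + pi.Name] = pi.GetValue(anonType) ?? DBNull.Value;
        }
        return dbParams;
    }
}
```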

The other APIs that have been moved are those that inject an SqlExpression<T>, e.g:

var tracks = db.Select<Track>(q =>

q.Where(x => x.Artist == "Nirvana" && x.Album == "Nevermind"));

This overload would confuse IntelliSense since lambdas could be injected with either T or SqlExpression<T>. We've now removed the SqlExpression<T> overloads so only the typed POCO parameter is injected. Existing code can use db.From<T>() to resolve any build errors, e.g:

var tracks = db.Select(db.From<Track>()

.Where(x => x.Artist == "Nirvana" && x.Album == "Nevermind"));

Parameterized by default

The OrmLiteConfig.UseParameterizeSqlExpressions option, which could be used to disable parameterized SqlExpressions and revert to using in-line escaped SQL, has been removed along with all its dependent functionality, so all queries now use db params.

Improved partial Updates and Inserts APIs

One of the limitations of LINQ Expressions is their lack of support for assignment expressions, which meant we previously needed to specify separately which fields you wanted updated in partial updates, e.g:

db.UpdateOnly(new Poco { Age = 22 }, onlyFields:p => p.Age, where:p => p.Name == "Justin Bieber");

//increments age by 1

db.UpdateAdd(new Poco { Age = 1 }, onlyFields:p => p.Age, where:p => p.Name == "Justin Bieber");

Taking a leaf from PocoDynamo we've added a better API using a lambda expression which now saves us from having to specify which fields to update twice since we're able to infer them from the returned Member Init Expression, e.g:

db.UpdateOnly(() => new Poco { Age = 22 }, where: p => p.Name == "Justin Bieber");

//increments age by 1

db.UpdateAdd(() => new Poco { Age = 1 }, where: p => p.Name == "Justin Bieber");
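The new overloads work because a lambda like () => new Poco { Age = 22 } is captured as an expression tree whose body is a MemberInitExpression listing exactly the assigned members. A minimal sketch of extracting those fields and their values, using a hypothetical GetAssignedFields helper rather than OrmLite's internal code:

```csharp
using System;
using System.Collections.Generic;
using System.Linq.Expressions;

public class Poco
{
    public int Id { get; set; }
    public string Name { get; set; }
    public int Age { get; set; }
}

// Sketch: infer which fields to update from a Member Init Expression
public static class UpdateFieldsSketch
{
    public static Dictionary<string, object> GetAssignedFields<T>(Expression<Func<T>> updateFields)
    {
        if (!(updateFields.Body is MemberInitExpression init))
            throw new ArgumentException("Expected a member init expression, e.g. () => new T { Prop = value }");

        var fields = new Dictionary<string, object>();
        foreach (var binding in init.Bindings)
        {
            if (binding is MemberAssignment assign)
            {
                // Evaluate the right-hand side, which may reference captured variables
                var value = Expression.Lambda(assign.Expression).Compile().DynamicInvoke();
                fields[assign.Member.Name] = value;
            }
        }
        return fields;
    }
}
```

Only the members that appear in the initializer are returned, so unassigned properties like Id and Name are left out of the UPDATE. Compiling each binding is the simplest approach for a sketch; a production implementation would likely avoid compiling on every call.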

Like most APIs, async equivalents are also available:

await db.UpdateOnlyAsync(() => new Poco { Age = 22 }, where: p => p.Name == "Justin Bieber");

//increments age by 1

await db.UpdateAddAsync(() => new Poco { Age = 1 }, where: p => p.Name == "Justin Bieber");

Likewise we've extended this feature to partial INSERTs as well:

db.InsertOnly(() => new Poco { Name = "Justin Bieber", Age = 22 });

await db.InsertOnlyAsync(() => new Poco { Name = "Justin Bieber", Age = 22 });

New ColumnExists API

We've added support for a Typed ColumnExists API across all supported RDBMSs, which makes it easy to inspect the state of an RDBMS Table so you can determine what modifications to make to it, e.g:

class Poco

{

public int Id { get; set; }

public string Name { get; set; }

public string Ssn { get; set; }

}

db.DropTable<Poco>();

db.TableExists<Poco>(); //= false

db.CreateTable<Poco>();

db.TableExists<Poco>(); //= true

db.ColumnExists<Poco>(x => x.Ssn); //= true

db.DropColumn<Poco>(x => x.Ssn);

db.ColumnExists<Poco>(x => x.Ssn); //= false

In a future version of your Table POCO you can use ColumnExists to detect which columns haven't been added yet, then use AddColumn to add them, e.g:

class Poco

{

public int Id { get; set; }

public string Name { get; set; }

[Default(0)]

public int Age { get; set; }

}

if (!db.ColumnExists<Poco>(x => x.Age)) //= false

db.AddColumn<Poco>(x => x.Age);

db.ColumnExists<Poco>(x => x.Age); //= true

The [Default] attribute lets you specify a default value, which is required when adding non-nullable columns to a table with existing rows.
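Typed APIs such as ColumnExists<Poco>(x => x.Age) first need to turn the lambda into a column name. A sketch of that step, assuming an Expression<Func<T, object>> parameter (OrmLite's exact signature may differ) and leaving out the RDBMS-specific schema lookup that would follow:

```csharp
using System;
using System.Linq.Expressions;

public class Poco
{
    public int Id { get; set; }
    public string Name { get; set; }
    public int Age { get; set; }
}

public static class ColumnNameSketch
{
    // Resolves the property name from x => x.Prop
    public static string GetColumnName<T>(Expression<Func<T, object>> field)
    {
        Expression body = field.Body;
        // Value-type properties such as int Age are wrapped in a Convert node
        // because the lambda has to box them to object
        if (body is UnaryExpression unary && unary.NodeType == ExpressionType.Convert)
            body = unary.Operand;
        if (body is MemberExpression member)
            return member.Member.Name;
        throw new ArgumentException("Expected a property access expression, e.g. x => x.Age");
    }
}
```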

New SelectMulti API

Previously the only Typed API available to select data across multiple joined tables was to use a Custom POCO with all the columns you want from any of the joined tables, e.g:

List<FullCustomerInfo> customers = db.Select<FullCustomerInfo>(

db.From<Customer>().Join<CustomerAddress>());

The new SelectMulti API now lets you use your existing POCOs to access results from multiple joined tables by returning them in a Typed Tuple:

var q = db.From<Customer>()

.Join<Customer, CustomerAddress>()

.Join<Customer, Order>()

.Where(x => x.CreatedDate >= new DateTime(2016,01,01))

.And<CustomerAddress>(x => x.Country == "Australia");

var results = db.SelectMulti<Customer, CustomerAddress, Order>(q);

foreach (var tuple in results)

{

Customer customer = tuple.Item1;

CustomerAddress custAddress = tuple.Item2;

Order custOrder = tuple.Item3;

}

Using OrmLite with Dapper

One of the benefits of Micro ORMs is that they can be used together, so alternatively you could use OrmLite to create the typed query using its built-in Reference Conventions, then use OrmLite's embedded version of Dapper to map the results into your existing POCOs:

var q = db.From<Customer>()

.Join<Customer, CustomerAddress>()

.Join<Customer, Order>()

.Where(x => x.CreatedDate >= new DateTime(2016,01,01))

.And<CustomerAddress>(x => x.Country == "Australia")

.Select("*");

using (var multi = db.QueryMultiple(q.ToSelectStatement()))

{

var results = multi.Read<Customer, CustomerAddress, Order,

Tuple<Customer,CustomerAddress,Order>>(Tuple.Create).ToList();

foreach (var tuple in results)

{

Customer customer = tuple.Item1;

CustomerAddress custAddress = tuple.Item2;

Order custOrder = tuple.Item3;

}

}

Multiple APIs to fetch data

One of the benefits of OrmLite is that how a query is constructed and executed is independent from how its results are mapped. So it doesn't matter whether the query used raw custom SQL or a Typed SQL Expression, OrmLite just looks at the dataset returned to work out how the results should be mapped. This allows you to use any of the loose-typed Convenience APIs to fetch data from multiple joined tables. In addition to using untyped .NET Collections, we've now also added support for Select<dynamic>:

var q = db.From<Employee>()

.Join<Department>()

.Select<Employee, Department>((e, d) => new { e.FirstName, e.LastName, d.Name });

List<dynamic> results = db.Select<dynamic>(q);

foreach (dynamic result in results)

{

string firstName = result.FirstName;

string lastName = result.LastName;

string deptName = result.Name;

}

CustomSelect Attribute

The new [CustomSelect] attribute can be used to define properties you want populated from a Custom SQL Function or Expression instead of a normal persisted column, e.g:

public class Block

{

public int Id { get; set; }

public int Width { get; set; }

public int Height { get; set; }

[CustomSelect("Width * Height")]

public int Area { get; set; }

[Default(OrmLiteVariables.SystemUtc)]

public DateTime CreatedDate { get; set; }

[CustomSelect("FORMAT(CreatedDate, 'yyyy-MM-dd')")]

public string DateFormat { get; set; }

}

db.Insert(new Block { Id = 1, Width = 10, Height = 5 });

var block = db.SingleById<Block>(1);

block.Area.Print(); //= 50

block.DateFormat.Print(); //= 2016-06-08
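Conceptually, [CustomSelect] swaps a property's column name for its SQL expression when the SELECT list is generated. The sketch below illustrates that idea with a hypothetical SelectExprAttribute stand-in and ANSI double-quoted identifiers; OrmLite's actual SQL generation and quoting are dialect-specific and not shown here:

```csharp
using System;
using System.Linq;

// Hypothetical stand-in for [CustomSelect]: marks a property populated from a SQL expression
[AttributeUsage(AttributeTargets.Property)]
public class SelectExprAttribute : Attribute
{
    public string Sql { get; }
    public SelectExprAttribute(string sql) => Sql = sql;
}

public class Block
{
    public int Id { get; set; }
    public int Width { get; set; }
    public int Height { get; set; }

    [SelectExpr("Width * Height")]
    public int Area { get; set; }
}

public static class SelectListSketch
{
    // Plain properties map to quoted column names; attributed properties
    // are emitted as "<expression> AS <Property>" so results still map by name
    public static string BuildSelectList(Type type) =>
        string.Join(", ", type.GetProperties().Select(p =>
        {
            var attr = (SelectExprAttribute)Attribute.GetCustomAttribute(p, typeof(SelectExprAttribute));
            return attr != null ? $"{attr.Sql} AS \"{p.Name}\"" : $"\"{p.Name}\"";
        }));
}
```

Since the expression is emitted verbatim, it has to be valid SQL for your RDBMS; for example FORMAT() in the Block example above is a SQL Server function.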

OrmLiteAuthRepository

OrmLiteAuthRepository can now be configured to store User Auth data in a separate database with:

container.Register<IAuthRepository>(c =>

new OrmLiteAuthRepository(c.Resolve<IDbConnectionFactory>(), namedConnection:"AuthDb"));

Optimize LIKE Searches

One of the primary goals of OrmLite is to expose an RDBMS-agnostic Typed API Surface, which allows you to easily switch databases, or access multiple databases at the same time with the same behavior.

One instance where this can have an impact is needing to use UPPER() in LIKE searches to enable case-insensitive LIKE queries across all RDBMSs. The drawback is that these LIKE queries are then not able to use any existing RDBMS indexes. We can disable this feature and return to the default RDBMS behavior with:

OrmLiteConfig.StripUpperInLike = true;

This allows all LIKE searches in OrmLite or AutoQuery to use any available RDBMS Index, with case-sensitivity then determined by your RDBMS and its collation.
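The difference between the two modes comes down to the shape of the generated predicate. This hypothetical BuildLike helper only illustrates the idea; the exact SQL OrmLite generates may differ:

```csharp
// Hypothetical illustration of the two predicate shapes; OrmLite's generated SQL may differ
public static class LikeSketch
{
    public static string BuildLike(string column, string paramName, bool stripUpperInLike) =>
        stripUpperInLike
            // Plain column comparison: the RDBMS is free to use an index on the column,
            // and case-sensitivity follows the column's collation
            ? $"{column} LIKE {paramName}"
            // A function applied to the column on every row gives portable case-insensitivity,
            // but a regular index on the column can't be used
            : $"UPPER({column}) LIKE UPPER({paramName})";
}
```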

OrmLite Community Contributions

We'd like to thank Evgeny Morozov from the ServiceStack Community who contributed several fixes and performance improvements to OrmLite in this release.

ServiceStack.Redis

New Redis GEO Operations

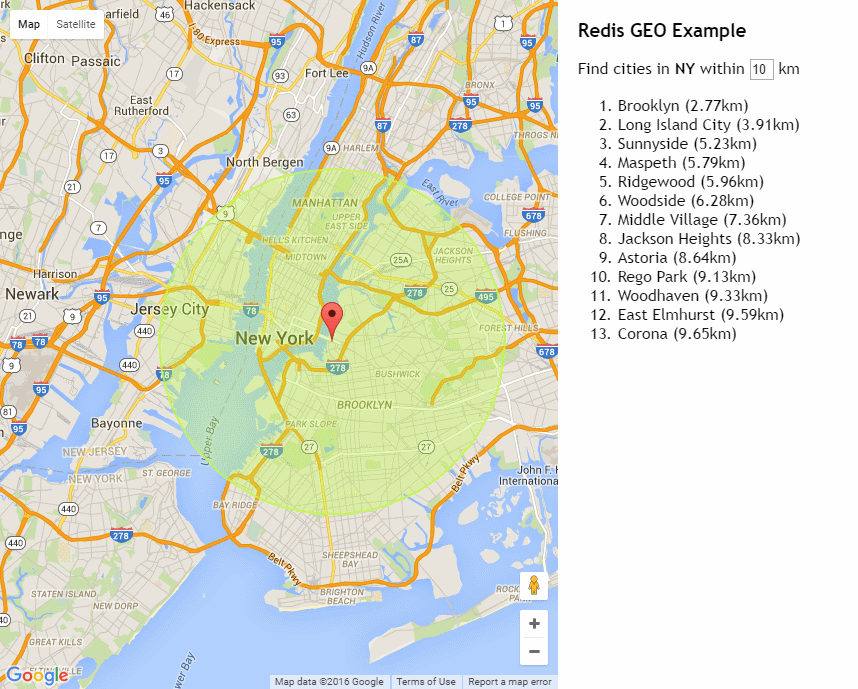

The latest release of Redis 3.2.0 brings exciting new GEO capabilities which let you store Lat/Long coordinates in Redis and query locations within a specified radius.

To demonstrate this functionality we've created a new Redis GEO Live Demo which lets you click anywhere in the U.S. to find the list of nearest cities within a given radius:

Live Demo: http://redisgeo.netcore.io

The Redis GEO demo simply imports the coordinates for each State from the geonames.org postal data for the specified country.

Only the single Service below was required to implement the App's functionality, which just returns all locations for a given state within a specified radius, sorted by nearest location:

[Route("/georesults/{State}")]

public class FindGeoResults : IReturn<List<RedisGeoResult>>

{

public string State { get; set; }

public long? WithinKm { get; set; }

public double Lng { get; set; }

public double Lat { get; set; }

}

public class RedisGeoServices : Service

{

public object Any(FindGeoResults request)

{

var results = Redis.FindGeoResultsInRadius(request.State,

longitude: request.Lng, latitude: request.Lat,

radius: request.WithinKm.GetValueOrDefault(20), unit: RedisGeoUnit.Kilometers,

sortByNearest: true);

return results;

}

}
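Redis performs the radius search server-side, but it can help to see what FindGeoResultsInRadius with sortByNearest: true computes. This in-memory sketch uses the haversine formula with the Earth radius Redis uses for GEO distances; GeoPoint and GeoSketch are hypothetical names, and unlike Redis it scans every point instead of using a geohash index:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public class GeoPoint
{
    public string Member { get; set; }
    public double Longitude { get; set; }
    public double Latitude { get; set; }
}

public static class GeoSketch
{
    // Earth radius used by Redis's GEO distance calculation (6372797.560856 meters)
    const double EarthRadiusKm = 6372.797560856;

    static double ToRadians(double degrees) => degrees * Math.PI / 180.0;

    // Great-circle distance between two points using the haversine formula
    public static double HaversineKm(double lng1, double lat1, double lng2, double lat2)
    {
        var dLat = ToRadians(lat2 - lat1);
        var dLng = ToRadians(lng2 - lng1);
        var a = Math.Sin(dLat / 2) * Math.Sin(dLat / 2)
              + Math.Cos(ToRadians(lat1)) * Math.Cos(ToRadians(lat2))
              * Math.Sin(dLng / 2) * Math.Sin(dLng / 2);
        return 2 * EarthRadiusKm * Math.Asin(Math.Sqrt(a));
    }

    // Brute-force equivalent of a radius query, sorted by nearest first
    public static List<(string Member, double DistanceKm)> FindInRadius(
        IEnumerable<GeoPoint> points, double lng, double lat, double radiusKm) =>
        points.Select(p => (p.Member, DistanceKm: HaversineKm(lng, lat, p.Longitude, p.Latitude)))
              .Where(r => r.DistanceKm <= radiusKm)
              .OrderBy(r => r.DistanceKm)
              .ToList();
}
```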

Redis's new GEO Operations are available in the new ServiceStack.Redis APIs below:

public interface IRedisClient

{

//...

long AddGeoMember(string key, double longitude, double latitude, string member);

long AddGeoMembers(string key, params RedisGeo[] geoPoints);

double CalculateDistanceBetweenGeoMembers(string key, string from, string to, string unit=null);

string[] GetGeohashes(string key, params string[] members);

List<RedisGeo> GetGeoCoordinates(string key, params string[] members);

string[] FindGeoMembersInRadius(string key, double lng, double lat, double radius, string unit);

List<RedisGeoResult> FindGeoResultsInRadius(string key, double lng, double lat, double radius,

string unit, int? count = null, bool? sortByNearest = null);

string[] FindGeoMembersInRadius(string key, string member, double radius, string unit);

List<RedisGeoResult> FindGeoResultsInRadius(string key, string member, double radius,

string unit, int? count = null, bool? sortByNearest = null);

}

public interface IRedisNativeClient

{

//...

long GeoAdd(string key, double longitude, double latitude, string member);

long GeoAdd(string key, params RedisGeo[] geoPoints);

double GeoDist(string key, string fromMember, string toMember, string unit = null);

string[] GeoHash(string key, params string[] members);

List<RedisGeo> GeoPos(string key, params string[] members);

List<RedisGeoResult> GeoRadius(string key, double lng, double lat, double radius, string unit,

bool withCoords=false, bool withDist=false, bool withHash=false, int? count=null, bool? asc=null);

List<RedisGeoResult> GeoRadiusByMember(string key, string member, double radius, string unit,

bool withCoords=false, bool withDist=false, bool withHash=false, int? count=null, bool? asc=null);

}

Binary Key APIs

New APIs were added to RedisNativeClient which let you persist and access data using a binary key:

class RedisNativeClient

{

void Set(byte[] key, byte[] value, int expirySeconds, long expiryMs = 0);

void SetEx(byte[] key, int expireInSeconds, byte[] value);

byte[] Get(byte[] key);

long HSet(byte[] hashId, byte[] key, byte[] value);

byte[] HGet(byte[] hashId, byte[] key);

long Del(byte[] key);

long HDel(byte[] hashId, byte[] key);

bool PExpire(byte[] key, long ttlMs);

bool Expire(byte[] key, int seconds);

}

Slack Logger

The new Slack Logger can be used to send log messages to a custom Slack Channel, which is a nice interactive way for your development team on Slack to see and discuss logging messages as they come in.

To start using it, first install it from NuGet:

PM> Install-Package ServiceStack.Logging.Slack

Then configure it with the channels you want to log to, e.g:

LogManager.LogFactory = new SlackLogFactory("{GeneratedSlackUrlFromCreatingIncomingWebhook}",

debugEnabled:true)

{

//Alternate default channel than one specified when creating Incoming Webhook.

DefaultChannel = "other-default-channel",

//Custom channel for Fatal logs. Warn, Info etc will fallback to DefaultChannel or

//channel specified when Incoming Webhook was created.

FatalChannel = "more-grog-logs",

//Custom bot username other than default

BotUsername = "Guybrush Threepwood",

//Custom channel prefix can be provided to help filter logs from different users or environments.

ChannelPrefix = System.Security.Principal.WindowsIdentity.GetCurrent().Name

};

//Alternatively, configure it from your App Settings:

LogManager.LogFactory = new SlackLogFactory(appSettings);

Some more usage examples are available in SlackLogFactoryTests.

Performance and Memory improvements

Several performance and memory usage improvements were also added across the board in this release, where all ServiceStack libraries have now switched to using a ThreadStatic StringBuilder Cache where possible to reuse existing StringBuilder instances and save on Heap allocations.

For similar improvements you can also use the new StringBuilderCache in your own code, where you just need to call Allocate() to get access to a reset StringBuilder instance and call ReturnAndFree() when you're done to access the string and return the StringBuilder to the cache, e.g:

public static string ToMd5Hash(this Stream stream)

{

var hash = MD5.Create().ComputeHash(stream);

var sb = StringBuilderCache.Allocate();

for (var i = 0; i < hash.Length; i++)

{

sb.Append(hash[i].ToString("x2"));

}

return StringBuilderCache.ReturnAndFree(sb);

}

There's also a StringBuilderCacheAlt for when you need access to 2x StringBuilders at the same time.
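A simplified sketch of how a [ThreadStatic] cache like this can work, assuming a single cached instance per thread (StringBuilderCacheSketch is a hypothetical re-implementation, not ServiceStack's source). In this sketch a nested Allocate() before ReturnAndFree() simply gets a new StringBuilder, which is the "two at the same time" situation a second cache like StringBuilderCacheAlt avoids:

```csharp
using System;
using System.Text;

// Sketch of a per-thread StringBuilder cache holding at most one instance per thread
public static class StringBuilderCacheSketch
{
    [ThreadStatic]
    private static StringBuilder cache;

    public static StringBuilder Allocate()
    {
        var sb = cache;
        if (sb == null) return new StringBuilder();
        cache = null;     // take ownership so a nested Allocate() can't hand out the same instance
        sb.Length = 0;    // reset any previous contents
        return sb;
    }

    public static string ReturnAndFree(StringBuilder sb)
    {
        var result = sb.ToString();
        cache = sb;       // make it available to the next Allocate() on this thread
        return result;
    }
}
```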

String Parsing APIs

In several areas we used the SplitOnFirst() and SplitOnLast() String extension methods to parse strings, which return a string[] containing the string components we were interested in, e.g. we can use them to fetch the Type name from a Generic Type Definition with:

var genericTypeName = type.GetGenericTypeDefinition().Name.SplitOnFirst('`')[0];

Whilst this works, it creates a temporary array to store the components. We can get the same result and save a Heap Allocation by using the new LeftPart API instead:

var genericTypeName = type.GetGenericTypeDefinition().Name.LeftPart('`');

Which in addition to saving unnecessary allocations, is shorter and reads better, win, win!

The new APIs have the same behavior as the existing SplitOnFirst() and SplitOnLast() extension methods below:

str.LeftPart(':') == str.SplitOnFirst(':')[0]

str.RightPart(':') == str.SplitOnFirst(':').Last()

str.LastLeftPart(':') == str.SplitOnLast(':')[0]

str.LastRightPart(':') == str.SplitOnLast(':').Last()
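Each of these can be implemented with a single IndexOf() or LastIndexOf() plus one Substring(), which is where the allocation savings come from. A sketch that follows the equivalences above; these are illustrative re-implementations in a hypothetical StringPartsSketch class, so don't import them alongside ServiceStack.Text's versions:

```csharp
// Illustrative re-implementations; when the needle isn't found the whole string is returned,
// matching SplitOnFirst()/SplitOnLast() returning a single-element array
public static class StringPartsSketch
{
    public static string LeftPart(this string str, char needle)
    {
        var pos = str.IndexOf(needle);
        return pos == -1 ? str : str.Substring(0, pos);
    }

    public static string RightPart(this string str, char needle)
    {
        var pos = str.IndexOf(needle);
        return pos == -1 ? str : str.Substring(pos + 1);
    }

    public static string LastLeftPart(this string str, char needle)
    {
        var pos = str.LastIndexOf(needle);
        return pos == -1 ? str : str.Substring(0, pos);
    }

    public static string LastRightPart(this string str, char needle)
    {
        var pos = str.LastIndexOf(needle);
        return pos == -1 ? str : str.Substring(pos + 1);
    }
}
```

For example, "a:b:c".RightPart(':') returns "b:c" whilst "a:b:c".LastRightPart(':') returns "c".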

TypeConstants

We've switched to using the new TypeConstants class, which holds static instances of many popular empty collections and Task<T> results that you can reuse instead of creating new instances, e.g:

var emptyStrings = TypeConstants.EmptyStringArray;      //instead of new string[0]

var emptyObjects = TypeConstants.EmptyObjectArray;      //instead of new object[0]

var emptyCustom = TypeConstants<CustomType>.EmptyArray; //instead of new CustomType[0]
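The underlying pattern is a static readonly field holding a shared instance; since a zero-length array can't be modified, one instance can safely be reused everywhere. A sketch with hypothetical names (.NET Framework 4.6+ and .NET Core also ship Array.Empty<T>() for the same purpose):

```csharp
using System.Threading.Tasks;

// Hypothetical names: shared instances to hand out instead of allocating new ones
public static class TypeConstantsSketch
{
    public static readonly string[] EmptyStringArray = new string[0];
    public static readonly object[] EmptyObjectArray = new object[0];

    // A completed Task<bool> can also be shared, since its result never changes
    public static readonly Task<bool> TrueTask = Task.FromResult(true);
}

// A generic static class gets its own static fields per closed type, so
// TypeConstantsSketch<int>.EmptyArray and TypeConstantsSketch<string>.EmptyArray are separate instances
public static class TypeConstantsSketch<T>
{
    public static readonly T[] EmptyArray = new T[0];
}
```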

CachedExpressionCompiler

We've added MVC's CachedExpressionCompiler to ServiceStack.Common and, where possible, are now using it in place of compiling LINQ expressions directly in all of ServiceStack's libraries.
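The benefit comes from not paying for Expression.Compile() on every call. MVC's compiler fingerprints whole expression trees; the much simpler sketch below only handles member-access lambdas like x => x.Age, caching one compiled delegate per property (CachedGetterSketch and Person are hypothetical names):

```csharp
using System;
using System.Collections.Concurrent;
using System.Linq.Expressions;
using System.Reflection;

public class Person
{
    public string Name { get; set; }
    public int Age { get; set; }
}

// Simplified sketch: caches compiled delegates for x => x.Prop lambdas, keyed by property
public static class CachedGetterSketch<T>
{
    static readonly ConcurrentDictionary<MemberInfo, Func<T, object>> cache =
        new ConcurrentDictionary<MemberInfo, Func<T, object>>();

    public static Func<T, object> Get(Expression<Func<T, object>> expr)
    {
        // Value-type properties are wrapped in a Convert node that boxes them to object
        var body = expr.Body is UnaryExpression u && u.NodeType == ExpressionType.Convert
            ? u.Operand : expr.Body;

        // Only direct member access on the lambda's parameter is cached; anything else is compiled each time
        if (!(body is MemberExpression member) || member.Expression != expr.Parameters[0])
            return expr.Compile();

        // Separate but equivalent lambdas for the same property share one compiled delegate
        return cache.GetOrAdd(member.Member, _ => expr.Compile());
    }
}
```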

GetActivator and GetCachedGenericType

The new GetCachedGenericType() and GetActivator() APIs cache and improve performance when dynamically creating instances of generic types. We can use them to return a cached compiled delegate accepting any number of object[] args to dynamically create instances of Typed Tuples, e.g:

var genericArgs = new[] { typeof(TypeA), typeof(TypeB) };

Type genericType = typeof(Tuple<,>).GetCachedGenericType(genericArgs);

var ctor = genericType.GetConstructor(genericArgs);

var activator = ctor.GetActivator();

var tuple = (Tuple<TypeA, TypeB>) activator(new TypeA(), new TypeB());
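A delegate like the one GetActivator() returns can be built by compiling a ConstructorInfo into an expression tree once and reusing it. This sketch uses hypothetical ActivatorSketch/ActivatorFactory names, omits the caching, and calls MakeGenericType() directly in its usage:

```csharp
using System;
using System.Linq;
using System.Linq.Expressions;
using System.Reflection;

public delegate object ActivatorSketch(params object[] args);

public static class ActivatorFactory
{
    // Compiles args => new T((A0)args[0], (A1)args[1], ...) for the given constructor
    public static ActivatorSketch CreateActivator(ConstructorInfo ctor)
    {
        var argsParam = Expression.Parameter(typeof(object[]), "args");
        var ctorArgs = ctor.GetParameters()
            .Select((p, i) => (Expression)Expression.Convert(
                Expression.ArrayIndex(argsParam, Expression.Constant(i)), p.ParameterType))
            .ToArray();
        // Box the new instance back to object so one delegate type fits any constructor
        var body = Expression.Convert(Expression.New(ctor, ctorArgs), typeof(object));
        return Expression.Lambda<ActivatorSketch>(body, argsParam).Compile();
    }
}
```

Compiling once and reusing the delegate avoids the reflection overhead of ConstructorInfo.Invoke() or Activator.CreateInstance() on every call, which only pays off when the delegate itself is cached.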

Object Pools

We've added the Object pooling classes that Roslyn's code-base uses in ServiceStack.Text.Pools, which let you create reusable object pools of instances. The available pools include:

ObjectPool<T>

PooledObject<T>

SharedPools

StringBuilderPool
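The idea behind all of these pools is to hand out a previously freed instance when one is available instead of allocating a new one. A minimal sketch, using a hypothetical SimplePool<T> backed by ConcurrentBag<T> (Roslyn's pools are more heavily optimized than this):

```csharp
using System;
using System.Collections.Concurrent;

// Minimal object pool sketch (hypothetical SimplePool<T>)
public class SimplePool<T> where T : class
{
    private readonly ConcurrentBag<T> items = new ConcurrentBag<T>();
    private readonly Func<T> factory;
    private readonly Action<T> reset;

    public SimplePool(Func<T> factory, Action<T> reset = null)
    {
        this.factory = factory ?? throw new ArgumentNullException(nameof(factory));
        this.reset = reset;
    }

    // Reuse a pooled instance when available, otherwise create a new one
    public T Allocate() => items.TryTake(out var item) ? item : factory();

    // Reset the instance and return it so a later Allocate() can reuse it
    public void Free(T item)
    {
        reset?.Invoke(item);
        items.Add(item);
    }
}
```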

Enum.HasFlag

To reduce allocations we've replaced our usage of Enum.HasFlag with bitwise operations.
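The bitwise check is a one-line replacement. A sketch with a hypothetical Permissions enum; on older .NET Framework runtimes Enum.HasFlag boxes its argument, which is the allocation being avoided, whereas newer .NET Core JITs optimize the call:

```csharp
using System;

[Flags]
public enum Permissions
{
    None = 0,
    Read = 1,
    Write = 2,
    Delete = 4,
}

public static class FlagsSketch
{
    // Same result as perms.HasFlag(flag): true when every bit of flag is set in perms
    public static bool Has(this Permissions perms, Permissions flag) => (perms & flag) == flag;
}
```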

Other Features

Add ServiceStack Reference Wildcards

The IncludeTypes option in all Add ServiceStack Reference languages now allows specifying a .* wildcard suffix on Request DTOs as a shorthand to return all dependent DTOs for that Service, e.g:

IncludeTypes: RequestDto.*

Special thanks to @donaldgray for contributing this feature.

New ServerEventsClient APIs

Use the new Typed GetChannelSubscribers APIs added to the C#/.NET ServerEventsClient to fetch Channel Subscribers, e.g:

var clientA = new ServerEventsClient(baseUrl, "A");

var channelASubscribers = clientA.GetChannelSubscribers();

//Async equivalent

channelASubscribers = await clientA.GetChannelSubscribersAsync();

New IServiceClient APIs

Use the new Typed extension methods to get and set Session Cookies on all Service Clients:

var sessionId = client.GetSessionId();

newClient.SetSessionId(sessionId);

var permSessionId = client.GetPermanentSessionId();

newClient.SetPermanentSessionId(permSessionId);

var token = client.GetTokenCookie();

RegisterServicesInAssembly

Plugins can use the new RegisterServicesInAssembly() API to register multiple Services in a specified assembly, e.g:

appHost.RegisterServicesInAssembly(GetType().Assembly);

FluentValidation

The current IRequest is now injected and can be used within child Collection Validators as well, e.g:

public class MyRequestValidator : AbstractValidator<CustomRequestError>

{

public MyRequestValidator()

{

RuleSet(ApplyTo.Post | ApplyTo.Put | ApplyTo.Get, () =>

{

var req = base.Request;

RuleFor(c => c.Name)

.Must(x => !base.Request.PathInfo.ContainsAny("-", ".", " "));

RuleFor(x => x.Items).SetCollectionValidator(new MyRequestItemValidator());

});

}

}

public class MyRequestItemValidator : AbstractValidator<CustomRequestItem>

{

public MyRequestItemValidator()

{

RuleFor(x => x.Name)

.Must(x => !base.Request.QueryString["Items"].ContainsAny("-", ".", " "));

}

}

HTTP Utils

Async equivalents have been added for all sync HTTP Utils APIs, all of which are now mockable, e.g:

using (new HttpResultsFilter { BytesResult = "mocked".ToUtf8Bytes() })

{

//= "mocked".ToUtf8Bytes():

var mocked = await url.GetBytesFromUrlAsync();

mocked = await url.GetBytesFromUrlAsync(accept: "image/png");

mocked = await url.PostBytesToUrlAsync(requestBody:"postdata=1".ToUtf8Bytes());

}
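The mocking above relies on a scope that's active for the duration of the using block. Here's a hypothetical sketch of that pattern using AsyncLocal<T>, so the mocked result also flows into awaited calls; MockBytesScope and HttpSketch are illustrative names, and this isn't necessarily how HttpResultsFilter is implemented:

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

// While a MockBytesScope is active, HttpSketch.GetBytesAsync returns the mocked bytes
public sealed class MockBytesScope : IDisposable
{
    private static readonly AsyncLocal<MockBytesScope> current = new AsyncLocal<MockBytesScope>();
    private readonly MockBytesScope previous;

    public byte[] BytesResult { get; }

    public MockBytesScope(byte[] bytesResult)
    {
        BytesResult = bytesResult;
        previous = current.Value;
        current.Value = this;   // visible to code (and async calls) started inside the using block
    }

    public static MockBytesScope Current => current.Value;

    // Restore any outer scope when the using block ends
    public void Dispose() => current.Value = previous;
}

public static class HttpSketch
{
    // realFetch stands in for the actual HTTP request and is only invoked when no mock is active
    public static async Task<byte[]> GetBytesAsync(string url, Func<string, Task<byte[]>> realFetch)
    {
        var mock = MockBytesScope.Current;
        if (mock != null) return mock.BytesResult;
        return await realFetch(url);
    }
}
```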