**[AutoGen's](/autoquery/autogen)** code generation is programmatically customizable. The generated types can easily be augmented with additional declarative attributes that inject your App's conventions into the auto-generated Services & Types, applying custom behavior like Authorization & additional validation rules.

After codifying your system conventions, the generated classes can optionally be "ejected", after which code-first development can continue as normal.

This feature lets you rewrite parts of, or modernize, legacy systems with the least amount of time & effort. Once Servicified, you can take advantage of declarative features like Multitenancy, Optimistic Concurrency & Validation, and enable automatic features like Executable Audit History. Business users can maintain validation rules in the RDBMS, manage them through **Studio** & have them applied instantly at runtime and visibly surfaced through ServiceStack's myriad of [client UI auto-binding options](/world-validation). **Studio** can also give stakeholders an instant UI to quickly access and search through their data, import custom queries directly into Excel, or access them in other registered Content Types. Its custom UI supports fine-grained, app-level access control, so you can customize which tables & operations each user can access.
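The following JavaScript sketch illustrates what "codifying conventions" means in practice. It is a hypothetical, language-neutral model, not ServiceStack's API. In a real App this hook lives in C#, as filters on AutoGen's `GenerateCrudServices` options (e.g. a `ServiceFilter` callback). The `ops` metadata shape and the `applyConventions` helper are illustrative assumptions. The attribute names mirror ServiceStack's declarative `[ValidateIsAuthenticated]` and `[ValidateHasRole]` validators.

```js
// Hypothetical metadata for AutoGen-style generated operations (illustrative only).
const ops = [
  { name: 'QueryCustomers', crud: 'Query' },
  { name: 'CreateCustomer', crud: 'Create' },
  { name: 'UpdateCustomer', crud: 'Update' },
  { name: 'DeleteCustomer', crud: 'Delete' },
]

const WRITE_OPS = new Set(['Create', 'Update', 'Patch', 'Delete'])

// Apply App-wide conventions by attaching declarative attributes to each
// operation, returning new objects instead of mutating the input metadata.
function applyConventions(operations) {
  return operations.map(op => {
    const attributes = []
    // Convention 1: every write operation requires an authenticated user
    if (WRITE_OPS.has(op.crud)) attributes.push('ValidateIsAuthenticated')
    // Convention 2: deletes are further restricted to Admins
    if (op.crud === 'Delete') attributes.push('ValidateHasRole("Admin")')
    return { ...op, attributes }
  })
}

const annotated = applyConventions(ops)
for (const op of annotated) {
  console.log(op.name, op.attributes.join(', ') || '(none)')
}
```

Keeping the rules in one function means every generated Service picks them up uniformly, so you don't need to annotate each one by hand.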

### gRPC's Typed protoc Universe

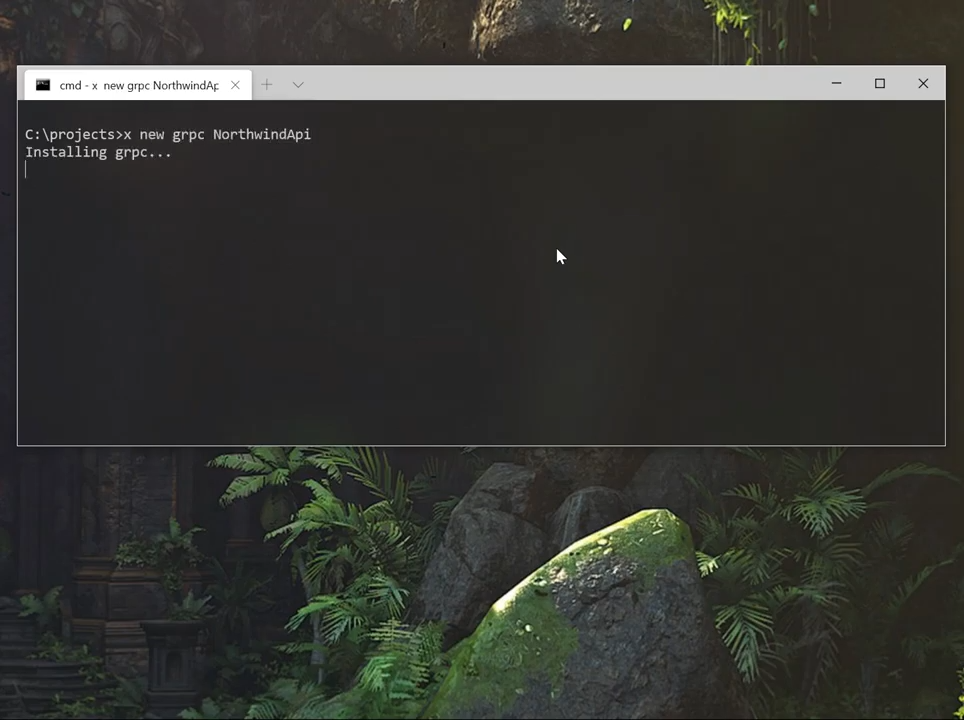

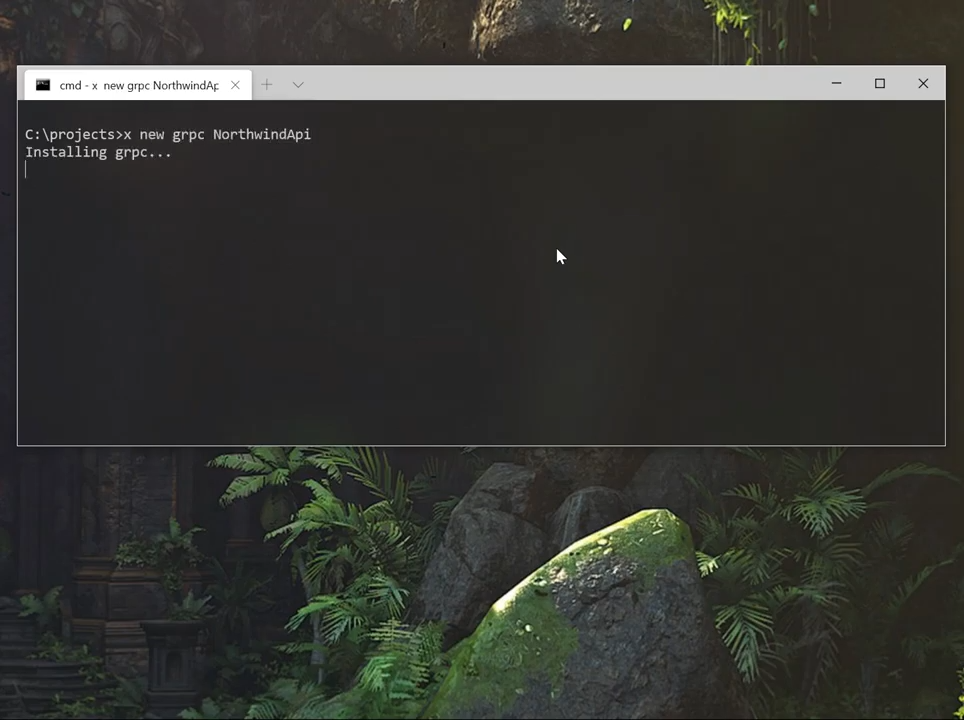

This quick demo shows how to instantly Servicify a database & access it via gRPC in minutes. Starting from a new [grpc](https://github.com/NetCoreTemplates/grpc) project, it [mixes](/mix-tool) in [autocrudgen](https://gist.github.com/gistlyn/464a80c15cb3af4f41db7810082dc00c) to configure **AutoGen** to generate AutoQuery services for the registered [sqlite](https://gist.github.com/gistlyn/768d7b330b8c977f43310b954ceea668) RDBMS, whose database is copied into the project from the [northwind.sqlite](https://gist.github.com/gistlyn/97d0bcd3ebd582e06c85f8400683e037) gist.

Once the Servicified App is running, the demo accesses its gRPC Services from a new Dart Console App, using the UX-friendly [Dart gRPC support in the x dotnet tool](/grpc/dart) to call the protoc-generated Services:

> YouTube: [youtu.be/5NNCaWMviXU](https://youtu.be/5NNCaWMviXU)

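This sketch shows the kind of APIs that get generated for each table. It assumes AutoGen produces a plural `Query…` API plus singular `Create…`, `Update…`, `Patch…` and `Delete…` APIs for each table. The naive `pluralize` helper is a hypothetical stand-in and does not reproduce ServiceStack's own pluralization rules.

```js
// Naive English pluralization: a simplified stand-in for real pluralization rules.
function pluralize(word) {
  if (/[^aeiou]y$/i.test(word)) return word.slice(0, -1) + 'ies' // Category -> Categories
  if (/(s|x|z|ch|sh)$/i.test(word)) return word + 'es'            // Address -> Addresses
  return word + 's'                                                // Order -> Orders
}

// Hypothetical per-table API names, assuming a plural Query API
// plus singular Create/Update/Patch/Delete APIs.
function crudApiNames(table) {
  return [
    `Query${pluralize(table)}`,
    ...['Create', 'Update', 'Patch', 'Delete'].map(verb => verb + table),
  ]
}

for (const table of ['Category', 'Customer', 'Order']) {
  console.log(crudApiNames(table).join(', '))
}
```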

### Flutter gRPC Android App

And if you can access it from Dart, you can access it from every platform Dart runs on. The most exciting of these is Google's [Flutter](https://flutter.dev) UI Kit for building beautiful, natively compiled applications for Mobile, Web, and Desktop from a single codebase:

> YouTube: [youtu.be/3iz9aM1AlGA](https://youtu.be/3iz9aM1AlGA)


## React Native Typed Client

gRPC is just [one of the endpoints ServiceStack Services](/why-servicestack#multiple-clients) can be accessed from. For an even richer & more integrated development UX, they're also available in all the popular Mobile, Web & Desktop languages that [Add ServiceStack Reference](/add-servicestack-reference) supports.

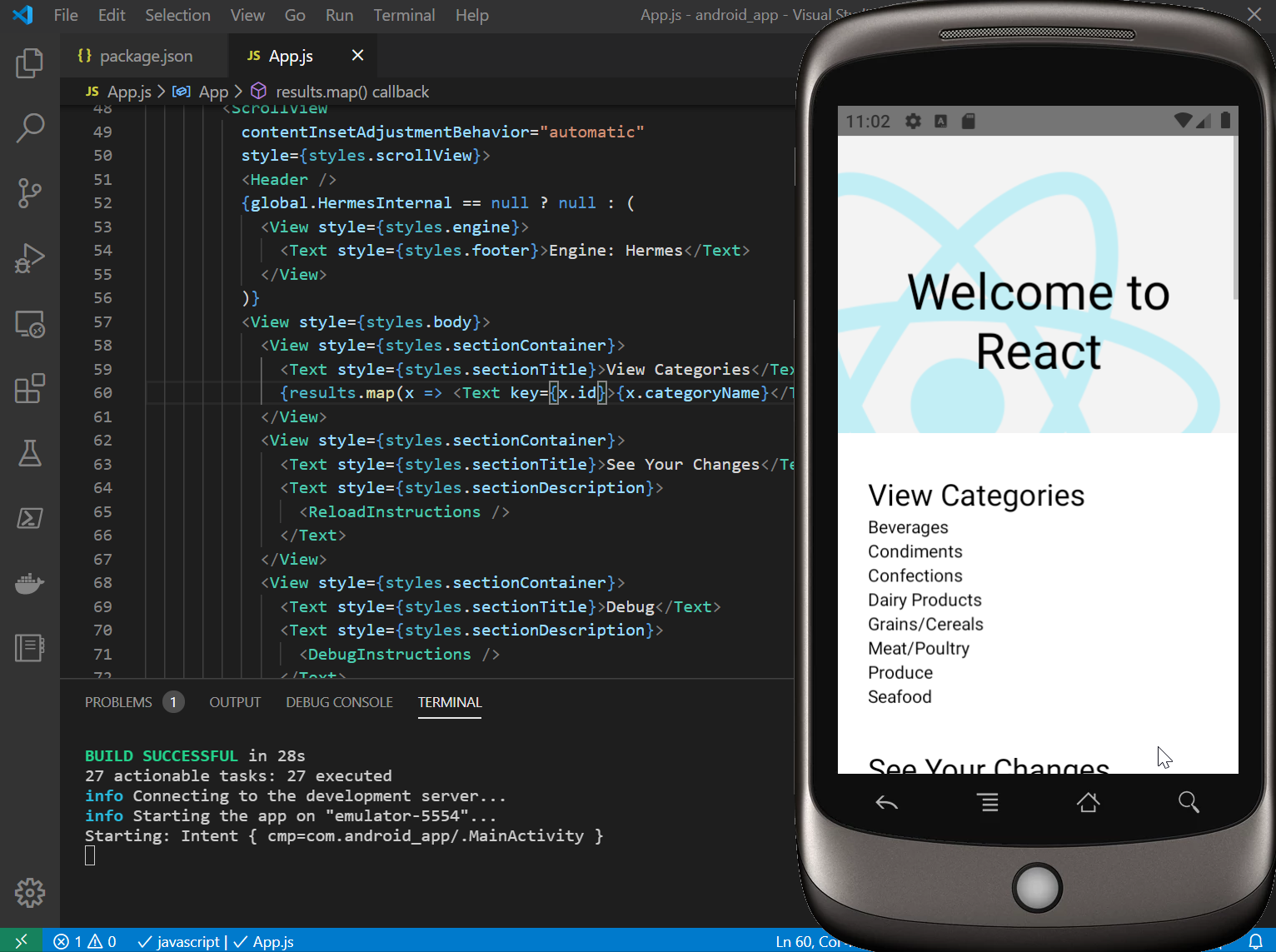

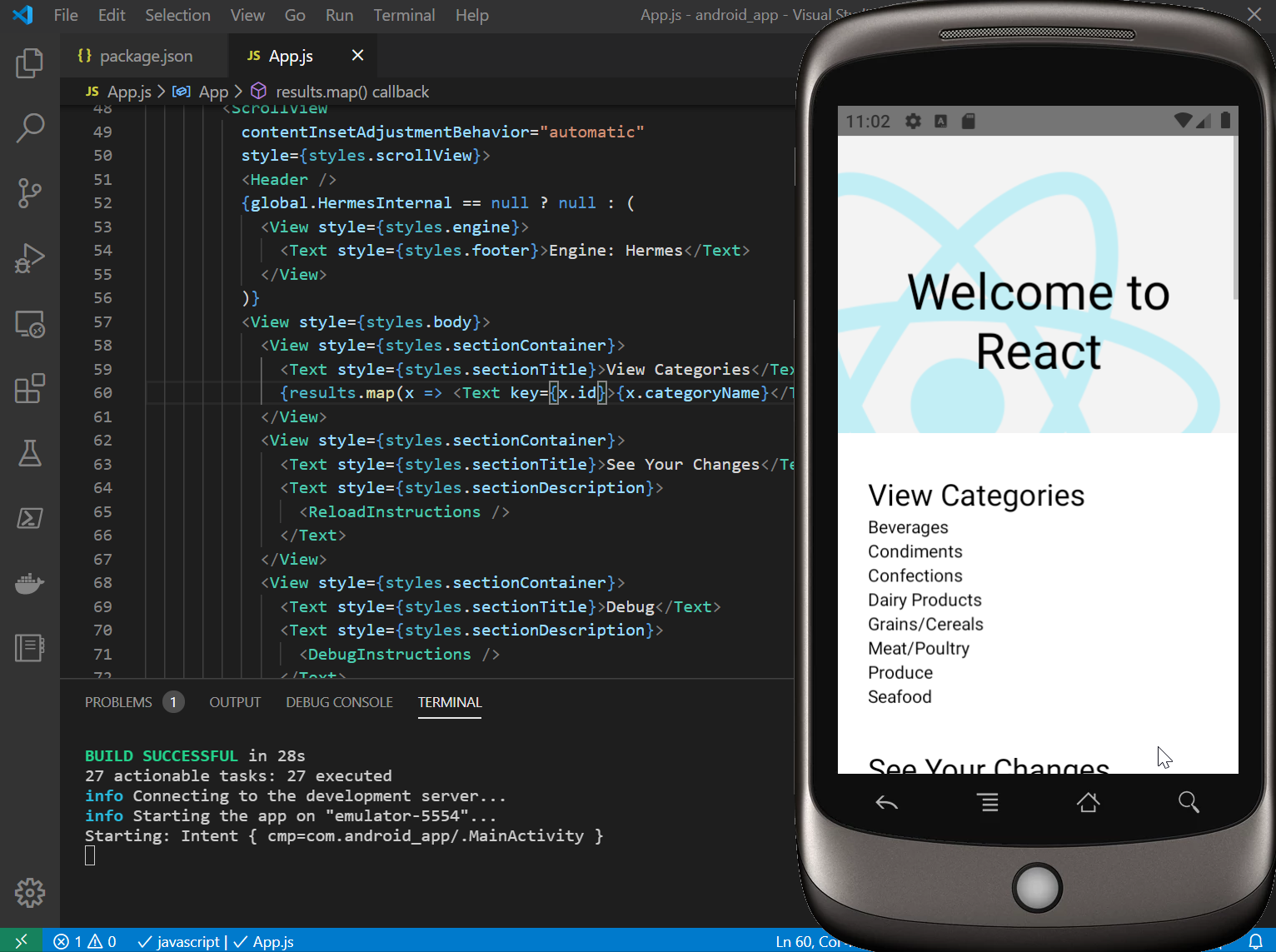

For example, [TypeScript](/typescript-add-servicestack-reference) can be used in Browser & Node TypeScript code-bases as well as in JavaScript-only code-bases like [React Native](https://reactnative.dev), a highly productive Reactive UI for developing iOS and Android Apps:


::: info YouTube

[youtu.be/6-SiLAbY63w](https://youtu.be/6-SiLAbY63w)

:::

# Explore ServiceStack

Source: https://docs.servicestack.net/explore-servicestack

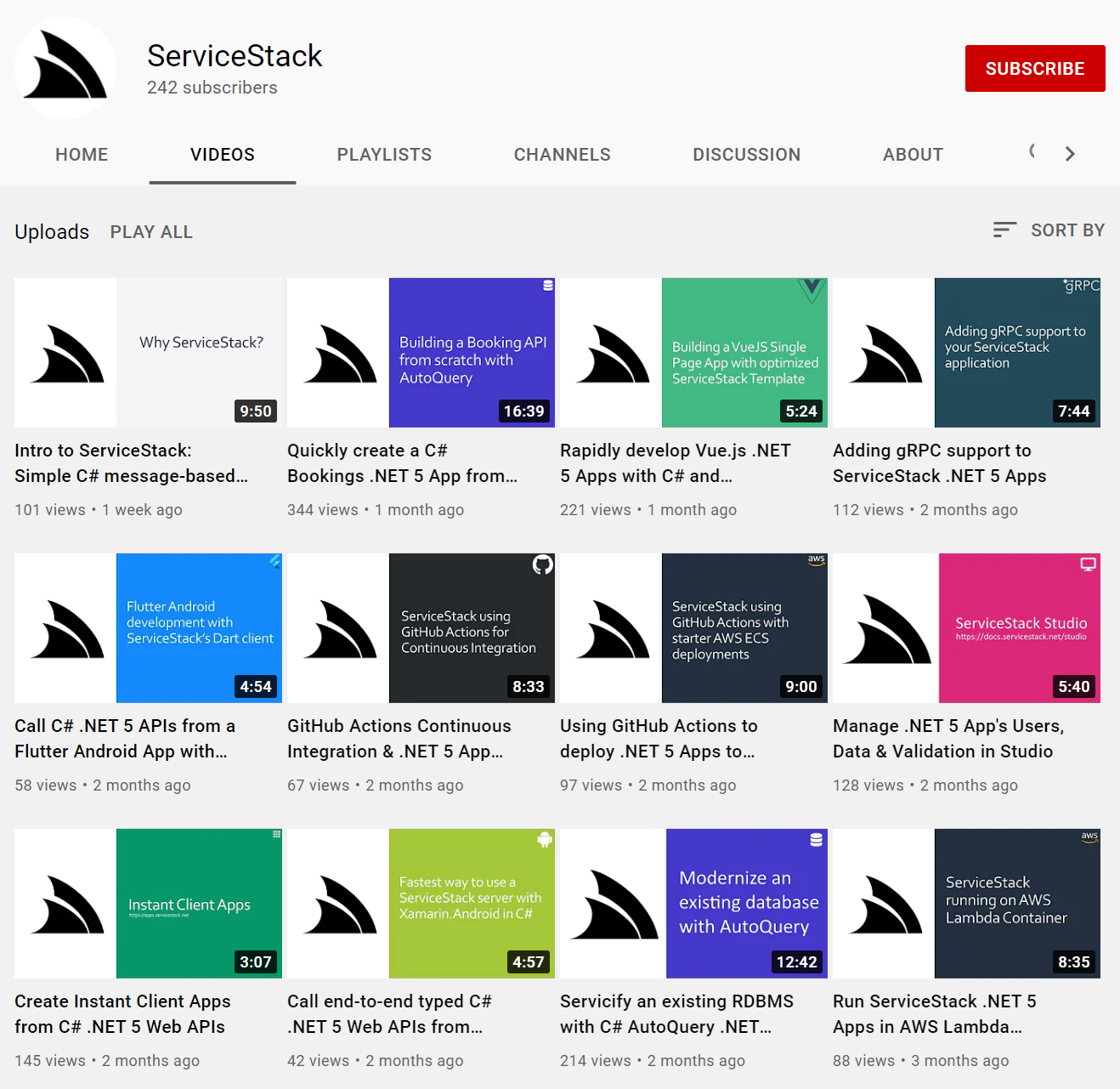

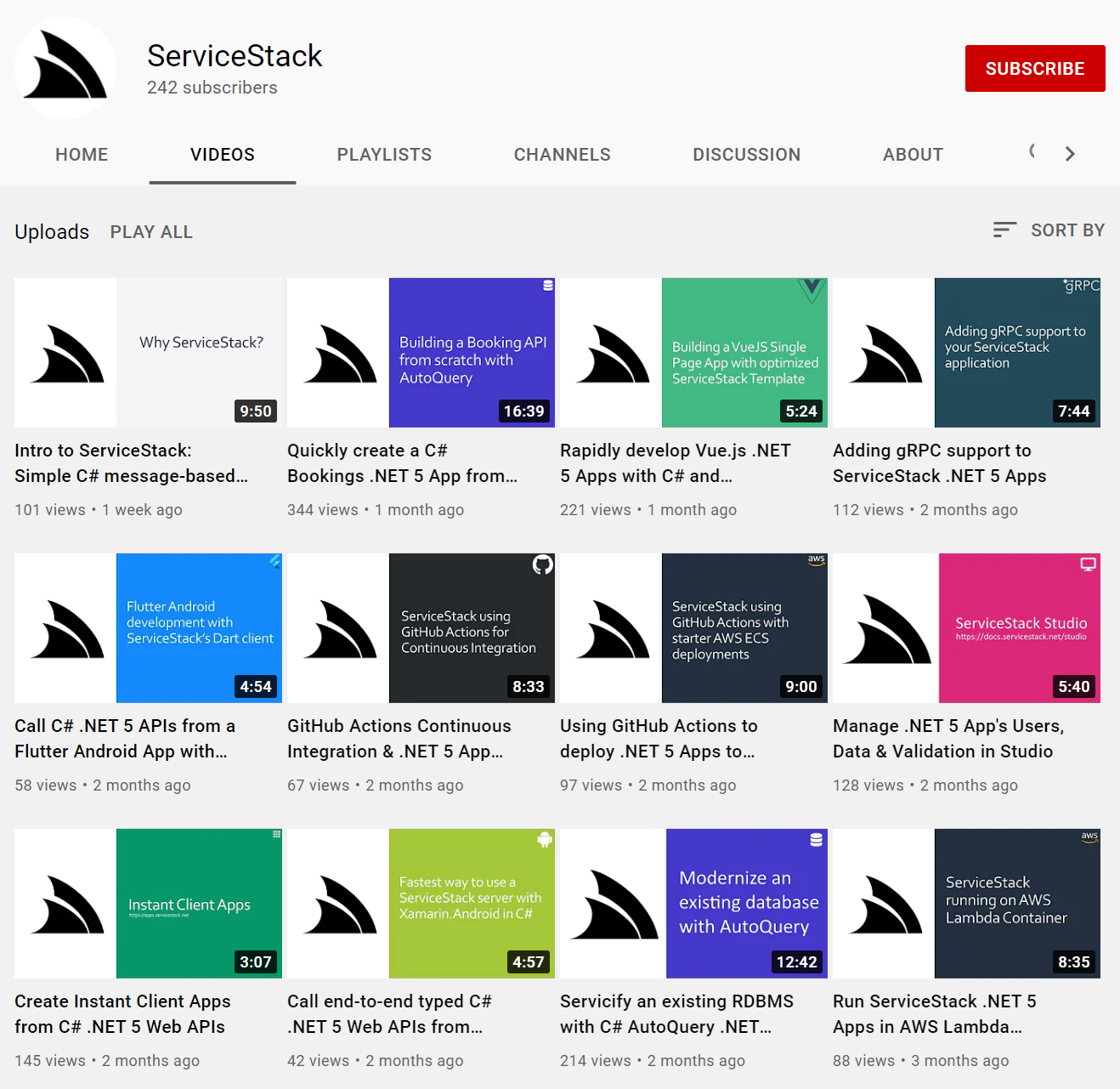

If you're completely new to ServiceStack, the [YouTube channel](https://www.youtube.com/channel/UC0kXKGVU4NHcwNdDdRiAJSA/videos) is a great way to explore some of the possibilities ServiceStack enables.


## Explore ServiceStack Apps

A great way to learn a new technology is to explore existing Apps built with it. For ServiceStack, you can find a number of simple, focused Apps at:

### [.NET Core Apps](https://github.com/NetCoreApps/LiveDemos)

### [.NET Framework Apps](https://github.com/ServiceStackApps/LiveDemos#live-servicestack-demos)

### [Sharp Apps](https://sharpscript.net/sharp-apps/app-index)

Many Apps are well documented, like [World Validation](/world-validation), which covers how to re-implement a simple Contacts App UI in **10 popular Web Development approaches**, all calling the same ServiceStack Services.

ServiceStack is a single code-base implementation that supports [.NET's most popular Server platforms](/why-servicestack#multiple-hosting-options) with near-perfect source-code [compatibility with .NET Core](/netcore), so all .NET Framework Apps are still relevant in .NET Core. For example, the [EmailContacts guidance](https://github.com/ServiceStackApps/EmailContacts) walks through the recommended setup and physical layout of typical medium-sized ServiceStack projects. It includes complete documentation of how to create the solution from scratch and explains each ServiceStack feature it uses along the way.

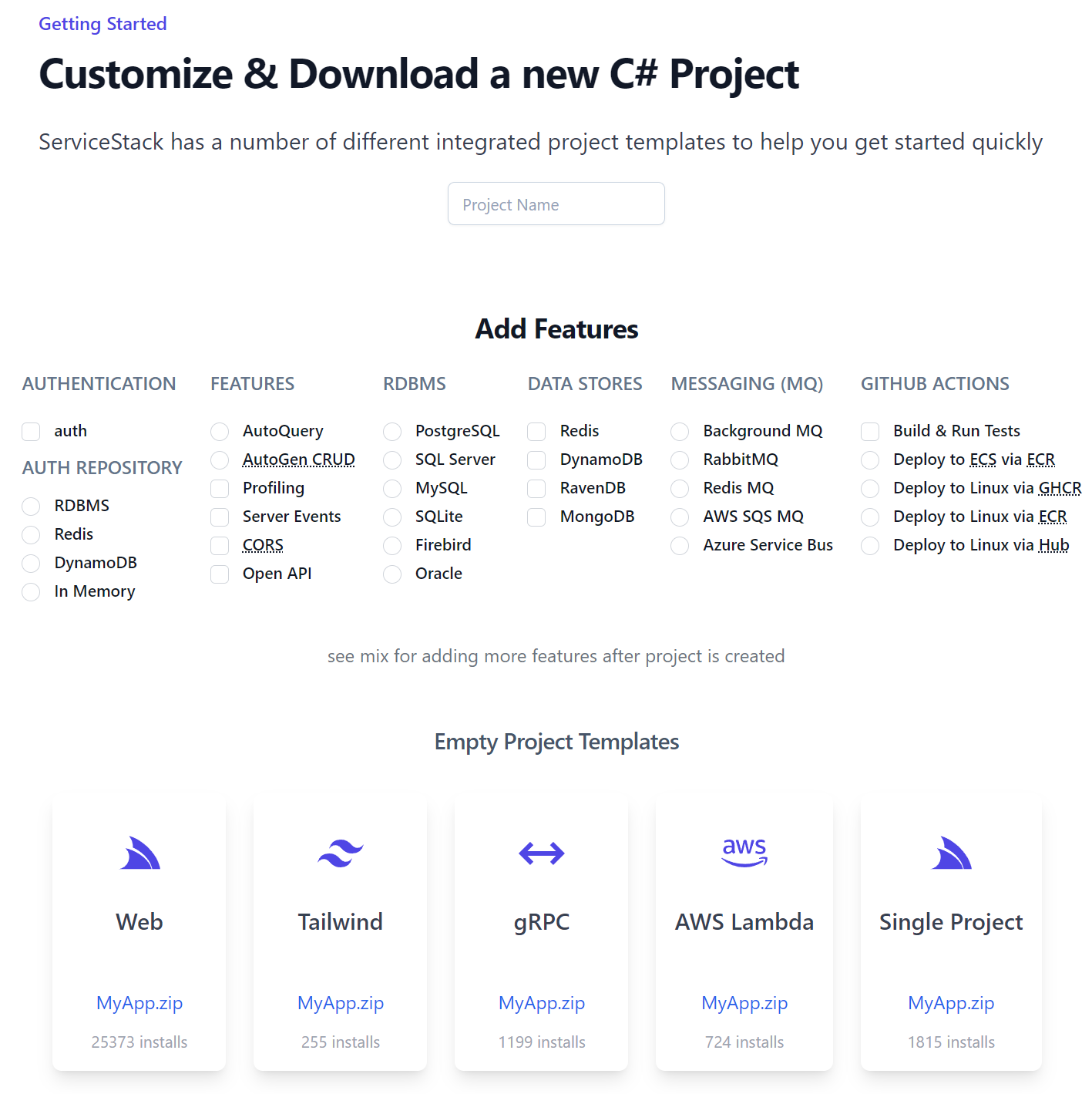

## Starting Project Templates

Once you've familiarized yourself with ServiceStack and are ready to put it into action, get started with a customized starting project template from our online template builder at:

[servicestack.net/start](https://servicestack.net/start)

# ServiceStack v10

Source: https://docs.servicestack.net/releases/v10_00

#### Watched builds

A recommended alternative to running your project from your IDE is to run a watched build using `dotnet watch` from a terminal:

:::sh

dotnet watch

:::

It will then automatically rebuild & restart your App whenever it detects changes to your App's source files.

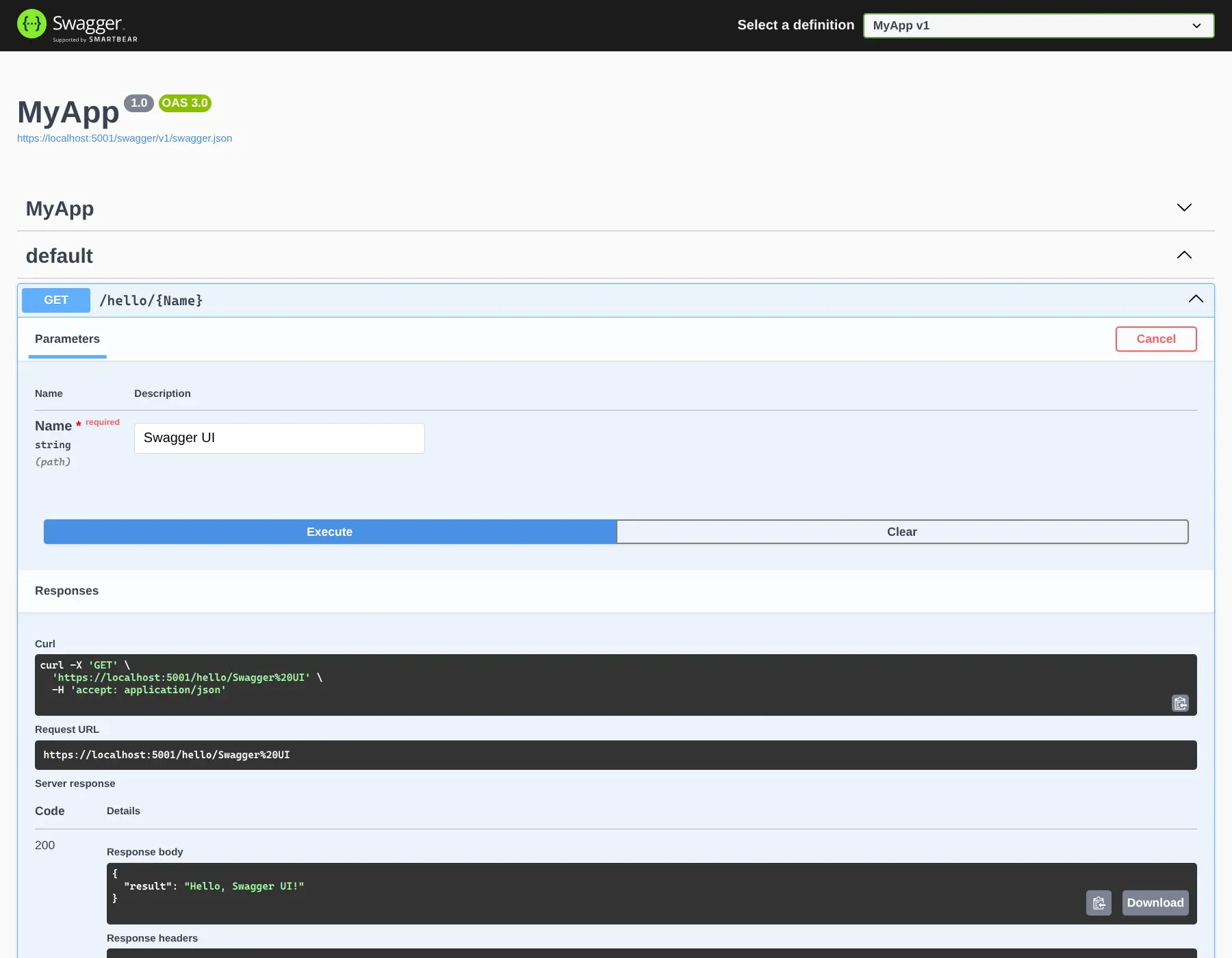

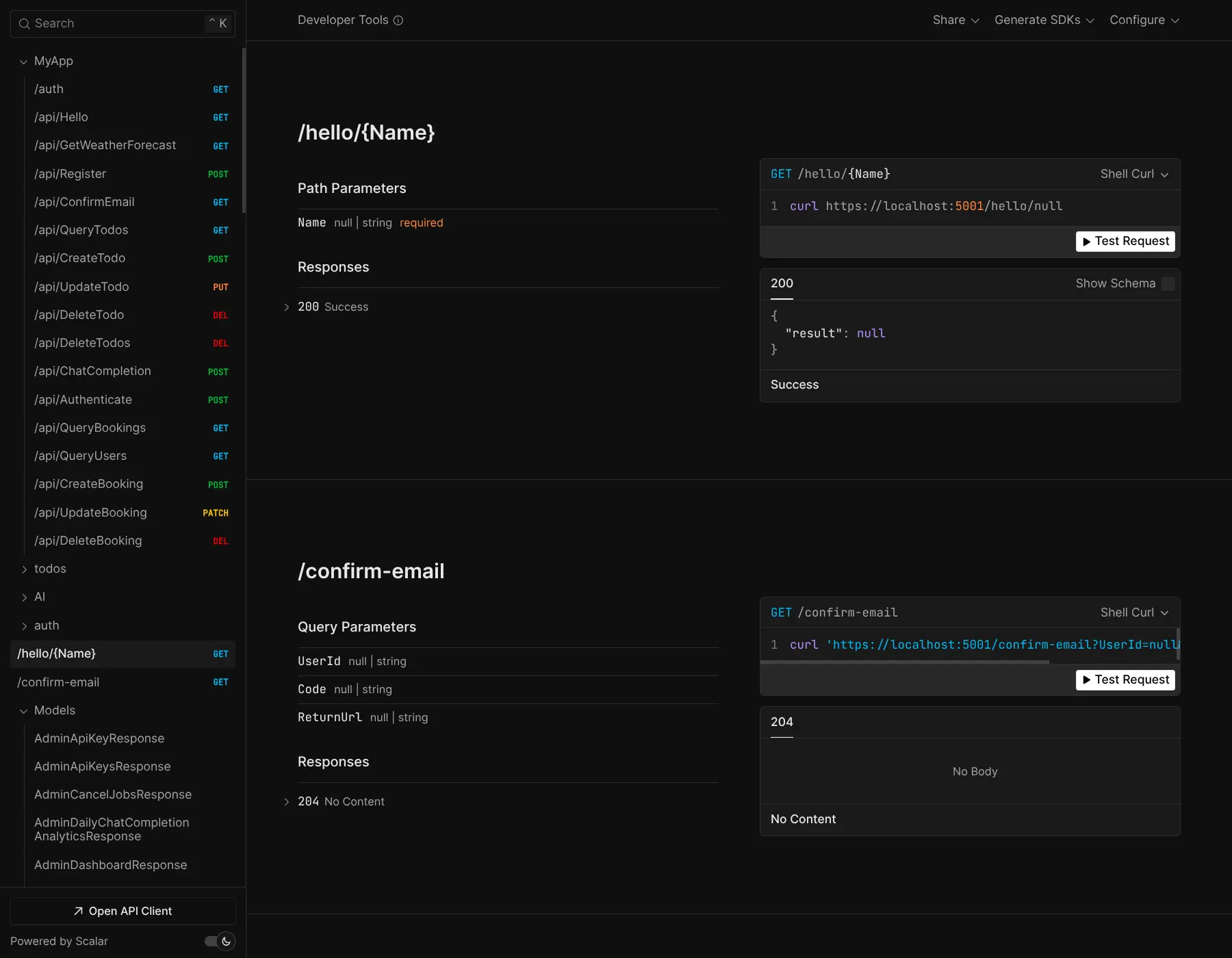

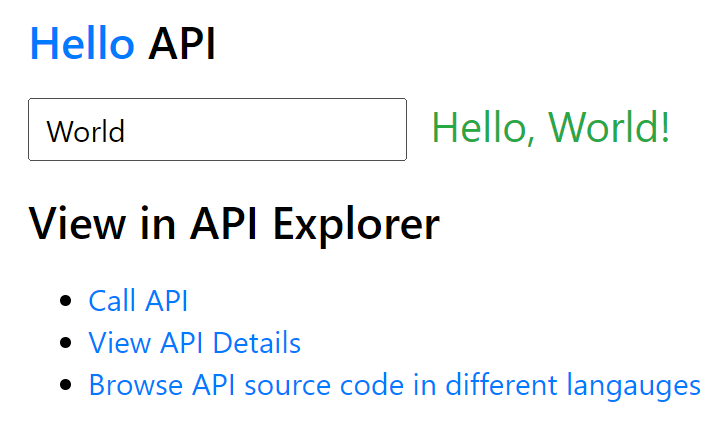

### How does it work?

Now that your new project is running, let's have a look at what we have. The template comes with a single web service route, defined by the Request DTO (Data Transfer Object) in the [Hello.cs](https://github.com/NetCoreTemplates/web/blob/master/MyApp.ServiceModel/Hello.cs) file:

```csharp
[Route("/hello/{Name}")]
public class Hello : IReturn<HelloResponse>
{
    public string Name { get; set; }
}
```
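The `{Name}` placeholder in the route is what lets a path like `/hello/World` populate the `Name` property of the `Hello` Request DTO. The sketch below is a simplified, hypothetical JavaScript model of that binding. The `matchRoute` helper is illustrative and is not ServiceStack's routing implementation, which also handles wildcards, HTTP verbs and type conversion.

```js
// Simplified model of binding a route template like "/hello/{Name}" to a DTO.
// Returns an object of bound variables, or null when the path doesn't match.
function matchRoute(template, path) {
  const templateParts = template.split('/').filter(Boolean)
  const pathParts = path.split('/').filter(Boolean)
  if (templateParts.length !== pathParts.length) return null

  const dto = {}
  for (let i = 0; i < templateParts.length; i++) {
    const t = templateParts[i]
    const p = pathParts[i]
    const variable = /^\{(\w+)\}$/.exec(t)
    if (variable) {
      dto[variable[1]] = decodeURIComponent(p) // bind {Name} -> dto.Name
    } else if (t.toLowerCase() !== p.toLowerCase()) {
      return null // literal segments must match (case-insensitively in this model)
    }
  }
  return dto
}

console.log(matchRoute('/hello/{Name}', '/hello/World'))
```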

### Host Project

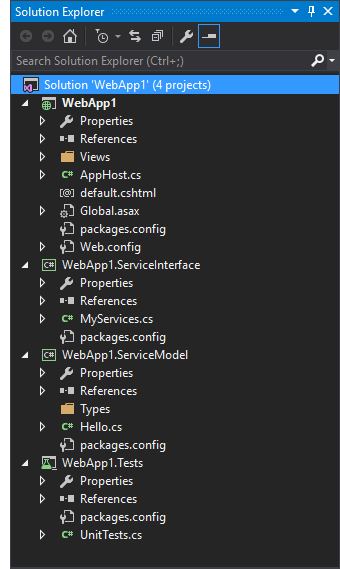

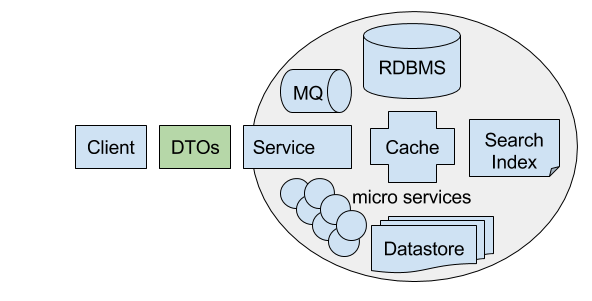

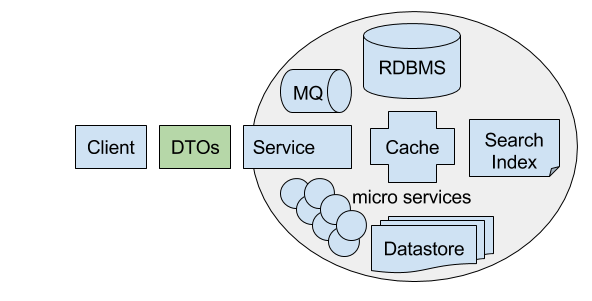

The Host project contains your AppHost, which references and registers all your App's concrete dependencies in its IOC and is the central location where all App configuration and global behavior is maintained. It also references all Web Assets like Razor Views, JS, CSS, Images, Fonts, etc. that need to be deployed with the App. The AppHost is the top-level project that references all dependencies used by your App. Its role is akin to an orchestrator and conduit that decides what functionality is made available and which concrete implementations are used. By design, it references all other (non-test) projects whilst nothing references it, and as a goal it should be kept free of any App or Business logic.

### ServiceInterface Project

The ServiceInterface project is the implementation project where all Business Logic and Services live. It typically references every other project except the Host projects. Small and Medium projects can maintain all their implementation here, with logic grouped under feature folders. Large solutions can split this project into more manageable, cohesive and modular projects, each of which we also recommend encapsulates any dependencies it uses.

### ServiceModel Project

The ServiceModel Project contains all your Application's DTOs, which define your Services contract. Keeping them isolated from any Server implementation is how your Service is able to encapsulate its capabilities and make them available behind a remote facade. There should be only one ServiceModel project per solution, containing all your DTOs. It should be implementation, dependency and logic-free, referencing only the impl/dep-free **ServiceStack.Interfaces.dll** contract assembly. This decouples Service contracts from their implementation and enforces interoperability, ensuring your Services don't mandate specific client implementations. It also makes this the only project clients need in order to call any of your Services, either by referencing **ServiceModel.dll** directly or by downloading the DTOs from a remote ServiceStack instance using [Add ServiceStack Reference](/add-servicestack-reference).

### Test Project

The Unit Test project contains all your Unit and Integration tests. It's also a Host project that typically references all other non-Host projects in the solution and contains a combination of concrete and mock dependencies depending on what's being tested. See the [Testing Docs](/testing) for more information on testing ServiceStack projects.
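The reference rules between these projects can be expressed as a small dependency graph. The following hypothetical sketch checks a solution's references against the guidance above: the ServiceModel references only **ServiceStack.Interfaces**, nothing references a Host project, and the Host references every other non-test project. Project names follow the template's `MyApp.*` convention, and the `checkLayout` helper is illustrative.

```js
// Hypothetical solution layout: project -> projects/assemblies it references.
const solution = {
  'MyApp':                  ['MyApp.ServiceInterface', 'MyApp.ServiceModel'],
  'MyApp.ServiceInterface': ['MyApp.ServiceModel'],
  'MyApp.ServiceModel':     ['ServiceStack.Interfaces'],
  'MyApp.Tests':            ['MyApp.ServiceInterface', 'MyApp.ServiceModel'],
}

const HOST = 'MyApp'
const HOSTS = new Set([HOST, 'MyApp.Tests']) // the Test project is also a Host project
const MODEL = 'MyApp.ServiceModel'

// Returns a list of rule violations (empty when the layout follows the guidance).
function checkLayout(refs) {
  const errors = []
  // Rule 1: nothing may reference a Host project
  for (const [project, deps] of Object.entries(refs)) {
    for (const dep of deps) {
      if (HOSTS.has(dep)) errors.push(`${project} must not reference Host project ${dep}`)
    }
  }
  // Rule 2: the ServiceModel only references the ServiceStack.Interfaces contract assembly
  const modelDeps = refs[MODEL] ?? []
  if (modelDeps.some(d => d !== 'ServiceStack.Interfaces')) {
    errors.push(`${MODEL} should only reference ServiceStack.Interfaces`)
  }
  // Rule 3: the Host references every other non-Host project
  for (const p of Object.keys(refs).filter(p => !HOSTS.has(p))) {
    if (!(refs[HOST] ?? []).includes(p)) errors.push(`${HOST} should reference ${p}`)
  }
  return errors
}

console.log(checkLayout(solution))
```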

## Learn ServiceStack Guide

If you're new to ServiceStack, we recommend stepping through [ServiceStack's Getting Started Guide](https://servicestack.net/start/project-overview) to get familiar with the basics.

## API Client Examples

### jQuery Ajax

ServiceStack's clean Web Services make it simple and intuitive to call ServiceStack Services from any ajax client, e.g. from a traditional [Bootstrap Website using jQuery](https://github.com/ServiceStack/Templates/blob/master/src/ServiceStackVS/BootstrapWebApp/BootstrapWebApp/default.cshtml):

```html

```
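Any HTTP client can call these Services the same way. The following dependency-free sketch uses the standard `fetch` API instead of jQuery. It assumes the template's `/hello/{Name}` route and its camelCase `{ result }` JSON response. The `sayHello` helper is hypothetical, and its `fetchFn` parameter is injectable so the function can be exercised without a running server.

```js
// Call the Hello Service via its "/hello/{Name}" route and return the greeting.
// fetchFn defaults to the global fetch (browsers, Node 18+) but can be swapped for a stub.
async function sayHello(name, fetchFn = globalThis.fetch) {
  const res = await fetchFn(`/hello/${encodeURIComponent(name)}`, {
    headers: { Accept: 'application/json' }, // request a JSON response
  })
  if (!res.ok) throw new Error(`Request failed: HTTP ${res.status}`)
  const body = await res.json()
  return body.result // HelloResponse.Result, assumed to be serialized as camelCase "result"
}
```

From a page served by the App, `sayHello('World').then(console.log)` should log the Service's greeting.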

### Rich JsonApiClient & Typed DTOs

The modern recommended alternative to jQuery, which works in all modern browsers, is to use your API's built-in [JavaScript typed DTOs](/javascript-add-servicestack-reference) with the [@servicestack/client](/javascript-client) library from a [JavaScript Module](https://developer.mozilla.org/en-US/docs/Web/JavaScript/Guide/Modules).

We recommend using an [importmap](https://developer.mozilla.org/en-US/docs/Web/HTML/Element/script/type/importmap) to specify where **@servicestack/client** should be loaded from, e.g.:

```html

```

This lets us reference the **@servicestack/client** package name in our source code instead of its physical location:

```html

```

```html

```

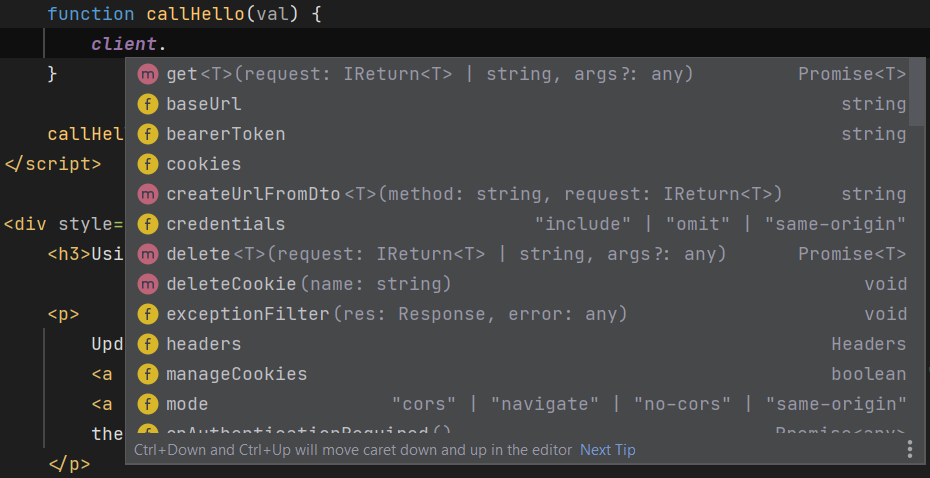

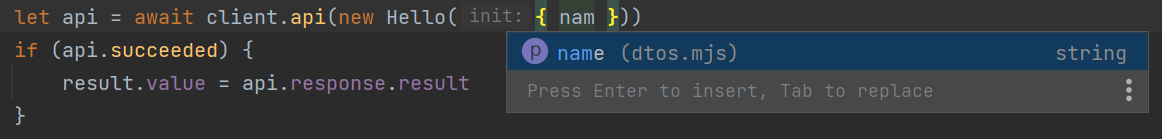

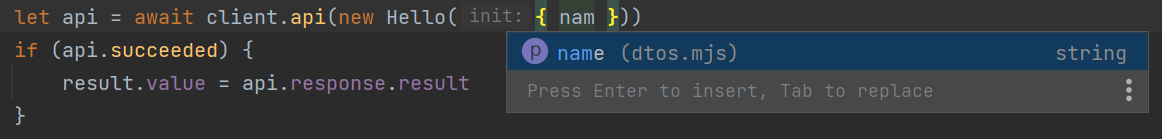

### Enable static analysis and intelli-sense

For better IDE intelli-sense during development, save the annotated Typed DTOs to disk with the [x dotnet tool](/dotnet-tool):

:::sh

x mjs

:::

Then reference it instead to enable IDE static analysis when calling Typed APIs from JavaScript:

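```js
import { JsonApiClient } from '@servicestack/client'
import { Hello } from '/js/dtos.mjs'

const client = JsonApiClient.create()
// Resolves to an ApiResult containing either a typed .response or an .error
const api = await client.api(new Hello({ name: 'World' }))
```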
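Generated DTOs are plain classes that carry enough metadata for a client to build a request. The sketch below approximates the shape of a generated `Hello` DTO; this is an assumption, so inspect your own `dtos.mjs` for the exact output. It pairs the DTO with a hypothetical `toApiRequest` helper that maps it onto ServiceStack's `/api/{Request}` pre-defined route. This is a simplified model of what **@servicestack/client** does, not its actual implementation.

```js
/** Approximation of a generated Request DTO, annotated with JSDoc for intelli-sense. */
class Hello {
  /** @param {{name?: string}} [init] */
  constructor(init) { Object.assign(this, init) }
  /** @type {string} */
  name;
  getTypeName() { return 'Hello' }
  getMethod() { return 'GET' }
}

// Hypothetical, simplified mapping of a DTO onto the /api/{Request} route:
// GET/DELETE requests send properties as a query string, others send a JSON body.
function toApiRequest(dto, baseUrl = '') {
  const method = typeof dto.getMethod === 'function' ? dto.getMethod() : 'POST'
  const url = `${baseUrl}/api/${dto.getTypeName()}`
  // Only send properties that have a value (methods live on the prototype, so they're skipped)
  const props = Object.fromEntries(Object.entries(dto).filter(([, v]) => v != null))
  if (method === 'GET' || method === 'DELETE') {
    const qs = new URLSearchParams(props).toString()
    return { method, url: qs ? `${url}?${qs}` : url }
  }
  return { method, url, body: JSON.stringify(props) }
}

console.log(toApiRequest(new Hello({ name: 'World' })))
```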

To also enable static analysis for **@servicestack/client**, install the dependency-free library as a dev dependency:

:::sh

npm install -D @servicestack/client

:::

Only its TypeScript definitions are used by the IDE during development, to enable type-checking and intelli-sense.

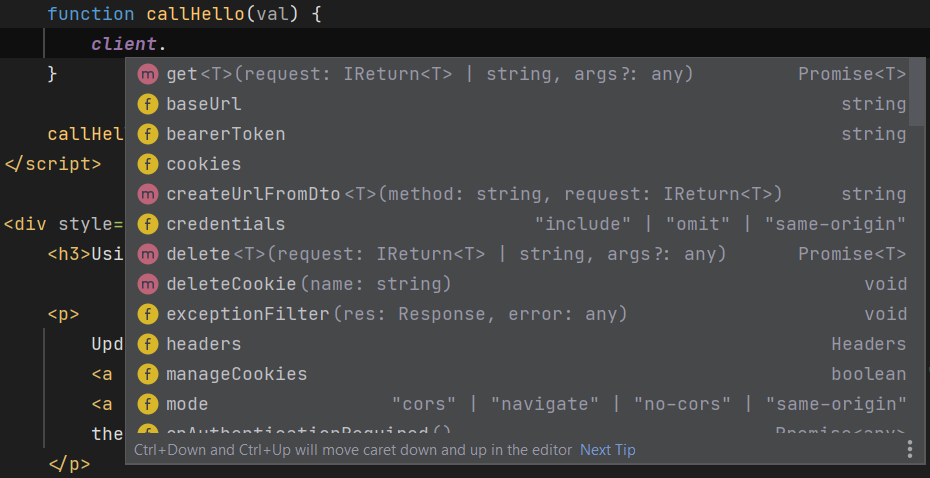

### Rich intelli-sense support

You'll then benefit from rich intelli-sense support in smart IDEs like [Rider](https://www.jetbrains.com/rider/) for both the client library and your App's server-generated DTOs.

So even simple Apps without complex bundling solutions or external dependencies can still benefit from a rich typed authoring experience without any additional build time or tooling complexity.

## Create Empty ServiceStack Apps

::include empty-projects.md::

### Any TypeScript or JavaScript Web, Node.js or React Native App

The same TypeScript [JsonServiceClient](/javascript-client) can also be used in more sophisticated JavaScript Apps, from [React Native](/typescript-add-servicestack-reference#react-native-jsonserviceclient) to [Node.js Server Apps](https://github.com/ServiceStackApps/typescript-server-events), such as this example using TypeScript & [Vue Single-File Components](https://vuejs.org/guide/scaling-up/sfc.html):

```html

{{ error.message }}

{{ api.loading ? 'Loading...' : api.response.result }}

```
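The template above switches between three states: an error message, a loading indicator, or the API's result. The following is a hypothetical, framework-free sketch of that logic. The `createApiState`, `callApi` and `render` helpers are illustrative. `send` stands in for a typed client call such as `client.api(new Hello({ name }))`, and is assumed to resolve to an ApiResult-like `{ response, error }` object.

```js
// Framework-free model of the state the Vue template renders.
function createApiState() {
  return { loading: false, response: null, error: null }
}

// Run an API call while tracking loading/response/error, like the template expects.
async function callApi(state, send) {
  state.loading = true
  try {
    const api = await send() // assumed ApiResult-like { response, error }
    state.response = api.response ?? null
    state.error = api.error ?? null
  } finally {
    state.loading = false // always clear the loading flag, even if send() throws
  }
  return state
}

// Mirrors: {{ error.message }} / {{ api.loading ? 'Loading...' : api.response.result }}
function render(state) {
  if (state.error) return state.error.message
  return state.loading ? 'Loading...' : state.response?.result ?? ''
}
```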

Compare and contrast with other major SPA JavaScript Frameworks:

- [Vue 3 HelloApi.mjs](https://github.com/NetCoreTemplates/blazor-vue/blob/main/MyApp/wwwroot/posts/components/HelloApi.mjs)

- [Vue HelloApi.vue](https://github.com/NetCoreTemplates/vue-spa/blob/main/MyApp.Client/src/_posts/components/HelloApi.vue)

- [Next.js with swrClient](https://github.com/NetCoreTemplates/nextjs/blob/main/ui/components/intro.tsx)

- [React HelloApi.tsx](https://github.com/NetCoreTemplates/react-spa/blob/master/MyApp/src/components/Home/HelloApi.tsx)

- [Angular HelloApi.ts](https://github.com/NetCoreTemplates/angular-spa/blob/master/MyApp/src/app/home/HelloApi.ts)

### Web, Mobile and Desktop Apps

Use [Add ServiceStack Reference](/add-servicestack-reference) to enable typed integrations for the most popular languages to develop Web, Mobile & Desktop Apps.

### Full .NET Project Templates

The above `init` projects let you create a minimal web app. To create a more complete ServiceStack App with the recommended project structure, start with one of our C# project templates instead:

### [C# Project Templates Overview](/templates/)

## Simple, Modern Razor Pages & MVC Vue 3 Tailwind Templates

The new Tailwind Razor Pages & MVC Templates enable rapid development of Modern Tailwind Apps without the [pitfalls plaguing SPAs](https://servicestack.net/posts/javascript):